Mobileye broke with industry norms from the outset by using monocular camera vision to perceive the road environment, significantly reducing costs and helping to popularize driver assistance. Its EyeQ series of chips uses an architecture optimized for visual computing, and EyeQ has become one of the mainstream platforms for vision-based driver assistance systems worldwide.

Mobileye currently offers three application paths: Mobileye SuperVision, Mobileye Chauffeur, and Mobileye Drive.

SuperVision is a multi-camera advanced driver assistance system that supports hands-free driving on specific roads and is in mass production with brands such as Geely. Chauffeur adds LiDAR and radar on top of SuperVision to enable point-to-point autonomous driving in more complex environments and targets high-end passenger vehicles. Drive is the most advanced platform: it eliminates driver intervention entirely and is used primarily for Robotaxis and commercial autonomous delivery vehicles. Together, the three paths span L2 to L4.

EyeQ6 Chip

The EyeQ6 High chip uses the MIPS I6500-F architecture with 8 cores and 32 threads, arranged in two clusters of 4 cores, with each core supporting 4 threads. This CPU complex handles L2-and-above driver assistance as well as high-load control tasks in L4 autonomous driving. Two general-purpose compute accelerators integrate several heterogeneous accelerator types: MPC (Multithreaded Processing Cluster), VMP (Vector Microcode Processor), and PMA (Programmable Macro Array). Two deep learning accelerators provide 34 DL TOPS (INT8) for advanced perception functions under high-resolution multi-camera input, such as object detection, free-space perception, pedestrian avoidance, and visual path planning. The chip uses LPDDR5 memory. Compared with the EyeQ5 High chip, computing power has increased by over 100%, while power consumption has increased by only 22%.

The EyeQ6 Lite chip has 2 CPU cores for control tasks, system scheduling, and low-load computation. Although the core count is small, it is sufficient for the real-time performance and stability required at L1/L2. One general-purpose compute accelerator integrates the heterogeneous MPC, VMP, and PMA accelerators, and a convolutional neural network accelerator provides 5 DL TOPS (INT8) of deep learning compute, suitable for basic driver assistance functions such as lane detection, pedestrian recognition, and traffic sign recognition. The chip uses LPDDR4/4x memory.

Mobileye chips are designed for energy efficiency rather than headline specifications. Whereas many driver assistance chip makers chase high TOPS figures, Mobileye focuses on using a more efficient architecture to perform the same, or even more complex, perception and decision-making tasks at lower power.
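As a rough illustration of the efficiency argument, the EyeQ5 High to EyeQ6 High figures quoted above (more than twice the compute for 1.22 times the power) can be turned into a compute-per-watt ratio. This is a back-of-the-envelope sketch that treats "over 100%" as exactly 2x, so the result is a lower bound; the function name is our own, not Mobileye's.

```python
def perf_per_watt_gain(compute_ratio: float, power_ratio: float) -> float:
    """Relative change in compute per watt, given new/old ratios of compute and power."""
    return compute_ratio / power_ratio


# EyeQ6 High vs. EyeQ5 High, per the figures above:
# compute up "over 100%" -> at least 2.0x (treated as exactly 2.0x here),
# power up 22% -> 1.22x.
gain = perf_per_watt_gain(2.0, 1.22)
print(f"Compute per watt vs. EyeQ5 High: at least {gain:.2f}x")
```

Under that assumption, EyeQ6 High delivers roughly 64% more compute per watt than EyeQ5 High, at minimum.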

The image shows the internal structure of EyeQ6 High / Lite chips

EyeQ6 Supported Driving Scenarios

SuperVision

* The SuperVision scenario is enabled by the following components

Supported by two EyeQ6 High chips

The Responsibility-Sensitive Safety (RSS) model and driving policy, which balance safety with efficient driving

Autonomous driving maps built with Road Experience Management (REM), a crowdsourced mapping technology that supplements the data captured by the vehicle's sensors

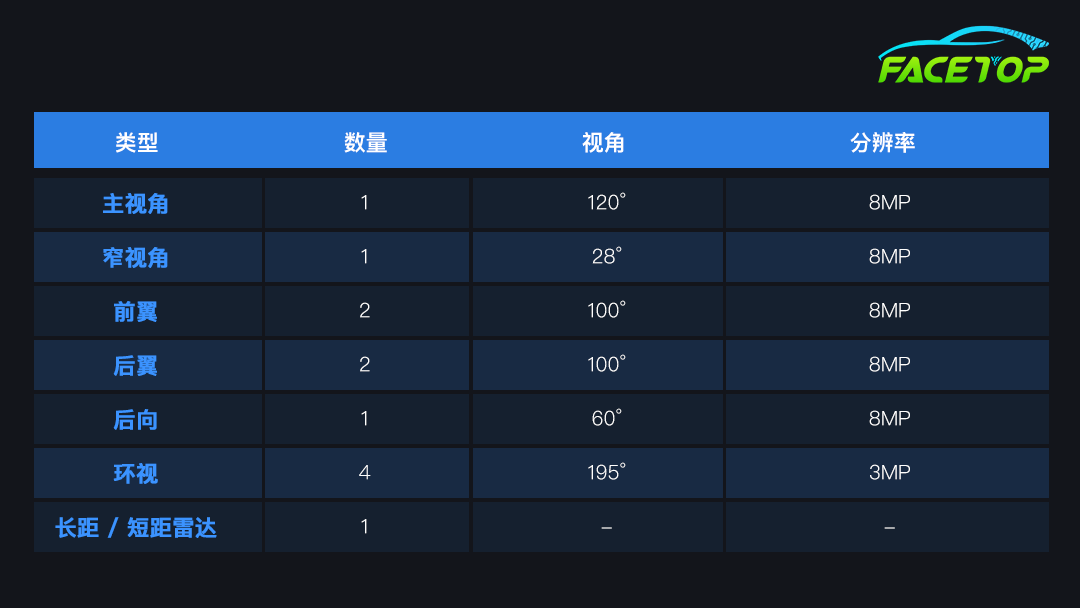

High-definition surround visual perception from 11 cameras

The image shows the number and position of sensors in the SuperVision scenario

Chauffeur

* The Chauffeur scenario is enabled by the following components

Supported by three EyeQ6 High chips

The Responsibility-Sensitive Safety (RSS) model and driving policy, which balance safety with efficient driving

Autonomous driving maps built with Road Experience Management (REM), a crowdsourced mapping technology that supplements the data captured by the vehicle's sensors

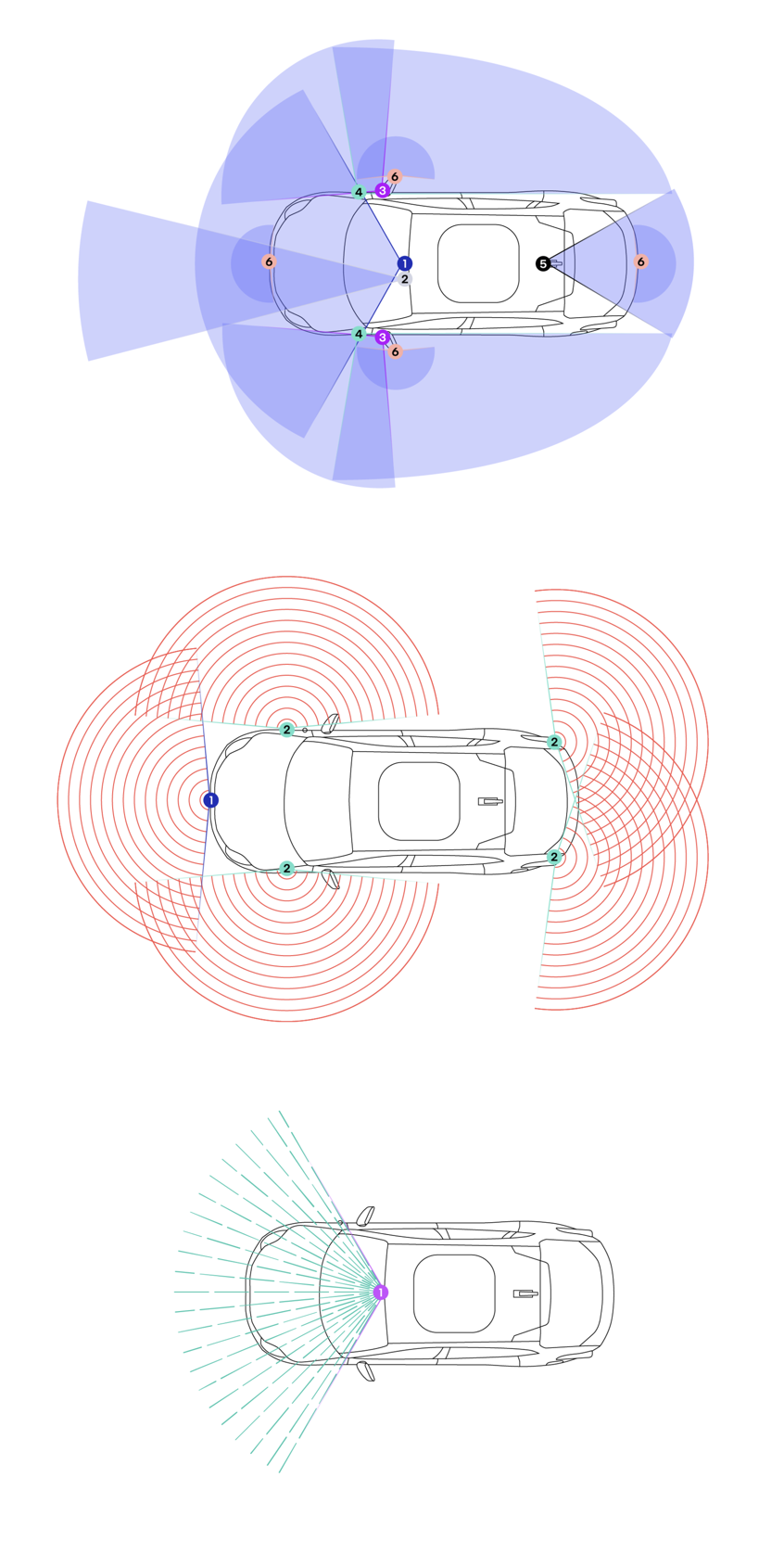

A camera subsystem plus a separate radar/LiDAR subsystem to improve robustness and safety

The image shows the position and coverage of cameras (top), radar (middle), and LiDAR (bottom) in the Chauffeur scenario

Drive

* The Drive scenario is enabled by the following components

Supported by four EyeQ6 High chips

The Responsibility-Sensitive Safety (RSS) model and driving policy, which balance safety with efficient driving

Autonomous driving maps built with Road Experience Management (REM), a crowdsourced mapping technology that supplements the data captured by the vehicle's sensors

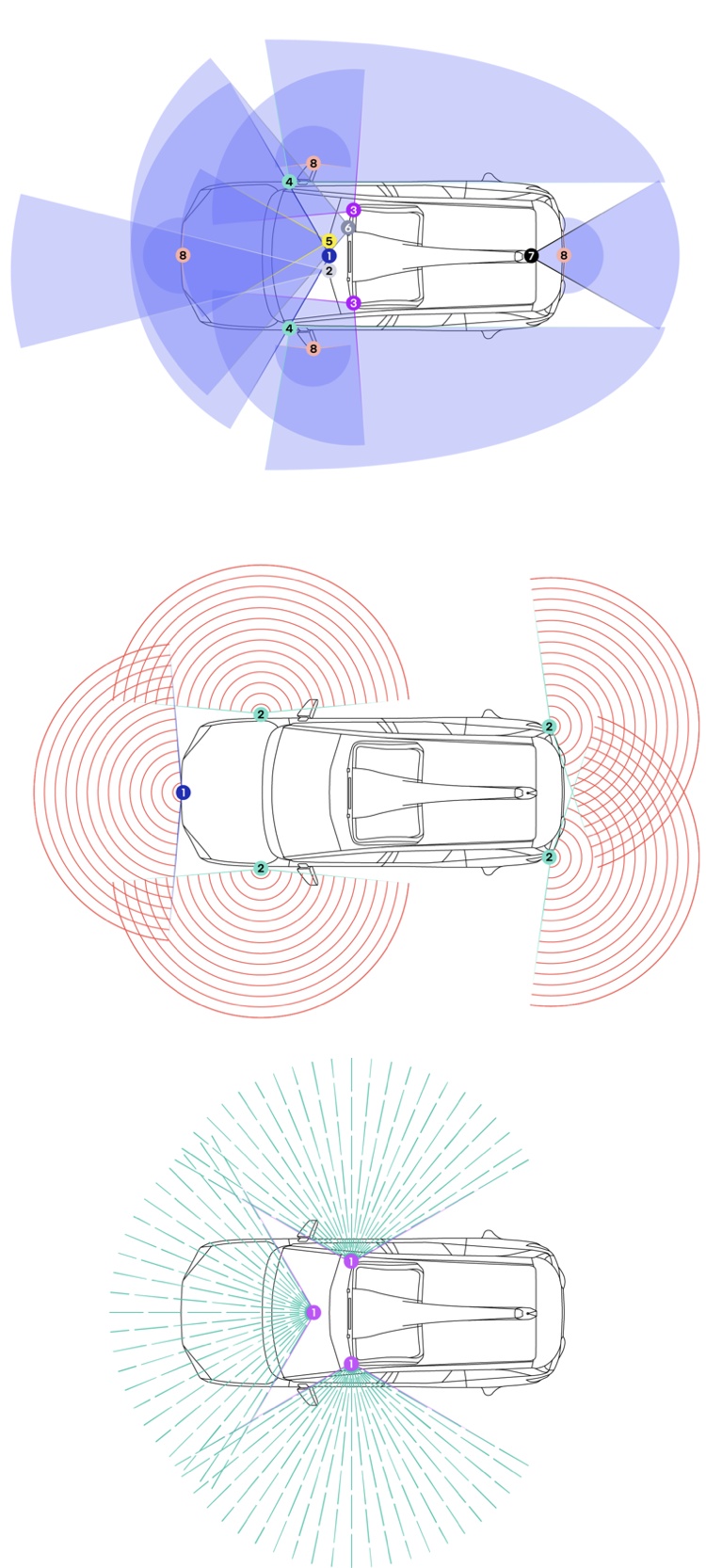

A camera subsystem plus a separate radar/LiDAR subsystem to improve robustness and safety

The image shows the position and coverage of cameras (top), radar (middle), and LiDAR (bottom) in the Drive scenario
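Since each configuration above is defined by its number of EyeQ6 High chips, its nominal deep learning compute can be estimated by multiplying the chip count by the 34 DL TOPS (INT8) per chip quoted earlier. The sketch below does exactly that. It is a simple sum of peak figures that assumes no inter-chip overhead, so it says nothing about how the workload is actually partitioned across chips; the names are illustrative.

```python
EYEQ6H_DL_TOPS_INT8 = 34  # peak INT8 deep learning TOPS per EyeQ6 High chip

# Number of EyeQ6 High chips per configuration, as listed above
CHIPS_PER_PRODUCT = {"SuperVision": 2, "Chauffeur": 3, "Drive": 4}


def nominal_dl_tops(product: str) -> int:
    """Sum of per-chip peak INT8 DL TOPS; ignores any inter-chip overhead."""
    return CHIPS_PER_PRODUCT[product] * EYEQ6H_DL_TOPS_INT8


for name in CHIPS_PER_PRODUCT:
    print(f"{name}: {nominal_dl_tops(name)} DL TOPS (INT8, nominal peak)")
```

This gives 68, 102, and 136 nominal DL TOPS for SuperVision, Chauffeur, and Drive respectively.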

Mobileye Technical Concepts

Responsibility-Sensitive Safety Model (RSS)

RSS is a formal mathematical framework that defines how autonomous vehicles should drive safely in various traffic situations. It translates the common-sense safety judgments of human drivers into verifiable, implementable mathematical rules. Rather than relying on predictions of other vehicles' behavior, it bases driving decisions on “reasonable worst-case assumptions,” so that the vehicle can make safe decisions in an uncertain environment.

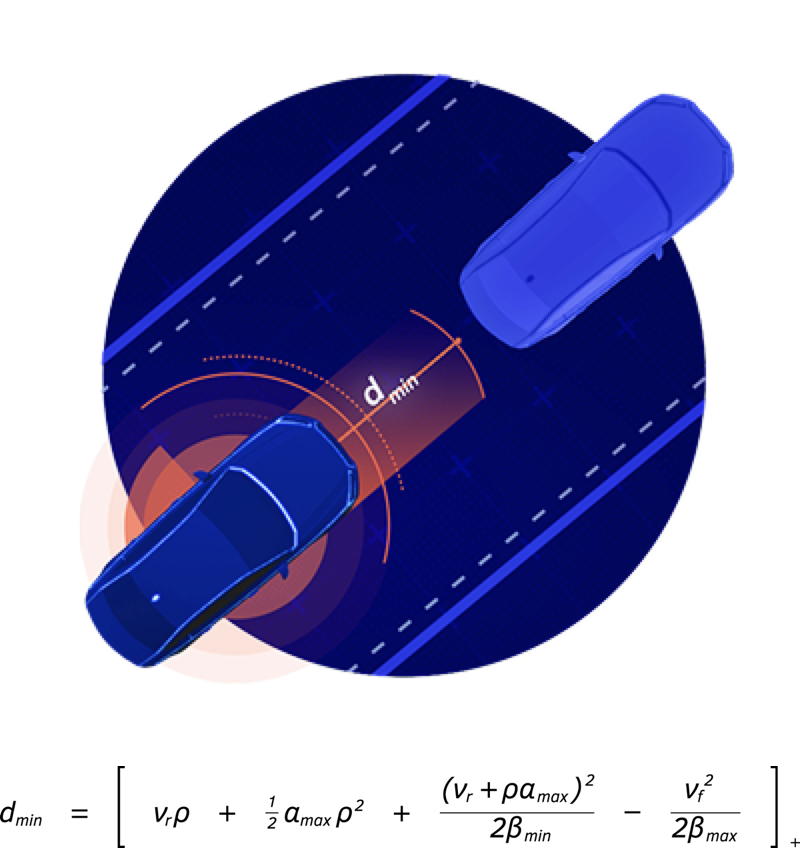

* Safety Distance – Do not collide with the vehicle in front

Drawing on basic physics and over a century of driving experience, driving instructors typically teach novices to keep a safe distance from the vehicle in front, so that there is enough time to react if it brakes suddenly. The so-called “two-second rule” is an intuitive heuristic that lets drivers estimate a safe following distance without calculating both vehicles' speeds, their own reaction time, or the braking capability of the vehicle in front.

Autonomous vehicles, by contrast, can be programmed to calculate and maintain a safe following distance precisely using mathematical formulas. Whenever the gap between the two vehicles falls below the minimum safe distance dₘᵢₙ, the autonomous vehicle begins braking until either a safe following distance is restored or it comes to a complete stop, whichever occurs first.

The image shows a scenario of maintaining a safe following distance and the related mathematical formulas
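The minimum safe distance dₘᵢₙ can be written out explicitly. The sketch below follows the longitudinal safe-distance formula from Mobileye's published RSS paper for two vehicles traveling in the same direction: the follower is assumed to accelerate at up to a_accel_max during its response time rho and then brake at no less than a_brake_min, while the lead vehicle may brake as hard as a_brake_max. The parameter values in the example are illustrative assumptions, not Mobileye's calibrated settings, and the comparison with the two-second rule is only for intuition.

```python
def rss_min_longitudinal_distance(v_rear, v_front, rho, a_accel_max, a_brake_min, a_brake_max):
    """Minimum safe following distance (m) for same-direction traffic under RSS.

    v_rear, v_front: speeds (m/s) of the following (ego) and lead vehicles
    rho: response time (s) of the following vehicle
    a_accel_max: worst-case acceleration (m/s^2) of the follower during rho
    a_brake_min: braking (m/s^2) the follower is guaranteed to apply afterwards
    a_brake_max: hardest braking (m/s^2) the lead vehicle might apply
    """
    v_after_response = v_rear + rho * a_accel_max
    follower_travel = (v_rear * rho
                       + 0.5 * a_accel_max * rho ** 2
                       + v_after_response ** 2 / (2 * a_brake_min))
    leader_travel = v_front ** 2 / (2 * a_brake_max)
    return max(follower_travel - leader_travel, 0.0)


def two_second_rule_distance(v, headway_s=2.0):
    """Following distance (m) implied by a fixed time headway."""
    return v * headway_s


# Illustrative values only: both cars at 25 m/s (90 km/h).
d_rss = rss_min_longitudinal_distance(25.0, 25.0, rho=0.5, a_accel_max=2.0,
                                      a_brake_min=4.0, a_brake_max=8.0)
d_2s = two_second_rule_distance(25.0)
print(f"RSS d_min: {d_rss:.1f} m, two-second rule: {d_2s:.1f} m")
```

With these assumed parameters the RSS distance (about 58 m) exceeds the two-second rule (50 m), because the formula explicitly budgets for the follower accelerating during its response time while the leader brakes harder than the follower is guaranteed to.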

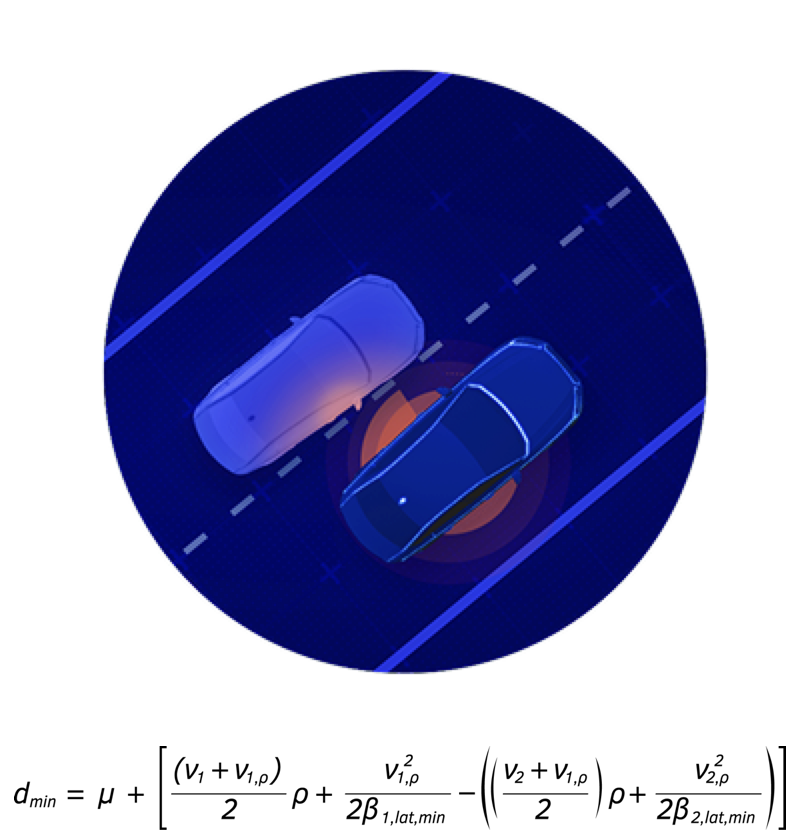

* Lane Change – Do not make reckless lane changes

The safest human drivers typically stay within their lanes and avoid dangerous, forced cut-ins when merging into another lane. The second RSS rule formalizes a lateral safe distance, so that autonomous vehicles can recognize when another vehicle cuts into their lane dangerously and compromises lateral safety.

Humans naturally make small adjustments while driving. When a vehicle cuts into their lane unsafely, experienced drivers instinctively brake lightly to restore a safe longitudinal distance from the vehicle in front, and if they judge that a collision is imminent, they also steer away from the encroaching vehicle. The RSS model formalizes these safe-distance behaviors with mathematical formulas.

The image shows a lane-change (cut-in) scenario and the related lateral safe-distance formulas
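The lateral rule can be sketched in the same way. The function below follows the structure of the lateral safe-distance formula in Mobileye's published RSS paper: during the response time rho, each vehicle is assumed to accelerate laterally toward the other at up to a_lat_accel_max, then brake laterally at a_lat_brake_min until its lateral velocity reaches zero, and a small margin mu is added on top. The sign convention (positive = rightward) and all numeric values are assumptions for illustration, and the closed form only holds when both vehicles are still closing at the end of the response time.

```python
def rss_min_lateral_distance(v_left, v_right, rho, a_lat_accel_max, a_lat_brake_min, mu=0.0):
    """Minimum lateral gap (m) between two vehicles, following the RSS lateral rule.

    Assumed sign convention: lateral velocities (m/s) are positive toward the right;
    v_left belongs to the vehicle on the left, v_right to the vehicle on the right.
    Worst case: each vehicle accelerates toward the other for rho seconds, then brakes
    laterally at a_lat_brake_min until its lateral velocity is zero. The closed form
    assumes the left vehicle is still moving right and the right vehicle still moving
    left at the end of the response time.
    """
    v_left_rho = v_left + rho * a_lat_accel_max
    v_right_rho = v_right - rho * a_lat_accel_max
    # Rightward shift of the left vehicle: response phase plus braking phase
    shift_left = (v_left + v_left_rho) / 2 * rho + v_left_rho ** 2 / (2 * a_lat_brake_min)
    # Shift of the right vehicle (negative = moving left, toward the other vehicle)
    shift_right = (v_right + v_right_rho) / 2 * rho - v_right_rho ** 2 / (2 * a_lat_brake_min)
    return mu + max(shift_left - shift_right, 0.0)


# Illustrative values: both vehicles drifting toward each other at 0.5 m/s.
gap = rss_min_lateral_distance(0.5, -0.5, rho=0.5, a_lat_accel_max=0.2,
                               a_lat_brake_min=0.8, mu=0.1)
print(f"minimum lateral gap: {gap:.2f} m")
```

If the actual lateral gap falls below this value while the vehicles are also longitudinally unsafe, the RSS response is to brake laterally and longitudinally, which mirrors the human behavior described above.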

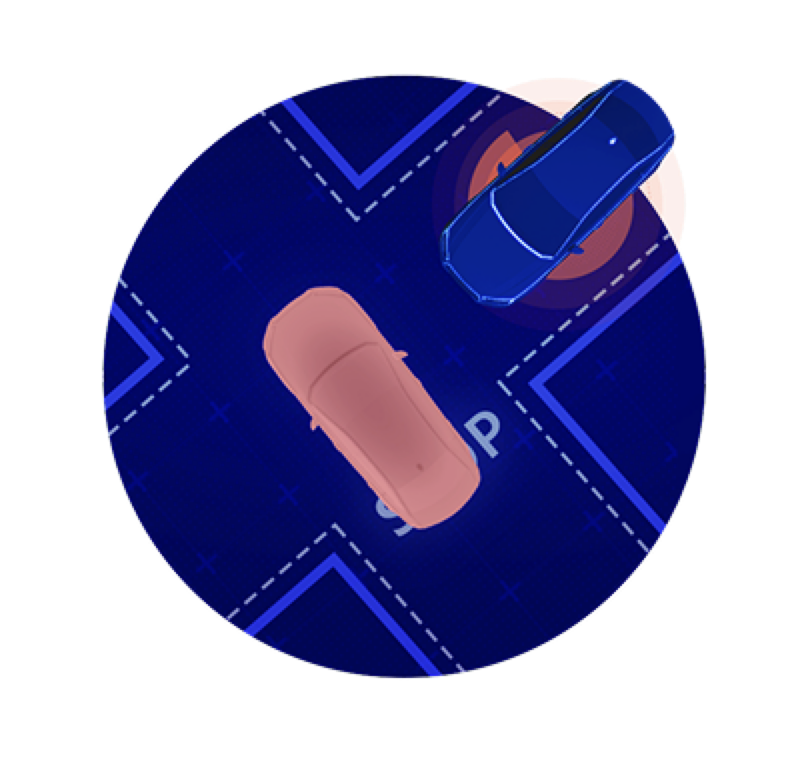

* Road Priority – Right of way is given, not taken

Lane markings, traffic signs, and traffic lights collectively define the right of way at road intersections. Under normal circumstances, human drivers trust that other vehicles will adhere to these rules and yield correctly at intersections.

In reality, however, drivers often violate right-of-way rules, and other drivers typically give up their right of way instinctively to avoid an accident. For autonomous vehicles, this judgment must be formalized so that they can protect themselves from human drivers who do not follow the rules. Even when an autonomous vehicle has the right of way under the rules, it must not allow a collision simply because it is in the right.

The image shows a scenario of road priority
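The “given, not taken” principle can be illustrated with a simple check: even when the ego vehicle has priority, it proceeds only if the other road user can still stop before the conflict point. The sketch below models stopping as a constant-speed response time followed by constant braking; the function names, the default response time, and the braking rate are hypothetical choices for illustration, not RSS's formal definition of right of way.

```python
def stopping_distance(v, rho, a_brake):
    """Distance (m) covered by a vehicle at speed v (m/s) that keeps its speed
    for rho seconds and then brakes at a constant a_brake (m/s^2)."""
    return v * rho + v ** 2 / (2 * a_brake)


def ego_may_proceed(ego_has_priority, other_speed, other_distance_to_conflict,
                    rho=0.5, a_brake=5.0):
    """Hypothetical right-of-way check: priority alone is not enough to proceed."""
    if not ego_has_priority:
        return False  # the rules give the other road user priority: yield
    # Right of way is given, not taken: if the other vehicle can no longer
    # stop before the conflict point, yield anyway to avoid a collision.
    return stopping_distance(other_speed, rho, a_brake) <= other_distance_to_conflict


# Ego has priority; the crossing car travels at 10 m/s and needs 15 m to stop.
print(ego_may_proceed(True, 10.0, 20.0))  # it can still stop in time
print(ego_may_proceed(True, 10.0, 10.0))  # it cannot stop in time, so ego yields
```

A real system would also observe whether the other vehicle is actually slowing down, rather than relying only on whether it physically could.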

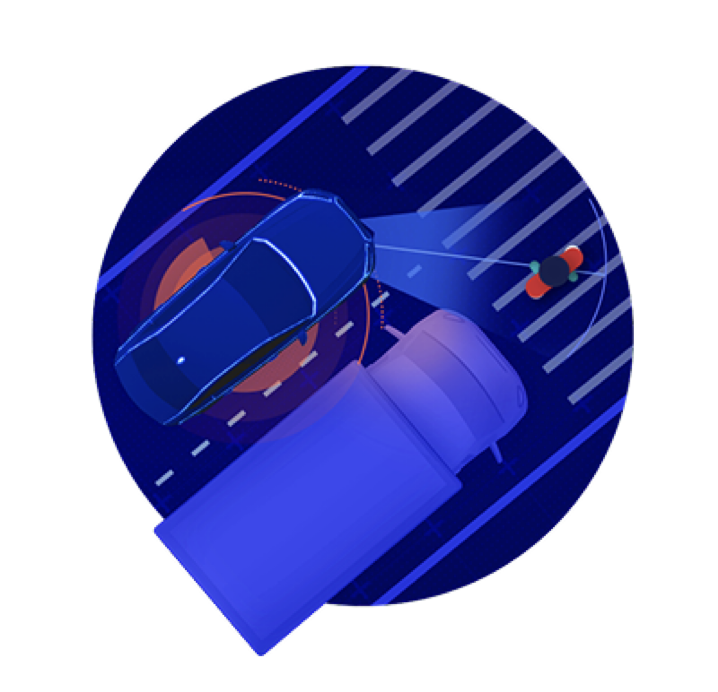

* Limited Visibility – Maintain caution in areas with obstructed views

Many factors can affect visibility while driving. Besides weather, road terrain, buildings, and other vehicles can block the view of the road and other road users. Human drivers naturally adjust their behavior to the surrounding environment to avoid potential dangers. For example, in crowded school pick-up zones, people instinctively drive more cautiously because children may act unpredictably and often do not realize that a driver's view of them may be blocked.

For autonomous vehicles to share the road safely with humans, they must make similar assumptions and exercise sufficient caution in areas with obstructed views to keep all road users safe.

The image shows a scenario of limited visibility and maintaining caution
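One simple way to make “caution in areas with obstructed views” concrete is to cap speed so that the vehicle can always stop within the distance it can actually see, in case a road user emerges from behind an obstruction. The sketch below solves v·ρ + v²/(2a) = d for v; it is a simplified kinematic illustration under assumed parameters, not Mobileye's formal treatment of occlusions.

```python
import math


def max_speed_for_visible_distance(d_visible, rho, a_brake):
    """Highest speed (m/s) from which the vehicle can stop within d_visible (m),
    assuming a response time rho (s) at constant speed, then braking at a_brake (m/s^2).

    Solves v * rho + v**2 / (2 * a_brake) = d_visible for v >= 0.
    """
    return -a_brake * rho + math.sqrt((a_brake * rho) ** 2 + 2 * a_brake * d_visible)


# Illustrative values: 20 m of clear view past a parked van, 0.5 s response, 4 m/s^2 braking.
v_max = max_speed_for_visible_distance(20.0, rho=0.5, a_brake=4.0)
print(f"speed cap: {v_max:.1f} m/s ({v_max * 3.6:.0f} km/h)")
```

The shorter the visible distance, the lower the cap, which matches the intuition of slowing down near school zones and blind corners.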

* Avoid Collisions – If an accident can be avoided without causing another accident, it must be avoided

The first four rules formally define how to identify dangerous situations and how an autonomous vehicle should respond to them. The fifth rule covers dangerous situations that arise so suddenly that a collision cannot be avoided without violating one or more traffic rules.

For example, if an object suddenly appears in an autonomous vehicle's path, the vehicle must take evasive action, such as changing lanes, to avoid a collision, provided that the maneuver does not cause another accident. Even if the maneuver breaks traffic rules concerning lane markings, shoulders, and so on, the vehicle's priority remains avoiding the collision, as long as it can do so without creating new dangers.

The image shows a scenario of avoiding collisions
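The fifth rule amounts to a priority ordering over candidate maneuvers: first discard anything that fails to avoid the collision or creates a new one, then prefer rule-compliant options, and accept a traffic-rule violation only when it is the only safe choice. The sketch below encodes that ordering; the Maneuver fields and the candidate list are hypothetical, and in a real system each flag would come from trajectory prediction rather than being given.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Maneuver:
    name: str
    avoids_collision: bool       # clears the obstacle in the ego vehicle's path
    creates_new_conflict: bool   # would cause a collision with someone else
    violates_traffic_rule: bool  # e.g. crossing a solid line or using the shoulder


def choose_evasive_maneuver(candidates: Sequence[Maneuver]) -> Optional[Maneuver]:
    """Pick a maneuver that avoids the collision without causing another one,
    preferring rule-compliant options. Returns None if no candidate qualifies."""
    safe = [m for m in candidates if m.avoids_collision and not m.creates_new_conflict]
    if not safe:
        return None
    # False sorts before True, so rule-compliant maneuvers win when available.
    return min(safe, key=lambda m: m.violates_traffic_rule)


options = [
    Maneuver("brake in lane", avoids_collision=False, creates_new_conflict=False,
             violates_traffic_rule=False),
    Maneuver("swerve into occupied lane", avoids_collision=True, creates_new_conflict=True,
             violates_traffic_rule=False),
    Maneuver("move onto shoulder", avoids_collision=True, creates_new_conflict=False,
             violates_traffic_rule=True),
]
choice = choose_evasive_maneuver(options)
print(choice.name if choice else "no safe evasive maneuver")
```

Here the shoulder is chosen despite the rule violation, because it is the only candidate that avoids the collision without endangering anyone else.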

Map System (REM)

To enable autonomous driving worldwide, Mobileye has built a crowdsourced, continuously updated global map system. Rather than pursuing geometric accuracy in global coordinates for its own sake, it provides precisely the information autonomous vehicles need. It is a map dedicated to autonomous driving.

* Operational Process

1. Data Collection: Vehicles equipped with Mobileye technology automatically collect anonymized road data while driving.

2. Identification and Transmission: REM classifies the relevant information into labeled data points and transmits them to the cloud using little bandwidth.

3. Aggregation and Alignment: Data points collected on different drives along the same road are aggregated and aligned.

4. Modeling: After alignment, algorithms determine the precise contours of the road infrastructure.

5. Semantic Recognition: Algorithms add semantic information so that vehicles can understand their immediate driving environment.

6. Road Book: This information is compiled into the Mobileye road book, a global database containing driving paths, semantic road information, and summarized driving behaviors.

7. Positioning: The global Mobileye road book is continuously distributed to series-production vehicles for use in autonomous driving applications.
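Step 3 (aggregation and alignment) can be illustrated with a deliberately simplified sketch. Here each drive reports landmark positions in its own slightly offset frame; every drive is shifted onto the first drive's frame by the mean offset over shared landmarks (a translation-only alignment), and positions are then averaged across drives. The data layout and function are hypothetical: REM's actual alignment method is not described in this article, and a production system would also need to handle rotation, noise, and outliers, which this sketch ignores.

```python
from statistics import fmean
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Drive = Dict[str, Point]  # landmark id -> (x, y) as observed on one drive


def align_and_aggregate(drives: List[Drive]) -> Dict[str, Point]:
    """Align drives to the first one by mean translation, then average landmarks."""
    reference = drives[0]
    aligned = [reference]
    for drive in drives[1:]:
        shared = [k for k in drive if k in reference]
        if not shared:
            continue  # no common landmarks: this drive cannot be aligned, so skip it
        dx = fmean(reference[k][0] - drive[k][0] for k in shared)
        dy = fmean(reference[k][1] - drive[k][1] for k in shared)
        aligned.append({k: (x + dx, y + dy) for k, (x, y) in drive.items()})

    # Collect every aligned observation of each landmark, then average them.
    observations: Dict[str, List[Point]] = {}
    for drive in aligned:
        for k, pos in drive.items():
            observations.setdefault(k, []).append(pos)
    return {k: (fmean(p[0] for p in ps), fmean(p[1] for p in ps))
            for k, ps in observations.items()}


ref = {"sign_1": (0.0, 0.0), "pole_7": (10.0, 0.0), "lane_end": (10.0, 5.0)}
shifted = {k: (x + 1.0, y - 2.0) for k, (x, y) in ref.items()}  # same road, offset frame
partial = {"sign_1": (-0.5, 0.5), "pole_7": (9.5, 0.5)}          # fewer landmarks seen
print(align_and_aggregate([ref, shifted, partial]))
```

Because the synthetic drives differ from the reference only by a constant offset, the aggregated landmarks coincide with the reference positions; with real data, averaging over many drives is what reduces per-drive noise.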

* Advantages of Mobileye Maps

1. REM maps do not rely on other data sources: the Mobileye road book is built independently of any third-party map, entirely through the seven steps above.

2. Unlike traditional static maps, the Mobileye road book captures how drivers have historically driven on any given road, which better informs an autonomous vehicle's decision-making.

3. Privacy is a fundamental principle for Mobileye. Data collection follows strict procedures to comply with data protection and privacy laws, and all collected and processed data is anonymized.

The table compares the Mobileye map system with traditional map systems across several dimensions
