In-Depth Analysis of Automotive SoC Chip Technology Architecture and Industry Ecosystem (2025 Edition)

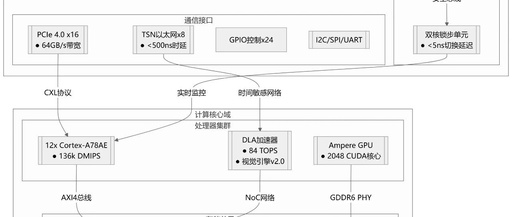

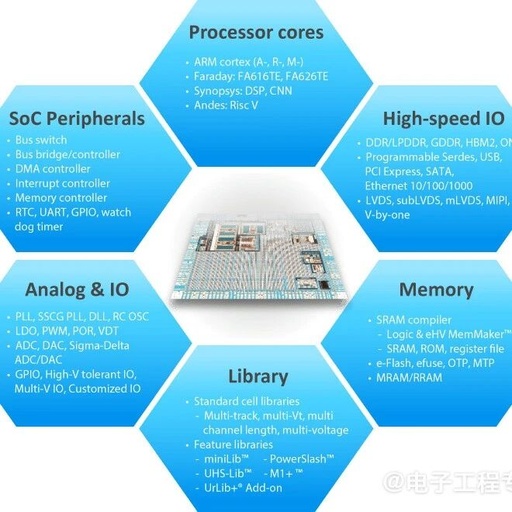

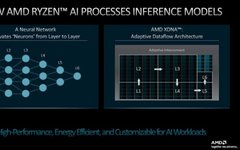

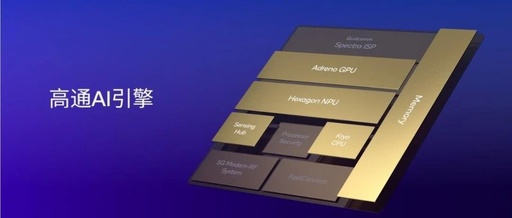

1. Heterogeneous Computing Units: The Collaborative Logic of the Car’s “Brain”

1. What is Heterogeneous Computing?

“Heterogeneous computing” in an automotive SoC refers to the integration of multiple processing units (such as CPUs, GPUs, and AI accelerators) on a single chip, where they work together to meet a vehicle’s diverse needs, from real-time control (like braking) to …