Source: Lao Shi Talks Chips

Many of the world’s top “architects” may be people you have never heard of. They design and build remarkable structures that you may never have seen, such as the complex systems inside chips that begin as sand. If you use a mobile phone or a computer, or send and receive information over the internet, you are constantly benefiting from the work of these architects.

Dr. Doug Burger is one of these “architects.” He is currently a Technical Fellow at Microsoft, having previously served as a distinguished engineer at Microsoft Research and a professor of computer science at the University of Texas at Austin. He is also the chief architect and lead of Microsoft’s FPGA projects, Catapult and Brainwave. In 2018, on a Microsoft Research podcast, Doug Burger shared his views on the development of the chip industry in the post-Moore’s Law era and looked ahead to the direction of chip technology in the era of artificial intelligence.

(Dr. Doug Burger, image from Microsoft)

Lao Shi has organized and edited his views. The first article is here. This article is the second, focusing on Dr. Doug Burger’s comprehensive analysis of the unique advantages of FPGA in the era of artificial intelligence, as well as his profound thoughts on the development of AI technology. The article is lengthy, but it is a deep yet accessible exposition of his decades of experience, showcasing the mastery of a great mind, and is worth reading.

Note: The “I” in the following text refers to Dr. Doug Burger.

Table of Contents

1. What is the Dark Silicon Effect

2. FPGA: An Effective Solution to the Dark Silicon Effect

3. What are the Unique Advantages of Using FPGA

4. What is the Catapult Project

5. Brainwave Project and Real-time AI

6. Key Criteria for Evaluating Real-time AI Systems

7. What is the Future Development Path of AI?

1. What is the Dark Silicon Effect

Before I joined Microsoft, I was conducting a series of research projects with my PhD student Hadi Esmaeilzadeh, who is now an associate professor at the University of California, San Diego. At that time, the main trend in academia and industry was multi-core architecture. Although it had not yet become a formal consensus, it was a very hot research direction. People believed that if we could find ways to write and run parallel software, we could scale processor architectures directly to thousands of cores. Hadi and I were skeptical about this.

As a result, we published a paper in 2011, which gained significant recognition. Although the term “dark silicon” was not explicitly defined in that paper, its meaning was widely acknowledged.

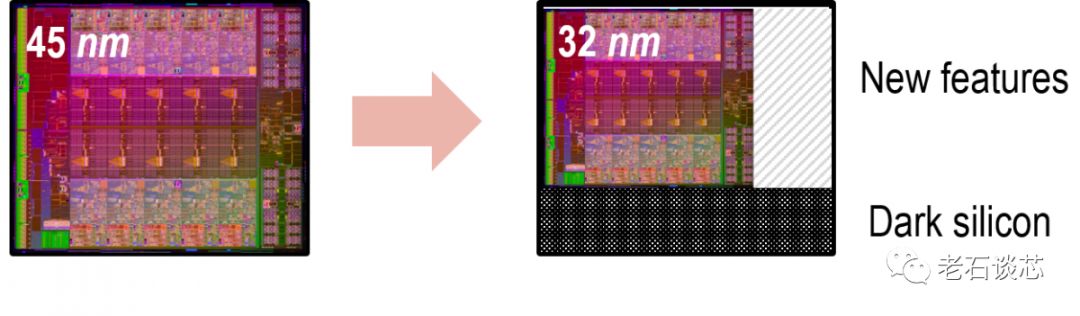

The dark silicon effect refers to the fact that while we can continuously increase the number of processor cores, we cannot operate them all simultaneously due to power consumption limitations. It is like a building with many rooms, but due to excessive power consumption, you cannot turn on the lights in every room, making the building appear to have many dark areas at night. The fundamental reason for this is that in the post-Moore’s Law era, the energy efficiency of transistors has stagnated.

(Diagram of dark silicon, image from NYU)

Thus, even if people develop parallel software and continuously increase the number of cores, the performance improvements will be much smaller than before. Therefore, the industry needs to make further progress in other areas to overcome the problem of “dark silicon.”
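The power-budget argument above can be made concrete with a little arithmetic. The following is only a minimal sketch: the function name `active_fraction` and all of the numbers (a 100 W budget, 2 W per active core) are hypothetical, and it assumes that the power drawn by each core stays constant as more cores are added, which is the stagnation in energy efficiency described above.

```python
def active_fraction(num_cores: int, watts_per_core: float, power_budget_watts: float) -> float:
    """Fraction of cores that can be powered at the same time under a fixed power budget."""
    max_active = int(power_budget_watts // watts_per_core)
    return min(num_cores, max_active) / num_cores


# Hypothetical numbers: a 100 W chip budget and 2 W per active core.
for cores in (16, 64, 256, 1024):
    lit = active_fraction(cores, watts_per_core=2.0, power_budget_watts=100.0)
    print(f"{cores:5d} cores: {lit:.0%} can be lit, {1 - lit:.0%} must stay dark")
```

Under these assumed numbers the budget covers at most 50 active cores, so every core added beyond that point only increases the dark fraction of the chip.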

2. FPGA: An Effective Solution to the Dark Silicon Effect

In my view, a viable solution is to adopt “custom computing,” which means optimizing hardware design for specific workloads and scenarios. However, the main issue with custom computing or custom chips is the high cost. For a complex cloud computing scenario, neither designers nor users would adopt a system composed of 47,000 different chips.

Therefore, we placed our bets on a chip called the FPGA. FPGA stands for “Field Programmable Gate Array,” which is essentially a programmable chip. Hardware designs can be written into its programmable memory again and again, allowing the same FPGA to implement different hardware designs and functions. You can also change the functions it runs after it has been deployed, which is why it is called “field programmable.” In fact, you can change the hardware design running on an FPGA every few seconds, which makes this chip very flexible.

(Intel Stratix 10 FPGA chip, image from Intel)

Based on these characteristics, we have heavily invested in FPGA technology and widely deployed it in Microsoft’s cloud data centers. At the same time, we have begun to transition many important applications and functions from software-based implementations to FPGA-based hardware implementations. This can be seen as a very interesting computing architecture, which will also be our general computing platform based on customized hardware.

By using FPGAs, we can explore custom computing and custom chip designs early on, while keeping the hardware homogeneous and compatible with existing architectures.

If an application scenario or algorithm is evolving too quickly, or if its hardware scale is too small, we can continue to use FPGAs to implement these hardware functions. As the application scale gradually expands, we can choose an appropriate time to convert these mature custom hardware designs directly into custom chips, improving their stability, reducing power consumption, and lowering costs.

Flexibility is the most important feature of FPGA. It is worth noting that FPGA chips have been widely used in the telecommunications field. These chips are very good at processing data streams quickly and are also used for functional testing before tape-out. However, in cloud computing, no one has truly succeeded in deploying FPGA on a large scale. By “deployment,” I mean not just for prototyping or proof of concept, but for actual industrial-grade use.

3. What are the Unique Advantages of Using FPGA

First, I want to say that both CPU and GPU are amazing computing architectures designed for different workloads and application scenarios.

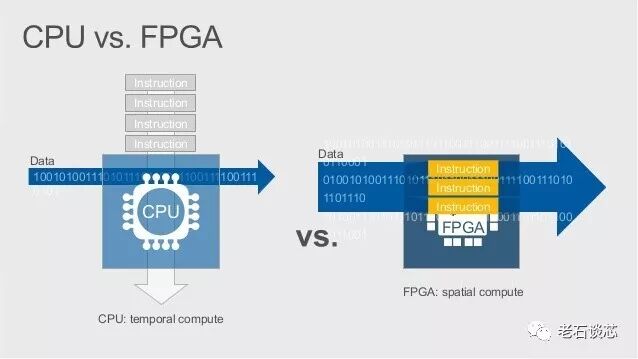

The CPU is a very general architecture that works by executing a series of instructions drawn from its “instruction set.” In simple terms, the CPU fetches a small portion of data from memory, places it in registers or caches, and then uses a series of instructions to operate on that data. After processing, the data is written back to memory, another small portion of data is fetched, and the instructions are applied again, repeating this cycle. I refer to this method of computation as “temporal computing.”

However, if the data to be processed is too large, or arrives too quickly, the CPU cannot handle it efficiently. This is why we often use custom chips, such as network card chips, rather than CPUs to process high-speed network traffic. In a CPU, processing even a single byte of data requires a series of instructions, and when data flows into the system at 12.5 billion bytes per second, this method of processing cannot keep up, even with many threads.
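To see why the 12.5 billion bytes per second figure is so demanding, it helps to work out how many CPU cycles are available for each incoming byte. This is a rough sketch only: the helper `cycles_per_byte`, the 3 GHz clock, and the core counts are assumptions chosen for illustration, and the model ignores memory stalls and every other real-world overhead.

```python
def cycles_per_byte(bytes_per_second: float, clock_hz: float, cores: int = 1) -> float:
    """CPU cycles available per incoming byte, summed over all cores."""
    return clock_hz * cores / bytes_per_second


LINE_RATE = 12.5e9  # bytes per second, the figure quoted above (100 Gbit/s)
CLOCK_HZ = 3.0e9    # hypothetical 3 GHz core clock

print(f"1 core:   {cycles_per_byte(LINE_RATE, CLOCK_HZ):.2f} cycles per byte")
print(f"16 cores: {cycles_per_byte(LINE_RATE, CLOCK_HZ, cores=16):.2f} cycles per byte")
```

With these assumed numbers a single core has roughly a quarter of a cycle per byte, and even sixteen perfectly parallel cores have fewer than four cycles per byte, which leaves little room for a multi-instruction sequence per byte.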

GPUs excel at a type of parallel processing called “Single Instruction, Multiple Data” (SIMD). A GPU contains many identical computing cores that can process similar but not entirely identical data sets. A single instruction can therefore make all of these cores perform the same operation, each on its own data, in parallel.

The FPGA, by contrast, is essentially a transpose of the CPU computing model. Instead of holding data fixed in the architecture and processing it with a stream of instructions, the FPGA holds the “instructions” fixed in the architecture and runs a stream of data through them.

(Comparison of CPU and FPGA computing models, image from Microsoft)

I refer to this method of computation as “structural computing,” while others call it “spatial computing,” in contrast to the CPU’s “temporal computing” model. The name doesn’t matter much; the core idea is to implement a computation directly as hardware circuits and then continuously feed data streams through them. In cloud computing, this architecture is very effective for network data arriving at high speed and serves as a good complement to CPUs.
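The contrast between the two models can be sketched in software. The code below is an analogy, not a description of how an FPGA is actually programmed: `run_temporal`, `stage`, and `build_pipeline` are hypothetical names, and Python still runs the stages one after another, whereas in real hardware every stage would be a physical circuit working at the same time.

```python
from typing import Callable, Iterable, Iterator, List

Op = Callable[[int], int]


def run_temporal(program: List[Op], data: Iterable[int]) -> List[int]:
    """Temporal model: one general unit steps through the instructions for each item."""
    results = []
    for item in data:                # load one item
        register = item
        for instruction in program:  # fetch and execute each instruction in turn
            register = instruction(register)
        results.append(register)     # write the result back
    return results


def stage(op: Op, upstream: Iterator[int]) -> Iterator[int]:
    """One fixed processing stage: applies a single operation to whatever flows in."""
    for item in upstream:
        yield op(item)


def build_pipeline(ops: List[Op], source: Iterable[int]) -> Iterator[int]:
    """Spatial model: wire the stages together once, then stream data through them."""
    stream = iter(source)
    for op in ops:
        stream = stage(op, stream)   # connect the next stage to the previous one
    return stream


ops = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
print(run_temporal(ops, range(5)))
print(list(build_pipeline(ops, range(5))))
```

Both calls produce the same results. The difference lies in where the work is organized: in the first the operations are replayed for every item, and in the second the operations are fixed in place and only the data moves.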

4. What is the Catapult Project

The main goal of the Catapult project is to deploy FPGA on a large scale in Microsoft’s cloud data centers. Although this project encompasses engineering practices such as circuit and system architecture design, its essence is still a research project.

At the end of 2015, we began deploying Catapult FPGA boards on almost every new server purchased by Microsoft. These servers are used for Microsoft’s Bing search, Azure cloud services, and other applications. So far, we have scaled up significantly, and FPGA has been deployed on a large scale worldwide. This has made Microsoft one of the largest FPGA customers in the world.

(Catapult FPGA board, image from Microsoft)

Internally at Microsoft, many teams are using Catapult FPGA to enhance their services. At the same time, we use FPGA to accelerate many network functions in cloud computing, allowing our customers to receive faster, more stable, and secure cloud computing and network services than ever before. For example, when network packets are transmitted at a rate of 50 billion bits per second, we can use FPGA to control, classify, and rewrite these packets. In contrast, if we were to use CPUs for these tasks, it would require massive CPU core resources. Therefore, for application scenarios like ours, FPGA is a better choice.

(Microsoft’s FPGA board, image from Microsoft)

5. Brainwave Project and Real-time AI

Artificial intelligence has made significant progress, largely thanks to the development of deep learning. People have gradually realized that once you have deep learning algorithms and models and have built a deep neural network, you need a sufficient amount of data to train it. Only with more data can deep neural networks grow larger and perform better. With deep learning, we have made great strides in many traditional AI fields, such as machine translation, speech recognition, and computer vision. At the same time, deep learning is gradually replacing the dedicated algorithms that were developed in these fields over many years.

These tremendous developments and changes have prompted me to think about their impact on semiconductors and chip architecture. Thus, we began to focus on laying out customized hardware architectures for AI, machine learning, and especially deep learning, which is the main background for the Brainwave project.

In the Brainwave project, we proposed a deep neural network processor, also known as a Neural Processing Unit (NPU). Applications like Bing search require a great deal of computing power, because only through continuous learning and training can they provide better search results to users. We therefore use FPGAs to accelerate large deep neural networks and return results in a very short time. This computing architecture has now been running globally for some time. At the 2018 Microsoft Developer Conference, we officially released the preview version of the Brainwave project on Azure cloud services. We also provided some users with FPGA boards so they could use their own servers to obtain AI models from Azure and run them.

(Brainwave FPGA board, image from Microsoft)

For the Brainwave project, another very important issue is the inference of neural networks. Many current technologies use a method called batch processing. For example, you need to collect many different requests together, package them, and send them to the NPU for processing, then receive all the answers at once.

I often liken this to standing in line at a bank: you are second in line, but there are 100 people waiting in total. The teller collects everyone’s information, asks each person what business they want to conduct, processes all the transactions, and then hands the money and receipts back to everyone. Everyone’s business is completed at the same time. This is batch processing.

For batch processing applications, good throughput can be achieved, but it often comes with high latency. This is why we are trying to promote the development of real-time AI.
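The latency cost of batching can be illustrated with a simple model of the bank line. This is a sketch under stated assumptions: the function `batch_latencies` and all numbers are hypothetical, requests are assumed to arrive at a steady rate, processing starts only when the batch is full, and all answers are returned together. The model covers only the latency side; it does not capture the throughput gain that comes from hardware handling many requests at once, which is the reason batching is used in the first place.

```python
from typing import List


def batch_latencies(arrival_gap_ms: float, batch_size: int, service_ms: float) -> List[float]:
    """Latency seen by each request in one batch, in arrival order.

    Requests arrive every `arrival_gap_ms`. Work starts when the batch is full,
    takes `service_ms` per request, and all answers are returned together.
    """
    last_arrival = (batch_size - 1) * arrival_gap_ms
    finish = last_arrival + batch_size * service_ms
    return [finish - i * arrival_gap_ms for i in range(batch_size)]


# Hypothetical numbers: one request per millisecond, 0.1 ms of work per request.
batched = batch_latencies(arrival_gap_ms=1.0, batch_size=100, service_ms=0.1)
single = batch_latencies(arrival_gap_ms=1.0, batch_size=1, service_ms=0.1)

print(f"batch of 100: first request waits {batched[0]:.1f} ms, last waits {batched[-1]:.1f} ms")
print(f"batch of 1:   request waits {single[0]:.1f} ms")
```

In this model the first customer in a batch of 100 waits for the other 99 to arrive and then for the whole batch to be processed, while a request served on its own waits only for its own processing time.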

6. Key Criteria for Evaluating Real-time AI Systems

One of the main performance indicators for evaluating real-time AI is latency. However, how small latency needs to be to count as “small enough” is more of a philosophical question; in practice, it depends on the specific application scenario. For example, if you are monitoring multiple signals over the network and analyzing where an emergency is occurring, then a few minutes may be fast enough. But if you are having a conversation with someone over the network, even very small delays and stutters can affect the quality of the call, as when two people keep talking over each other during a live television interview.

Another example is Microsoft’s other AI technology, the so-called HPU (Holographic Processing Unit), which is used in HoloLens devices. HoloLens is a smart glasses device that provides mixed reality and augmented reality functions, and its HPU also has neural network processing capabilities.

(Astronaut Scott Kelly using HoloLens on the International Space Station, image from NASA)

The HPU needs to analyze the environment around the user in real time so that it can seamlessly display virtual reality content as you look around. In this case, even a latency of just a few milliseconds can affect the user’s experience.

In addition to speed, another important factor is cost. Suppose you want to process billions of images or millions of lines of text to analyze and summarize the questions people may ask or the answers they may be looking for, as many search engines do, or suppose a doctor wants to find potential cancer indicators in a large number of radiology scans. For these types of applications, the cost of the service becomes very important. In many cases, we need to balance two things: how fast the system can process requests, and how much each service request costs.

In many cases, increasing the system’s processing speed inevitably means more investment and higher costs, which makes it difficult to satisfy both goals. This is where the main advantage of the Brainwave project lies: by using FPGAs, I believe we are in a very favorable position on both counts. In terms of performance, we are the fastest, and in terms of cost, we are likely the cheapest.

7. What is the Future Development Path of AI?

To be honest, I am not worried at all about an artificial intelligence doomsday. Compared with the intelligence of any existing biological system, artificial intelligence is still thousands of times less efficient. You could say that our current AI is not really that “intelligent.” That said, we still need to pay attention to the development of AI and keep it in check on an ethical level.

Regardless, our work has, to some extent, improved the efficiency of computation, making it possible to help solve significant scientific problems, and I feel a strong sense of accomplishment in this.

For those considering research in hardware systems and computer architecture, the most important thing is to find the “North Star” that fills you with passion and to pursue it relentlessly, without worrying too much about career planning or job choices; trust that the path will become clear once you get there. The work you do should make you feel that it can truly bring about change, and it should help you keep moving forward on that path.

People are now beginning to realize that beyond the heterogeneous accelerators of what I call the “post-Von Neumann era,” there are far deeper things waiting for us to explore. We are approaching the end of Moore’s Law, and the computing architecture based on the Von Neumann model has existed for a very long time. Since Von Neumann invented this architecture in the 1940s, it has achieved remarkable success.

However, now, in addition to this computing structure, various hardware accelerators have emerged, and many new architectures are being developed, but overall, these new structures are in a relatively chaotic state.

I believe that beneath this chaotic surface lies deeper truths, which will be the most important discoveries for people in the next stage, and this is what I often think about.

I have gradually come to believe that things that may already be commonplace will be the basis of the next huge leap in computing architecture. Of course, I could be completely wrong, but that is the fun of scientific research.
