Why Has LoRA Become an Indispensable Core Technology for Fine-Tuning Large Models?

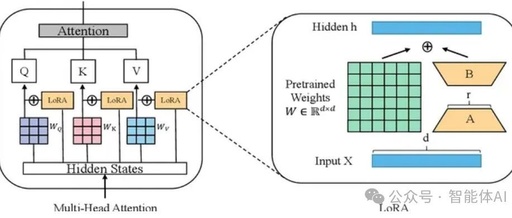

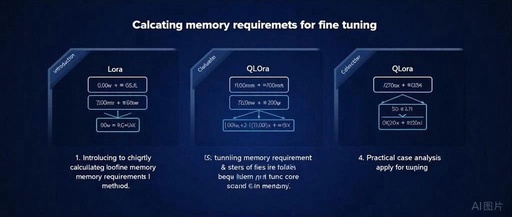

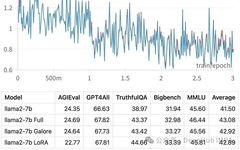

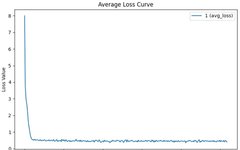

In the field of artificial intelligence, large language models (LLMs) such as Claude, LLaMA, and DeepSeek are becoming increasingly powerful. However, adapting these models to a specific task, such as legal Q&A, medical dialogue, or querying a company's internal knowledge base, has traditionally meant “fine-tuning” the model, which often entails significant computational overhead and high resource costs.