Opportunities and Challenges of Edge Deployment of GenAI: NPU as the Key to Breakthrough

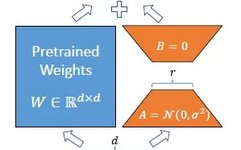

Over the past decade, artificial intelligence (AI) and machine learning (ML) have changed significantly: convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are giving way to Transformers and generative artificial intelligence (GenAI). This shift is driven by the industry's demand for models that are efficient, accurate, context-aware, and capable of handling complex tasks. Initially, AI …