NVIDIA Introduces New SOTA Fine-Tuning Method for LLMs: DoRA

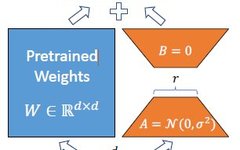

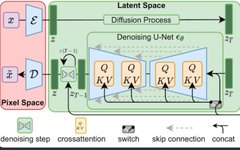

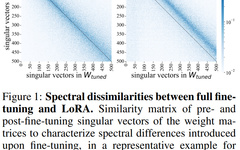

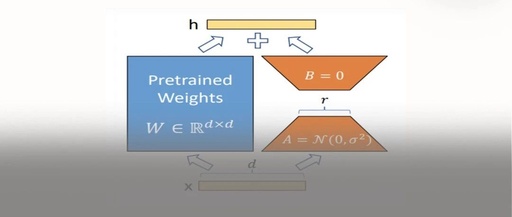

LoRA, the mainstream method for fine-tuning LLMs, has a new variant. NVIDIA recently partnered with the Hong Kong University of Science and Technology to announce an efficient fine-tuning technique called DoRA. It decomposes each pre-trained weight matrix into a magnitude component and a direction component and applies low-rank updates to the direction, which allows more granular model updates and significantly improves fine-tuning efficiency. In a series of downstream tasks, both training …
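To make the idea concrete, here is a minimal NumPy sketch of how a DoRA-style weight could be recomposed. It is not taken from this article: it assumes the commonly described formulation in which the adapted weight is a learnable per-column magnitude `m` times the column-normalized direction `W0 + B @ A`. The function name `dora_weight`, the shapes, and the initialization values are all illustrative assumptions.

```python
import numpy as np

def dora_weight(W0, A, B, m):
    """Recompose a weight matrix from a magnitude vector and a direction.

    Assumed formulation (not confirmed by the article):
        W' = m * (W0 + B @ A) / ||W0 + B @ A||_column
    """
    V = W0 + B @ A                                        # frozen weight plus low-rank update
    col_norm = np.linalg.norm(V, axis=0, keepdims=True)   # per-column L2 norm, shape (1, k)
    return m * V / col_norm                               # rescale each unit column by its magnitude

# Hypothetical sizes: a d x k weight matrix with rank-r adapters.
rng = np.random.default_rng(0)
d, k, r = 6, 4, 2

W0 = rng.normal(size=(d, k))                    # pre-trained weight, kept frozen
A = rng.normal(size=(r, k)) * 0.01              # trainable low-rank factor
B = np.zeros((d, r))                            # trainable, zero-initialized as in LoRA
m = np.linalg.norm(W0, axis=0, keepdims=True)   # trainable magnitude, starts at the column norms of W0

# With B = 0 the low-rank update vanishes, so the recomposed weight equals W0.
W_init = dora_weight(W0, A, B, m)
```

Under these assumptions, training would update only `A`, `B`, and `m`. Because each column is normalized before being scaled, changes to `A` and `B` alter only the direction of a column, while `m` alone controls its length.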