No-Code LoRA Fine-Tuning: Easily Build Your Own LLM

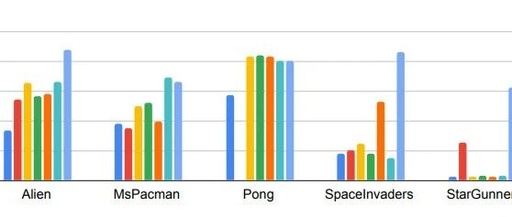

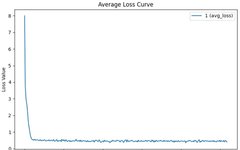

In the previous article, we discussed the theory of fine-tuning. How do we put it into practice? First, let’s look at what fine-tuning achieves. Its ultimate goal is to improve the model’s performance on specific tasks. We demonstrate the effect by comparing the original model, the model with added system …