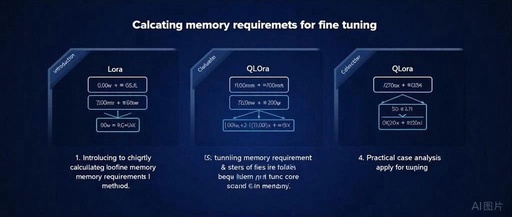

A Beginner-Friendly Guide to Calculating GPU Memory Requirements for LoRA and QLoRA Fine-Tuning

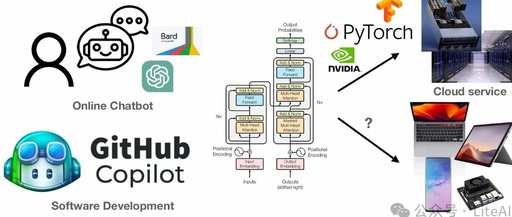

I recently put together a simple guide to the GPU memory requirements of fine-tuning with LoRA and QLoRA, to help you estimate how much memory a fine-tuning run will need. Below, we walk through it step by step; only minimal background knowledge is required.

1. What are LoRA and QLoRA?

LoRA (Low-Rank Adaptation): This is a …
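To make "estimating the memory needed" concrete, here is a minimal Python sketch. It is not the author's method but a simplified model under stated assumptions: memory = frozen base weights + trainable adapter weights, their gradients, and Adam optimizer states. Activations, the CUDA context, and QLoRA's quantization constants are ignored. The function names, byte sizes, and the 7B example shapes are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

GIB = 1024 ** 3  # bytes per GiB


@dataclass
class MemoryEstimate:
    """Rough GPU memory breakdown in GiB (activations NOT included)."""
    base_weights: float
    adapter_weights: float
    adapter_grads: float
    optimizer_states: float

    @property
    def total(self) -> float:
        return (self.base_weights + self.adapter_weights
                + self.adapter_grads + self.optimizer_states)


def lora_adapter_params(rank: int, shapes: list[tuple[int, int]]) -> int:
    """Count trainable LoRA parameters.

    Each adapted weight W of shape (d_out, d_in) gets two low-rank factors,
    B (d_out x rank) and A (rank x d_in), i.e. rank * (d_in + d_out) params.
    """
    return sum(rank * (d_in + d_out) for d_out, d_in in shapes)


def estimate_memory(base_params: int, adapter_params: int,
                    base_bytes_per_param: float) -> MemoryEstimate:
    """Estimate memory for frozen base weights plus trainable adapters.

    Hypothetical, simplified assumptions:
      * frozen base weights use `base_bytes_per_param`
        (2.0 for a 16-bit LoRA base, 0.5 for a 4-bit QLoRA base);
      * adapter weights and their gradients are 16-bit (2 bytes each);
      * Adam keeps two fp32 states per trainable parameter (8 bytes).
    """
    return MemoryEstimate(
        base_weights=base_params * base_bytes_per_param / GIB,
        adapter_weights=adapter_params * 2 / GIB,
        adapter_grads=adapter_params * 2 / GIB,
        optimizer_states=adapter_params * 8 / GIB,
    )


if __name__ == "__main__":
    # Hypothetical 7B-parameter model: 32 layers, hidden size 4096,
    # LoRA (rank 8) applied to two 4096x4096 projections per layer.
    shapes = [(4096, 4096)] * (32 * 2)
    n_adapter = lora_adapter_params(rank=8, shapes=shapes)
    for name, bytes_per_param in [("LoRA (16-bit base)", 2.0),
                                  ("QLoRA (4-bit base)", 0.5)]:
        est = estimate_memory(7_000_000_000, n_adapter, bytes_per_param)
        print(f"{name}: ~{est.total:.2f} GiB before activations")
```

What the sketch illustrates: because the base model is frozen, its weights dominate the estimate, so storing them in 4-bit instead of 16-bit (as QLoRA does) shrinks the total roughly fourfold, while the adapter's own overhead stays small. Real runs also need memory for activations, which grows with batch size and sequence length.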