Source: DeepHub IMBA

This article is about 2,000 words long; estimated reading time: 8 minutes.

GaLore saves VRAM, making it possible to train a 7B model on consumer-grade GPUs, but it is slower, taking roughly twice as long as full fine-tuning and LoRA.

Training large language models (LLMs), even those with "only" 7 billion parameters, is computationally intensive, and the hardware it requires is beyond the reach of most individual enthusiasts. To bridge this gap, parameter-efficient methods such as Low-Rank Adaptation (LoRA) have emerged, enabling fine-tuning of large models on consumer-grade GPUs.

GaLore is a new approach that reduces VRAM requirements not by reducing the number of trainable parameters but by changing how those parameters are optimized. In other words, GaLore is a training strategy that lets the model learn with all of its parameters while using less memory than LoRA.

GaLore projects the gradients into a low-rank space, which greatly reduces the memory needed for optimizer states while retaining the information essential for training. In addition, instead of updating all layers at once after backpropagation, as conventional optimizers do, GaLore can update each layer during backpropagation, as soon as that layer's gradient is available. This layer-wise approach reduces memory usage further, because the gradients of all layers never have to be held in memory at the same time.
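To make the projection concrete, here is a simplified NumPy sketch of one GaLore-style Adam step for a single weight matrix. It is our own illustration, not the galore_torch code: it assumes the matrix has no more rows than columns (so it only projects from the left), recomputes the projection from an SVD of the current gradient, and omits weight decay and 8-bit optimizer states. The function name galore_adam_step is hypothetical.

```python
import numpy as np


def galore_adam_step(W, G, state, step, rank, scale, lr=1e-3,
                     beta1=0.9, beta2=0.999, eps=1e-8, update_proj_gap=200):
    """One simplified GaLore-style Adam step for weight W with gradient G (both m x n, m <= n)."""
    # Refresh the projection from the gradient's top-`rank` left singular vectors
    if "P" not in state or step % update_proj_gap == 0:
        U, _, _ = np.linalg.svd(G, full_matrices=False)
        state["P"] = U[:, :rank]                    # m x r, orthonormal columns
    P = state["P"]

    R = P.T @ G                                     # projected gradient: r x n instead of m x n

    # Adam moments are kept only for the small r x n matrix
    if "m" not in state:
        state["m"] = np.zeros_like(R)
        state["v"] = np.zeros_like(R)
    state["m"] = beta1 * state["m"] + (1 - beta1) * R
    state["v"] = beta2 * state["v"] + (1 - beta2) * R ** 2
    m_hat = state["m"] / (1 - beta1 ** (step + 1))  # bias correction
    v_hat = state["v"] / (1 - beta2 ** (step + 1))
    N = m_hat / (np.sqrt(v_hat) + eps)

    # Project the normalized update back to m x n and apply the scale factor
    return W - lr * scale * (P @ N)


rng = np.random.default_rng(0)
W = rng.standard_normal((64, 128))
G = rng.standard_normal((64, 128))
state = {}
W_new = galore_adam_step(W, G, state, step=0, rank=16, scale=2.0)
print(W_new.shape, state["m"].shape)  # (64, 128) (16, 128)
```

The point to notice is the shape of the Adam moments: they are r × n rather than m × n, which is where the memory saving comes from, and the resulting weight update lies entirely in the span of the projection matrix P.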

Similar to LoRA, GaLore enables us to fine-tune a 7B model on consumer-grade GPUs with 24 GB of VRAM. The resulting model’s performance is comparable to full-parameter fine-tuning and appears to outperform LoRA.

At the time of writing, Hugging Face had no official GaLore integration, so we use the code released with the paper directly and compare the results with LoRA.

Installing Dependencies

First, we need to install GaLore.

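```shell
pip install galore-torch
```

Next, install the following libraries, paying attention to the versions:

```shell
pip install datasets==2.18.0 transformers==4.39.1 trl==0.8.1 accelerate==0.28.0 torch==2.2.1
```

The code below also imports bitsandbytes, and attn_implementation="flash_attention_2" requires the flash-attn package.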
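Since the training code relies on these specific releases (some of the Trainer and SFTTrainer arguments it uses were renamed or moved in later versions), a quick check of the environment can save debugging time. The helper below is our own stdlib-only addition, not part of GaLore; check_versions and PINNED are hypothetical names.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions pinned in this article
PINNED = {
    "datasets": "2.18.0",
    "transformers": "4.39.1",
    "trl": "0.8.1",
    "accelerate": "0.28.0",
    "torch": "2.2.1",
}


def check_versions(pinned=PINNED):
    """Return {package: (installed or None, pinned)} for every package that does not match."""
    mismatches = {}
    for name, wanted in pinned.items():
        try:
            # Drop local build tags such as "+cu121" before comparing
            installed = version(name).split("+")[0]
        except PackageNotFoundError:
            installed = None
        if installed != wanted:
            mismatches[name] = (installed, wanted)
    return mismatches


for name, (installed, wanted) in check_versions().items():
    print(f"{name}: installed {installed}, expected {wanted}")
```

An empty result means every pinned package is installed at the expected version.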

Scheduler and Optimizer Classes

GaLore's layer-wise optimizer is driven by hooks on the model weights: each parameter is updated during backpropagation rather than in a single optimizer step afterwards. Because we are using the Hugging Face Trainer, which expects an optimizer and a scheduler, we also need to supply placeholder classes for both. These dummy classes do nothing; they only satisfy the Trainer's interface.

```python
from typing import Optional

import torch

# Approach taken from Hugging Face transformers:
# https://github.com/huggingface/transformers/blob/main/src/transformers/optimization.py


class LayerWiseDummyOptimizer(torch.optim.Optimizer):
    """Placeholder handed to the Trainer; the real updates happen in per-parameter hooks."""

    def __init__(self, optimizer_dict=None, *args, **kwargs):
        dummy_tensor = torch.randn(1, 1)
        self.optimizer_dict = optimizer_dict
        super().__init__([dummy_tensor], {"lr": 1e-03})

    def zero_grad(self, set_to_none: bool = True) -> None:
        pass  # gradients are cleared inside the hooks

    def step(self, closure=None) -> Optional[float]:
        pass  # parameters are updated inside the hooks


class LayerWiseDummyScheduler(torch.optim.lr_scheduler.LRScheduler):
    """Placeholder scheduler; each per-parameter optimizer has its own scheduler."""

    def __init__(self, *args, **kwargs):
        optimizer = LayerWiseDummyOptimizer()
        last_epoch = -1
        # `verbose` is deprecated in recent PyTorch releases, so it is not passed
        super().__init__(optimizer, last_epoch)

    def get_lr(self):
        return [group["lr"] for group in self.optimizer.param_groups]

    def _get_closed_form_lr(self):
        return self.base_lrs
```

Loading GaLore Optimizer

The GaLore optimizer targets specific parameters: the weights of linear layers whose module names contain "attn" or "mlp". We register a hook on each of these parameters that runs a GaLoreAdamW8bit step, and hook a regular 8-bit Adam optimizer (bitsandbytes Adam8bit) to every other trainable parameter.
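The selection rule is a plain substring match on module names, so "attn" also matches "self_attn". The pure-Python sketch below applies the same rule as load_galore_optimizer to the module names of one Llama-2 decoder layer plus the embedding, final norm and output head. The names follow the Hugging Face Llama implementation; the (name, is_linear) list and split_params are our own illustration.

```python
# (module name, is it an nn.Linear?) for one decoder layer of a Hugging Face Llama-2 model,
# plus the modules outside the decoder stack
LLAMA_MODULES = [
    ("model.embed_tokens", False),
    ("model.layers.0.self_attn.q_proj", True),
    ("model.layers.0.self_attn.k_proj", True),
    ("model.layers.0.self_attn.v_proj", True),
    ("model.layers.0.self_attn.o_proj", True),
    ("model.layers.0.mlp.gate_proj", True),
    ("model.layers.0.mlp.up_proj", True),
    ("model.layers.0.mlp.down_proj", True),
    ("model.layers.0.input_layernorm", False),
    ("model.layers.0.post_attention_layernorm", False),
    ("model.norm", False),
    ("lm_head", True),
]


def split_params(modules, target_modules_list):
    """Same rule as load_galore_optimizer: Linear AND name contains a target key -> GaLore."""
    galore, adam8bit = [], []
    for name, is_linear in modules:
        if is_linear and any(key in name for key in target_modules_list):
            galore.append(name)
        else:
            adam8bit.append(name)
    return galore, adam8bit


galore, adam8bit = split_params(LLAMA_MODULES, ["attn", "mlp"])
print("GaLore:", galore)
print("Adam8bit:", adam8bit)
```

The seven attention and MLP projections go to GaLore, while the embeddings, the norms and lm_head (a linear layer, but without a matching name) fall back to plain 8-bit Adam.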

```python
from functools import partial

import bitsandbytes as bnb
import torch.nn as nn
from galore_torch import GaLoreAdamW8bit
from transformers import get_constant_schedule


def load_galore_optimizer(model, lr, galore_config):
    # Hook function: step, clear, and advance the scheduler for a single parameter
    # as soon as its gradient has been accumulated during backpropagation
    def optimizer_hook(p, optimizer, scheduler):
        if p.grad is not None:
            optimizer.step()
            optimizer.zero_grad()
            scheduler.step()

    # Parameters to optimize with GaLore: weights of nn.Linear modules whose
    # names contain one of the target keys (e.g. "attn", "mlp")
    galore_params = [
        (module.weight, module_name)
        for module_name, module in model.named_modules()
        if isinstance(module, nn.Linear)
        and any(target_key in module_name for target_key in galore_config["target_modules_list"])
    ]
    id_galore_params = {id(p) for p, _ in galore_params}

    # Hook a GaLore optimizer to every target parameter and Adam8bit to all others
    for p in model.parameters():
        if p.requires_grad:
            if id(p) in id_galore_params:
                optimizer = GaLoreAdamW8bit([dict(params=[p], **galore_config)], lr=lr)
            else:
                optimizer = bnb.optim.Adam8bit([p], lr=lr)
            scheduler = get_constant_schedule(optimizer)
            p.register_post_accumulate_grad_hook(
                partial(optimizer_hook, optimizer=optimizer, scheduler=scheduler)
            )

    # Return dummies; the actual stepping is done in the hooks
    return LayerWiseDummyOptimizer(), LayerWiseDummyScheduler()
```
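The division of labour between the hooks and the dummy classes is easier to see without PyTorch. The framework-free toy below mirrors the pattern in load_galore_optimizer: each parameter gets its own optimizer, a post-accumulate hook steps and clears it as soon as that parameter's gradient is ready, and the optimizer handed to the Trainer does nothing. ToyParam, ToySGD, DummyOptimizer, attach_layerwise_optimizers and toy_backward are hypothetical stand-ins; only the method name register_post_accumulate_grad_hook is borrowed from PyTorch to make the correspondence visible.

```python
class ToyParam:
    """Stand-in for a parameter tensor: a scalar value, a gradient slot, and hooks."""

    def __init__(self, name, value):
        self.name = name
        self.value = value
        self.grad = None
        self._hooks = []

    def register_post_accumulate_grad_hook(self, fn):
        self._hooks.append(fn)

    def accumulate_grad(self, g):
        # Mimics autograd: accumulate the gradient, then fire this parameter's hooks
        self.grad = g if self.grad is None else self.grad + g
        for fn in self._hooks:
            fn(self)


class ToySGD:
    """Per-parameter optimizer, playing the role of GaLoreAdamW8bit / Adam8bit."""

    def __init__(self, param, lr):
        self.param = param
        self.lr = lr
        self.steps = 0

    def step(self):
        self.param.value -= self.lr * self.param.grad
        self.steps += 1

    def zero_grad(self):
        self.param.grad = None


class DummyOptimizer:
    """What the Trainer sees: step() and zero_grad() do nothing."""

    def step(self):
        pass

    def zero_grad(self):
        pass


def attach_layerwise_optimizers(params, lr):
    optimizers = {}
    for p in params:
        opt = ToySGD(p, lr)

        def hook(param, opt=opt):  # default argument binds this parameter's optimizer
            if param.grad is not None:
                opt.step()
                opt.zero_grad()

        p.register_post_accumulate_grad_hook(hook)
        optimizers[p.name] = opt
    return optimizers


def toy_backward(params, grads):
    # Backpropagation produces gradients from the last layer to the first
    for p, g in zip(reversed(params), reversed(grads)):
        p.accumulate_grad(g)


params = [ToyParam("layer0", 1.0), ToyParam("layer1", 2.0)]
optimizers = attach_layerwise_optimizers(params, lr=0.1)
toy_backward(params, [0.5, 1.0])  # each parameter is updated as soon as its gradient is ready
DummyOptimizer().step()           # the Trainer's own step is a no-op
print([p.value for p in params], [p.grad for p in params])
```

Because each gradient is consumed and freed during the backward pass, the gradients of all parameters never need to exist at the same time, which is the extra memory saving of the layer-wise approach.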

HF Trainer

With the optimizer ready, we can train with the Trainer. Below is a simple example that uses TRL's SFTTrainer (a subclass of Trainer) to fine-tune Llama-2-7B on the Open Assistant dataset, on a GPU with 24 GB of VRAM such as an RTX 3090 or 4090.

```python
import uuid

import torch
from datasets import load_dataset
from transformers import AutoModelForCausalLM, AutoTokenizer, TrainingArguments
from trl import DataCollatorForCompletionOnlyLM, SFTTrainer, setup_chat_format

lr = 1e-5

# GaLore optimizer hyperparameters
galore_config = dict(
    target_modules_list=["attn", "mlp"],
    rank=1024,
    update_proj_gap=200,
    scale=2,
    proj_type="std",
)

# Transformers-format checkpoint of Llama-2-7B (a gated repository on the Hub)
modelpath = "meta-llama/Llama-2-7b-hf"
model = AutoModelForCausalLM.from_pretrained(
    modelpath,
    torch_dtype=torch.bfloat16,
    attn_implementation="flash_attention_2",  # requires the flash-attn package
    device_map="auto",
    use_cache=False,
)
tokenizer = AutoTokenizer.from_pretrained(modelpath, use_fast=False)

# Set up the ChatML format; this adds special tokens, resizes the embeddings,
# and returns both the model and the tokenizer
model, tokenizer = setup_chat_format(model, tokenizer)
if tokenizer.pad_token in [None, tokenizer.eos_token]:
    tokenizer.pad_token = tokenizer.unk_token

# Subset of the Open Assistant 2 dataset: 4000 of the top-ranking conversations
dataset = load_dataset("g-ronimo/oasst2_top4k_en")

run_id = f"galore-{uuid.uuid4()}"  # unique name for this run's output directory

training_arguments = TrainingArguments(
    output_dir=f"out_{run_id}",
    evaluation_strategy="steps",
    label_names=["labels"],
    per_device_train_batch_size=16,
    gradient_accumulation_steps=1,  # the hooks step after every backward pass, so keep this at 1
    save_steps=250,
    eval_steps=250,
    logging_steps=1,
    learning_rate=lr,
    num_train_epochs=3,
    lr_scheduler_type="constant",
    gradient_checkpointing=True,
    group_by_length=False,
)

# Register the GaLore hooks only after setup_chat_format has resized the embeddings,
# so the hooks are attached to the final parameter tensors
optimizers = load_galore_optimizer(model, lr, galore_config)

trainer = SFTTrainer(
    model=model,
    tokenizer=tokenizer,
    train_dataset=dataset["train"],
    eval_dataset=dataset["test"],
    data_collator=DataCollatorForCompletionOnlyLM(
        instruction_template="<|im_start|>user",
        response_template="<|im_start|>assistant",
        tokenizer=tokenizer,
        mlm=False,
    ),
    max_seq_length=256,
    dataset_kwargs=dict(add_special_tokens=False),
    optimizers=optimizers,
    args=training_arguments,
)

trainer.train()
```
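The data collator above computes the loss only on the assistant's replies. The string-level sketch below illustrates that idea, assuming the ChatML layout installed by trl's setup_chat_format (`<|im_start|>`, role, newline, content, `<|im_end|>`, newline). The real DataCollatorForCompletionOnlyLM works on token IDs and sets the labels of all other tokens to -100; to_chatml and trained_spans are hypothetical helpers.

```python
IM_START, IM_END = "<|im_start|>", "<|im_end|>"


def to_chatml(messages):
    """Render a conversation in the ChatML layout."""
    return "".join(f"{IM_START}{m['role']}\n{m['content']}{IM_END}\n" for m in messages)


def trained_spans(text, instruction_template="<|im_start|>user",
                  response_template="<|im_start|>assistant"):
    """Return the text that keeps its labels: everything after each response template
    up to the next instruction template. All other text would be masked out."""
    spans = []
    start = text.find(response_template)
    while start != -1:
        begin = start + len(response_template)
        end = text.find(instruction_template, begin)
        if end == -1:
            end = len(text)
        spans.append(text[begin:end])
        start = text.find(response_template, end)
    return spans


chat = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
    {"role": "user", "content": "Bye"},
    {"role": "assistant", "content": "See you."},
]
print(trained_spans(to_chatml(chat)))
# ['\nHello!<|im_end|>\n', '\nSee you.<|im_end|>\n']
```

This is why both templates are passed to the collator: with multi-turn conversations, the instruction template marks where each trained assistant span ends.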

The GaLore optimizer has a few hyperparameters that need to be set:

target_modules_list: the layers targeted by GaLore.

rank: the rank of the projection matrices. As with LoRA, a higher rank brings fine-tuning closer to full-parameter fine-tuning. The GaLore authors recommend 1024 for a 7B model.

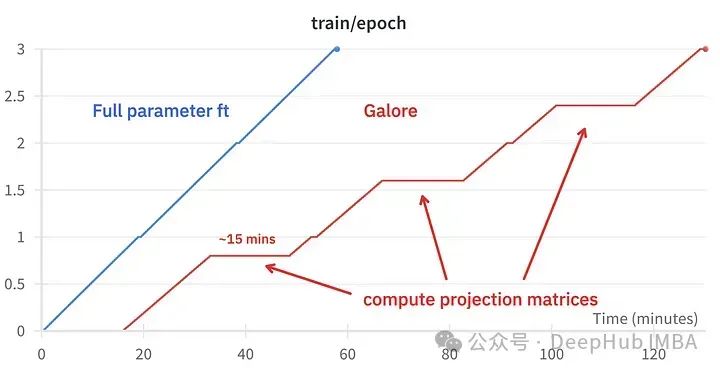
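To see what rank=1024 buys for Llama-2-7B, the sketch below counts Adam moment elements for the seven GaLore-targeted linear layers in each decoder layer. It assumes the standard Llama-2-7B shapes (hidden size 4096, MLP size 11008, 32 layers) and that each weight is projected along its smaller dimension, which is how we understand galore_torch's "std" projection to work. The counts are independent of dtype (GaLoreAdamW8bit stores them in 8 bits) and exclude the embeddings, norms and lm_head.

```python
HIDDEN, INTERMEDIATE, LAYERS = 4096, 11008, 32  # Llama-2-7B config values

# (out_features, in_features) of the GaLore-targeted linear layers in one decoder layer:
# q, k, v, o projections, then gate and up projections, then the down projection
SHAPES = [(HIDDEN, HIDDEN)] * 4 + [(INTERMEDIATE, HIDDEN)] * 2 + [(HIDDEN, INTERMEDIATE)]


def adam_state_elements(shapes, rank=None):
    """Adam moment elements (two moments per entry) for the given weight shapes."""
    total = 0
    for m, n in shapes:
        if rank is None:
            total += 2 * m * n                              # full-size moments
        else:
            # Projecting along the smaller side leaves an r x max(m, n) matrix
            total += 2 * min(rank, m, n) * max(m, n)
    return total


full = adam_state_elements(SHAPES) * LAYERS
galore = adam_state_elements(SHAPES, rank=1024) * LAYERS
print(f"full Adam: {full / 1e9:.2f}B elements, GaLore r=1024: {galore / 1e9:.2f}B, "
      f"ratio {full / galore:.1f}x")
# full Adam: 12.95B elements, GaLore r=1024: 3.24B, ratio 4.0x
```

Since the smaller side of every targeted weight is 4096, a rank of 1024 shrinks their optimizer states by exactly 4096 / 1024 = 4.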

update_proj_gap: the number of steps between updates of the projection. Updating the projection is expensive, taking about 15 minutes for a 7B model; the recommended range is 50 to 1000 steps.
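The trade-off behind update_proj_gap is simple arithmetic. The sketch below assumes the projection is recomputed at step 0 and then every update_proj_gap steps, uses the article's rough figure of 15 minutes per recomputation, and plugs in a hypothetical run length of 750 steps (not a number from the article); the function names are our own.

```python
def projection_refresh_steps(total_steps, update_proj_gap):
    """Training steps at which the projection is recomputed (step 0, then every gap steps)."""
    return [s for s in range(total_steps) if s % update_proj_gap == 0]


def refresh_overhead_minutes(total_steps, update_proj_gap, minutes_per_refresh=15):
    # 15 minutes per refresh is the article's rough figure for a 7B model
    return len(projection_refresh_steps(total_steps, update_proj_gap)) * minutes_per_refresh


TOTAL_STEPS = 750  # hypothetical run length, not taken from the article
for gap in (50, 200, 1000):
    print(gap, refresh_overhead_minutes(TOTAL_STEPS, gap))
# 50 225, then 200 60, then 1000 15
```

A small gap tracks the changing gradient subspace more closely but multiplies the number of expensive SVDs; a large gap is cheap but keeps a stale projection for longer.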

scale: a scale factor, similar to LoRA's alpha, that adjusts the strength of the updates. After trying several values, I found that scale=2 comes closest to classic full-parameter fine-tuning.

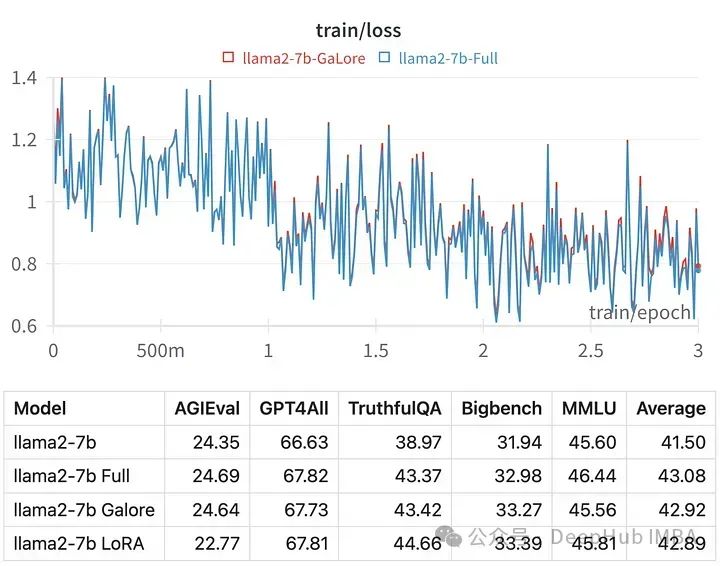

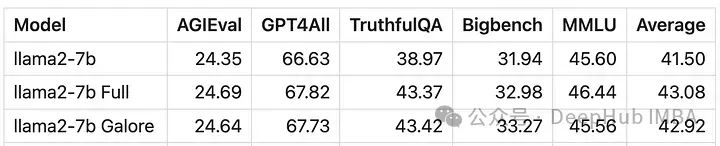

Comparison of Fine-Tuning Results

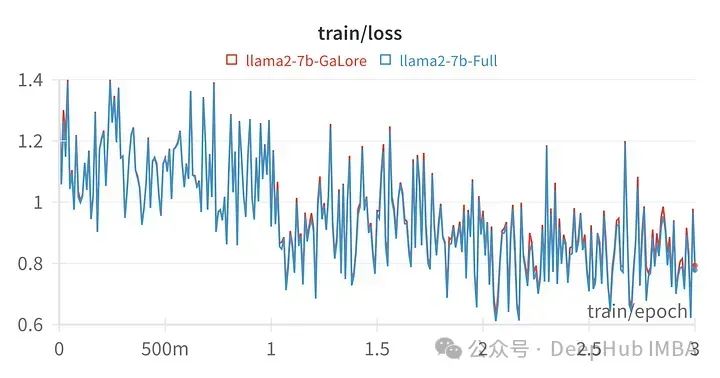

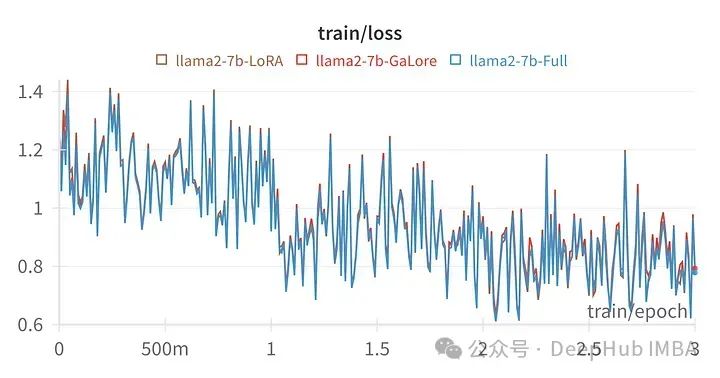

With these hyperparameters, the training loss trajectory is very similar to that of full-parameter fine-tuning, which suggests that the layer-wise GaLore method is a close substitute for it.

The model trained with GaLore scores very similarly to the fully fine-tuned model.

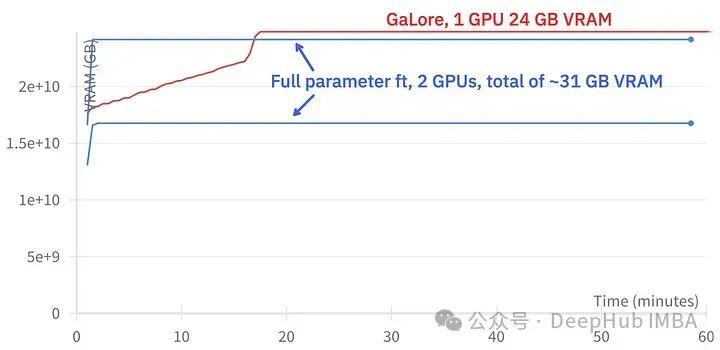

GaLore saves about 15 GB of VRAM, but training takes longer because of the periodic projection updates.

The image above compares memory usage on two RTX 3090s.

Training time comparison: full fine-tuning took about 58 minutes; GaLore took about 130 minutes.

Finally, let's compare GaLore with LoRA:

The image above shows the loss curves for LoRA fine-tuning of all linear layers with rank 64 and alpha 16.
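LoRA trains only small adapter matrices, while GaLore updates every weight. A quick count for Llama-2-7B makes the difference concrete. It assumes the standard Llama-2-7B configuration (hidden size 4096, MLP size 11008, 32 layers, 32,000-token vocabulary, no biases), counts the base model before setup_chat_format adds its tokens, and takes "all linear layers" to mean the seven attention and MLP projections in each decoder layer, not lm_head. The function names are our own.

```python
HIDDEN, INTERMEDIATE, LAYERS, VOCAB = 4096, 11008, 32, 32000  # Llama-2-7B config values

# (out_features, in_features) of the seven linear layers in each decoder layer:
# q, k, v, o projections, then gate and up projections, then the down projection
LINEAR_SHAPES = [(HIDDEN, HIDDEN)] * 4 + [(INTERMEDIATE, HIDDEN)] * 2 + [(HIDDEN, INTERMEDIATE)]


def lora_trainable_params(rank, shapes=LINEAR_SHAPES, layers=LAYERS):
    # LoRA adds A (rank x in) and B (out x rank) to every adapted linear layer
    return layers * sum(rank * (out_f + in_f) for out_f, in_f in shapes)


def llama2_7b_params():
    # Linear weights plus two RMSNorm weight vectors per decoder layer
    per_layer = sum(out_f * in_f for out_f, in_f in LINEAR_SHAPES) + 2 * HIDDEN
    # Plus input embeddings, lm_head, and the final norm
    return LAYERS * per_layer + 2 * VOCAB * HIDDEN + HIDDEN


lora = lora_trainable_params(64)
total = llama2_7b_params()
print(f"LoRA r=64: {lora:,} trainable ({lora / total:.2%}); full model: {total:,}")
# LoRA r=64: 159,907,840 trainable (2.37%); full model: 6,738,415,616
```

So the LoRA run adapts roughly 160M parameters, about 2.4% of the model, whereas GaLore keeps all 6.7B weights trainable and saves memory through the low-rank optimizer states instead.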

Judging by these numbers, GaLore is a new way of approximating full-parameter training: its performance is comparable to full fine-tuning and significantly better than LoRA.

Conclusion

GaLore saves VRAM, making it possible to train a 7B model on consumer-grade GPUs, but it is slower, taking roughly twice as long as full fine-tuning and LoRA.

GaLore: Memory-Efficient LLM Training by Gradient Low-Rank Projection.

https://arxiv.org/abs/2403.03507

Complete code for this article:

https://github.com/geronimi73/3090_shorts/blob/main/nb_galore_llama2-7b.ipynb

Author: Geronimo

Editor: Huang Jiyan

Proofreader: Lin Yilin