Skip to content

This article introducesThe Hong Kong University of Science and Technology (Guangzhou)a paper on efficient fine-tuning of large models (LLM PEFT Fine-tuning) titled “Parameter-Efficient Fine-Tuning with Discrete Fourier Transform”, which has been accepted by ICML 2024, and the code has been open-sourced.

-

Paper link: https://arxiv.org/abs/2405.03003

-

Project link: https://github.com/Chaos96/fourierft

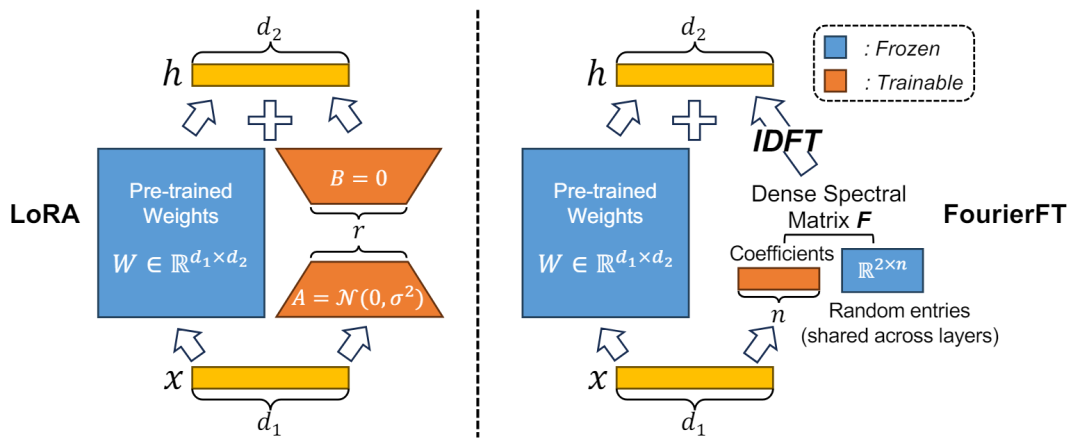

Large foundation models have achieved remarkable successes in the fields of natural language processing (NLP) and computer vision (CV). Fine-tuning large foundation models to better adapt to specific downstream tasks has become a hot research topic. However, as models grow larger and downstream tasks become more diverse, the computational and storage costs of fine-tuning entire models have become unacceptable. LoRA employs low-rank fitting to fine-tune increments, successfully reducing a significant amount of such consumption, but the size of each adapter is still non-negligible. This raises the core question of this paper: How can we further significantly reduce the trainable parameters compared to LoRA? Additionally, an interesting supplementary question is whether it is possible to achieve high-rank incremental matrices with fewer parameters.

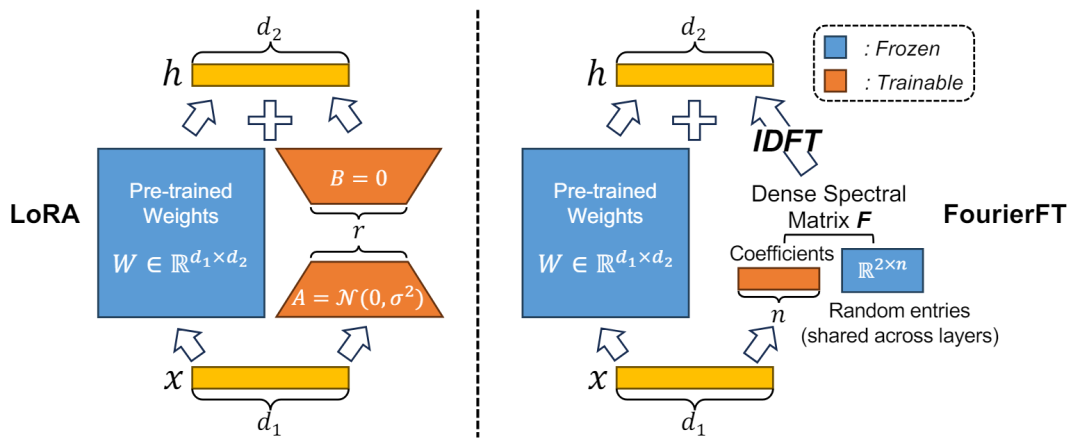

Fourier bases are widely used in various data compression applications, such as the compression of one-dimensional vector signals and two-dimensional images. In these applications, dense spatial domain signals are transformed into sparse frequency domain signals through Fourier transforms. Based on this principle, the authors speculate that the incremental model weights can also be viewed as a type of spatial domain signal, and its corresponding frequency domain signal can be achieved through sparse representation.

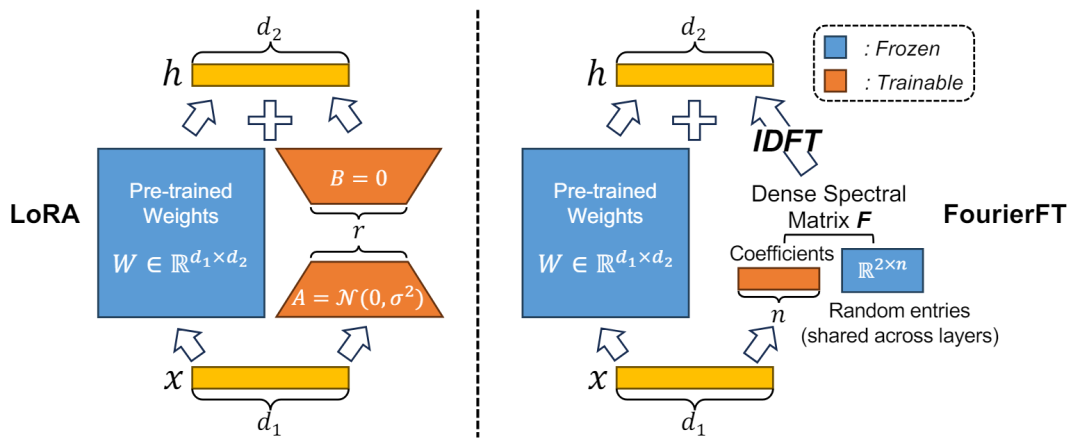

Based on this hypothesis, the authors propose a new method for learning incremental weight signals in the frequency domain. Specifically, this method represents spatial weight increments by sparse frequency domain signals at random positions. When loading the pre-trained model, n points are randomly selected as effective frequency domain signals, which are then concatenated into a one-dimensional vector. During the forward propagation process, this one-dimensional vector is used to recover the spatial matrix through Fourier transforms; during the backward propagation process, due to the differentiability of the Fourier transform, this learnable vector can be updated directly. This method not only effectively reduces the number of parameters required for model fine-tuning but also ensures fine-tuning performance. In this way, the authors not only achieve efficient fine-tuning of large-scale foundation models but also demonstrate the potential application value of Fourier transforms in the field of machine learning.

Thanks to the high information content of the Fourier transform basis, a very small n value is sufficient to achieve performance comparable to or exceeding that of LoRA. Generally, the trainable parameters for Fourier fine-tuning are only one-thousandth to one-tenth of those for LoRA.

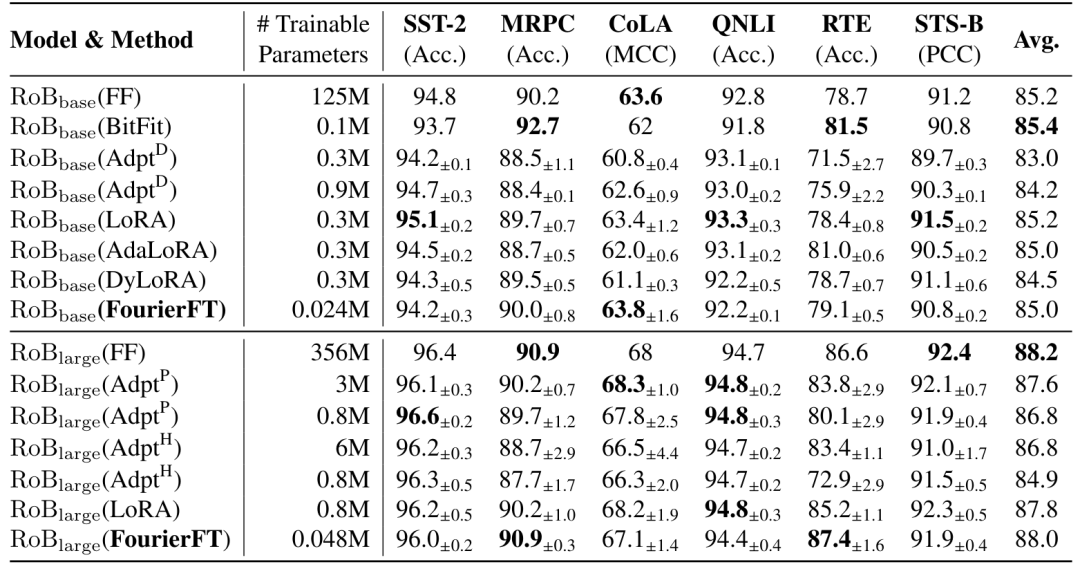

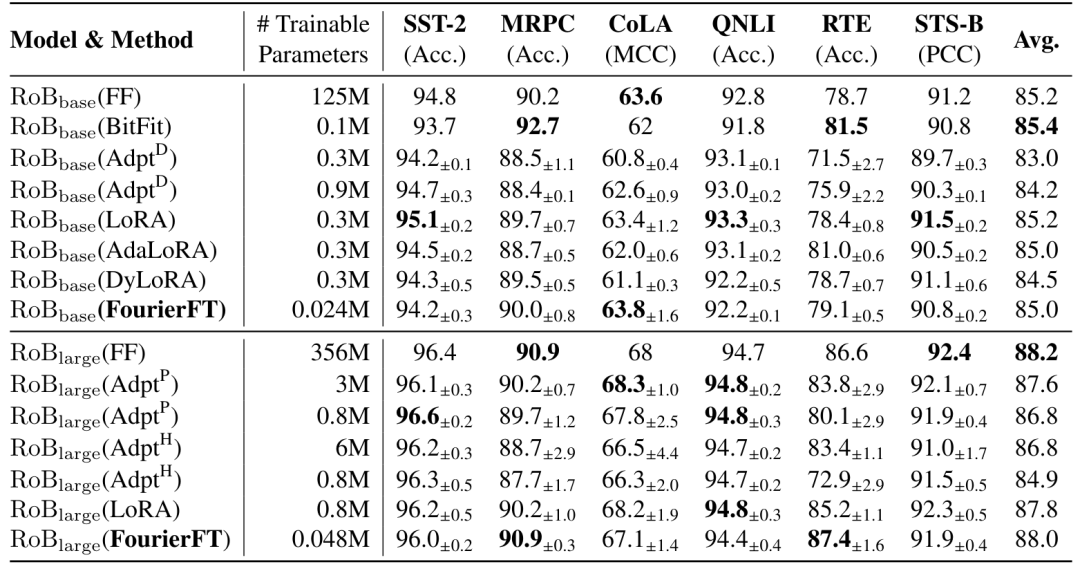

1. Natural Language Understanding

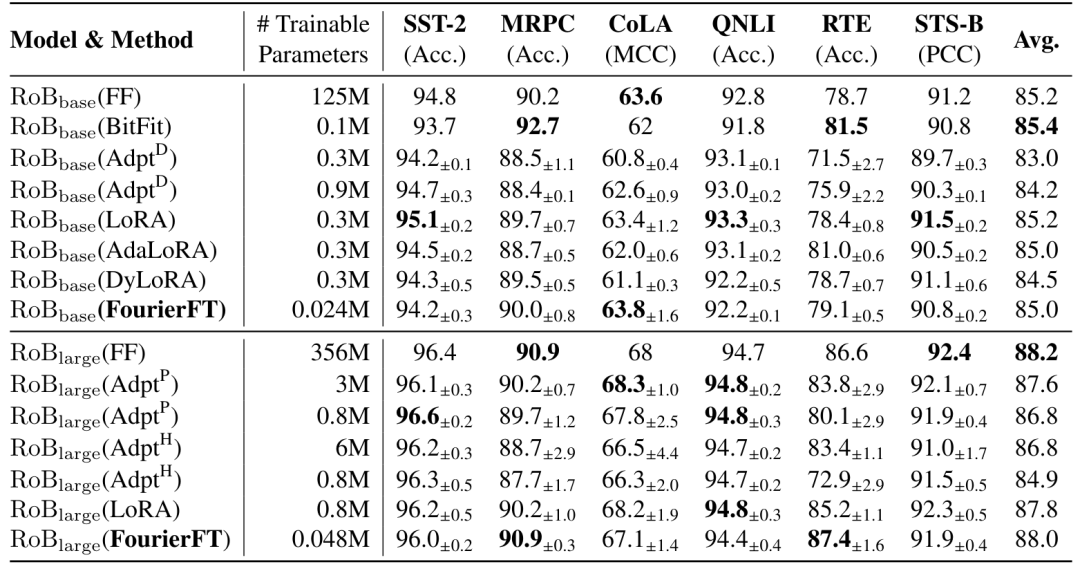

The authors evaluated the Fourier fine-tuning method on the GLUE benchmark for natural language understanding. The baseline comparison methods include full fine-tuning (FF), Bitfit, adapter tuning, LoRA, DyLoRA, and AdaLoRA. The table below shows the performance of various methods on different GLUE tasks and the required training parameters. The results indicate that Fourier fine-tuning achieves performance that meets or exceeds that of other fine-tuning methods with the least number of parameters.

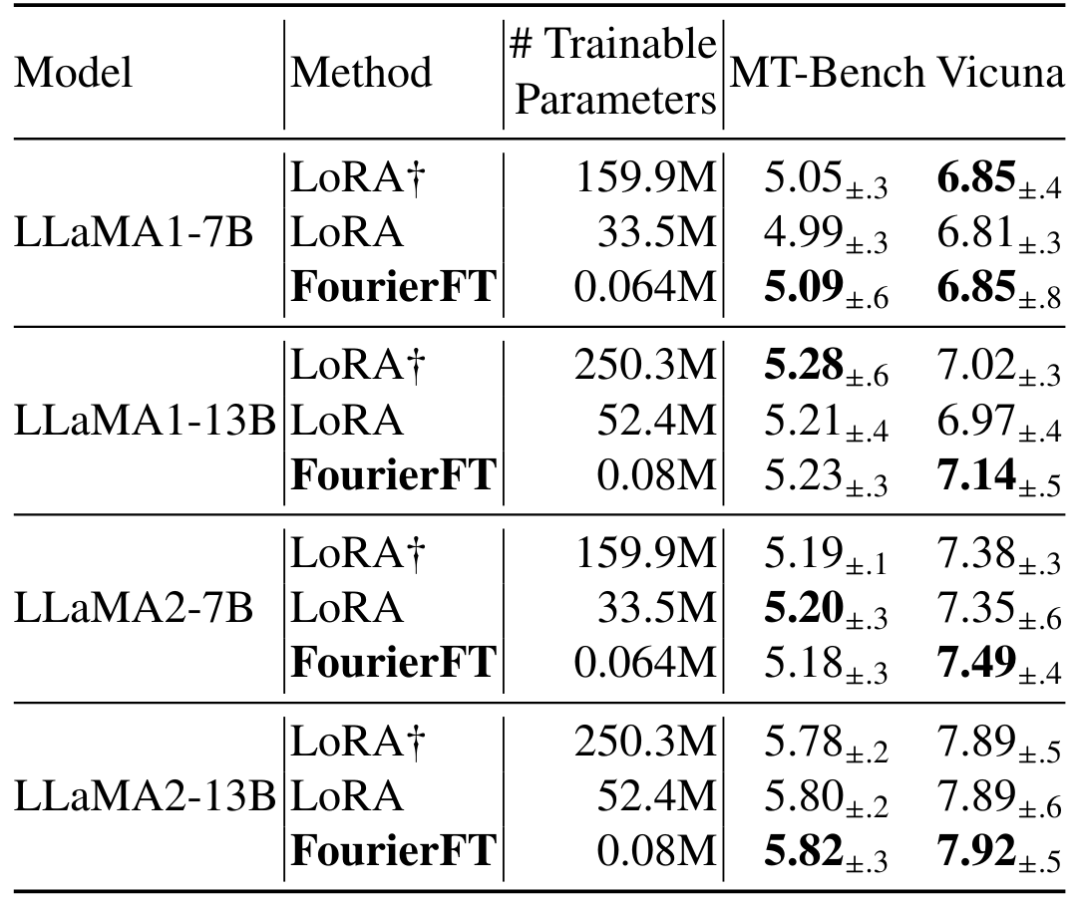

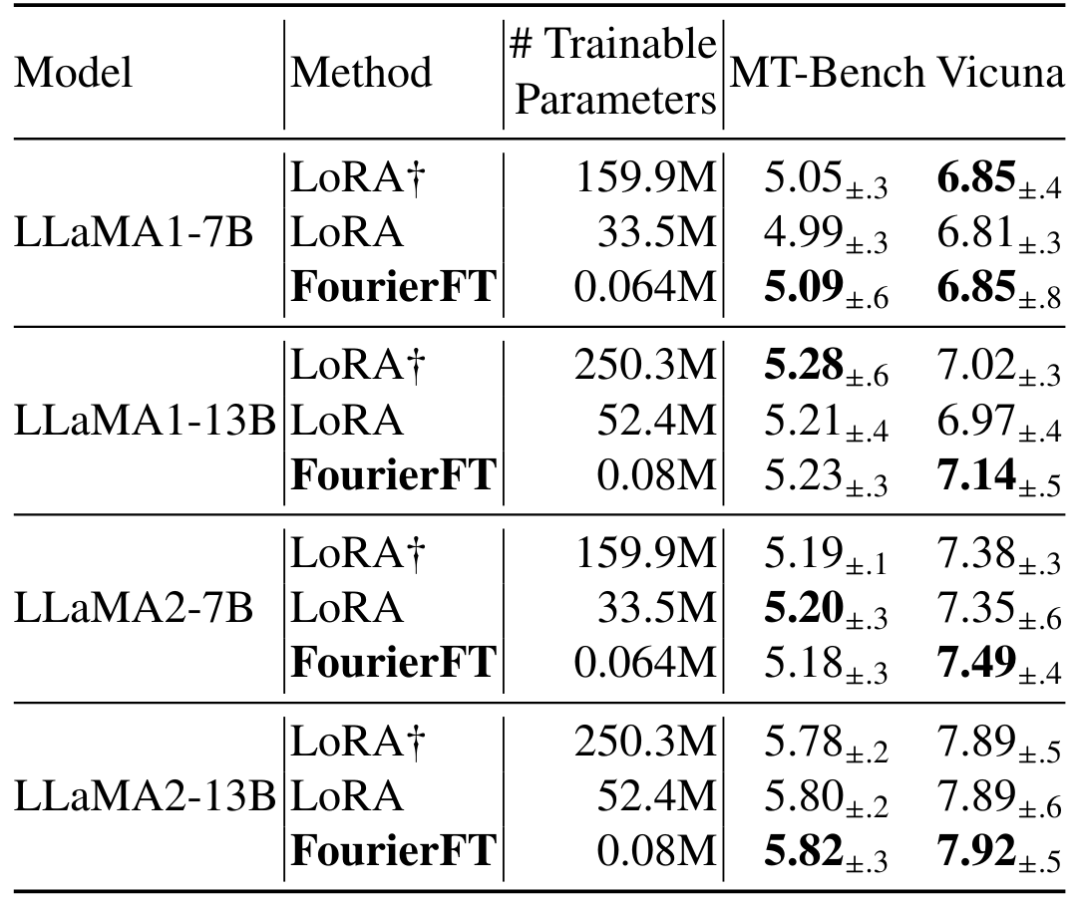

2. Natural Language Instruction Fine-Tuning

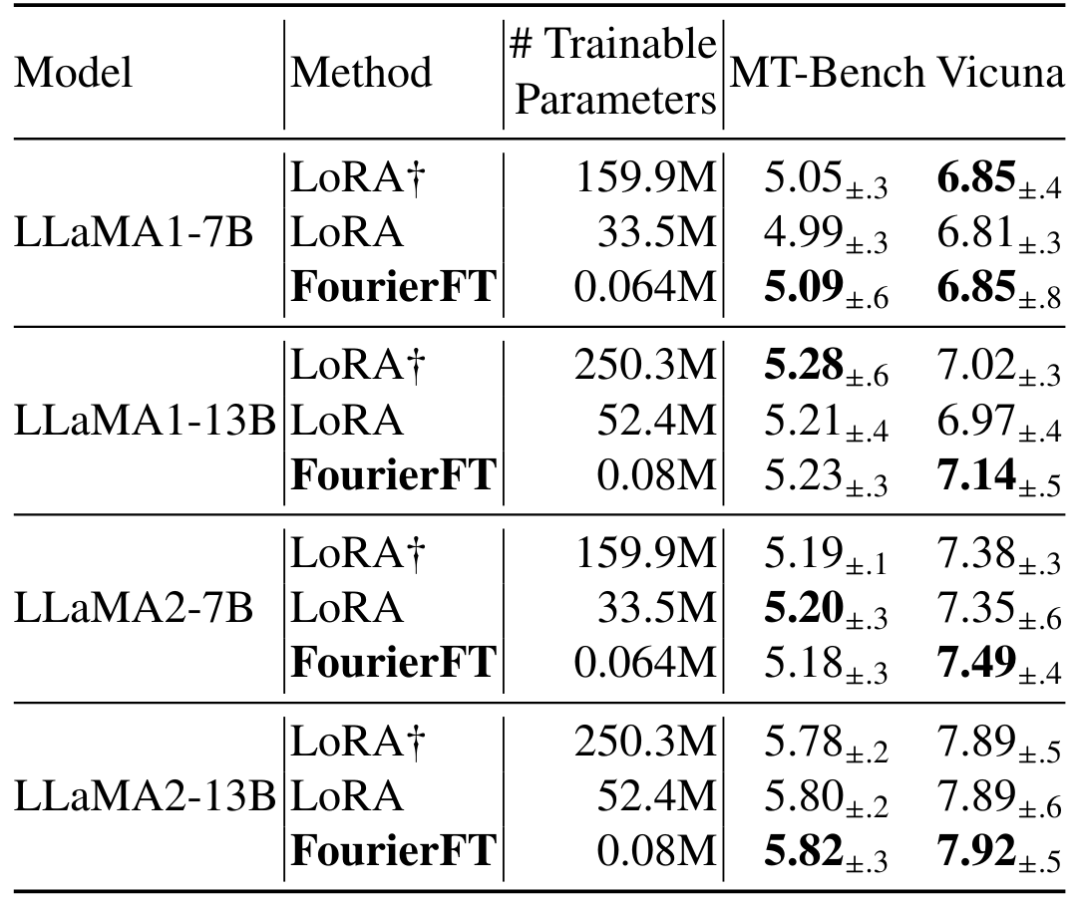

Natural language generation with large models is currently an important application area for model fine-tuning. The authors evaluated the performance of Fourier fine-tuning on LLaMA series models, MT-Bench tasks, and Vicuna tasks. The results show that Fourier fine-tuning achieves effects comparable to LoRA with extremely low training parameter amounts, further validating the universality and effectiveness of the Fourier fine-tuning method.

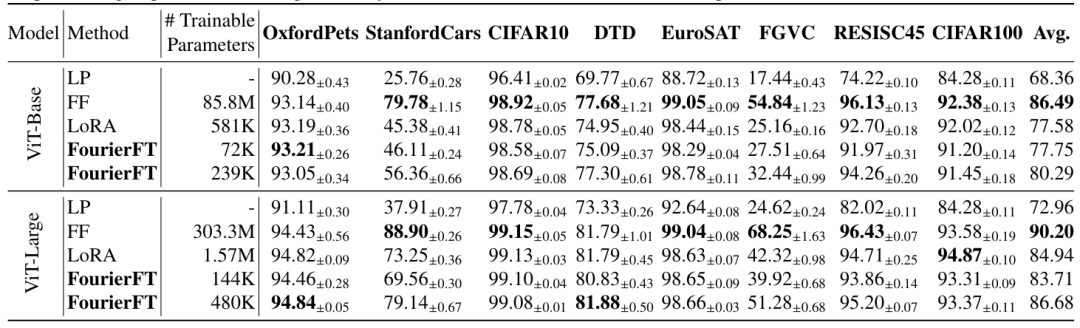

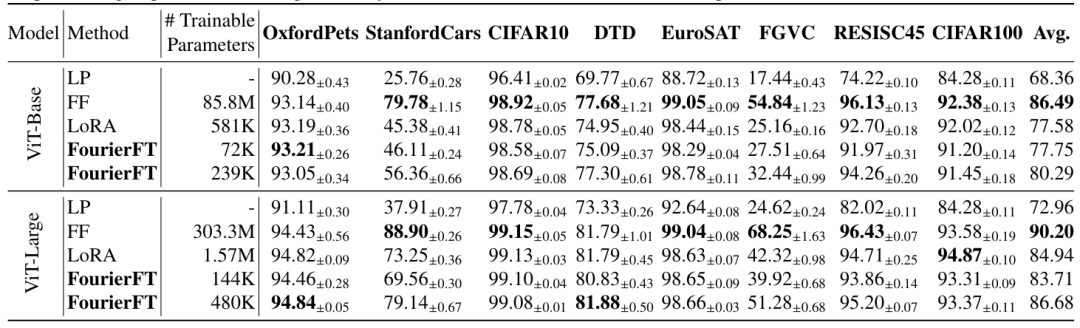

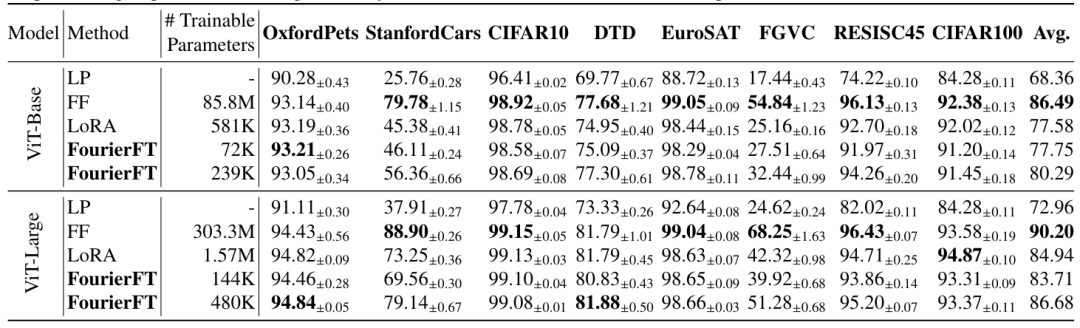

The authors tested the performance of Fourier fine-tuning on the Vision Transformer, covering 8 common image classification datasets. The experimental results indicate that although the compression rate of Fourier fine-tuning in image classification tasks is not significantly better than in natural language tasks, it still outperforms LoRA with far fewer parameters. This further demonstrates the effectiveness and advantages of Fourier fine-tuning in different application areas.

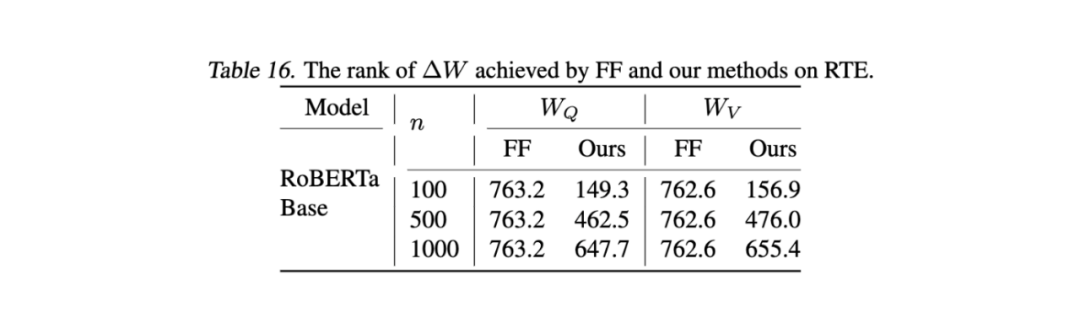

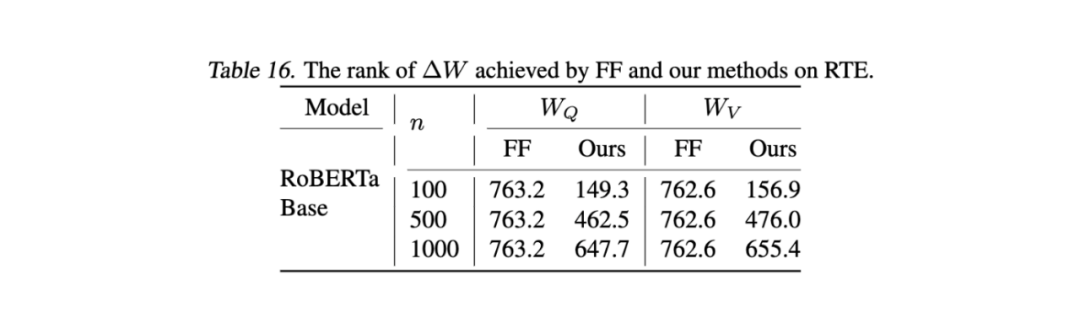

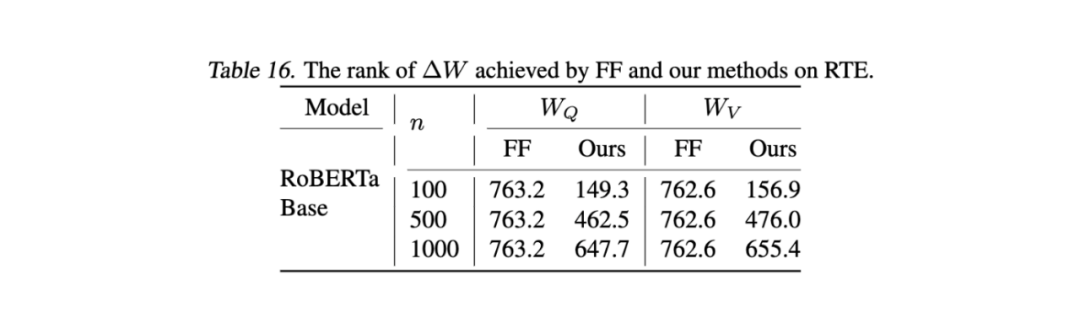

On the RTE dataset of the GLUE benchmark, FourierFT can achieve increments with a rank significantly higher than LoRA (typically 4 or 8).

5. GPU Resource Consumption

During the fine-tuning process, FourierFT can achieve lower GPU consumption than LoRA. The following figure shows the peak memory consumption using a single 4090 graphics card on the RoBERTa-Large model.

The authors introduced an efficient fine-tuning method called Fourier fine-tuning, which reduces the number of trainable parameters during fine-tuning of large foundation models by utilizing Fourier transforms. This method learns a small number of Fourier spectral coefficients to represent weight changes, significantly reducing storage and computational demands. Experimental results show that Fourier fine-tuning performs excellently in tasks such as natural language understanding, natural language generation, instruction tuning, and image classification, maintaining or exceeding the performance of existing low-rank adaptation methods (such as LoRA) while significantly reducing the required trainable parameters.