Implementing LLM from Bigram Model with 200 Lines of Python Code

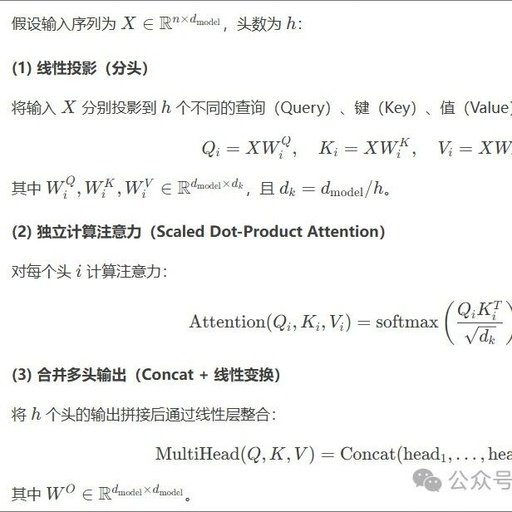

Introduction

The previous article, “Implementing LLM from Scratch with 200 Lines of Python”, built a “poetry generator” starting from a probabilistic implementation and ended by using PyTorch to implement a classic Bigram model. In a Bigram model, each character depends only on the character immediately before it. Even so, our `babygpt_v1.py` manages to output sentences like “Gradually realizing the …
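To make the "each character depends only on the previous character" idea concrete, here is a minimal count-based sketch in plain Python. It is not the article's `babygpt_v1.py`, which uses PyTorch; the function names `train_bigram` and `generate` and the toy corpus are assumptions chosen purely for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, length, seed=0):
    """Sample characters one at a time, conditioning only on the previous one."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same sample
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # this character was never followed by anything: stop early
            break
        chars = list(followers.keys())
        weights = list(followers.values())
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


# Toy corpus (hypothetical, not the poetry data used in the article).
corpus = "the quick brown fox jumps over the lazy dog"
model = train_bigram(corpus)
sample = generate(model, "t", 20)
print(sample)
```

Every adjacent pair of characters in `sample` is a pair that occurs somewhere in the corpus, which is why a Bigram model can produce locally plausible text while having no memory beyond one character.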