With the rapid development of artificial intelligence (AI) technology, AI agents and multi-agent systems built on large language models (LLMs) are spreading into a wide range of application fields, from simple conversational assistants to complex autonomous decision-making systems. At the same time, the Model Context Protocol (MCP), which serves as a critical bridge between AI models and the external world (tools, data sources, and other agents), further expands what AI agents can do. However, this gain in capability and integration also brings unprecedented security challenges. This article analyzes and summarizes publicly disclosed attack methods targeting large models, MCP, and AI agents, examining their principles, their interrelations, and their impact. The aim is to offer a reasonably comprehensive view of the threat landscape as it stands today and, drawing on recent research, to provide insights for current defense strategies.

Chapter 1 Background: Expression of Information and Dimensionality Ascension

Before starting the systematic analysis, it is essential to understand how agents basically operate, how they connect to their LLM “brain,” and how they interact with surrounding systems.

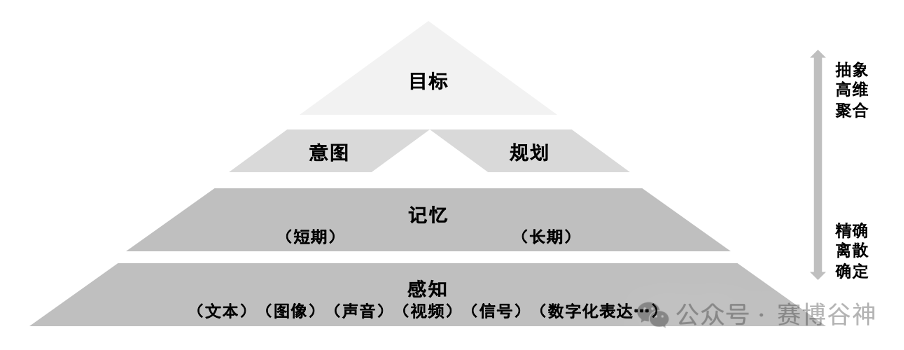

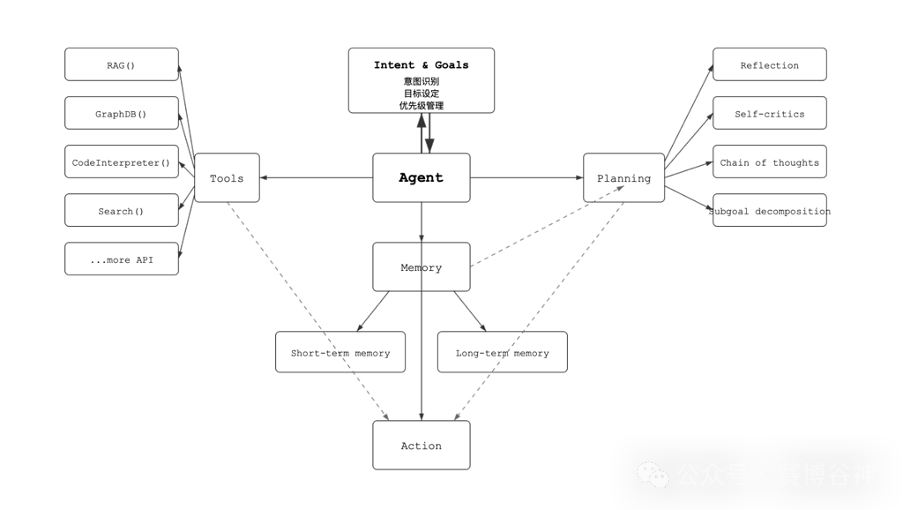

An AI agent’s intelligent behavior emerges from the collaboration of its core cognitive modules: Perception, Memory, Planning, Goal, Intent, and Action. Together, these modules form the foundation that lets an agent understand, decide, and interact with its environment, allowing it to evolve from a simple instruction follower into an entity capable of autonomously solving complex problems. In AI agent systems, information processing is not merely storage and transmission: information undergoes a “dimensionality ascension” from low-dimensional raw input to high-dimensional abstract knowledge, a process that is crucial for agents to understand the complex world and achieve advanced intelligence.

Concretely, a typical agent system interacts with the model as illustrated in the diagrams, forming a closed loop of thought and behavior: Perception -> Reasoning -> Planning -> Decision-Making -> Action -> Feedback. The security analysis of multi-agent and complex large-model systems that follows applies equally to single-agent systems.
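
To make the loop concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the architecture of any real agent framework: `CounterEnv`, `Agent`, and their methods are hypothetical names, the environment is a single integer, and a hard-coded rule stands in for the LLM’s reasoning. Each iteration perceives the state, decides on an action, records both in memory, and acts; the next observation is the feedback.

```python
from dataclasses import dataclass, field


@dataclass
class CounterEnv:
    """Hypothetical toy environment: a single integer the agent can change."""
    value: int = 0

    def observe(self) -> int:
        return self.value

    def apply(self, action: str) -> None:
        if action == "inc":
            self.value += 1
        elif action == "dec":
            self.value -= 1


@dataclass
class Agent:
    """Hypothetical agent running Perception -> Planning -> Action -> Feedback."""
    goal: int
    memory: list = field(default_factory=list)  # history of (observation, action)

    def perceive(self, env: CounterEnv) -> int:
        return env.observe()

    def decide(self, observation: int) -> str:
        # Reasoning / Planning / Decision-Making: move toward the goal, stop once reached.
        if observation < self.goal:
            return "inc"
        if observation > self.goal:
            return "dec"
        return "stop"

    def run(self, env: CounterEnv, max_steps: int = 100) -> int:
        for _ in range(max_steps):
            obs = self.perceive(env)            # Perception
            action = self.decide(obs)           # Reasoning / Planning / Decision
            self.memory.append((obs, action))   # Memory update
            if action == "stop":
                break
            env.apply(action)                   # Action; the next observe() is the feedback
        return env.observe()


env = CounterEnv(value=2)
agent = Agent(goal=5)
print(agent.run(env))  # 5
```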

1.1 Perception

The perception module is the gateway for AI agents to interact with the external world. It is responsible for receiving raw inputs from the environment (such as text, images, audio, sensor data, etc.), processing, interpreting, and encoding them into observations or representations that the agent can understand internally. The perception process is fundamental for agents to comprehend their environment, marking the first step of dimensionality ascension from raw physical signals to internal digital representations.

Role of Perception:

- Data Collection and Preprocessing: Receiving raw data from various modalities (such as visual, auditory, and textual) and preparing it for further processing.
- Feature Extraction and Representation: Transforming raw, often high-dimensional and noisy data into low-dimensional, meaningful features for subsequent cognitive processing. For example, an image recognition system converts pixel data into high-level representations such as objects and scenes, achieving an initial abstraction of the information.
- Attention Mechanism: Through attention mechanisms, the agent selectively focuses on the perceptual information most relevant to the current task or goal, filtering out irrelevant or redundant content.

Association and Connection: Perception is the starting point of the agent’s interaction with its environment. Its output is passed directly to the Memory module for encoding and storage, and provides a real-time basis for Planning and Intent formation. High-quality perception is a prerequisite for accurate decisions.
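
The three roles above can be illustrated with a deliberately simple, text-only pipeline. This is a sketch under stated assumptions: the function names are hypothetical, bag-of-words counts stand in for a learned encoder, and token overlap followed by a softmax stands in for learned attention scores. Real agents use neural encoders and attention layers inside the model.

```python
import math
import re
from collections import Counter


def preprocess(raw: str) -> list[str]:
    """Data collection / preprocessing: lowercase and tokenize raw text."""
    return re.findall(r"[a-z0-9]+", raw.lower())


def extract_features(tokens: list[str]) -> Counter:
    """Feature extraction: a bag-of-words count vector (stand-in for a learned encoder)."""
    return Counter(tokens)


def relevance(features: Counter, task: Counter) -> float:
    """Count of task tokens that also occur in the observation."""
    return float(sum(min(features[t], task[t]) for t in task))


def attend(segments: list[str], task: str, top_k: int = 1) -> list[tuple[str, float]]:
    """Attention: softmax over relevance scores, then keep the top_k segments."""
    task_feats = extract_features(preprocess(task))
    scores = [relevance(extract_features(preprocess(s)), task_feats) for s in segments]
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    ranked = sorted(zip(segments, weights), key=lambda p: p[1], reverse=True)
    return ranked[:top_k]


observations = [
    "The weather today is sunny.",
    "Invoice #42 is overdue; payment was due on Monday.",
    "A new blog post was published.",
]
print(attend(observations, "Which invoice payment is overdue?"))
```

The invoice sentence shares four task tokens and receives almost all of the attention weight; the other two segments are effectively filtered out.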

1.2 Memory

The memory module is the cognitive repository for AI agents to store and retrieve information, similar to the human memory system, containing different levels and types of memory to support learning, adaptation, and solving complex problems. Memory is not only about information storage but also about organizing and refining information, thus achieving dimensionality ascension from perception representations to abstract knowledge.

Types of Memory:

- Sensory Memory: The shortest-lived form of memory, temporarily holding raw, high-fidelity sensory information from the perception module (for milliseconds to seconds), such as brief images or sounds.
- Short-Term Memory / Working Memory: A temporary workspace for information relevant to the current task and recent interaction sequences, with limited capacity but fast access. It is crucial for real-time decision-making and contextual coherence. Information here undergoes initial contextualization and association, ascending from raw perception to task-relevant context.
- Long-Term Memory: Persistent storage for knowledge, past experiences, and acquired skills. Its capacity is large and stable, supporting generalization and continual learning. Information in long-term memory ascends in dimension through the following forms:
  - Semantic Memory: Stores factual knowledge, concepts, and relationships about the world, turning discrete facts into interconnected knowledge graphs; this is dimensionality ascension from data to knowledge.
  - Episodic Memory: Stores specific events the agent has experienced, including contextual details such as time, place, and participants. By abstracting and summarizing a series of perceptual events, it distills key experiences.
  - Procedural Memory: Stores action sequences or skills acquired through repetition, automating task execution. This is dimensionality ascension from specific actions to reusable skills and strategies.
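
The memory types above map naturally onto ordinary data structures. The following is a hypothetical sketch, not a reference design: sensory memory is a time-stamped buffer with a short time-to-live (`sensory_ttl`), working memory is a bounded queue (`working_capacity`), and the three long-term stores are plain dictionaries and lists. Production agents typically back long-term memory with a database or vector store.

```python
import time
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MemorySystem:
    """Hypothetical memory hierarchy mirroring the memory types listed above."""
    sensory_ttl: float = 1.0    # seconds a raw trace survives in sensory memory
    working_capacity: int = 3   # working memory keeps only the most recent items
    sensory: list = field(default_factory=list)      # (timestamp, raw input) pairs
    working: deque = field(init=False, default_factory=deque)
    semantic: dict = field(default_factory=dict)     # subject -> {relation: object}
    episodic: list = field(default_factory=list)     # events with their context
    procedural: dict = field(default_factory=dict)   # skill name -> ordered steps

    def __post_init__(self) -> None:
        # Bounded queue: appending to a full deque silently drops the oldest item.
        self.working = deque(maxlen=self.working_capacity)

    def sense(self, raw: str, now: Optional[float] = None) -> None:
        """Perception -> Memory: buffer the raw input and expose it to working memory."""
        now = time.monotonic() if now is None else now
        self.sensory.append((now, raw))
        self.working.append(raw)

    def decay(self, now: float) -> None:
        """Discard sensory traces older than the time-to-live."""
        self.sensory = [(t, r) for t, r in self.sensory if now - t <= self.sensory_ttl]

    def learn_fact(self, subject: str, relation: str, obj: str) -> None:
        """Semantic memory: store a fact as an edge in a small knowledge graph."""
        self.semantic.setdefault(subject, {})[relation] = obj

    def record_episode(self, what: str, when: str, where: str) -> None:
        """Episodic memory: keep the event together with its context."""
        self.episodic.append({"what": what, "when": when, "where": where})

    def learn_skill(self, name: str, steps: list[str]) -> None:
        """Procedural memory: save a reusable sequence of actions."""
        self.procedural[name] = list(steps)


mem = MemorySystem()
for i, obs in enumerate(["a", "b", "c", "d"]):
    mem.sense(obs, now=float(i))
mem.decay(now=3.0)
print(list(mem.working))            # ['b', 'c', 'd']
print([r for _, r in mem.sensory])  # ['c', 'd']
mem.learn_fact("Paris", "capital_of", "France")
mem.learn_skill("deploy", ["build", "test", "release"])
print(mem.semantic)                 # {'Paris': {'capital_of': 'France'}}
```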

Role of Memory:

- Information Persistence: Ensures that critical information and experiences can be retained over long periods, spanning multiple interaction sessions or system restarts.
- Knowledge Accumulation and Utilization: Provides rich background knowledge and lessons learned for agents’ Planning and Decision-Making.
- Context Maintenance: In multi-turn dialogues or complex tasks, maintains contextual coherence by retrieving relevant memories.
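
Context maintenance usually comes down to retrieving the stored items most similar to the current query. A minimal sketch, assuming bag-of-words vectors and cosine similarity as a stand-in for the learned embeddings that real systems use; `embed`, `retrieve`, and the sample memories are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Bag-of-words vector (stand-in for a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(memories: list[str], query: str, k: int = 2) -> list[str]:
    """Return the k stored memories most similar to the query."""
    q = embed(query)
    ranked = sorted(memories, key=lambda m: cosine(embed(m), q), reverse=True)
    return ranked[:k]


memories = [
    "User prefers replies in French.",
    "User's project uses PostgreSQL 15.",
    "User asked about database backups last week.",
]
print(retrieve(memories, "What database backups did the user ask about?", k=1))
# ['User asked about database backups last week.']
```

The retrieved items would then be placed back into the model’s context for the next turn, which is what keeps a multi-turn dialogue coherent.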

Connections between Different Information Forms and Dimensions in Memory:

- Perception -> Memory: The perception module transforms raw data from the environment into storable representations and transmits them to the memory module. For example, perceived textual information can be encoded and stored in long-term memory as semantic knowledge.
- Memory -> Planning/Intent/Action: Memory serves as the foundation for Planning and Decision-Making. When agents engage in Planning, they retrieve relevant knowledge and past experiences from memory. The world model in long-term memory, as well as the Intent formed through value alignment, will influence the agents’ Action choices and execution.
- Cyclic Feedback: The results of agents’ Actions (new Perception) continuously update Memory, forming a learning loop that enables agents to improve from experience.

1.3 Planning

The Planning module is the process by which AI agents strategically construct action sequences to achieve specific Goals. It involves breaking down complex macro goals into manageable subtasks and predicting the potential outcomes of different action paths. The planning process represents dimensionality ascension from discrete observations to future action blueprints.

Role of the Planning Module:

- Task Decomposition: Breaks down complex tasks requested by users into a series of ordered, executable steps. This process achieves hierarchical ascension from abstract goals to concrete steps.
- Strategy Formulation: Develops the optimal sequence of actions to achieve goals based on the current state of the environment and available resources.
- Outcome Prediction: Utilizes the world model (the agent’s internal representation of environmental dynamics) to simulate the consequences of different action paths, assessing their feasibility and efficiency. This includes predicting future states from the current state, achieving dimensionality ascension from immediate information to temporal dimension information.
- Adaptive Adjustment: Dynamically adjusts the plan based on environmental feedback and actual progress during task execution.
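
These roles can be traced in a small planning sketch. All of the following are hypothetical assumptions for illustration: the world model is a dictionary of predicted state transitions, breadth-first search stands in for whatever planner a real (for example, LLM-driven) agent uses, and the environment is a toy map of rooms. The returned action list is the task decomposition, searching the model is outcome prediction, and correcting the model and replanning after a surprise is adaptive adjustment.

```python
import copy
from collections import deque
from typing import Optional

# Hypothetical world model: state -> {action: predicted next state}.
WorldModel = dict[str, dict[str, str]]


def plan(model: WorldModel, start: str, goal: str) -> Optional[list[str]]:
    """Task decomposition + outcome prediction: breadth-first search over the
    model's predicted transitions, returning the shortest action sequence."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for action, nxt in model.get(state, {}).items():
            if nxt not in visited:
                visited.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None


def execute(model: WorldModel, real: WorldModel, start: str, goal: str) -> list[str]:
    """Adaptive adjustment: act one step at a time; when the real outcome differs
    from the prediction, correct the world model and replan from the new state."""
    state, trace = start, []
    while state != goal:
        steps = plan(model, state, goal)
        if not steps:
            raise RuntimeError(f"no plan from {state!r} to {goal!r}")
        action = steps[0]
        predicted = model[state][action]
        actual = real.get(state, {}).get(action, state)  # unknown move: no effect
        if actual != predicted:
            model[state][action] = actual  # learn from environmental feedback
        trace.append(action)
        state = actual
    return trace


model = {
    "hall": {"go_kitchen": "kitchen", "go_study": "study"},
    "kitchen": {"go_pantry": "pantry", "go_hall": "hall"},
    "study": {"go_pantry": "pantry", "go_hall": "hall"},
    "pantry": {},
}
real = copy.deepcopy(model)
real["kitchen"]["go_pantry"] = "kitchen"  # the kitchen-pantry door is locked

print(plan(model, "hall", "pantry"))  # ['go_kitchen', 'go_pantry']
print(execute(model, real, "hall", "pantry"))
# ['go_kitchen', 'go_pantry', 'go_hall', 'go_study', 'go_pantry']
```

Because the world model is corrected in place, the agent will not retry the locked door on later tasks, which is the Planning side of the Memory feedback loop described earlier.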

Connections with Other Related Modules:

- Driven by Goals and Intent: Planning is directly driven by the agent’s Goals and Intent. A clear goal and well-aligned intent are the foundation for effective planning.
- Dependent on Perception and Memory: The effectiveness of planning heavily relies on the accurate environmental information provided by the Perception module and the knowledge and experiences stored in the Memory module. Agents extract relevant knowledge from memory to construct the world model, enabling more accurate predictions.
- Guiding Actions: The result of planning is to generate a series of executable Actions, which will be executed by the Action module.

1.4 Goal

Goal is the desired outcome or end state that AI agents aim to achieve. It provides the ultimate purpose and direction for all agent activities, driving their behavior. Goals represent the highest level of abstraction for all agent actions, embodying the dimensionality ascension of information from complex behaviors to concise results.

Role of Goals:

- Behavior Guidance: Provides clear, ultimate direction for the agent’s Perception, Memory, Planning, and Action.
- Utility Assessment: Serves as the primary metric for evaluating agent performance and success.

Connections and Relationships of the Goal Module with Other Modules:

- Source of Intent: Goals are the macro abstraction of Intent. Intent is often a more specific and executable expression of goals, reflecting the motivation and means by which agents achieve their goals.
- Driver of Planning: The Planning process aims to find the best path to achieve the Goals.
- Feedback Measurement: After agents achieve their goals through Actions, the feedback from the environment (new Perception) will be used to measure the degree and quality of goal achievement, thus influencing the Memory and Learning processes.
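
Utility assessment and feedback measurement can be made concrete by treating a goal as a target end state with a utility function. This sketch rests on assumptions not in the text: goals are expressed as required values of named state variables, utility is simply the fraction of those conditions met, and `Goal` and `measure_feedback` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    """Hypothetical goal: a desired end state over named state variables."""
    description: str
    target: dict[str, object]

    def utility(self, state: dict[str, object]) -> float:
        """Utility assessment: fraction of target conditions satisfied, in [0, 1]."""
        if not self.target:
            return 1.0
        met = sum(1 for key, want in self.target.items() if state.get(key) == want)
        return met / len(self.target)

    def achieved(self, state: dict[str, object]) -> bool:
        return self.utility(state) == 1.0


def measure_feedback(goal: Goal, observed: dict[str, object], memory: list[dict]) -> float:
    """Feedback measurement: score the newly perceived state and record it in memory."""
    score = goal.utility(observed)
    memory.append({"goal": goal.description, "state": dict(observed), "utility": score})
    return score


goal = Goal("ship release v1.2", {"tests_passed": True, "tagged": True, "published": True})
memory: list[dict] = []
print(measure_feedback(goal, {"tests_passed": True, "tagged": False}, memory))  # 0.3333333333333333
print(measure_feedback(goal, {"tests_passed": True, "tagged": True, "published": True}, memory))  # 1.0
print(goal.achieved(memory[-1]["state"]))  # True
```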

1.5 Intent

Intent is the underlying motivation or purpose behind AI agents’ behaviors, explaining why agents choose to execute specific Actions or adopt particular Planning strategies. Intent can be explicitly specified by humans or autonomously formed by agents through learning and reasoning. Intent transforms the agents’ internal beliefs, values, and goals into specific action tendencies, representing the conceptual dimensionality ascension from belief levels to action tendencies.

Role of Intent:

- Behavior Explanation: Helps understand the “why” behind agent behaviors, rather than just the “what,” enhancing the explainability and transparency of agents.
- Constraints and Guidance: In Planning and Decision-Making processes, Intent serves as an internal constraint and guide, ensuring that agents’ behaviors align with expected values and safety guidelines.
- Alignment Measurement: The alignment of agents with human Intent is a key measure of their safety and trustworthiness.

Connections and Relationships:

- Bridge between Goals and Planning: Intent connects abstract Goals with concrete Planning. Agents select and construct sequences of Actions to achieve Goals based on their Intent.
- Influenced by Perception and Memory: Agents’ Intent adjusts and evolves based on new Perception information and updated Memory (especially the world model in long-term memory and acquired values).
- Affects Action Selection: Intent directly influences the selection of Actions, ensuring that the Actions executed by agents are not only effective but also align with their internal motivations and values.
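
One way to read Intent’s role as an internal constraint is as a filter applied before utility-based action selection, with a short explanation attached to each decision. The sketch below is hypothetical: the `Action` and `Intent` classes, the tag vocabulary, and the `expected_gain` field are illustrative assumptions. In LLM agents such constraints are usually expressed in prompts, policies, or guardrail code rather than as a Python class.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Action:
    name: str
    expected_gain: float            # estimated contribution toward the Goal
    tags: frozenset = frozenset()   # properties such as "deletes_user_data"


@dataclass
class Intent:
    """Hypothetical intent: a purpose plus constraints on acceptable actions."""
    purpose: str
    forbidden_tags: set = field(default_factory=set)

    def permits(self, action: Action) -> bool:
        return not (action.tags & self.forbidden_tags)

    def explain(self, action: Action) -> str:
        """Behavior explanation: state why an action is allowed or rejected."""
        blocked = sorted(action.tags & self.forbidden_tags)
        if blocked:
            return f"{action.name}: rejected, conflicts with intent ({', '.join(blocked)})"
        return f"{action.name}: allowed, serves '{self.purpose}'"


def select_action(candidates: list[Action], intent: Intent) -> Optional[Action]:
    """Choose the highest-gain action among those the intent permits."""
    allowed = [a for a in candidates if intent.permits(a)]
    return max(allowed, key=lambda a: a.expected_gain, default=None)


intent = Intent("free disk space without losing user data", {"deletes_user_data"})
candidates = [
    Action("delete_home_directories", 0.9, frozenset({"deletes_user_data"})),
    Action("clear_package_cache", 0.6),
    Action("rotate_logs", 0.3),
]
choice = select_action(candidates, intent)
print(choice.name)                    # clear_package_cache
print(intent.explain(candidates[0]))  # delete_home_directories: rejected, conflicts with intent (deletes_user_data)
```

The highest-gain candidate is discarded because it violates the intent, so the agent settles on a less effective but acceptable action.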

1.6 Action

The Action module is the executor that turns an AI agent’s internal decisions and plans into actual, executable behavior. It embodies the agent’s ability to interact with and exert influence on its environment, marking the final dimensionality ascension from internal cognition to the external world.

Role of the Action Module:

- Instruction Execution: Converts action sequences generated by the Planning module or tool invocation commands into actual physical movements (such as robotic operations) or digital operations (such as API calls, code execution).
- Environmental Interaction: By executing Actions, agents can change their Environment, generating new Perception information and forming feedback loops.
- Problem Solving: Represents the final step for agents to solve complex tasks and achieve Goals.
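
Instruction execution for digital operations often amounts to dispatching planned steps to registered tools and returning the results as new observations. The following is a minimal sketch under assumptions: the `TOOLS` registry, the `tool` decorator, and the step format (`{"tool": ..., "args": ...}`) are hypothetical and are not the MCP API or any framework’s interface.

```python
from typing import Any, Callable

# Hypothetical tool registry: names the planner may emit, mapped to Python callables.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(name: str):
    """Decorator that registers a function as an invocable tool."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return register


@tool("add")
def add(a: float, b: float) -> float:
    return a + b


@tool("upper")
def upper(text: str) -> str:
    return text.upper()


def execute_plan(steps: list[dict]) -> list[dict]:
    """Instruction execution: turn each planned step into a tool call and return
    observations that feed back into Perception."""
    observations = []
    for step in steps:
        fn = TOOLS.get(step["tool"])
        if fn is None:
            observations.append({"tool": step["tool"], "error": "unknown tool"})
            continue
        try:
            result = fn(**step.get("args", {}))
            observations.append({"tool": step["tool"], "result": result})
        except Exception as exc:  # report failures instead of crashing the loop
            observations.append({"tool": step["tool"], "error": str(exc)})
    return observations


steps = [
    {"tool": "add", "args": {"a": 2, "b": 3}},
    {"tool": "upper", "args": {"text": "done"}},
    {"tool": "delete_files", "args": {}},
]
for obs in execute_plan(steps):
    print(obs)
# {'tool': 'add', 'result': 5}
# {'tool': 'upper', 'result': 'DONE'}
# {'tool': 'delete_files', 'error': 'unknown tool'}
```

The unregistered `delete_files` step is reported rather than executed; the boundary between what a plan requests and what the Action module will actually run is a natural control point for the security analysis that follows.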

Connections and Relationships of the Action Module with Other Concepts:

- Output of Planning: Actions are the direct output of the Planning module. An effective plan will directly translate into a series of clear Actions.
- Key to Feedback Loops: The results of Actions affect the Environment; these changes are then captured by the Perception module, updating Memory and the world model and closing the loop of learning and adaptation. This underlines the central role of Actions in the agent’s self-evolution.
- Constrained by Intent and Goals: Actions must align with the agent’s Intent and Goals; otherwise, safety alignment issues may arise.