The First Multi-Character Digital Human Open Source Project MultiTalk: Supporting Karaoke, Realistic Cartoons, and Animal Generalization with Low-Cost Solutions

The recently open-sourced multi-party dialogue audio-driven digital human project<span>MultiTalk</span> has gained significant attention in the digital human community, natively supporting<span>single</span>,<span>multi-party dialogue</span>, capable of driving<span>realistic</span>,<span>cartoon</span> tasks, and can perform<span>karaoke</span>, with impressive<span>animal</span> generalization capabilities.

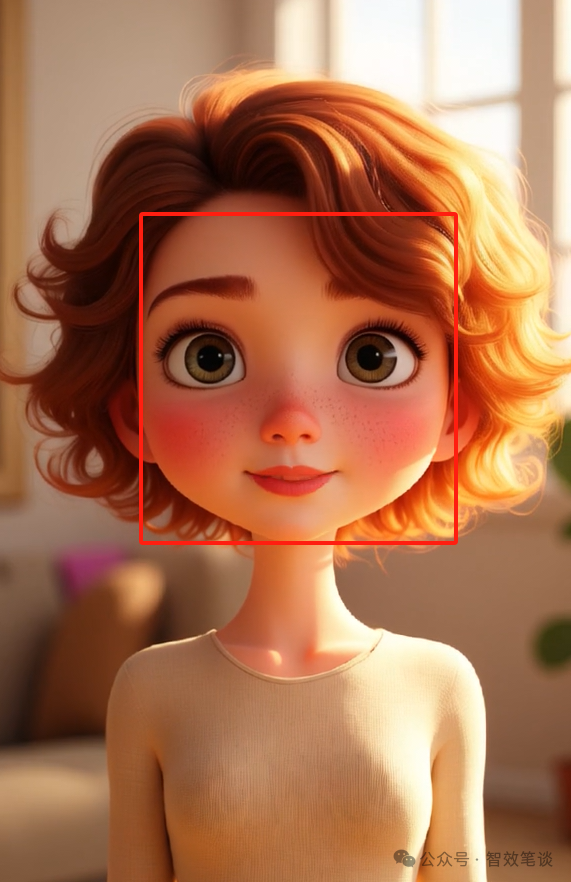

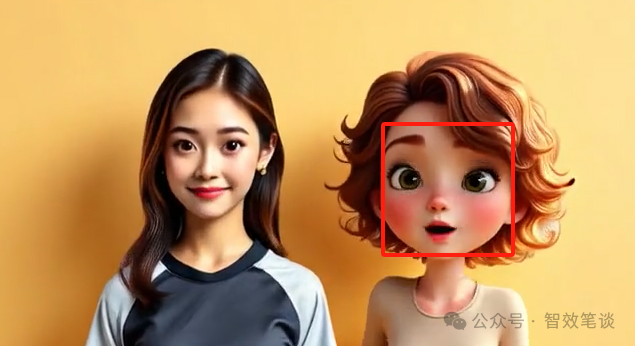

My test results show a mixed generation of realistic and animated characters:

Official effects

By simply uploading an image and corresponding audio, high-quality digital human videos can be generated with high lip-sync accuracy and good image quality, and in evaluations across multiple datasets, MultiTalk significantly outperforms others.

This article is divided into two parts:

1. The Latest MultiTalk Multi-Character Digital Human Project 2. Low-Cost Real-Time Solutions for Multi-Character Digital Humans

Quick Start

Project address:

https://github.com/MeiGen-AI/MultiTalk

Single-Character Scenario

- 1. Official Version: Directly visit the project homepage and follow the steps to complete the installation

- 2. ComfyUI: Currently, the KJ master has adapted ComfyUI for single-character scenarios, providing high quality and stability, with a synthesis speed increase of 5-6 times

Multi-Character Scenario

- 1. Online Experience: Experience online through platforms like RunningHub or XianGong Cloud

- 2. Official Version: Directly visit the project homepage and follow the steps to complete the installation (minimum 24GB VRAM required)

Application Scenarios

- • Film and Entertainment: Multi-party dialogue in animated films, game cutscenes (reducing manual frame-by-frame adjustment costs)

- • Education: Interactive teaching in virtual classrooms, language learning dialogue simulation (e.g., tour guide explaining dialect scenarios)

- • Marketing: Virtual customer service product demonstrations, multi-character advertisement videos (e.g., showcasing sneakers with real person explanations)

- • Social Media: Creative multi-character short dramas, virtual host singing (supporting cartoon character generalization)

Limitations

- • Hardware Threshold: Multi-party video generation requires high VRAM, and local deployment costs are high (recommended 48GB VRAM, minimum 24GB VRAM)

- • Slow Speed: Actual tests show that generating 100 frames in multi-party video synthesis takes 10 minutes (RTX 4090)

Low-Cost Solutions

Although<span>MultiTalk</span> represents a significant breakthrough in<span>multi-character</span> dynamic video generation, its L-RoPE binding technology and multi-task training strategy provide industrial-grade tools for digital content creation, the slow synthesis<span>speed</span> and high synthesis<span>cost</span> limit its application in production.

So, is there a low-cost and fast synthesis method? The answer is yes, it is the now outdated<span>wav2lip</span>. Although wav2lip is a project from a few years ago, its generalization and quality cannot be compared to some of the current digital human projects, but its low-cost and fast synthesis characteristics still make it the preferred solution for current<span>real-time digital humans</span>.

wav2lip features:

- • Fast Speed (real-time)

- • Low Hardware Cost (real-time on low-end consumer graphics cards)

How does wav2lip drive multi-character digital human generation?

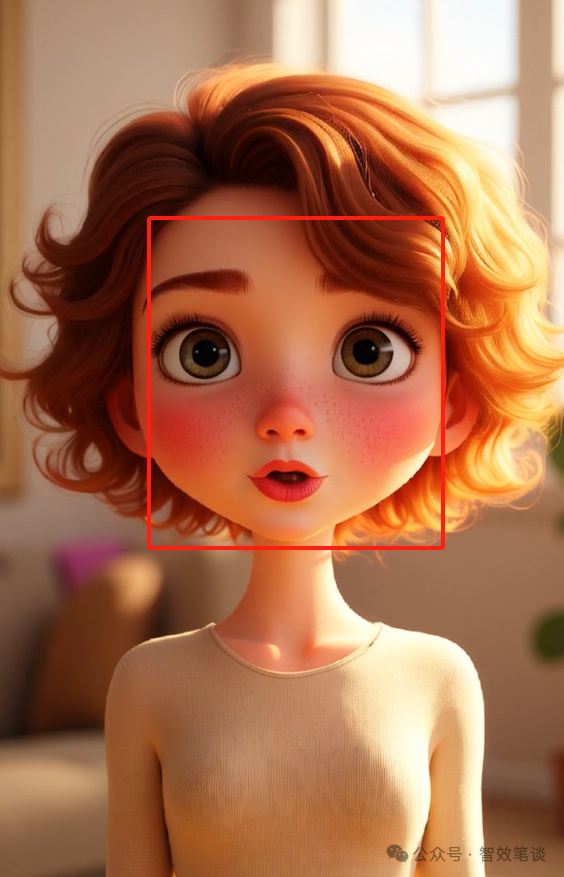

wav2lip Single-Character Driving Principle

Before understanding this issue, let’s look at the<span>working principle</span> of wav2lip in synthesizing digital humans. As shown in the figure below, the model first<span>detects the face frame</span>, then extracts the<span>face</span> within the red box, along with the<span>driving audio</span>, and sends it to the model.

After passing through the wav2lip network, the model predicts the<span>next frame lip shape</span> of the face, and then the predicted result is<span>mapped back to the original image</span>, thus generating a new frame.

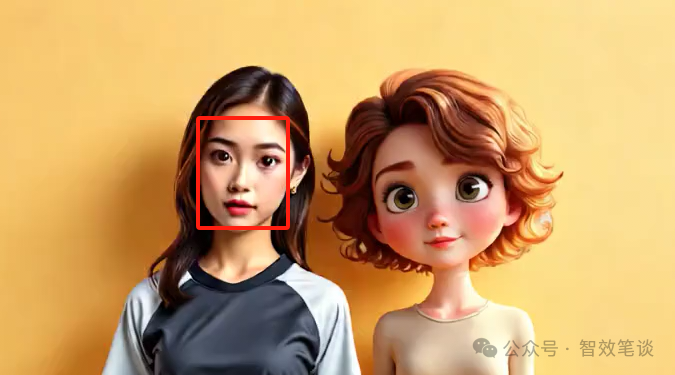

wav2lip Multi-Character Driving Principle

Having understood single-character driving, multi-character driving is quite simple. As shown in the figure below, for example, we create a 4-second<span>multi-character digital human</span>:

- • 0-2s period: The model simultaneously detects two faces, but through

<span>program preset conditions</span>, only the specified face (on the left) is extracted and sent to the lip shape synthesis network

<span>left</span> character is speaking.

- • 2-4s period: Similarly, modify the lip shape on the right side, and now only the

<span>right</span>character is speaking.

Isn’t it both<span>cost-effective</span> and<span>time-saving</span><span>? Of course, wav2lip also has its limitations; to achieve good results, specific characters need to be fine-tuned, unlike MultiTalk's generalization capability!</span>

Postscript

In engineering applications, we will definitely prefer this real-time and cost-effective solution, but this does not mean that MultiTalk has lost its significance. On the contrary, it is a new milestone in technological development, and it is the relentless efforts of countless predecessors in scientific research that have led to gradual technological progress, so we should pay tribute to them<span>and thank them!</span>

If you have any questions, feel free to add my personal WeChat for further discussion!