AI agents (those smart assistants) are becoming increasingly popular and powerful. With an agent framework, you can connect these AIs to external tools so they can retrieve data, perform calculations, and interact with the outside world.

These tools significantly extend what an AI can do: it can search for information online, solve mathematical problems, and call APIs. How can we connect tools to AI? There are several methods, but one particularly popular approach is the “Model Context Protocol” (MCP). MCP servers typically run in one of two modes:

- STDIO: Direct local connection, where the AI and tools communicate on the same machine;

- SSE (Server-Sent Events): Network connection over HTTP, allowing the AI to reach tools running on another machine.

In SSE mode, the AI (the client) and the tools (the server) no longer have to share a machine. Tools can run wherever it makes sense, which makes this mode more flexible and easier to scale in complex environments.
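To make the difference between the two modes a bit more concrete, here is a minimal sketch of the message itself. MCP messages are JSON-RPC 2.0, and `tools/list` is the method a client uses to ask a server for its tools. In STDIO mode each message is written as a single line of JSON. In SSE mode the same JSON payload travels over HTTP instead. The helper names below are made up for this illustration and do not come from any MCP SDK.

```python
import json


def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request dict (hypothetical helper)."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def encode_for_stdio(message):
    """Serialize one message as a single newline-terminated line of UTF-8 JSON."""
    # json.dumps escapes newlines inside strings, so the output is one line.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_from_stdio(line):
    """Parse one line read from the other side's stdout back into a dict."""
    return json.loads(line.decode("utf-8"))


# Ask a server which tools it offers.
request = make_request(1, "tools/list")
wire = encode_for_stdio(request)
print(wire)
```

The message content does not depend on the mode. Only the way the bytes are delivered changes.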

What are the issues?

Although MCP is useful, there are some limitations:

- Tools can't be added on the fly: Most MCP servers need their tools defined at startup, so adding a new tool later means restarting the server.

- Hard to manage multiple servers: In SSE mode, each server occupies a port. If you have several MCP servers running, managing all those ports can be cumbersome, especially in container environments like Kubernetes.

How to solve it? Try Multi-MCP

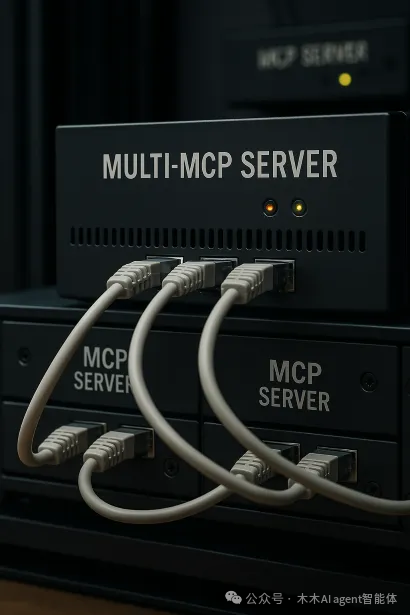

To address these issues, we developed Multi-MCP, which can be simply described as a “super proxy.” For the AI, it appears as a single MCP server, but it can manage multiple MCP servers behind the scenes, whether they are using STDIO or SSE.

What are the benefits of using Multi-MCP?

- Works with both STDIO and SSE backends.

- Can connect to any MCP server, whichever mode it uses.

- One interface to manage all backend servers, simplifying the process.

- Automatically discovers tools and resources at startup, so you don’t have to worry about it.

- Want to add a new server or remove an old one? Use the HTTP API to do it anytime.

- Even if two servers expose a tool with the same name, it’s fine; “namespaces” keep them apart.

- Deployment in Kubernetes is super easy; you only need to expose one port.
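The namespace idea can be sketched in a few lines. This is not Multi-MCP's actual code: the `::` separator and the function names are assumptions chosen for illustration, so check the repository for the real naming scheme.

```python
# Assumed separator between server name and tool name (illustration only).
SEPARATOR = "::"


def merge_tools(tools_by_server):
    """Combine the tool lists of several servers into one namespaced table.

    Returns a dict mapping "server::tool" to a (server, tool) pair, so the
    proxy knows where to forward a call.
    """
    merged = {}
    for server, tools in tools_by_server.items():
        for tool in tools:
            merged[f"{server}{SEPARATOR}{tool}"] = (server, tool)
    return merged


# Two hypothetical servers that both expose a tool called "search".
tools_by_server = {
    "weather": ["search", "get_weather"],
    "calculator": ["search", "add"],
}

merged = merge_tools(tools_by_server)
for name in sorted(merged):
    print(name, "->", merged[name])
```

Because the server name is part of the key, both `search` tools survive the merge and each call can be routed back to the right server.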

How to use it?

Using Multi-MCP is straightforward. First, create a `mcp.json` file that specifies the MCP servers you want to use, for example:

    {
      "mcpServers": {
        "weather": {
          "command": "python",
          "args": ["./tools/get_weather.py"]
        },
        "calculator": {
          "command": "python",
          "args": ["./tools/calculator.py"]
        }
      }
    }
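To show how a file with this structure can be read, here is a small sketch that parses the same JSON and turns each entry into the command line that would start that server. How Multi-MCP itself processes the file is not shown in this post, so treat `launch_commands` as a hypothetical helper rather than the project's implementation.

```python
import json

# Same content as the mcp.json example, inlined so the sketch is self-contained.
CONFIG_TEXT = """
{
  "mcpServers": {
    "weather": {"command": "python", "args": ["./tools/get_weather.py"]},
    "calculator": {"command": "python", "args": ["./tools/calculator.py"]}
  }
}
"""


def launch_commands(config):
    """Map each server name to the argv list that would start it (hypothetical)."""
    commands = {}
    for name, spec in config["mcpServers"].items():
        # "args" is treated as optional here; that is an assumption.
        commands[name] = [spec["command"], *spec.get("args", [])]
    return commands


config = json.loads(CONFIG_TEXT)
commands = launch_commands(config)
for name, argv in commands.items():
    print(name, argv)
```

Each key under `mcpServers` becomes the server's name, and `command` plus `args` describe the process to start for a STDIO server.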

Then you can run it in various ways:

- Run it on your own computer;

- Put it in a Docker container;

- Deploy it in Kubernetes as a Pod.

Once it’s running, how do you add a new server? Use the HTTP API, for example:

    curl -X POST http://localhost:8080/mcp_servers \
      -H "Content-Type: application/json" \
      --data @new_mcp.json
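If you would rather send the request from a script, the same call can be sketched with Python's standard library. The contents of `new_mcp.json` are not shown here, so this sketch assumes the payload has the same shape as `mcp.json`; the `unit_converter` server and its script path are invented for the example.

```python
import json
import urllib.request


def build_add_server_request(base_url, payload):
    """Build (but do not send) a POST to the /mcp_servers endpoint."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/mcp_servers",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Assumed payload shape: same structure as mcp.json (hypothetical server).
new_server = {
    "mcpServers": {
        "unit_converter": {
            "command": "python",
            "args": ["./tools/unit_converter.py"],
        }
    }
}

request = build_add_server_request("http://localhost:8080", new_server)
print(request.get_method(), request.full_url)

# With Multi-MCP running locally, uncomment to actually send the request:
# with urllib.request.urlopen(request) as response:
#     print(response.status, response.read().decode("utf-8"))
```

Building the request separately from sending it makes it easy to inspect before it hits a running instance.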

Now you don’t need to restart: add whatever servers you want, and the AI will immediately have access to the new tools. Want to learn more? Check out the Multi-MCP GitHub repository.

What’s next?

Multi-MCP is just the beginning; the goal is to create a flexible and extensible tool ecosystem. As AI becomes smarter and takes on more complex tasks, tools like this will become increasingly important.

If you are also exploring similar topics or working on tools for AI, feel free to reach out and share your ideas!