Real-Time Efficient Dense Optical Flow Accelerator Based on FPGA

Real-Time Efficient Dense Optical Flow Accelerator Based on FPGA

Yutai Feng, Wenyang Xu, Fan Chen, Jiaxing Wang, Yongming Tang, Hao Sun

(Southeast University, School of Electronic Science and Engineering)

Abstract: The optical flow method constructs a dense motion field representation by analyzing pixel displacement between frames, enabling the quantification of the motion direction and speed of objects in a scene with sub-pixel accuracy. This is a core technology for intelligent perception and positioning navigation in applications such as embodied intelligence and low-altitude economy. However, dense optical flow algorithms face high computational complexity, and their multi-layer pyramid structure and inter-layer data dependencies lead to low memory access efficiency and idle computational resources, which collectively limit the real-time and efficient deployment of these algorithms on the edge. To address this issue, an optimized strategy based on algorithm, architecture, and circuit co-design is proposed for a real-time and efficient FPGA hardware acceleration solution for the dense LK pyramid optical flow algorithm. This solution enhances algorithm accuracy and hardware friendliness through batch bilinear interpolation and time gradient generation, optimizes hardware architecture parallelism through pyramid multi-layer pipeline folding design, and improves memory access efficiency during the pyramid down-sampling process through a three-level segmented processing architecture, significantly enhancing the energy efficiency and real-time performance of dense optical flow computation. Experimental results on the AMD KV260 platform show that this accelerator achieves a processing speed 102 times faster than a high-performance CPU, with real-time processing capability of 62f/s at a resolution of 752×480, an average endpoint error (AEE) of 0.522 pixels, and an average angular error (AAE) of 0.325°, providing a hardware acceleration solution that combines high precision and low latency for high dynamic visual perception scenarios.

Highlights of This Paper

Highlights of This Paper

In the field of computer vision, the optical flow method is a key technology that provides precise visual perception capabilities for dynamic scenes by analyzing the motion vectors of pixels between consecutive frames. With the widespread adoption of intelligent applications, the optical flow method plays an irreplaceable role in areas such as robot navigation, autonomous driving, and visual SLAM. However, traditional optical flow algorithms face numerous challenges in edge deployment, such as high computational complexity, significant memory bandwidth pressure, and difficulty in ensuring real-time performance.

To address these issues, the team led by Hao Sun from Southeast University published a paper titled “Real-Time Efficient Dense Optical Flow Accelerator Based on FPGA” in the 2025 issue 6 of the journal “Integrated Circuits and Embedded Systems”, providing a hardware acceleration solution that combines high precision and low latency for high dynamic visual perception scenarios.

1. Research Background and Challenges

The optical flow method can quantify the motion direction and speed of objects in a scene with sub-pixel accuracy, making it a core technology for intelligent perception and positioning navigation applications. However, dense optical flow algorithms face limitations in real-time and efficient deployment on the edge due to high computational complexity, multi-layer pyramid structures, and inter-layer data dependencies, which lead to low memory access efficiency and idle computational resources. Existing research on optical flow algorithms still faces key challenges in optimization at the algorithm, architecture, and circuit levels, such as the sequential computation mode in multi-scale pyramid iterative optimization limiting the potential for hardware parallel acceleration; dynamic data flow and irregular memory access in FPGA acceleration schemes leading to low resource utilization; and although customized modules at the circuit level reduce computation latency, the conflict between limited on-chip cache resources and multi-scale data expansion causes frequent off-chip memory accesses, resulting in a surge in power consumption in high-resolution scenarios.

2. Research Methods and Innovations

(1) Algorithm Optimization

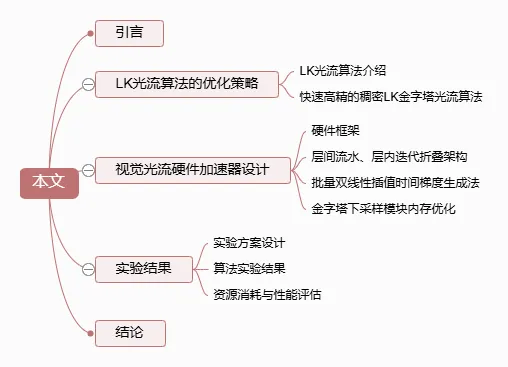

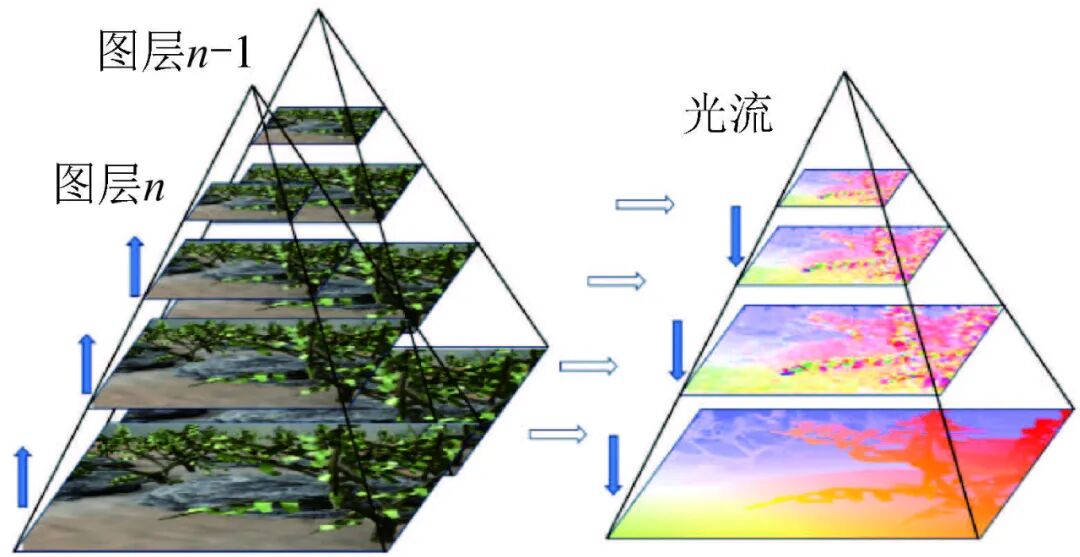

Multi-scale pyramid LK optical flow architecture: A three-level image pyramid is constructed, with the original image as the 0th layer and the image down-sampled by 1/2L as the Lth layer. The pixel displacement of fast-moving targets at low-resolution levels is compressed to the effective range of Taylor expansion, achieving sub-pixel level motion estimation through bilinear interpolation.

Gradient descent method replaces traditional least squares solving strategy: Through multiple iterations, the position estimates of all pixels are continuously updated until a preset convergence threshold is met. Additionally, bilinear interpolation is introduced to upsample the optical flow field, accurately extending the coarse-grained optical flow matrix to the fine-grained layer, effectively improving the accuracy and computational efficiency of optical flow estimation.

(2) Hardware Architecture Design

A real-time optical flow hardware acceleration system with a heterogeneous collaborative architecture: The system consists of an ARM Cortex A53 processor on the PS side and shared memory DDR4, as well as an image pyramid generation module and optical flow computation array on the PL side. During initialization, the PS side ARM processor creates a multi-level pyramid and stores it in shared memory while transferring pixel values to the PL side; the PL side processes the image through the image pyramid generation module, gradually reducing the resolution to fit the pyramid structure, and then the optical flow computation array calculates the optical flow in a layer-wise pipeline and intra-layer parallel manner, utilizing an image buffer module to improve data transfer efficiency; after computation, the results are sent back to the PS side for post-processing and visualization.

A layer-wise pipeline and intra-layer iterative folding architecture: To address the serial computation bottleneck caused by inter-layer data dependencies in the LK pyramid optical flow algorithm, a layer-wise pipelined architecture design is proposed. By reconstructing the traditional pyramid structure’s computation paradigm, the strong temporal dependencies are transformed into spatial parallel advantages. Specifically, by implementing independent scheduling and synchronized advancement of multi-level optical flow computation units at the hardware level: when the high-level completes the coarse-grained optical flow estimation for each pixel, the computation results are passed to the adjacent level, while the current level immediately releases resources to process the next pixel, thereby eliminating inter-level data waiting delays. This architecture ensures that the overall computation time only depends on the maximum processing delay of a single layer while supporting the one-time generation of optical flow fields for full-resolution images.

Batch bilinear interpolation time gradient generation method: A double-buffering mechanism is introduced in the storage layer design, allowing the current frame and reference frame image data to be efficiently written to BRAM1 and BRAM2, respectively, while simultaneously receiving displacement vector (U, V) feedback from the optical flow iterative array, constructing a motion-adaptive coordinate mapping relationship that effectively resolves data read/write bottlenecks and enhances system throughput. In the interpolation layer, an improved bilinear interpolation kernel is used to achieve precise sub-pixel coordinate positioning based on the displacement vector, and a neighborhood pixel address generator synchronously retrieves four adjacent pixel points (P00~P11) from BRAM1/BRAM2, enhancing data access efficiency through a parallel access mechanism to generate motion-compensated predicted frame pixel values. In the derivative computation layer design, a differential operation module performs pixel-level brightness fine comparison between the current frame and the predicted frame, generating the time gradient field It based on the optical flow brightness constancy assumption, enhancing the algorithm’s adaptability to brightness changes and improving the accuracy of optical flow estimation.

Pyramid down-sampling module memory optimization: The pyramid down-sampling algorithm innovatively adopts a three-level segmented processing architecture, achieving efficient spatial compression by eliminating redundant logical judgments. The first row processing stage directly extracts odd-column pixels from the original image to construct the initial row of the target matrix; the middle row processing stage employs a double-row coupling mechanism, retaining only the first pixel value after reading two adjacent pixels, combined with a stepping strategy of two rows in the vertical direction as a group; the last row processing module is symmetrically designed with the first row, executing boundary protection operations separately. The entire architecture optimizes spatiotemporal locality, achieving a balance between computational efficiency and hardware resource utilization while maintaining algorithm correctness.

3. Experimental Results and Performance Evaluation

(1) Experimental Scheme Design

The proposed hardware accelerator is implemented based on the AMD KV260 FPGA evaluation platform, achieving a working frequency of 200MHz. All functional modules are implemented using HLS (High-Level Synthesis) hardware description language and synthesized, placed, and routed using the Vivado 2022.2 toolchain. The four-core ARM Cortex A53 processor on the platform controls data loading and transmission of the accelerator through the AXI4 Stream interface. At an input image resolution of 752×480, dynamic fixed-point technology is employed to derive the minimum fixed-point bit width required for each variable to ensure computational accuracy: pixel grayscale values are represented as 8-bit unsigned integers, while other computational data are quantized to the minimum multi-bit fixed-point numbers that meet accuracy requirements. The system employs a three-layer pyramid multi-scale architecture to achieve optical flow computation.

(2) Algorithm Experimental Results

The Euroc dataset and MiddleBury dataset are used for evaluation, with the pyramid optical flow algorithm in the OpenCV function “calcOpticalFlowPyrLK()” serving as the software benchmark, and endpoint average error (AEE) and angular average error (AAE) metrics used to evaluate hardware results. Experimental results indicate that the optical flow hardware implementation achieves high frame rates and real-time performance while maintaining accuracy close to that of the software OpenCV optical flow.

(3) Resource Consumption and Performance Evaluation

A comprehensive comparison of the proposed optical flow hardware accelerator with existing FPGA solutions is conducted from the perspectives of throughput, resource consumption, and real-time performance. At a resolution of 752×480, it achieves a frame rate of 62f/s and a throughput of 22.38 Mpixels/s, enabling dense optical flow computation. Compared to other solutions, this design demonstrates significant advantages in throughput and resource consumption, such as a 128% increase in throughput compared to Barranco, and a 23% reduction in BRAM resource consumption compared to similar multi-scale solutions. In terms of power consumption, it meets the power requirements for deployment on edge devices.

4. Conclusion and Outlook

This research addresses the issues of poor real-time performance and high resource consumption of traditional LK pyramid dense optical flow algorithms in edge computing scenarios by proposing a heterogeneous acceleration architecture based on FPGA. Through collaborative optimization of algorithms and hardware, real-time processing capability of 62f/s at a resolution of 752×480 is achieved on the AMD KV260 development board. At the algorithm level, a multi-scale pyramid architecture is adopted, replacing the traditional least squares solving with a gradient descent iterative method, yielding an average endpoint error (AEE) of 0.522 pixels and an average angular error (AAE) of 0.325° on the MiddleBury and Euroc datasets; at the hardware architecture design level, an innovative pyramid pipeline folding structure is constructed, enhancing throughput to 22.38 Mpixels/s through inter-level pipelined scheduling and odd-column filtering mechanisms, achieving a 128% increase compared to literature solutions at the same resolution, while optimizing memory access strategies to reduce BRAM resource consumption (187 blocks) by 23% compared to similar multi-scale solutions. Experimental results demonstrate that this architecture effectively resolves the data dependency bottleneck of traditional algorithms while ensuring optical flow accuracy, providing high precision and low latency embedded solutions for real-time scenarios such as drone navigation and visual SLAM.

Citation format: Yutai Feng, Wenyang Xu, Fan Chen, et al. Real-Time Efficient Dense Optical Flow Accelerator Based on FPGA[J]. Integrated Circuits and Embedded Systems, 2025, 25(6): 78-86. FENG Y T, XU W Y, CHEN F, et al. Real-time efficient dense optical flow accelerator based on FPGA[J]. Integrated Circuits and Embedded Systems, 2025, 25(6): 78-86 (in Chinese).PreviousIssueIndexList