What Role Does an NPU Play?

Workload Determines Everything

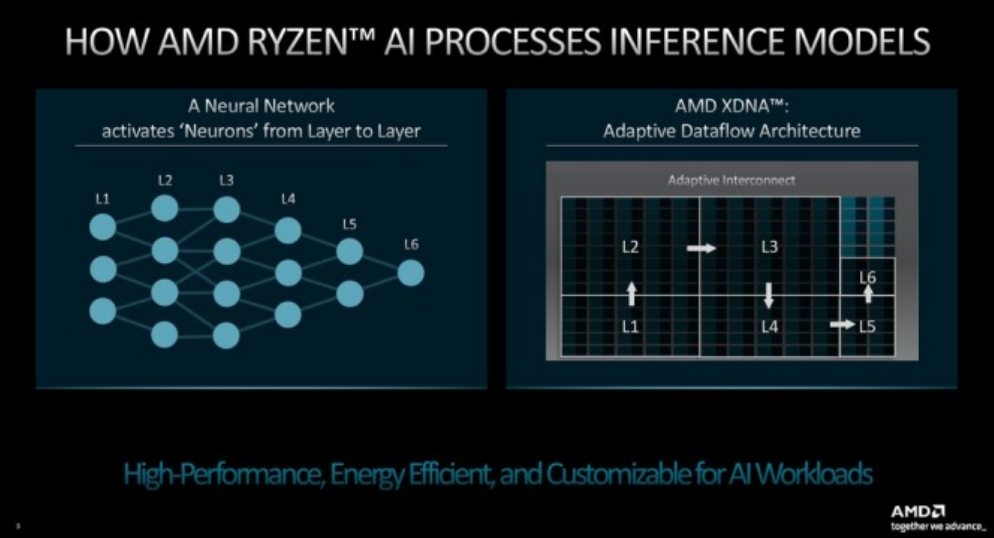

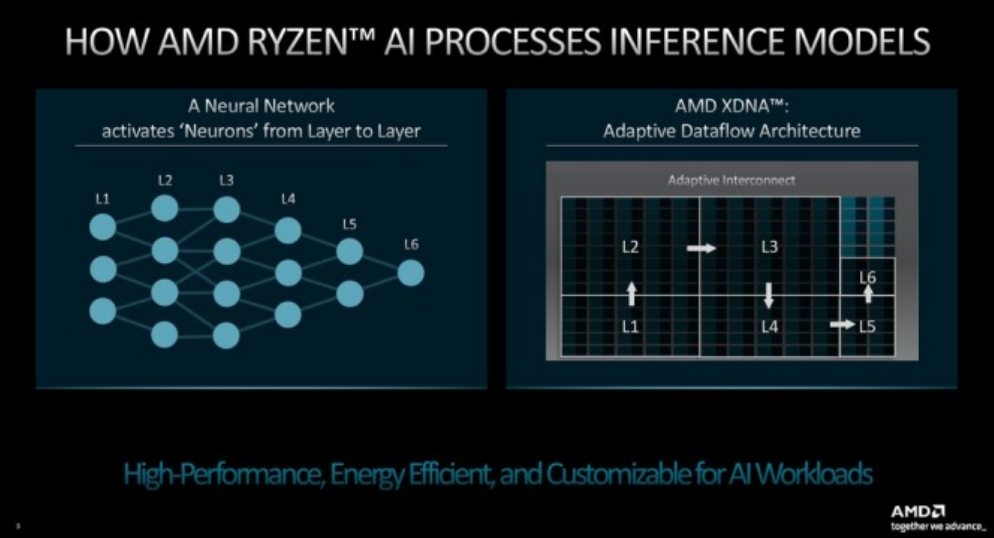

How Does an NPU Work?

NPU and Edge Intelligence

Original link: https://www.extremetech.com/computing/what-is-an-npu