At the Intel Innovation conference, Intel introduced the Meteor Lake processor, which includes a dedicated AI inference accelerator, the NPU. What does this mean for PCs?

In this article, we will discuss the NPU (Neural Processing Unit) of Meteor Lake. This series of articles also includes an introduction to the key technologies of Meteor Lake, as well as Intel 4 manufacturing technology and Foveros packaging technology.

Intel clearly highlighted the NPU, which accelerates local AI inference, in its technical overview of the first-generation Core Ultra processor, codenamed Meteor Lake. The move fits current trends, as mainstream PC processor suppliers are generally starting to adopt this approach. Moreover, Intel has spent this year promoting the value of AI technology for edge devices.

Intel first previewed Meteor Lake running AI workloads earlier this year. At that time, however, it spoke of the CPU, GPU, and VPU. In addition, during the Raptor Lake (13th generation Core) period, the Movidius VPU was available as a discrete chip for 13th generation Core laptops.

The NPU that Intel described at the media briefing before the Intel Innovation conference is probably the VPU referred to earlier. The reason is simple: Intel reused the example of running Stable Diffusion on Meteor Lake to illustrate how its XPU approach works in practice.

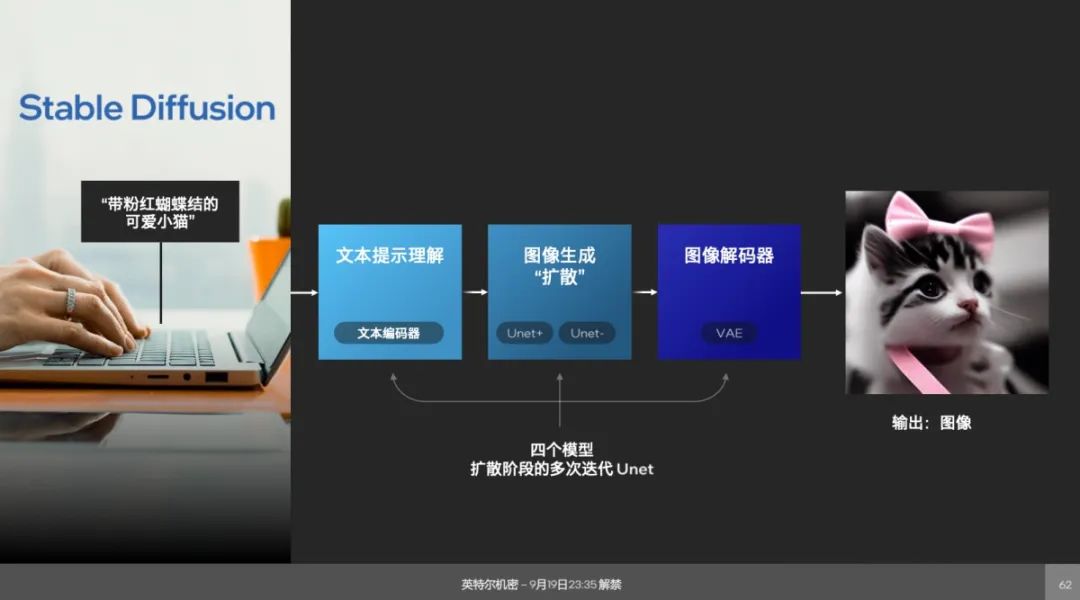

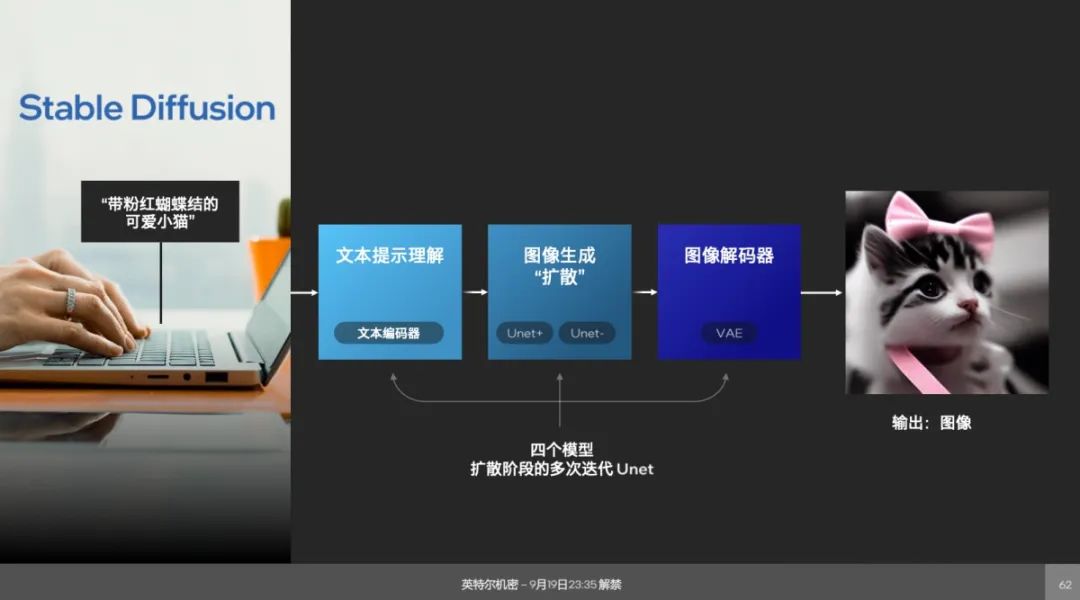

The process is as follows: The network structure of Stable Diffusion is divided into several parts, with the front end being a text encoder. To generate an image from text, a prompt such as “a cute kitten with a pink bow” must first be encoded into a latent (“hidden”) space representation by the CLIP model.

The second step involves two Unets. Intel explains: “One Unet network handles the positive prompt, while the other handles the negative prompt, completing the so-called diffusion step. This step is quite resource-intensive and represents a high computational load in the entire processing flow of Stable Diffusion.” “Repeating this about 20 times will yield a reasonably good image.”

The final step uses the VAE decoder to restore the image from the latent space to pixel space, producing the output image.
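The three stages described above can be sketched as a control-flow skeleton. This is a minimal sketch, not Intel's or OpenVINO's implementation: `encode_text`, `unet`, and `decode` are hypothetical stand-ins for the CLIP text encoder, the Unet, and the VAE decoder, and the latent update is a toy rule rather than a real scheduler. Combining the two Unet outputs with a guidance scale is an assumption borrowed from common Stable Diffusion practice (classifier-free guidance), which the article does not spell out.

```python
from typing import Callable, List

Vector = List[float]


def generate(
    prompt: str,
    negative_prompt: str,
    encode_text: Callable[[str], Vector],
    unet: Callable[[Vector, Vector], Vector],
    decode: Callable[[Vector], Vector],
    steps: int = 20,
    guidance_scale: float = 7.5,  # assumed value, not from the article
) -> Vector:
    # Stage 1: the text encoder turns both prompts into embeddings.
    positive = encode_text(prompt)
    negative = encode_text(negative_prompt)

    # A real pipeline starts from random noise; a fixed toy latent keeps
    # this sketch deterministic.
    latent: Vector = [1.0, 1.0, 1.0, 1.0]

    # Stage 2: each iteration runs the Unet twice, once per prompt.
    for _ in range(steps):
        noise_pos = unet(latent, positive)
        noise_neg = unet(latent, negative)
        guided = [n + guidance_scale * (p - n) for p, n in zip(noise_pos, noise_neg)]
        # Toy update rule standing in for a real scheduler step.
        latent = [x - g / steps for x, g in zip(latent, guided)]

    # Stage 3: the VAE decoder maps the latent back to pixel space.
    return decode(latent)


# Hypothetical stubs so the skeleton can be run end to end.
unet_calls = 0


def fake_encode(text: str) -> Vector:
    return [float(len(text))]


def fake_unet(latent: Vector, embedding: Vector) -> Vector:
    global unet_calls
    unet_calls += 1
    return [0.0 for _ in latent]


def fake_decode(latent: Vector) -> Vector:
    return list(latent)


image = generate("a cute kitten with a pink bow", "", fake_encode, fake_unet, fake_decode)
print(unet_calls)  # two Unet evaluations per step
```

With 20 steps the Unet is evaluated 40 times, which is why this stage dominates the compute cost and is the natural candidate for offloading to the GPU or NPU.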

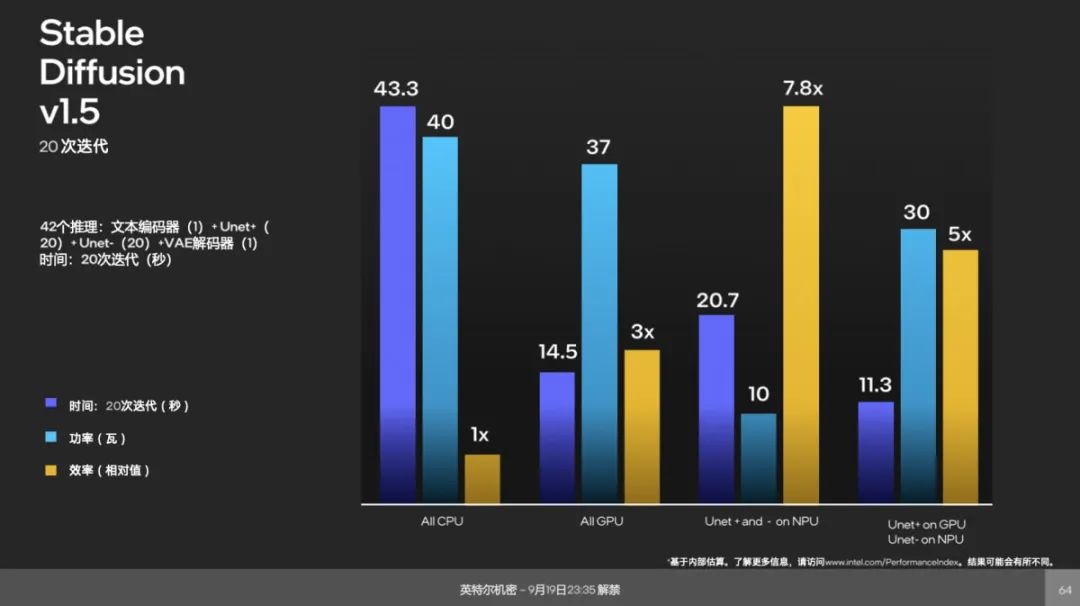

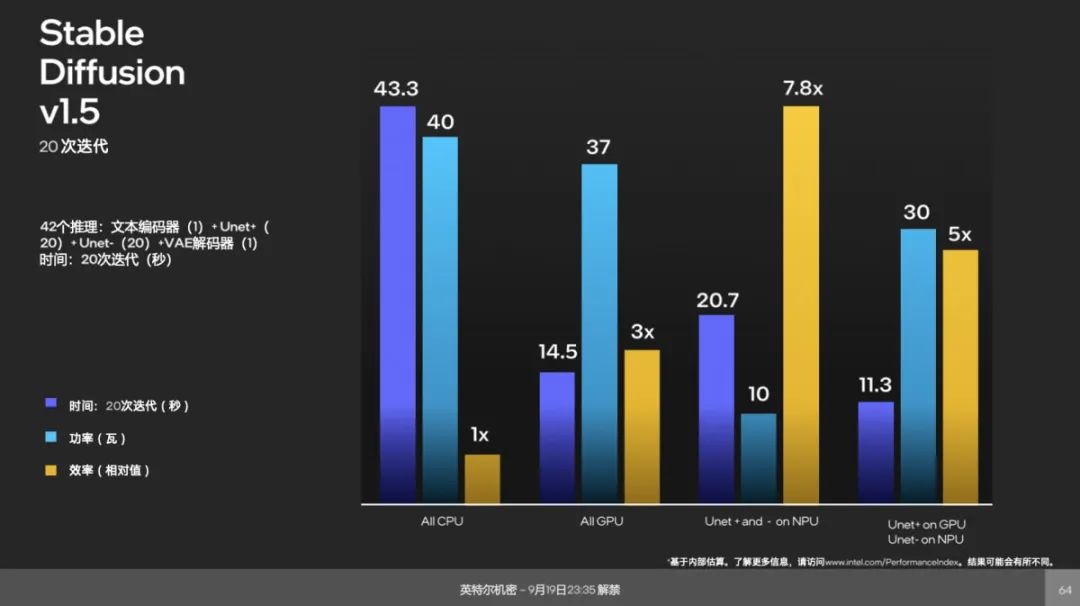

If this entire process runs on the CPU, the 20 iterations of Unet+ and Unet- take about 43 seconds at a power consumption of 40W; this energy efficiency is taken as the baseline of 1. On the GPU, the same work takes only 14.5 seconds at 37W.

If both Unet+ and Unet- run on the NPU (with the assumption that the GPU may assist with the encoder’s work), the entire process takes 20.7 seconds. While this is slower than running entirely on the GPU, the power consumption is only 10W, for an energy efficiency of 7.8 times the CPU baseline. Additionally, if the GPU runs Unet+ and the NPU runs Unet-, the process takes even less time, but the energy efficiency does not surpass that of the NPU-only configuration.
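The comparison can be checked with a back-of-the-envelope calculation. The sketch below assumes that energy is simply run time multiplied by the quoted power, and that efficiency is the CPU's energy divided by each unit's energy; the article does not say how Intel derived its own figure, so this is an approximation rather than Intel's method.

```python
# Figures quoted in the article: (seconds for 20 Unet+/- iterations, watts).
RUNS = {
    "CPU": (43.0, 40.0),
    "GPU": (14.5, 37.0),
    "NPU": (20.7, 10.0),
}


def energy_joules(seconds: float, watts: float) -> float:
    """Energy consumed, assuming constant power over the whole run."""
    return seconds * watts


def relative_efficiency(runs: dict, baseline: str = "CPU") -> dict:
    """Energy of the baseline divided by the energy of each run."""
    base = energy_joules(*runs[baseline])
    return {name: base / energy_joules(s, w) for name, (s, w) in runs.items()}


efficiency = relative_efficiency(RUNS)
for name, value in efficiency.items():
    joules = energy_joules(*RUNS[name])
    print(f"{name}: {joules:.1f} J, {value:.1f}x the CPU baseline")
```

This naive product puts the GPU at roughly 3.2 times and the NPU at roughly 8.3 times the CPU baseline. The NPU result is in the same range as, but not identical to, the 7.8 times Intel quoted, presumably because Intel's measurement covers details the article does not describe.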

Intel presented this example a few months ago; the scheduling is handled by OpenVINO, which deploys deep learning models across different processors. It is therefore very likely that the NPU on Meteor Lake is the VPU advertised earlier.

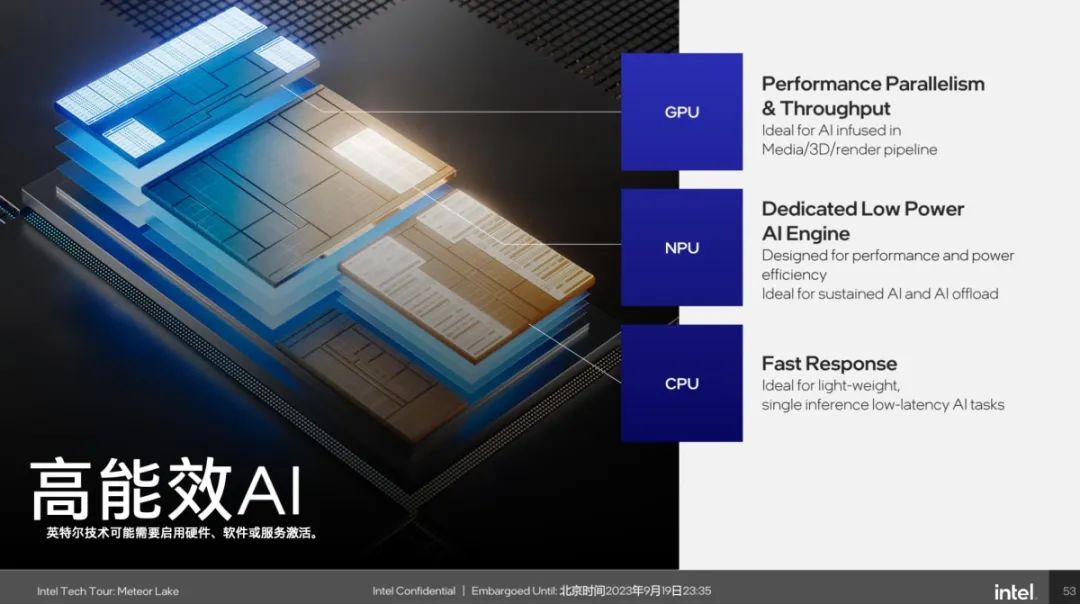

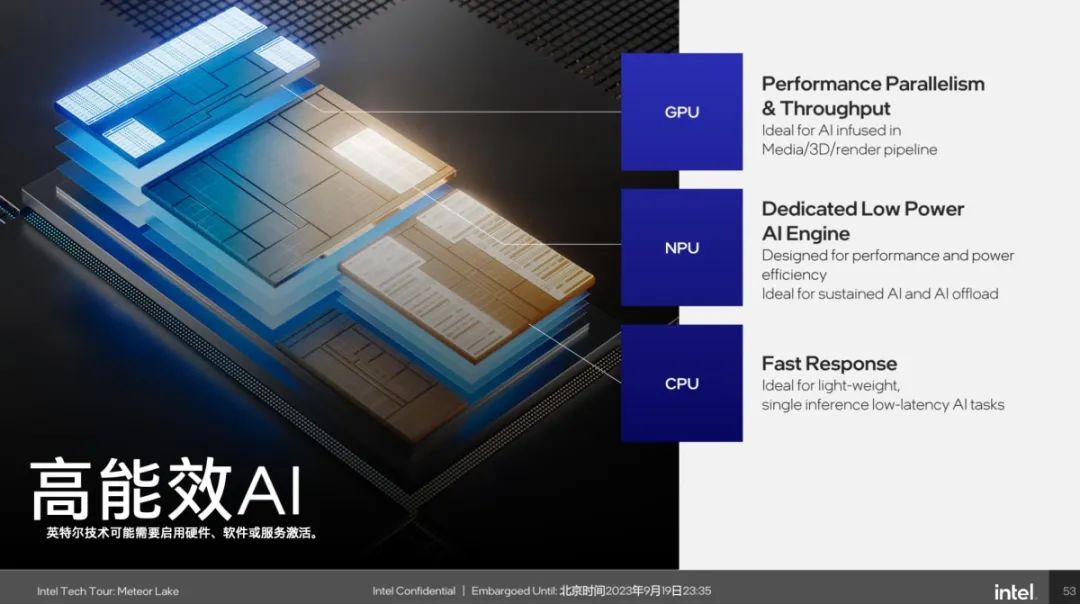

Based on Intel’s ongoing advocacy for the XPU strategy, the positioning of CPU, GPU, and NPU for edge AI inference is as follows:

In Intel’s view, all three units must participate in AI inference tasks: either through collaborative division of labor or by selecting the most suitable unit for specific tasks. Just last month, we reported on Intel’s various efforts to enable the CPU to run generative AI tasks.

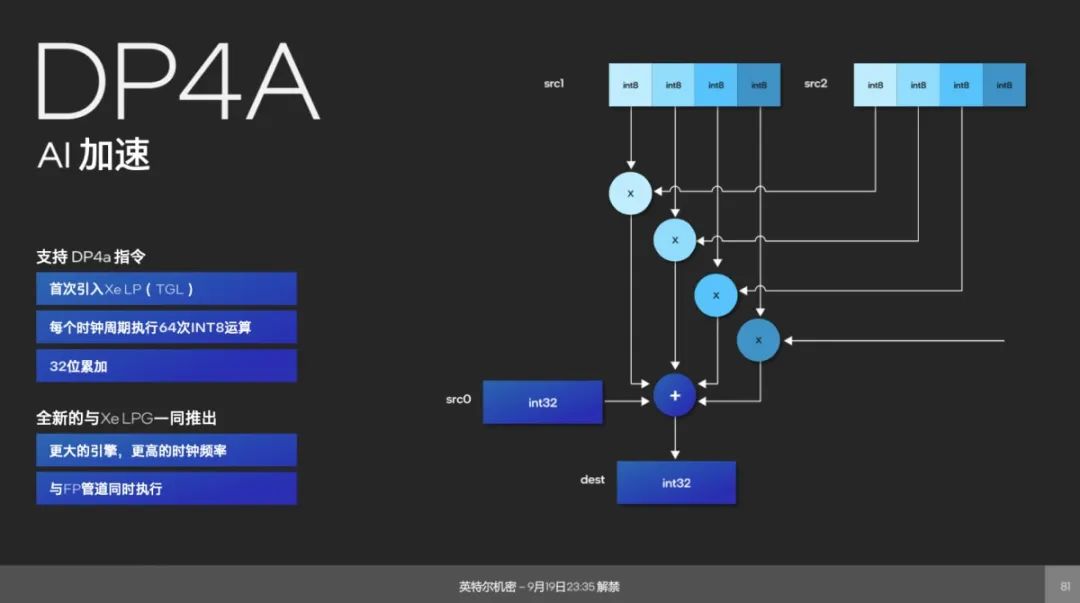

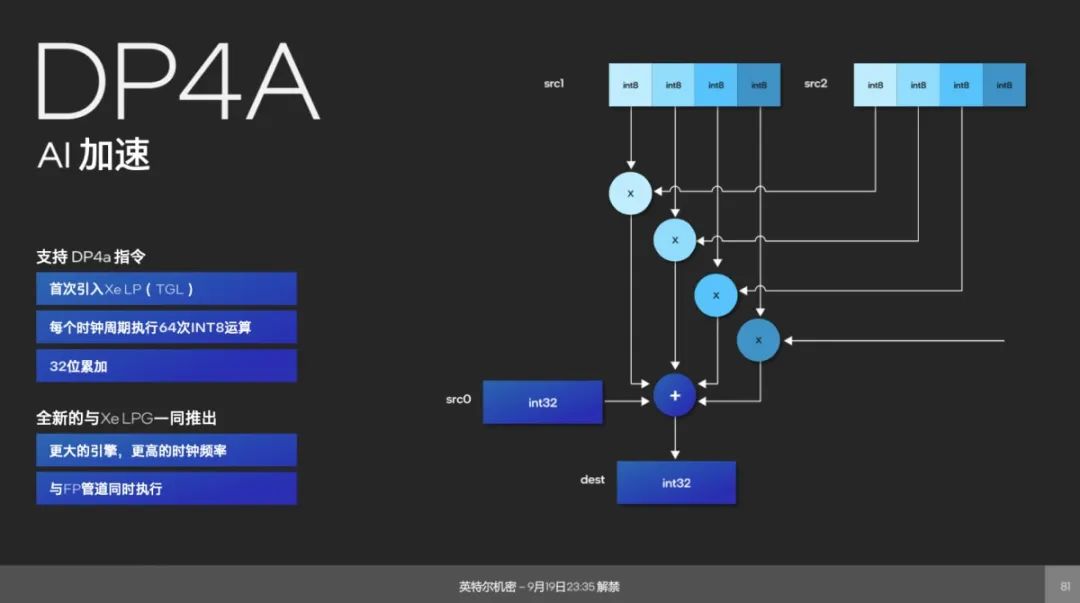

The GPU is suitable for AI tasks that require high concurrency and high throughput. Intel noted that the GPU supports DP4a instructions and executes 64 Int8 operations per cycle (see below). Based on the earlier overview of Meteor Lake’s key technologies, we can expect solid AI performance from its integrated graphics, since this generation’s vector engine is significantly improved over the previous one.
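For readers unfamiliar with DP4a, the instruction computes a dot product of four 8-bit values from each operand and adds it to a 32-bit accumulator. The following is a minimal emulation in Python, assuming the signed Int8 variant; the function names are illustrative, and Python integers do not wrap at 32 bits the way a hardware accumulator would.

```python
from typing import Sequence

INT8_MIN, INT8_MAX = -128, 127


def dp4a(a: Sequence[int], b: Sequence[int], acc: int = 0) -> int:
    """Emulate one DP4a: four int8 multiplies accumulated into acc."""
    if len(a) != 4 or len(b) != 4:
        raise ValueError("dp4a takes exactly four values per operand")
    for value in (*a, *b):
        if not INT8_MIN <= value <= INT8_MAX:
            raise ValueError(f"{value} does not fit in signed int8")
    return acc + sum(x * y for x, y in zip(a, b))


def dot_int8(a: Sequence[int], b: Sequence[int]) -> int:
    """Build a longer int8 dot product out of chained dp4a calls."""
    if len(a) != len(b) or len(a) % 4 != 0:
        raise ValueError("operands must have equal length, a multiple of 4")
    acc = 0
    for i in range(0, len(a), 4):
        acc = dp4a(a[i:i + 4], b[i:i + 4], acc)
    return acc


print(dp4a([1, 2, 3, 4], [5, 6, 7, 8], 10))  # 5 + 12 + 21 + 32 + 10
```

Because one instruction covers four multiply-accumulates, quantized Int8 networks get far more work done per cycle than they would with scalar arithmetic.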

The NPU is a low-power AI engine designed for continuous AI workloads. For example, during video conferencing, tasks such as eye contact correction, image super-resolution, or noise reduction are more suitable for the NPU. The CPU, known for its quick response, is ideal for lightweight, low-latency inference tasks.

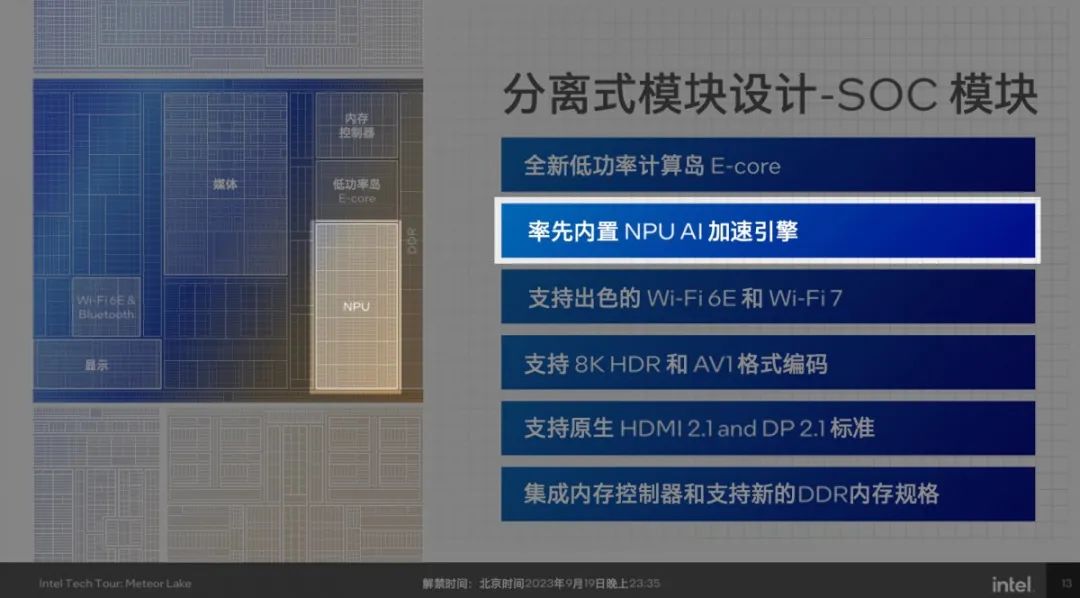

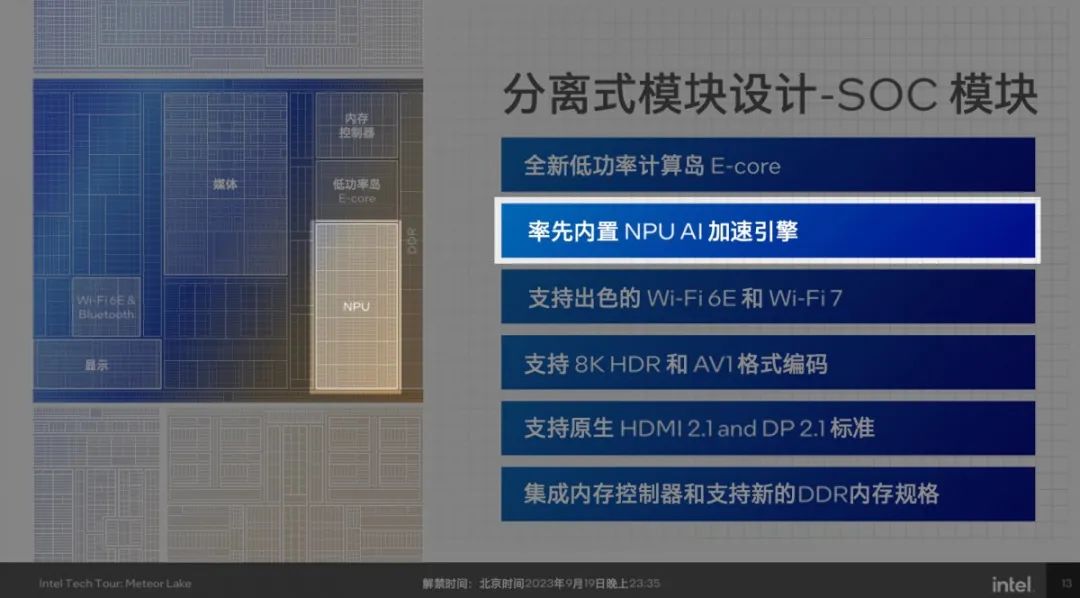

During this media briefing, Intel unusually provided details about the NPU architecture, although its exact scale remains unclear. The NPU is located on the SoC tile. Given that Intel refers to the SoC tile as a low-power island, this block is clearly designed for low power consumption and high energy efficiency.

NPU accelerators typically include MAC and precision conversion components. The main computational part consists of two Neural Compute Engines, which focus on parallel capabilities and can be relatively easily scaled in a modular fashion.

These two engines support Int8 and FP16 operations and are equipped with dedicated data conversion units that “support data type conversion and fusion operations for quantized networks” and “support output data re-layout”. At the hardware level, they “support various activation functions under FP precision, such as ReLU.”

The MAC array is used to support matrix multiplication and convolution operations, “supporting optimal data reuse to reduce power consumption,” with a single engine providing 2048 MAC/cycle. Each engine is paired with a DSP for handling higher-precision data.
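The per-cycle figure translates into peak throughput once a clock frequency is known. The sketch below uses the two engines and 2048 MAC/cycle quoted above and counts each MAC as two operations (a multiply and an add), which is a common convention. The clock frequency is not given in the article, so the 1 GHz value is purely a hypothetical placeholder.

```python
ENGINES = 2                        # Neural Compute Engines, per the article
MACS_PER_ENGINE_PER_CYCLE = 2048   # per the article
OPS_PER_MAC = 2                    # assumed convention: multiply + add

macs_per_cycle = ENGINES * MACS_PER_ENGINE_PER_CYCLE
ops_per_cycle = macs_per_cycle * OPS_PER_MAC


def peak_tops(clock_hz: float) -> float:
    """Theoretical peak in tera-operations per second at a given clock."""
    return ops_per_cycle * clock_hz / 1e12


HYPOTHETICAL_CLOCK_HZ = 1.0e9  # placeholder only, not an Intel specification
print(macs_per_cycle, ops_per_cycle, peak_tops(HYPOTHETICAL_CLOCK_HZ))
```

The result is a theoretical ceiling that scales linearly with clock frequency; sustained throughput depends on how well the data reuse in the MAC array keeps the engines fed.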

Other components include an MMU for accessing system memory, DMA for direct memory access, and on-chip storage resources (Scratchpad RAM). Running the convolution → ReLU → quantization sequence for networks such as ResNet-50 is a standard AI acceleration flow.
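The convolution, ReLU, and quantization sequence mentioned above can be illustrated with a tiny one-dimensional example. This is a minimal sketch in plain Python, assuming a symmetric per-tensor scale and signed Int8 output; the function names and values are illustrative, not the NPU's actual data path.

```python
from typing import List


def conv1d_int(signal: List[int], kernel: List[int]) -> List[int]:
    """Valid 1-D convolution (cross-correlation form) with wide accumulation."""
    taps = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(taps))
        for i in range(len(signal) - taps + 1)
    ]


def relu(values: List[int]) -> List[int]:
    """Clamp negative activations to zero."""
    return [max(0, v) for v in values]


def requantize(values: List[int], scale: float) -> List[int]:
    """Scale the wide accumulators and saturate them to signed int8."""
    return [max(-128, min(127, round(v * scale))) for v in values]


signal = [10, -20, 30, 40, -50]
kernel = [1, 2, -1]

accumulators = conv1d_int(signal, kernel)   # [-60, 0, 160]
activated = relu(accumulators)              # [0, 0, 160]
output = requantize(activated, scale=0.5)   # [0, 0, 80]
print(output)
```

Fusing these three steps lets the hardware keep the wide accumulators on chip and write back only the narrow Int8 result, which is one reason accelerators pair the MAC array with dedicated conversion units.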

Regarding the software stack, it is worth mentioning that Intel’s NPU driver complies with the Microsoft Compute Driver Model (MCDM) framework. As a result, the NPU appears directly in Windows Task Manager as a compute device, including its load status.

It is said that an AI Benchmark tool specifically for XPU is already on the way. Future PC processors will begin to incorporate AI inference capabilities.

AI Software Stack and Practical Applications on PC

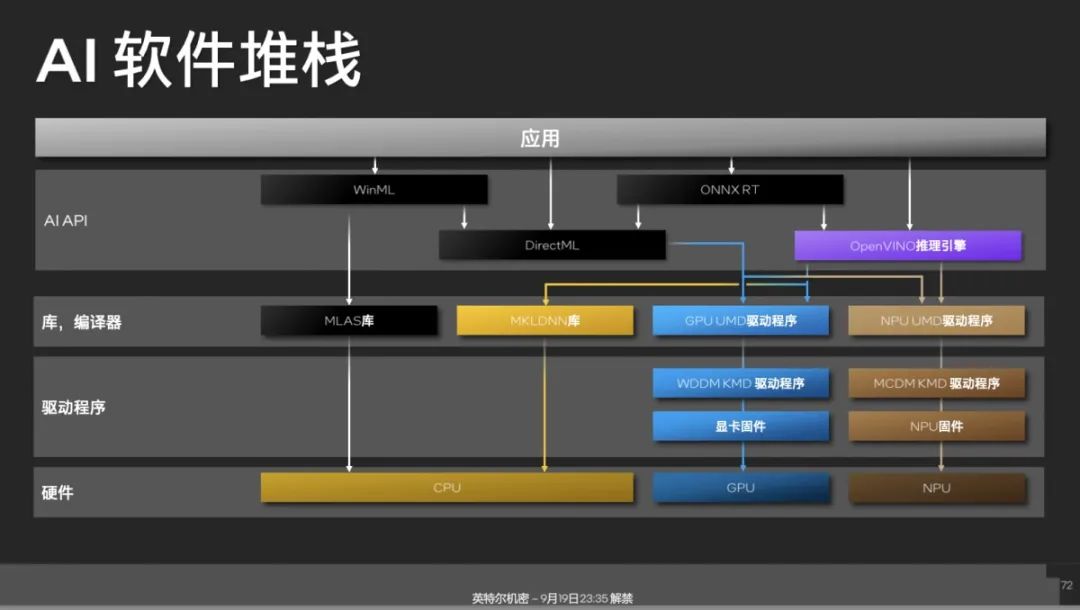

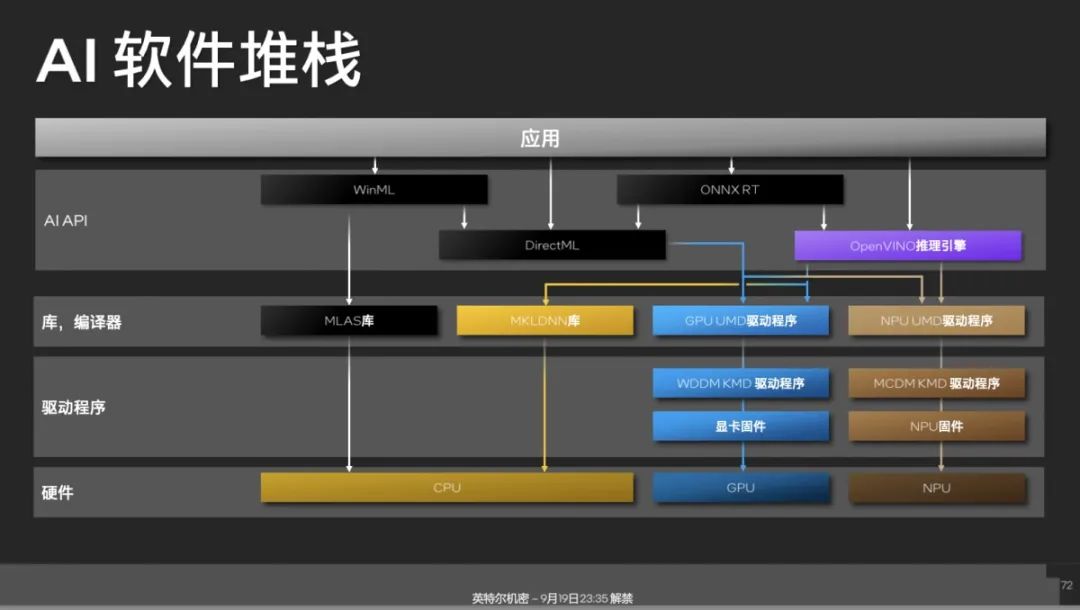

Here, I would like to take some space to discuss Intel’s software stack for the edge AI ecosystem. When developing AI applications on Windows, there are various API options available, including Microsoft’s WinML and the lower-level DirectML. Additionally, there are open-source options such as ONNX Runtime and Intel’s OpenVINO.

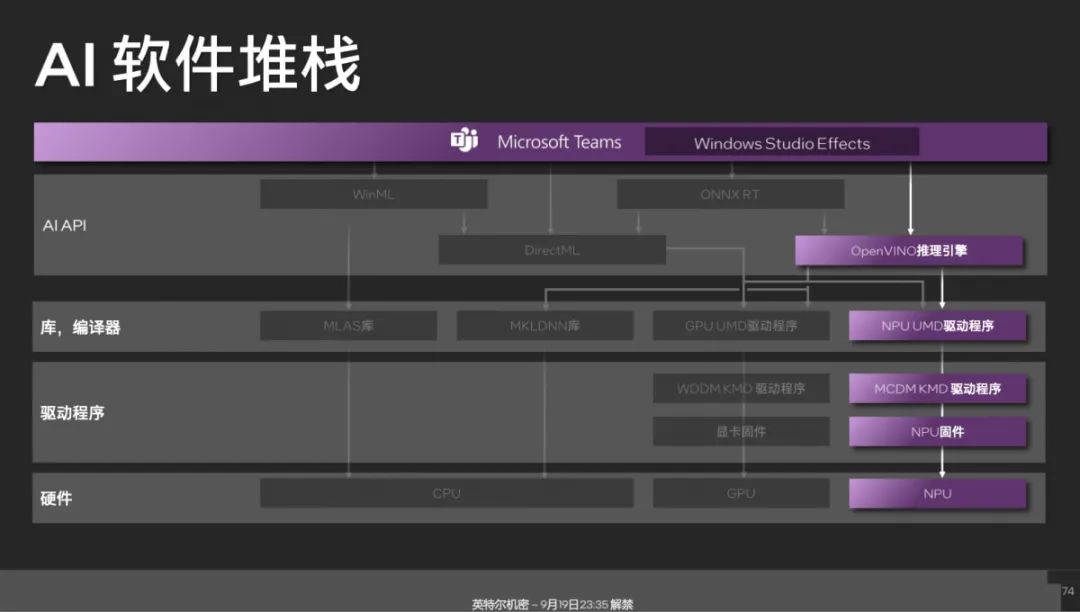

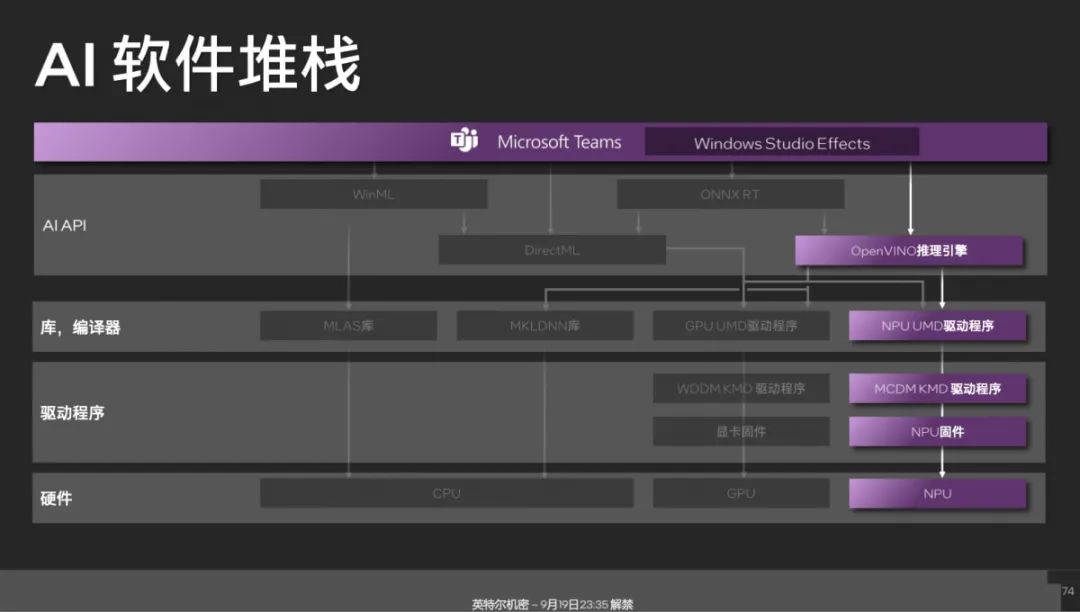

The figure also shows the middle-layer libraries and the device-specific driver layers. As an application case, Intel collaborated with Microsoft to create Windows Studio Effects, a virtual camera that performs AI processing on the original camera video, enabling background replacement, blurring, face tracking, etc. The lower layer of Windows Studio Effects is based on OpenVINO.

Application developers only need to call Windows Studio Effects to obtain these effects directly. For instance, Microsoft’s Teams uses Windows Studio Effects directly, allowing these AI effects to run on the NPU. The software stack path is roughly as follows:

Other developers, such as Adobe, prefer to use DirectML for various AI implementations in content creation. Intel mentioned that some video analysis and image applications have begun to rely heavily on OpenVINO. These points should serve as a reference for technical enthusiasts and developers; they are also important components in building the AI ecosystem.

There are more components related to the development ecosystem, which we have discussed extensively before, so I will not elaborate further. However, it is clear that by adding the NPU to Meteor Lake alongside the other XPUs, Intel aims to truly popularize AI technology at the edge, even though this ecosystem is still in its early stages.

“We currently have over 100 partners working on various client-side AI applications to enrich the user experience on PCs.” In terms of the CCG (Client Computing Group) business ecosystem, Intel primarily collaborates with Microsoft.

The NPU currently supports the following neural networks and applications:

“We feel that the development of edge AI is progressing rapidly. By leveraging the XPU capabilities introduced with Meteor Lake, we enable products to better support the entire ecosystem, allowing developers to introduce some AI workloads on PCs, thereby enhancing the user experience.”

The question of which applications an edge NPU should run is not easy to answer; even Apple has been working on NPUs for a long time, and the AI ecosystem on the Mac is still not very rich. This time, however, Intel is taking an open-source approach to building its AI ecosystem. What remains to be seen is whether developers can create blockbuster applications on Core processors that become essential for PC users.