The competitive landscape of the AI server market is quietly changing. A recent report from Nomura Securities finds that as cloud service giants like Google, Amazon AWS, and Meta accelerate development of in-house ASICs (Application-Specific Integrated Circuits), the AI server market, long dominated by NVIDIA, is shifting from “general-purpose GPUs” toward “custom ASICs.”

Current Market Situation: NVIDIA Remains Dominant, but ASIC Shipments May Overtake It Next Year

NVIDIA is still the clear leader in the AI server market. Statistics show that its AI GPUs (Graphics Processing Units) account for over 80% of the market, while ASIC chips account for only 8%-11%. Measured by shipment volume, however, the gap is closing quickly.

For example, in 2025 Google’s in-house TPU is expected to ship 1.5 to 2 million units, while Amazon AWS’s Trainium 2 ASIC is projected at around 1.4 to 1.5 million units. Together, these two would amount to roughly 40%-60% of NVIDIA’s AI GPU shipments (5 to 6 million units) over the same period. With Meta expected to produce 1 to 1.5 million MTIA chips in 2026 and Microsoft planning large-scale ASIC deployment from 2027, overall ASIC shipments are likely to surpass NVIDIA’s GPU shipments as early as 2026.
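As a rough check on the shipment comparison above, the ratio can be worked out directly from the quoted ranges. The following is a minimal sketch, not part of the Nomura report: the variable and function names are hypothetical, and it assumes the widest pairing of low and high estimates. Under that assumption the combined TPU and Trainium 2 volume comes to about 48% to 70% of NVIDIA’s GPU shipments, a somewhat wider span than the rounded 40%-60% quoted, which presumably reflects the report’s own pairing of estimates.

```python
# Shipment estimates quoted above, in millions of units (low, high).
google_tpu = (1.5, 2.0)
aws_trainium2 = (1.4, 1.5)
nvidia_gpu = (5.0, 6.0)


def add_ranges(a, b):
    """Add two (low, high) ranges element-wise."""
    return (a[0] + b[0], a[1] + b[1])


def ratio_range(numerator, denominator):
    """Widest (low, high) ratio of two (low, high) ranges.

    Assumption: the lowest ratio pairs the low numerator with the high
    denominator, and the highest ratio pairs high with low.
    """
    return (numerator[0] / denominator[1], numerator[1] / denominator[0])


combined = add_ranges(google_tpu, aws_trainium2)
low, high = ratio_range(combined, nvidia_gpu)

print(f"TPU + Trainium 2: {combined[0]:.1f}M to {combined[1]:.1f}M units")
print(f"Share of NVIDIA GPU shipments: {low:.0%} to {high:.0%}")
```

The same helpers can be reused to add Meta’s 2026 MTIA range, though NVIDIA’s 2026 shipment figure is not given in the text and would have to be supplied separately.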

This trend is driven by the urgent need for cloud service providers to “reduce costs and increase efficiency.” Compared to general-purpose GPUs, ASIC chips can be customized for specific AI tasks (such as large model training and inference), offering advantages in computing efficiency and power consumption. For cloud service providers like Google and AWS, which handle massive amounts of data daily, developing their own ASICs not only reduces dependence on NVIDIA but also enhances service cost-effectiveness through hardware optimization.

ASIC Competition: Technical Breakthroughs and Real-World Challenges Coexist

Companies like Google, AWS, and Meta have entered the “deep water” of ASIC development. Google’s TPU, for example, has been deeply optimized in its latest version for the Transformer architecture (the core technology of large models), improving computational efficiency by over 30% compared to the previous generation. Amazon AWS’s Trainium 2 focuses on distributed training, supporting parallel computation for models with hundreds of billions of parameters. In specific scenarios, these features allow the chips to approach or even surpass the performance of NVIDIA’s A100 GPU.

However, the large-scale deployment of ASICs is not without its challenges.

First is the capacity bottleneck. For instance, Meta’s planned mass production of the MTIA chip in 2026 relies on TSMC’s CoWoS (Chip-on-Wafer-on-Substrate) packaging technology, but the CoWoS wafer capacity currently available can support only 300,000 to 400,000 units, far below its target of 1 to 1.5 million units. If Google, AWS, Microsoft, and others expand production at the same time, CoWoS capacity may become a key constraint on ASIC scaling.
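To make the size of this gap concrete, the figures above can be turned into a coverage ratio and a unit shortfall. This is a minimal sketch using only the numbers quoted in the paragraph; the variable names are hypothetical, and it assumes the capacity and target ranges can be compared directly on a per-unit basis.

```python
# Figures quoted above, in chip units (low, high).
cowos_supported_units = (300_000, 400_000)
mtia_target_units = (1_000_000, 1_500_000)

# Worst case: lowest capacity against the highest target.
coverage_low = cowos_supported_units[0] / mtia_target_units[1]
# Best case: highest capacity against the lowest target.
coverage_high = cowos_supported_units[1] / mtia_target_units[0]

# Shortfall in units for the best and worst cases.
shortfall_min = mtia_target_units[0] - cowos_supported_units[1]
shortfall_max = mtia_target_units[1] - cowos_supported_units[0]

print(f"Capacity covers {coverage_low:.0%} to {coverage_high:.0%} of the target")
print(f"Shortfall: {shortfall_min:,} to {shortfall_max:,} units")
```

Even in the best case under these assumptions, the supported volume covers well under half of the stated target.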

Second, there are technical barriers. Large CoWoS packages (like the CoWoS-S used in NVIDIA’s H100) place very high demands on chip design and material consistency, and system debugging takes 6 to 9 months, in line with the validation time NVIDIA needs for similar systems. Even mature companies like Google and AWS must invest significant resources to address issues like heat dissipation and signal interference. In addition, the “specialization” of ASICs limits the scenarios they can serve: if AI model architectures move on (for example, from Transformer to a new architecture), ASICs already deployed risk becoming “obsolete.”

NVIDIA’s “Moat”: A Triple Barrier of Technology, Ecosystem, and Supply Chain

Faced with the ASIC challenge, NVIDIA has not responded passively; it has reinforced its advantages through technological iteration and a stronger ecosystem. At COMPUTEX 2025, it launched NVLink Fusion, which opens up its interconnect protocol so that third-party CPUs or xPUs can work seamlessly with NVIDIA GPUs. This strategy may look like a “compromise,” but it actually expands ecosystem coverage through open interfaces while preserving NVIDIA’s dominance in the computing core (GPUs).

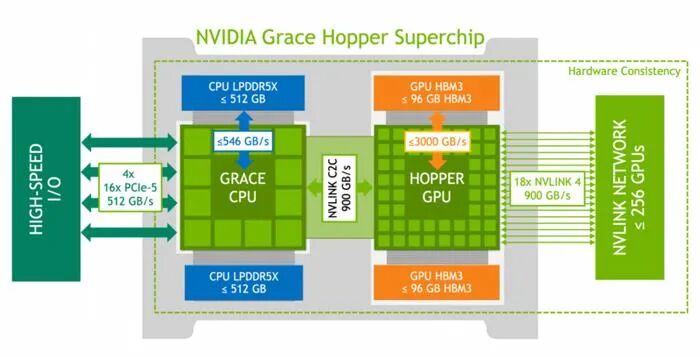

A deeper barrier lies in technological accumulation. NVIDIA still leads the industry in chip computing density (computational power per unit area) and in NVLink interconnect technology (communication efficiency between chips). For example, the H100 GPU has a computing density about 20% higher than that of contemporaneous ASIC chips, and NVLink’s interconnect bandwidth is more than 1.5 times that of in-house ASICs. This performance advantage makes it hard to replace in complex tasks like training large models.

More critically, the CUDA ecosystem has a strong “network effect.” Over 90% of global enterprise AI solutions are built on CUDA, creating a path dependency for developers from model training through deployment. Even if ASIC computing power approaches that of NVIDIA GPUs, companies would need to invest heavily to rebuild their software ecosystems, and this “conversion cost” is NVIDIA’s deepest moat.

Jensen Huang has also stated that NVIDIA’s growth rate will continue to exceed that of ASICs. His reasoning is that many ASIC projects will emerge but about 90% will fail, just as many startups emerge but most ultimately fail. Even those that escape this fate may struggle to sustain themselves over the long term.

Future Outlook: Short-Term Differentiation, Long-Term Coexistence?

In the short term, the rise of ASICs is more an “incremental supplement” than a “replacement of existing stock.” Cloud service providers, weighing cost and efficiency, will introduce ASICs in specific scenarios (such as large model training and edge inference), but general-purpose GPUs will remain the first choice for most enterprises. NVIDIA’s advantages in technology, ecosystem, and supply chain secure its dominance in the high-end training market (such as models with hundreds of billions of parameters).

In the long term, the market may settle into a “layered competition” pattern: NVIDIA continues to lead the general AI computing power market, while ASICs gain advantages in vertical scenarios (such as industry-specific model fine-tuning and low-latency inference). For sovereign AI (AI systems that individual countries control independently), some nations constrained by NVIDIA’s supply may accelerate development of their own ASICs, but this requires overcoming multiple barriers in technological accumulation, talent, and ecosystem building, so it is unlikely to shake NVIDIA’s global position in the short term.

Conclusion

The rise of ASICs is essentially a reflection of the AI industry’s evolution from “general computing power” to “specialized efficiency.” The entry of companies like Google, AWS, and Meta has diversified the market, but NVIDIA, with its deep barriers in technology, ecosystem, and supply chain, remains the “rule maker” in this competition. In the future, the competition in the AI server market may present a dual-track parallel pattern of “general-purpose GPUs + custom ASICs”—the former supporting cutting-edge exploration and the latter meeting scenario implementation, both driving the popularization and deepening of AI technology.
