Source: ZHUAN ZHI


This article presents SparseLoRA, a method to accelerate the fine-tuning of large language models through contextual sparsity.

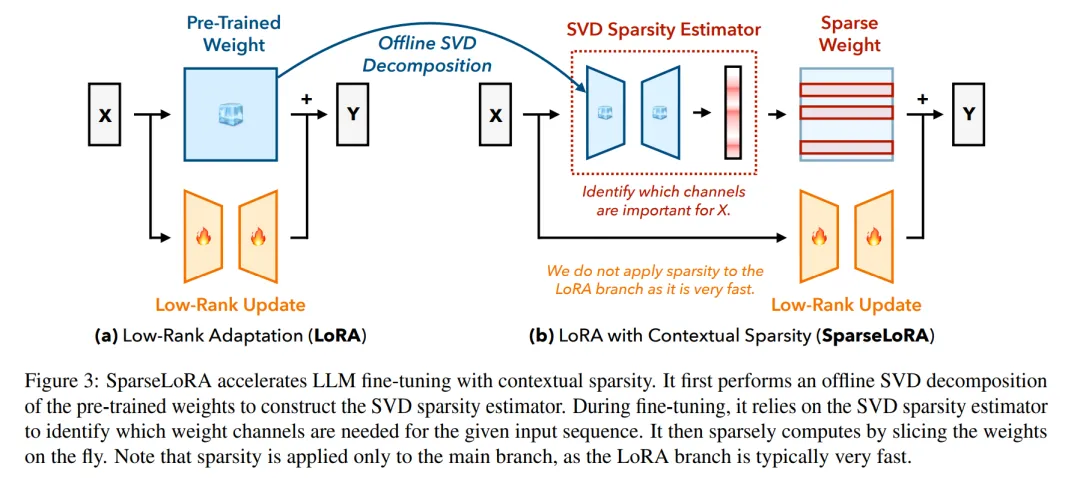

Fine-tuning large language models (LLMs) is both compute- and memory-intensive. Parameter-efficient fine-tuning methods such as QLoRA and DoRA reduce the number of trainable parameters and lower memory usage, but they do not reduce computational cost and in some cases even slow fine-tuning down. SparseLoRA accelerates LLM fine-tuning through contextual sparsity. It uses a lightweight, training-free SVD sparsity estimator that dynamically selects a sparse subset of weights for loss and gradient computation. The authors also systematically analyze and address sensitivity across layers, tokens, and training steps. Experiments show that SparseLoRA reduces computational cost by up to 2.2× and achieves a measured speedup of up to 1.6×, while maintaining accuracy across downstream tasks including commonsense and arithmetic reasoning, code generation, and instruction following.
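To make the idea of an SVD-based sparsity estimator more concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the function names (`build_estimator`, `select_channels`, `sparse_linear`), the choice of scoring output channels by the norm of a low-rank approximation, and the `keep_ratio` parameter are all assumptions made for illustration. The summary above does not specify how SparseLoRA scores or selects weights.

```python
import numpy as np

def build_estimator(weight, rank):
    """Factor a (d_out, d_in) weight into low-rank pieces via truncated SVD.

    This is done once, offline, and needs no training (assumed setup).
    """
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    a = u[:, :rank] * s[:rank]   # (d_out, rank), singular values folded in
    b = vt[:rank, :]             # (rank, d_in)
    return a, b

def select_channels(x, a, b, keep_ratio):
    """Pick the output channels with the largest estimated activation norm.

    The estimate uses only the cheap low-rank factors, so the selection
    depends on the current input batch (contextual sparsity).
    """
    approx = (x @ b.T) @ a.T                  # (tokens, d_out), low-rank estimate
    scores = np.linalg.norm(approx, axis=0)   # one score per output channel
    k = max(1, int(round(keep_ratio * scores.shape[0])))
    return np.sort(np.argsort(scores)[-k:])   # indices of the top-k channels

def sparse_linear(x, weight, idx):
    """Compute only the selected output channels; the rest stay zero."""
    out = np.zeros((x.shape[0], weight.shape[0]), dtype=x.dtype)
    out[:, idx] = x @ weight[idx].T
    return out

# Toy example with random data (illustrative sizes only).
rng = np.random.default_rng(0)
weight = rng.standard_normal((64, 32))   # d_out=64, d_in=32
x = rng.standard_normal((8, 32))         # 8 tokens

a, b = build_estimator(weight, rank=4)
idx = select_channels(x, a, b, keep_ratio=0.5)
y_sparse = sparse_linear(x, weight, idx)
y_dense = x @ weight.T
```

In this toy setup the estimator costs roughly `rank * (d_in + d_out)` multiply-adds per token (4 × 96 = 384) compared with `d_in * d_out` (2048) for the dense layer, which is why a low-rank estimator can be cheap enough to run at every step. The selected channels match the dense output exactly; only the skipped channels are approximated as zero.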

