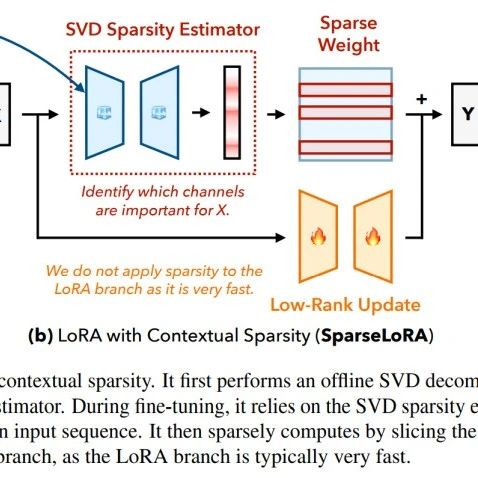

SparseLoRA: Accelerating Large Language Model Fine-Tuning Using Contextual Sparsity

Source: ZHUAN ZHI

This article is approximately 1,000 words long and is recommended for a 5-minute read.

This article presents SparseLoRA, a method to accelerate the fine-tuning of large language models through contextual sparsity.

Fine-tuning large language models (LLMs) is often both computationally intensive and memory-consuming. While parameter-efficient fine-tuning methods such as QLoRA and DoRA …