Guests | Zhang Yu, Duan Xiongchun, Song Muchun, Xie Yongji, Deng Liang

Author | Ling Min

With the rapid evolution of the internet, many star products have risen to prominence; behind the spotlight, however, a group of people quietly safeguards the stability of the internet world. Today, we introduce some of these behind-the-scenes guardians.

The decade from 2012 to 2022 saw rapid expansion of ByteDance’s product line and rapid growth of its infrastructure. Behind this growth stands a team that quietly ensures the stability of ByteDance’s upper-layer businesses: the STE team of ByteDance’s System Department.

The STE team began to take shape as early as 2015, working mainly in a problem-driven way to provide technical support for ByteDance’s internal infrastructure and software systems. In 2017, as user numbers for popular products exploded and Douyin became a phenomenon-level app, ByteDance’s internal server fleet grew ever larger, and system maintenance became a top priority.

In 2018, the team was officially named STE (System Technologies & Engineering). Today, it has grown from its initial 20 members to several hundred, with R&D centers in the UK, the US, and other locations. Its technical scope has also widened from operating system kernels to server firmware, compiler technology, system virtualization, host networking, and intelligent system operations, supporting the business with foundational software engineering capabilities.

In this interview, InfoQ spoke with several core members of the STE kernel team about their technical practice and experience in optimizing the Linux kernel, and about the value this work brings to the business. We also got a glimpse of the spirit and culture of a team that builds infrastructure and uses technology to build walls in “invisible places.”

1 Focus on Linux Kernel: A Team of Post-90s

The STE kernel team provides Linux kernel services to all of ByteDance’s internal businesses and is mainly responsible for memory management, process scheduling, virtualization, and networking. According to Duan Xiongchun, head of the STE kernel team, when the STE team first took shape in 2015 there were already developers working on the kernel. After STE was officially established in 2018, kernel work became one of its sub-teams and gradually gained dedicated developers responsible for fixing Linux kernel issues and for adding and maintaining new features.

We noticed that this is a very young team: most of its kernel maintainers are post-90s (born in the 1990s), which is quite rare. Linus Torvalds, the creator of Linux, once lamented, “Most current maintainers are from the 50s and 60s, and the community faces a generational update problem.” He has also worried that once the current generation of kernel maintainers ages, it will be hard to find successors, because many young developers find the “Linux kernel project not that interesting.”

Zhang Yu, head of the STE team, who has worked in system technology for many years, agreed: “Talent focused on foundational software and operating system kernels is becoming increasingly scarce, and there are two extremes: developers with a solid computer science foundation who lack interest in kernel development, and developers who are very interested in the kernel but lack the foundation. People with both are very rare. For our kernel team, youth is an advantage; it is this obsession with and love for kernel technology that brings us together.”

Bringing fresh blood into the team is not difficult; the challenge is encouraging these young people to be bold and persistent in the Linux kernel direction. Zhang Yu summed up his approach in one word: inspire. Give young people a platform to spark their interest, and give them opportunities to fuel their ambition. “We also have many senior developers, but they prefer to leave good opportunities to young people. This mentoring tradition lets young developers step into the spotlight and gain confidence. Otherwise, if young people receive no encouragement and no inspiration, it will be hard for them to achieve results and go far on this arduous path,” Zhang Yu said.

In this culture, the STE kernel team has built on the Linux kernel to produce substantial, hardcore results in virtualization, cloud-native, eBPF, and other directions. For example, in September 2020, the team submitted the HVO solution to the Linux kernel community to address redundant memory management overhead in the kernel.

2 Addressing Linux Kernel Memory Management Redundancy: A Long-standing Industry Challenge

The Linux kernel has been under development for more than 30 years since Linus Torvalds released the first version in 1991. The first version reportedly had only 10,250 lines of code and took up 65 KB; today, the kernel exceeds 27 million lines of code.

Every year, millions of lines of code are added to or removed from the Linux kernel, bringing in more and more features. A project this large and complex inevitably runs into challenges in different business scenarios.

The Linux operating system runs on all of ByteDance’s servers. The STE kernel team found that some cloud computing scenarios incur extra memory management overhead, and this loss grows with the size of the server fleet.

“The Linux kernel manages physical memory in pages (generally 4 KB), and each 4 KB page has a corresponding struct page. Using one struct page to manage one page is not a problem in itself. However, when the pages in use are 2 MB or even 1 GB, the kernel still keeps a struct page for every 4 KB within them, which clearly wastes memory. My goal is to minimize this redundant memory management overhead,” said STE engineer Song Muchun. Song joined the ByteDance STE kernel team in July 2019. Over the following three years he grew with the team from a Linux kernel developer into a Linux kernel maintainer, and he became the maintainer of two core submodules in the Linux kernel community: HugeTLB and Memory Cgroup.

In fact, redundant memory management overhead in the Linux kernel is not a new issue; it has existed in the industry for more than a decade. Many companies have studied it without finding a solution. Even so, the STE kernel team wanted to try. After repeated discussions and feasibility checks of different approaches, the team concluded that the problem could potentially be solved.

“Certain scenarios use huge pages. For a 2 MB huge page, the 512 struct page structures that describe it occupy 8 pages, or 8×4 KB, of memory. We want them to end up occupying only one physical page (4 KB). Our solution is reuse: map the remaining 7 virtual pages onto that single physical page. If the solution works, a server with 1 TB of memory can save nearly 16 GB. Even a 1% optimization brings significant benefits to the company,” said Song Muchun.
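To make the arithmetic behind this quote concrete, here is a small Python sketch. It assumes 4 KiB base pages, a 64-byte struct page (typical of x86-64 builds, but configuration-dependent), and an HVO-style scheme that keeps `kept_pages` vmemmap pages per huge page and remaps the rest onto them. The function names are illustrative only, not kernel APIs.

```python
# Back-of-the-envelope model of vmemmap (struct page) overhead for HugeTLB
# pages, and of what HVO-style remapping could free.
# Assumptions: 4 KiB base pages, 64-byte struct page, and `kept_pages`
# vmemmap pages retained per huge page (the rest remapped onto them).

BASE_PAGE = 4 * 1024   # bytes per base page
STRUCT_PAGE = 64       # bytes per struct page (assumed)
MiB, GiB, TiB = 1 << 20, 1 << 30, 1 << 40


def vmemmap_pages_per_hugepage(huge_size: int) -> int:
    """Number of 4 KiB pages holding the struct pages of one huge page."""
    struct_pages = huge_size // BASE_PAGE  # one struct page per 4 KiB
    return struct_pages * STRUCT_PAGE // BASE_PAGE


def hvo_saving(memory: int, huge_size: int, kept_pages: int = 1) -> int:
    """Bytes of vmemmap freed if all of `memory` is backed by huge pages."""
    per_huge = vmemmap_pages_per_hugepage(huge_size)
    freed_per_huge = (per_huge - kept_pages) * BASE_PAGE
    return (memory // huge_size) * freed_per_huge


if __name__ == "__main__":
    for size, label in ((2 * MiB, "2 MiB"), (1 * GiB, "1 GiB")):
        n = vmemmap_pages_per_hugepage(size)
        saved = hvo_saving(1 * TiB, size)
        print(f"{label}: {n} vmemmap pages per huge page, "
              f"{saved / GiB:.3f} GiB saved per 1 TiB")
    # Prints:
    # 2 MiB: 8 vmemmap pages per huge page, 14.000 GiB saved per 1 TiB
    # 1 GiB: 4096 vmemmap pages per huge page, 15.996 GiB saved per 1 TiB
```

Under these assumptions, the whole struct page array for 1 TiB is 16 GiB. Backing all of it with 1 GiB pages frees just under 16 GiB, while 2 MiB pages free 14 GiB. For 2 MiB pages, going from `kept_pages=2` to `kept_pages=1` frees one more of the eight vmemmap pages, a 12.5% step in the same arithmetic.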

This solution is called HVO (HugeTLB Vmemmap Optimization). With the design settled, the next step was code research.

Memory management is one of the most complex and central parts of the Linux kernel. It is intertwined with many other modules, so its stability directly affects the stability of the kernel as a whole. The STE kernel team therefore had to keep the code touched by the solution as small as possible and make sure it did not affect other functions in the system. From April 2020 to June 2021, the team spent more than a year on code research, development, testing, and refactoring.

Is it worth spending a year to solve a technical problem? Duan Xiongchun gave a positive answer.

“We weighed the value of this work. We were under a lot of pressure, of course, but truly difficult problems need more time. We had to do it. Out of our passion for technology, we often got together on weekends to discuss and think things through, then took those ideas into real scenarios to refine and optimize them. It is a long-term process, and these breakthroughs can bring enormous benefits to the company and the industry, which makes it worthwhile,” he said.

At the same time, HVO has also received widespread recognition in the industry: Huawei, Google, AWS, and Oracle are preparing to implement this solution, and some companies have sent thank-you letters to the team. “But this is not the end; we will continue to optimize the HVO solution,” Duan Xiongchun said.

3 New Explorations in Linux Kernel Cloud-Native Technology

Device virtualization technology, as one of the most important foundational technologies in the cloud computing field, has been steadily evolving over the years. Among them, virtio and VFIO have been the mainstream device virtualization technologies, incorporated into the Linux kernel mainline in 2008 and 2012, respectively. To combine the advantages of virtio and VFIO, the vDPA (Virtio Data Path Acceleration) technology framework was incorporated into the Linux kernel mainline in 2020.

Meanwhile, the cloud-native process within ByteDance is also underway.

ByteDance began promoting cloud-native internally as early as 2016, containerizing its businesses at large scale. By the end of 2021, more than 95% of ByteDance’s applications had completed the cloud-native transformation. Along the way, the STE kernel team found that, for certain I/O-related solutions, containers are more limited than traditional virtualization. “At that time, we hoped to provide a framework in the Linux kernel that developers could use to emulate various devices that containers could access directly. This would make up for the I/O shortcomings of container-based cloud-native solutions and even allow some technical reuse with relatively mature virtualization solutions,” STE engineer Xie Yongji told InfoQ.

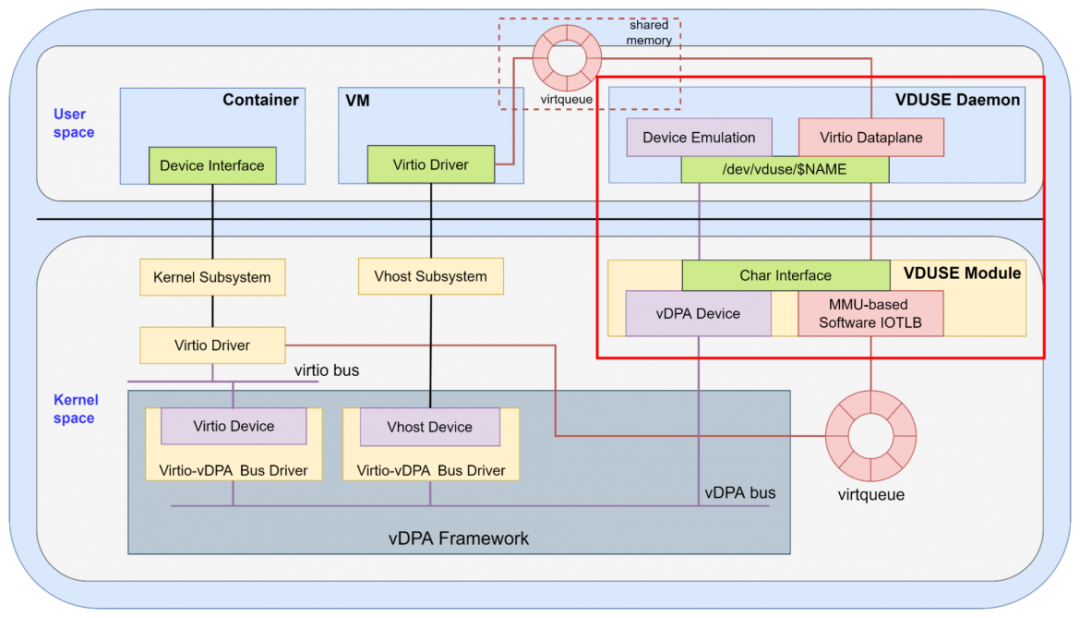

Thus the VDUSE (vDPA Device in Userspace) framework was born. With VDUSE, developers can implement a software-defined vDPA device in a userspace process and attach it to the virtio or vhost subsystem for use by containers or virtual machines.

Here is how it works. A VDUSE device is created with the VDUSE_CREATE_DEV ioctl on /dev/vduse/control. Through this ioctl, userspace specifies basic configuration for the emulated device, such as the device name (a unique identifier for the VDUSE device), virtio features, virtio configuration space, and number of virtqueues. The kernel then exports a character device (/dev/vduse/$NAME) to userspace for device emulation. Userspace can use the VDUSE_VQ_SETUP ioctl on /dev/vduse/$NAME to configure each virtqueue, for example its maximum size.
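The creation step can be sketched from Python with the standard `struct`, `fcntl`, and `os` modules. This is a hedged sketch. The struct layouts and ioctl numbers below are transcribed from our reading of `include/uapi/linux/vduse.h` in Linux 5.15 and should be checked against the headers on your system. The helper names (`pack_dev_config`, `pack_vq_config`, `issue_ioctl`) are hypothetical. Actually issuing the ioctls needs root and the `vduse` module loaded, and the kernel validates the feature bits and config you pass.

```python
import fcntl
import os
import struct

# Hypothetical helpers. Layouts and ioctl numbers are transcribed from our
# reading of include/uapi/linux/vduse.h (Linux 5.15); verify them locally.

_IOC_WRITE = 1  # asm-generic/ioctl.h direction bit: userspace writes


def _iow(type_: int, nr: int, size: int) -> int:
    """Encode _IOW(type, nr, size) using the asm-generic ioctl layout."""
    return (_IOC_WRITE << 30) | (size << 16) | (type_ << 8) | nr


VDUSE_BASE = 0x81
VDUSE_NAME_MAX = 256

# struct vduse_dev_config: name[256], vendor_id, device_id, features (u64),
# vq_num, vq_align, reserved[13], config_size, then config[] bytes.
DEV_CONFIG_FMT = "=256sIIQII13II"
# struct vduse_vq_config: index (u32), max_size (u16), reserved[13] (u16).
VQ_CONFIG_FMT = "=IH13H"

VDUSE_CREATE_DEV = _iow(VDUSE_BASE, 0x02, struct.calcsize(DEV_CONFIG_FMT))
VDUSE_VQ_SETUP = _iow(VDUSE_BASE, 0x14, struct.calcsize(VQ_CONFIG_FMT))


def pack_dev_config(name: str, device_id: int, features: int, vq_num: int,
                    vq_align: int, config: bytes, vendor_id: int = 0) -> bytes:
    """Build the VDUSE_CREATE_DEV argument: fixed header + config space."""
    raw = name.encode()
    if not 0 < len(raw) < VDUSE_NAME_MAX:
        raise ValueError("name must be 1..255 bytes")
    header = struct.pack(DEV_CONFIG_FMT, raw, vendor_id, device_id, features,
                         vq_num, vq_align, *([0] * 13), len(config))
    return header + config


def pack_vq_config(index: int, max_size: int) -> bytes:
    """Build the VDUSE_VQ_SETUP argument for one virtqueue."""
    return struct.pack(VQ_CONFIG_FMT, index, max_size, *([0] * 13))


def issue_ioctl(path: str, request: int, arg: bytes) -> None:
    """Open a VDUSE node and issue one write-direction ioctl (needs root)."""
    fd = os.open(path, os.O_RDWR)
    try:
        fcntl.ioctl(fd, request, arg)
    finally:
        os.close(fd)


# Usage on a real system (device_id 2 is virtio-blk; features are placeholders):
# cfg = pack_dev_config("vduse-demo", device_id=2, features=..., vq_num=1,
#                       vq_align=4096, config=blk_config_bytes)
# issue_ioctl("/dev/vduse/control", VDUSE_CREATE_DEV, cfg)
# issue_ioctl("/dev/vduse/vduse-demo", VDUSE_VQ_SETUP, pack_vq_config(0, 256))
```

Packing with `=` (native byte order, standard sizes, no padding) works here because every field in these structs is already naturally aligned, so the packed sizes match the C layouts.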

After initialization, the VDUSE device can be bound to the vDPA bus through the netlink message VDPA_CMD_DEV_NEW. Subsequently, user space can use read()/write() on /dev/vduse/$NAME to receive and respond to control requests from the VDUSE kernel module, and can also map a segment of shared memory through mmap() for data communication with the corresponding virtio driver in the kernel.
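The kernel’s VDUSE documentation shows the binding step done with the iproute2 command `vdpa dev add name $NAME mgmtdev vduse`, which issues VDPA_CMD_DEV_NEW. After binding, the device process services control requests. Below is a hedged Python sketch of that read()/write() loop. The message layouts and request codes are our transcription of `include/uapi/linux/vduse.h` (Linux 5.15), the function names are hypothetical, and the shared-memory data path via mmap() is not shown.

```python
import os
import struct

# Hypothetical sketch of a VDUSE control-request loop. Layouts (our reading of
# include/uapi/linux/vduse.h, Linux 5.15; verify locally):
#   vduse_dev_request:  u32 type, u32 request_id, u32 reserved[4], 128-byte union
#   vduse_dev_response: u32 request_id, u32 result, u32 reserved[4], 128-byte union
VDUSE_GET_VQ_STATE, VDUSE_SET_STATUS, VDUSE_UPDATE_IOTLB = 0, 1, 2
VDUSE_REQ_RESULT_OK, VDUSE_REQ_RESULT_FAILED = 0, 1

REQ_HDR = struct.Struct("=II4I")   # type, request_id, reserved[4]
RESP_HDR = struct.Struct("=II4I")  # request_id, result, reserved[4]
UNION_SIZE = 128
MSG_SIZE = REQ_HDR.size + UNION_SIZE  # 152 bytes for both message types


def handle_request(msg: bytes, state: dict) -> bytes:
    """Decode one request and build the response to write() back."""
    if len(msg) != MSG_SIZE:
        raise ValueError("unexpected request size")
    req_type, req_id = REQ_HDR.unpack_from(msg)[:2]
    payload = msg[REQ_HDR.size:]
    result = VDUSE_REQ_RESULT_OK
    body = bytes(UNION_SIZE)
    if req_type == VDUSE_SET_STATUS:
        # struct vduse_dev_status { u8 status; }: the driver's status byte.
        state["status"] = payload[0]
    elif req_type == VDUSE_UPDATE_IOTLB:
        # struct vduse_iova_range { u64 start; u64 last; }: a real device
        # would refresh its mappings of this IOVA range here.
        start, last = struct.unpack_from("=QQ", payload)
        state.setdefault("iotlb_updates", []).append((start, last))
    elif req_type == VDUSE_GET_VQ_STATE:
        # Echo the virtqueue index and report split-ring avail_index 0;
        # a real device must report the queue's actual state.
        (vq_index,) = struct.unpack_from("=I", payload)
        body = struct.pack("=IH", vq_index, 0).ljust(UNION_SIZE, b"\0")
    else:
        result = VDUSE_REQ_RESULT_FAILED
    return RESP_HDR.pack(req_id, result, 0, 0, 0, 0) + body


def serve(fd: int, state: dict) -> None:
    """Blocking loop over an open /dev/vduse/$NAME file descriptor."""
    while True:
        msg = os.read(fd, MSG_SIZE)
        os.write(fd, handle_request(msg, state))
```

Keeping `handle_request` free of I/O lets the protocol logic be exercised with synthetic byte strings, while `serve` is the thin part that needs a live device.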

In October 2020, the STE kernel team open-sourced VDUSE by submitting it to the Linux kernel community. About a year later, VDUSE was merged into Linux 5.15.

“In a compute-storage separation architecture, we can simulate various storage devices for containers or virtual machines using the VDUSE framework on compute nodes, where the back-end of these storage devices is often remote storage nodes. Now, this solution has begun large-scale deployment in ByteDance’s cloud-native scenarios. In the future, we will continue to explore the feasibility of applications in high-performance network scenarios in cloud-native environments,” said Xie Yongji.

The Linux kernel keeps evolving. In the view of STE engineer Deng Liang, “As cloud-native application scenarios keep expanding and hardware moves toward high-density, heterogeneous designs, new demands are being placed on the Linux kernel. The kernel is the bridge between the underlying hardware and upper-layer cloud-native applications, and we are also asking whether the current monolithic kernel is still appropriate and exploring some alternatives.”

4 Embracing the Community for Greater Technical Output and Value

If overcoming technical challenges depends on hard technical skill, then getting code accepted by the community depends on patient communication and advocacy.

The STE kernel team has also accumulated its own experience in communicating with the community.

In September 2020, when the team sent the first version of HVO to the community, it met many skeptical voices. “The community initially doubted our solution, and we had to prove that it was sound. The community also raised targeted questions, many about scenarios we had not considered before. We iterated on the solution in response. HVO went through more than 20 versions, and we had to keep proving its effectiveness in different scenarios. The process took a long time,” Song Muchun recalled of HVO’s journey through the community. In his view, it is understandable that the community weighs whether the maintenance cost outweighs the benefits. The job of developers and community contributors is to explain clearly the value a technical solution can create, so that the community can see its benefits, and to prove that the solution is feasible and stable.

Internally, the process from development to application of a technical solution is not complicated. However, when facing the community, developers need to consider many more issues. “Often, we need to break through our own scenarios to look at other scenarios and pain points in the community, which we may have never encountered before.” Clearly, embracing the community comes with high communication costs, but in Duan Xiongchun’s view, contributing code to the community can achieve a win-win situation, which is worthwhile.

“Our work environment now closely mirrors the community environment, and our way of working is consistent with the community’s. This standardized way of operating effectively reduces code maintenance costs, and the open thinking of the community gives us more ideas for solving problems, creating more opportunities to generate value through technology.”

5 Venturing into the ‘Unmanned Zone’

Zhang Yu summed up the STE kernel team’s vision and goals as three kinds of closeness: closeness to the business, to understand its pain points; closeness to the community, to understand its direction; and closeness to new hardware, to maximize the kernel’s value in soft-hard collaborative design. The kernel itself cannot directly create value; it creates value by serving the business. So when the team does R&D, it must clearly understand what the business gains and whether the work truly solves business pain points, thereby creating business value.

The STE kernel team has been deeply involved in the community for many years. Building on the open-source Linux operating system, the team has made optimizations to meet internal needs and, in turn, contributed good features back to the community, such as HVO and VDUSE mentioned above, both of which have been merged into the Linux kernel mainline. In Zhang Yu’s view, the STE kernel team does not aim to create a groundbreaking operating system; its motivation is technical: it hopes to contribute its strength to the community, give its work back to Linux, and keep bringing in new ideas.

Zhang Yu stated that the STE team places great importance on “pragmatism,” which is also one of ByteDance’s core values. “Our work revolves around infrastructure: improving its stability, optimizing performance, and so on. Compared with star products in the company that generate revenue directly, we are a department that does subtraction, reducing infrastructure costs through technical means. In the business chain we sit far back, like defenders on a football field, ensuring the basic stability and reliability of the system while also being able to assist in scoring. As for the team’s vision, my idea is simple and pragmatic: I hope the team first meets business needs, then exceeds them, and leads the industry in some technologies. At first we followed the paths of the community and industry leaders, but as our technical capabilities keep improving to meet the diverse needs of business scenarios, we will move forward and inevitably enter the ‘unmanned zone.’”

Once in the ‘unmanned zone,’ there is no guiding direction or reference, which tests the team’s ability and resilience the most. “I hope the team has a pioneering spirit, can identify reasonable directions, and walk firmly down that path. There may be adjustments along the way, but ultimately, the route drawn will be a wavy line rather than a completely aimless curve,” Zhang Yu said.

6 In Conclusion

The team is still optimizing HVO. In March 2022, it improved HVO for 2 MB HugeTLB pages, cutting their struct page overhead by a further 12.5%; in April 2022, HVO gained support for the arm64 architecture; and in May 2022, HVO gained a runtime switch, so it no longer has to be enabled on the kernel command line.

Relying on the Linux kernel, the team continues to explore in virtualization, cloud-native, eBPF, and other technical directions.

“Currently, we are focusing on two main scenarios. One is cloud-native, a direction everyone is watching, but there is no particularly good solution yet; in some infrastructure scenarios for cloud gaming, for example, the industry has not yet settled on a standard. The other is soft-hard collaboration: defining hardware through software and software through hardware. We are doing research in both directions,” Zhang Yu said. “If the team keeps doing repetitive work, it will have no passion and no fighting spirit. Our expectation is to go beyond meeting business needs and do some industry-leading work.”

In Zhang Yu’s view, developing foundational software such as operating systems is not a momentary passion; it requires long-term investment. As the sands of time wash away, only the true gold will be revealed. “Major companies in China and abroad are working on soft-hard integration around their business scenarios, involving foundational system software and chip, board, and server hardware development. While doing this, we must also treat it as a social responsibility and consider how much we can contribute. These are all questions that require continuous reflection.”

Guest Introduction:

Zhang Yu, Head of ByteDance STE Team

Duan Xiongchun, Head of ByteDance STE Kernel Team

Song Muchun, Engineer at ByteDance STE, Maintainer of the Linux Kernel Community’s HugeTLB and Memory Cgroup Core Submodules

Xie Yongji, Engineer at ByteDance STE

Deng Liang, Engineer at ByteDance STE

This article is selected from “China’s Outstanding Technical Team Interviews” (Q4 2022), featuring selected experiences and insights from technical teams at ByteDance, Kingsoft Office, Industrial and Commercial Bank of China, OceanBase, Cocos Engine, and more regarding technology implementation and team building.

“China’s Outstanding Technical Team Interviews” is a significant content product created by InfoQ, planning a series of interviews based on various excellent IT technical teams from domestic enterprises, aiming to convey the working methods/technical practices of outstanding technical teams to the outside world, allowing developers to understand their knowledge accumulation, technical evolution, product refinement, and team culture, and gain valuable insights from it.
