Source: Deephub Imba

This article is about 4000 words long and is recommended to be read in 6 minutes.

In this article, we will explain the basic concept of LoRA itself and then introduce some variants that improve the functionality of LoRA in different ways.

LoRA can be considered a major breakthrough in efficiently training large language models for specific tasks. It is widely used in many applications. In this article, we will explain the basic concept of LoRA itself and then introduce some variants that improve the functionality of LoRA in different ways, including LoRA+, VeRA, LoRA-fa, LoRA-drop, AdaLoRA, DoRA, and Delta-LoRA.

LoRA

Low-Rank Adaptation (LoRA) is a widely used technique for training large language models (LLM) today. Large language models can generate various content for us, but to solve many problems, we still hope to train LLMs on given downstream tasks, such as classifying sentences or generating answers to given questions. However, using fine-tuning directly requires training large models with millions to billions of parameters.

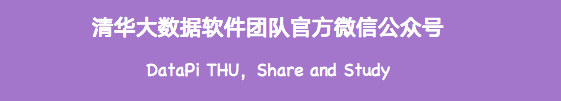

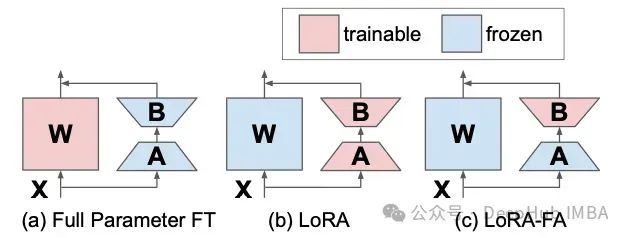

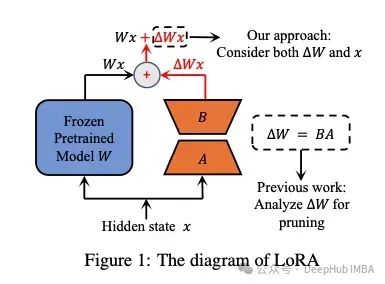

LoRA provides another training method by reducing the number of parameters trained, making the process faster and easier. LoRA introduces two matrices A and B; if the original matrix size of parameter W is d × d, then the sizes of matrices A and B are d × r and r × d respectively, where r is much smaller (usually less than 100). The parameter r is called the rank. When using LoRA with rank r=16, the shapes of these matrices are 16 x d, significantly reducing the number of parameters that need to be trained. The biggest advantage of LoRA is that it requires fewer trained parameters compared to fine-tuning, yet achieves performance comparable to fine-tuning.

One technical detail of LoRA is that at the beginning, matrix A is initialized with random values that have a mean of zero but some variance around the mean. Matrix B is initialized as a completely zero matrix. This ensures that the LoRA matrix does not randomly change the output of the original W from the start. Once the parameters of A and B are adjusted in the desired direction, the updates to A and B in the output of W should complement the original output.

LoRA greatly reduces the resource consumption for training LLMs. Therefore, many different variants have emerged based on the original LoRA method, improving the original method in various ways.

LoRA+

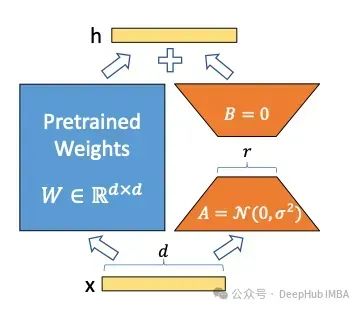

LoRA+ introduces a more efficient way to train LoRA adapters by introducing different learning rates for matrices A and B. In standard LoRA training, the learning rate is applied to all weight matrices. However, the authors of LoRA+ have demonstrated that using a single learning rate is suboptimal. By setting the learning rate for matrix B to be much higher than that for matrix A, training becomes more efficient.

The authors demonstrate that the required mathematics is quite complex (if you are really interested, you can check the original paper). Here is a simple explanation: matrix B is initialized to 0, so it requires larger update steps than the randomly initialized matrix A. By setting the learning rate for matrix B to be 16 times that of matrix A, the authors have been able to achieve a slight improvement in model accuracy (about 2%) while speeding up the training time of models like RoBERTa or Llama-7b by 2 times.

VeRA

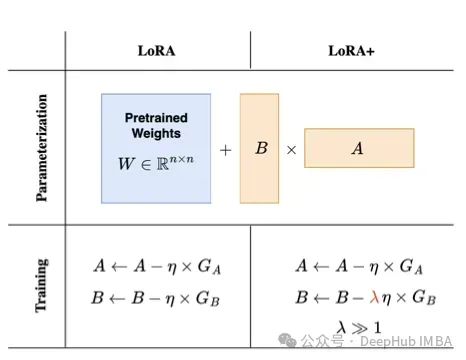

VeRA (Vector-based Random Matrix Adaptation) introduces a method to significantly reduce the size of LoRA parameters. Instead of training matrices A and B, they initialize these matrices with shared random weights (i.e., all matrices A and B across all layers have the same weights) and add two new vectors d and B, training only vectors d and B during fine-tuning.

A and B are random weight matrices. If they have not been trained at all, how can they contribute to the model’s performance? This method is based on an interesting research area known as random prediction. Quite a bit of research has shown that in a large neural network, only a small portion of the weights are used to guide behavior and lead to the expected performance on training tasks. Thus, due to random initialization, certain parts (or sub-networks) of the model are biased towards the desired model behavior from the very beginning.

However, this means that all parameters need to be trained during the training process, which is no different from complete fine-tuning. The authors of VeRA only train the relevant sub-networks by introducing vectors d and b, in contrast to the original LoRA method where matrices A and B are frozen, and matrix B is no longer initialized to zero but is randomly initialized like matrix A.

This method results in many parameters that are much smaller than the full matrices A and B. If a LoRA layer with a rank of 16 is introduced into GPT-3, it would have 755,000 parameters. With VeRA, only 2.8 million are needed (a 97% reduction). How does performance hold up with such a small number of parameters? The authors of VeRA evaluated using common benchmarks like GLUE or E2E as well as models based on RoBERTa and GPT-2 Medium. The results show that the performance of the VeRA model is only slightly lower than that of the fully fine-tuned model or the model using the original LoRA technique.

LoRA-FA

LoRA-FA is the abbreviation for LoRA with Frozen-A, where matrix A is frozen after initialization and serves as a random projection. Matrix B is not added with new vectors but is trained after being initialized to zero (just like in the original LoRA). This halves the number of parameters while maintaining performance comparable to standard LoRA.

LoRA-drop

The LoRA matrix can be added to any layer of the neural network. LoRA-drop introduces an algorithm to decide which layers to fine-tune with LoRA and which layers do not need it.

LoRA-drop consists of two steps. In the first step, a subset of data is sampled, and LoRA is trained for several iterations. Then the importance of each LoRA adapter is calculated as BAx, where A and B are the LoRA matrices, and x is the input. This is the output of LoRA added to the output of the frozen layers. If this output is large, it indicates a significant change in behavior. If it is small, it suggests that LoRA has a negligible effect on the frozen layers.

There are also different methods to select the most important LoRA layers: importance values can be aggregated until a threshold is reached (controlled by a hyperparameter), or only the most important n fixed LoRA layers can be selected. Regardless of the method used, a full training on the entire dataset is still required (since a subset of data was used in the earlier steps), with other layers fixed to a shared set of parameters that will not change during training.

The LoRA-drop algorithm allows for training the model using only a subset of LoRA layers. According to the evidence presented by the authors, the accuracy is only slightly changed compared to training all LoRA layers, but the number of parameters that need to be trained is reduced, thus decreasing computation time.

AdaLoRA

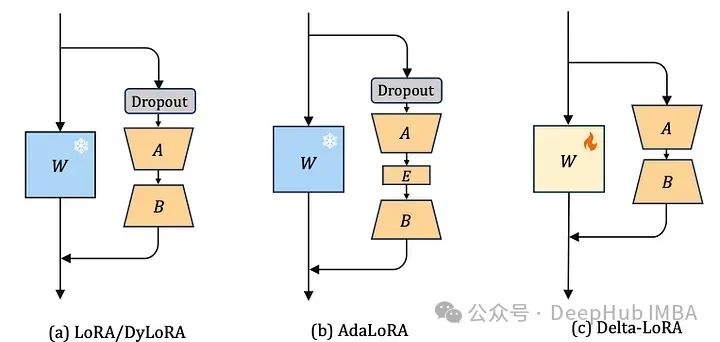

There are many ways to determine which LoRA parameters are more important than others, and AdaLoRA is one of them. The authors of AdaLoRA suggest considering the singular values of the LoRA matrices as indicators of their importance.

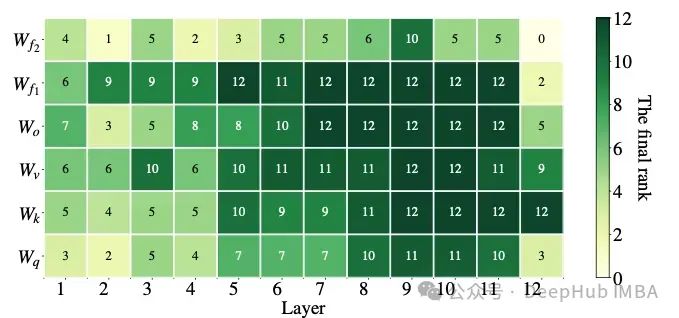

In contrast to the above LoRA-drop, where layer adapters are either fully trained or not trained at all, AdaLoRA can determine that different adapters have different ranks (in the original LoRA method, all adapters have the same rank).

AdaLoRA has the same total number of parameters as standard LoRA with the same rank, but the distribution of these parameters is different. In LoRA, all matrices have the same rank, whereas in AdaLoRA, some matrices have higher ranks, and some have lower ranks, resulting in the same total number of parameters. Experiments show that AdaLoRA produces better results than the standard LoRA method, indicating a better distribution of trainable parameters in parts of the model, which is particularly important for given tasks. The following figure shows an example of how AdaLoRA assigns ranks for a given model. As we can see, it provides higher ranks for layers closer to the end of the model, indicating their greater importance.

DoRA

Another way to modify LoRA for better performance is weight-decomposition Low-Rank Adaptation (DoRA). Since each matrix can be decomposed into a product of size and direction. For vectors in two-dimensional space, it can easily be imagined: a vector is an arrow starting from the position 0 and ending at a point in the vector space. For the elements of the vector, if your space has two dimensions x and y, you could say x=1 and y=1. Alternatively, the same point can be described differently by specifying size and angle (i.e., direction), such as m=√2 and a=45°. This means moving the arrow from point 0 along a 45° direction with a length of √2. This yields the same point (x=1,y=1).

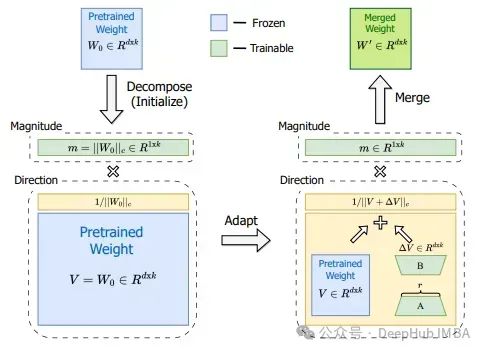

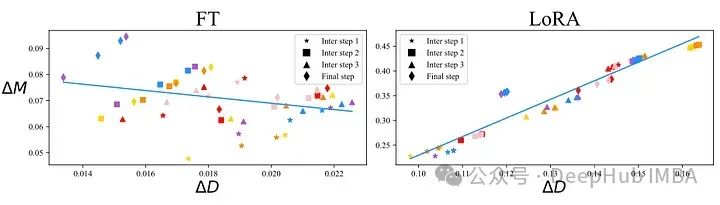

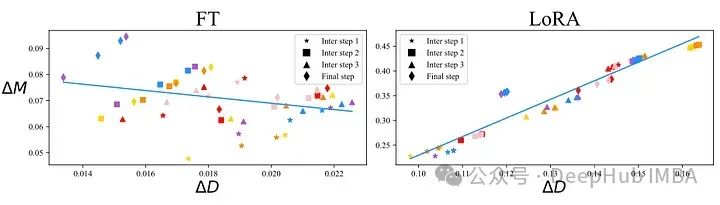

This decomposition of size and direction can also be accomplished with higher-order matrices. The authors of DoRA apply this to weight matrices, which describe the updates of models trained with standard fine-tuning and those trained with LoRA adapters during training steps. The comparison of these two techniques can be seen in the following figure:

Fine-tuned model (left) and model trained with LoRA adapters (right). On the x-axis, the changes in direction can be seen, and on the y-axis, the changes in magnitude can be observed, with each scatter point in the figure belonging to a layer of the model. There is an important distinction between these two training methods. In the left image, there is a small negative correlation between direction updates and magnitude updates, while in the right image, there is a stronger positive correlation. You might wonder which is better or if this makes any sense. However, the main idea of LoRA is to achieve performance comparable to fine-tuning with fewer parameters. That is to say, as long as the cost does not increase, LoRA training can share as many properties as possible. From the above figure, we can also see that the relationship between direction and magnitude in LoRA is different from that in complete fine-tuning, which may be one reason why LoRA sometimes underperforms compared to fine-tuning.

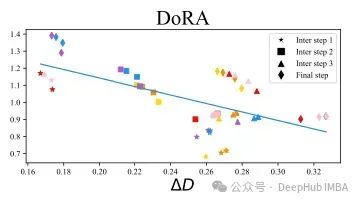

The authors of DoRA introduce a method to separate the pre-trained matrix W into a size vector m of size 1 x d and a direction matrix V, allowing size and direction to be trained independently. Then the direction matrix V is enhanced by B * a, while m is trained as is. Although LoRA tends to change both magnitude and direction simultaneously (as indicated by the high positive correlation between the two), DoRA can more easily adjust the two separately or compensate for one change with a negative change in the other. Thus, the relationship between direction and size in DoRA is more similar to fine-tuning:

In several benchmarks, DoRA outperformed LoRA in accuracy. Decomposing weight updates into size and direction allows DoRA to perform training that is closer to what is done in fine-tuning while still using the smaller parameter space of LoRA.

Fine-tuned model (left) and model trained with LoRA adapters (right). On the x-axis, the changes in direction can be seen, and on the y-axis, the changes in magnitude can be observed, with each scatter point in the figure belonging to a layer of the model. There is an important distinction between these two training methods. In the left image, there is a small negative correlation between direction updates and magnitude updates, while in the right image, there is a stronger positive correlation. You might wonder which is better or if this makes any sense. However, the main idea of LoRA is to achieve performance comparable to fine-tuning with fewer parameters. That is to say, as long as the cost does not increase, LoRA training can share as many properties as possible. From the above figure, we can also see that the relationship between direction and magnitude in LoRA is different from that in complete fine-tuning, which may be one reason why LoRA sometimes underperforms compared to fine-tuning.

The authors of DoRA introduce a method to separate the pre-trained matrix W into a size vector m of size 1 x d and a direction matrix V, allowing size and direction to be trained independently. Then the direction matrix V is enhanced by B * a, while m is trained as is. Although LoRA tends to change both magnitude and direction simultaneously (as indicated by the high positive correlation between the two), DoRA can more easily adjust the two separately or compensate for one change with a negative change in the other. Thus, the relationship between direction and size in DoRA is more similar to fine-tuning:

In several benchmarks, DoRA outperformed LoRA in accuracy. Decomposing weight updates into size and direction allows DoRA to perform training that is closer to what is done in fine-tuning while still using the smaller parameter space of LoRA.

Delta-LoRA

Delta-LoRA introduces another idea for improving LoRA, allowing the pre-trained matrix W to play a role again. The main idea of LoRA is not to adjust the pre-trained matrix W, as that is resource-intensive. LoRA introduces new smaller matrices A and B, which learn the downstream task capabilities less effectively, so the performance of models trained with LoRA is generally lower than that of fine-tuned models.

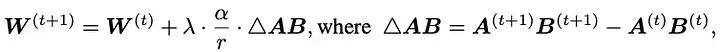

The authors of Delta-LoRA propose using the gradient of AB to update matrix W, where the gradient of AB is the difference of A*B at two consecutive time steps. This gradient is scaled by a hyperparameter λ, which controls how much influence the new training should have on the pre-trained weights.

This introduces more parameters that need to be trained with almost no computational overhead. Instead of calculating the gradient of the entire matrix W as in fine-tuning, it uses the gradients already obtained during LoRA training to update it. The authors compared this method with standard LoRA methods on many benchmarks using models like RoBERTA and GPT-2, finding improved performance compared to standard LoRA methods.

Conclusion

The research field of LoRA and its related methods is very active, with new contributions being made every day. This article explains the core ideas of some methods. If you are interested in these methods, please check the papers:

[1] LoRA: Hu, E. J., Shen, Y., Wallis, P., Allen-Zhu, Z., Li, Y., Wang, S., … & Chen, W. (2021). LoRA: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685.

[2] LoRA+: Hayou, S., Ghosh, N., & Yu, B. (2024). LoRA+: Efficient Low Rank Adaptation of Large Models. arXiv preprint arXiv:2402.12354.

[3] VeRA: Kopiczko, D. J., Blankevoort, T., & Asano, Y. M. (2023). VeRA: Vector-based random matrix adaptation. arXiv preprint arXiv:2310.11454.

[4]: LoRA-FA: Zhang, L., Zhang, L., Shi, S., Chu, X., & Li, B. (2023). LoRA-FA: Memory-efficient low-rank adaptation for large language models fine-tuning. arXiv preprint arXiv:2308.03303.

[5] LoRA-drop: Zhou, H., Lu, X., Xu, W., Zhu, C., & Zhao, T. (2024). LoRA-drop: Efficient LoRA Parameter Pruning based on Output Evaluation. arXiv preprint arXiv:2402.07721.

[6] AdaLoRA: Zhang, Q., Chen, M., Bukharin, A., He, P., Cheng, Y., Chen, W., & Zhao, T. (2023). Adaptive budget allocation for parameter-efficient fine-tuning. arXiv preprint arXiv:2303.10512.

[7] DoRA: Liu, S. Y., Wang, C. Y., Yin, H., Molchanov, P., Wang, Y. C. F., Cheng, K. T., & Chen, M. H. (2024). DoRA: Weight-Decomposed Low-Rank Adaptation. arXiv preprint arXiv:2402.09353.

[8]: Delta-LoRA: Zi, B., Qi, X., Wang, L., Wang, J., Wong, K. F., & Zhang, L. (2023). Delta-LoRA: Fine-tuning high-rank parameters with the delta of low-rank matrices. arXiv preprint arXiv:2309.02411.

Editor: Wang Jing