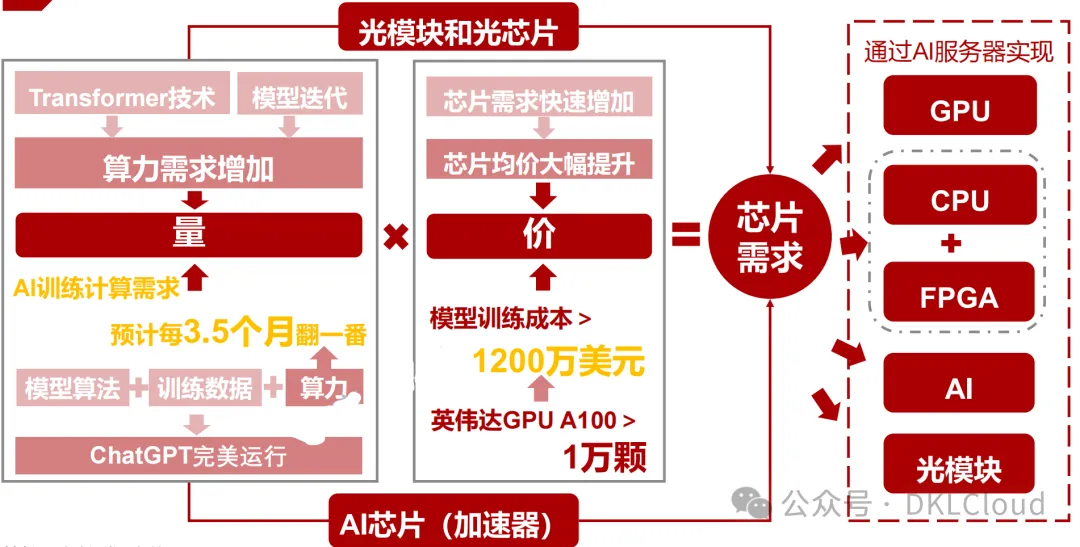

The explosion in computing power demand drives up both the quantity and price of chips.

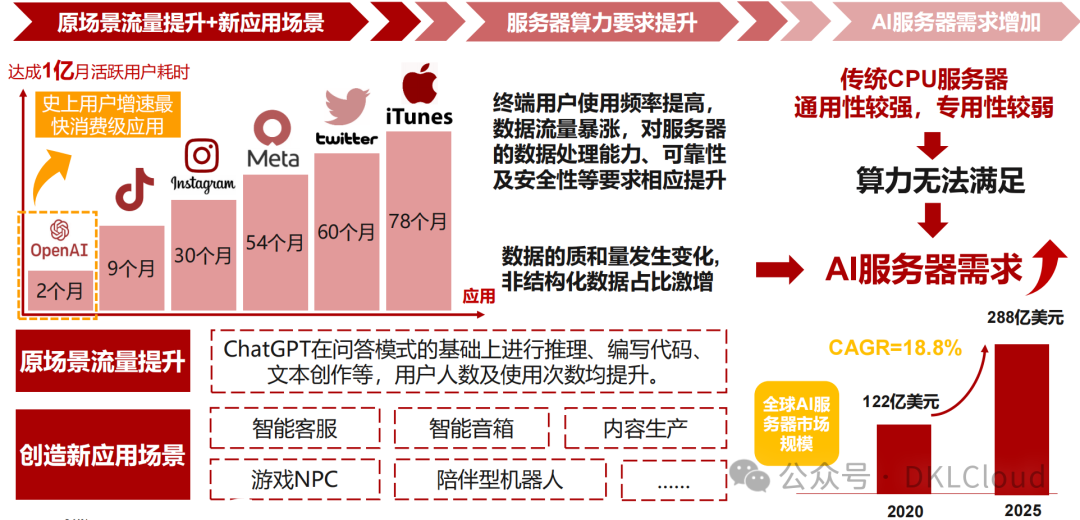

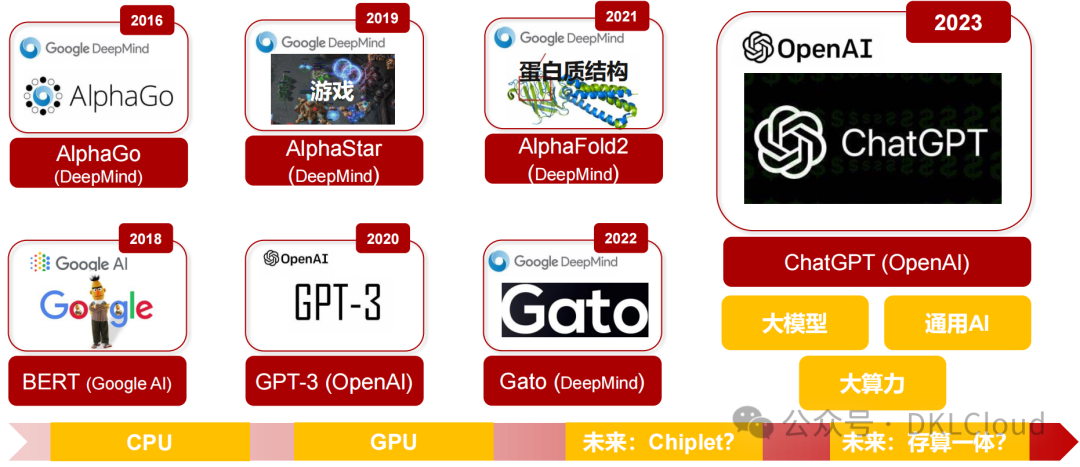

ChatGPT has swept the globe. ChatGPT (Chat Generative Pre-trained Transformer) was launched by OpenAI at the end of November 2022 and has attracted widespread attention ever since. By January 2023 it had reached 100 million monthly active users, making it the fastest-growing consumer application in history at that time. Built around a question-and-answer interaction model, ChatGPT can perform reasoning, write code, create text, and more. These capabilities and the resulting user experience have significantly increased traffic in its application scenarios.

1. Chip demand = Quantity↑ × Price↑: AIGC drives a simultaneous rise in chip quantity and price.

1) Quantity: AIGC brings new scenarios, and traffic in existing scenarios has increased significantly. ① From a technical perspective: ChatGPT is based on the Transformer architecture. As the model iterates, the number of layers grows, and so does the demand for computing power. ② From an operational perspective: ChatGPT needs three things to run well: training data, model algorithms, and computing power, and the base model requires large-scale pre-training. Its ability to store knowledge comes from 175 billion parameters, which require a great deal of computing power.

2) Price: Demand for high-end chips will drive up the average chip price. A top-end NVIDIA GPU costs about 80,000 yuan, and a GPU server usually costs more than 400,000 yuan. The computing infrastructure supporting ChatGPT requires at least tens of thousands of NVIDIA A100 GPUs, and the rapid growth in demand for high-end chips will further raise the average chip price.
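
The quantity and price figures above can be combined into a rough back-of-envelope estimate. The sketch below is illustrative only. It takes 10,000 GPUs as a lower-bound reading of "tens of thousands", and it assumes 2 bytes per parameter (FP16 weights) and the 80 GB variant of the A100; none of these three values is stated in the report.

```python
import math

# Figures quoted in the text above
GPU_PRICE_YUAN = 80_000          # price of one top-end NVIDIA GPU
MODEL_PARAMS = 175_000_000_000   # 175 billion parameters

# Assumptions for illustration (not from the report)
GPU_COUNT = 10_000               # lower-bound reading of "tens of thousands"
BYTES_PER_PARAM = 2              # FP16 weights
GPU_MEMORY_GB = 80               # 80 GB A100 variant


def gpu_purchase_cost(count: int, unit_price: int) -> int:
    """Total GPU spend = quantity x price."""
    return count * unit_price


def weight_memory_gb(params: int, bytes_per_param: int) -> float:
    """Memory needed just to hold the model weights, in GB (10^9 bytes)."""
    return params * bytes_per_param / 1e9


def min_gpus_for_weights(params: int, bytes_per_param: int, gpu_memory_gb: int) -> int:
    """Fewest GPUs whose combined memory can hold the weights alone."""
    return math.ceil(weight_memory_gb(params, bytes_per_param) / gpu_memory_gb)


if __name__ == "__main__":
    cost = gpu_purchase_cost(GPU_COUNT, GPU_PRICE_YUAN)
    weights = weight_memory_gb(MODEL_PARAMS, BYTES_PER_PARAM)
    gpus = min_gpus_for_weights(MODEL_PARAMS, BYTES_PER_PARAM, GPU_MEMORY_GB)
    print(f"GPU spend: {cost:,} yuan")
    print(f"Weight memory: {weights:.0f} GB")
    print(f"Minimum GPUs to hold the weights: {gpus}")
```

Under these assumptions the GPUs alone come to 800 million yuan before servers, networking, or power are counted, and the weights of a 175-billion-parameter model do not fit on a single card, which is one reason quantity scales so quickly.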

2. The “unsung heroes” behind ChatGPT: chips. We are optimistic about the domestic GPU, CPU, FPGA, AI chip, and optical module industry chains.

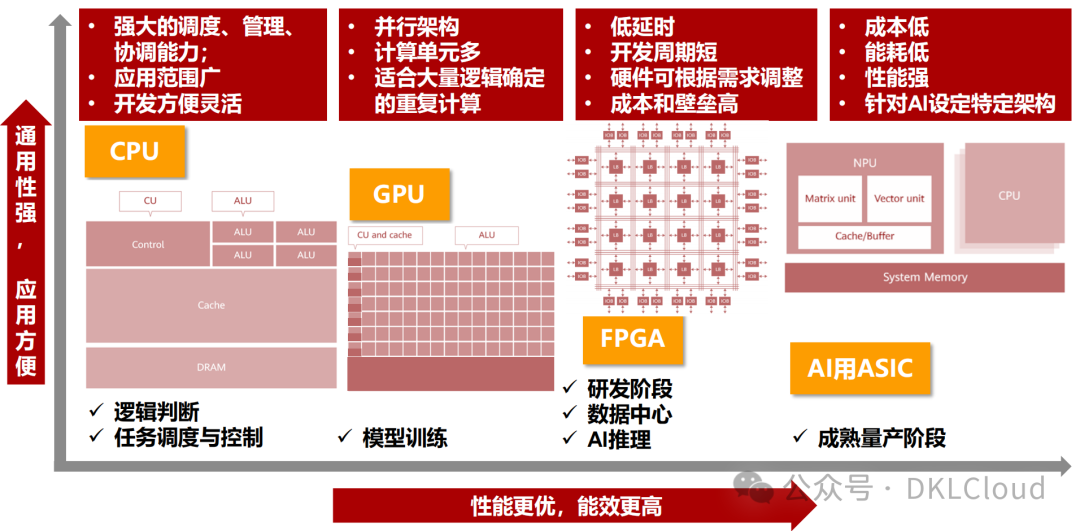

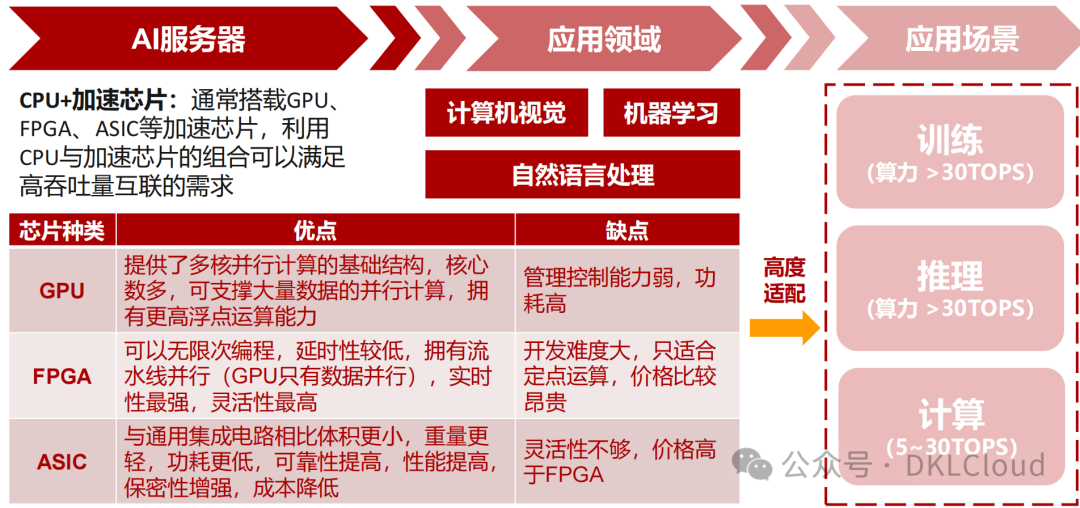

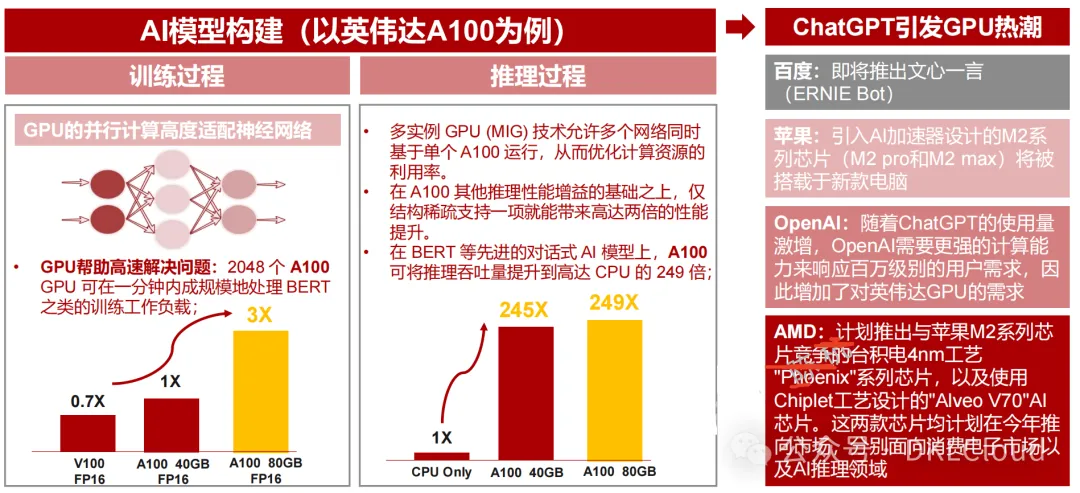

1) GPU: Supports heavy computing power demand. Thanks to its parallel computing capability, it suits both training and inference, and GPUs are currently widely used as acceleration chips. Optimistic about Haiguang Information, Jingjiawei.

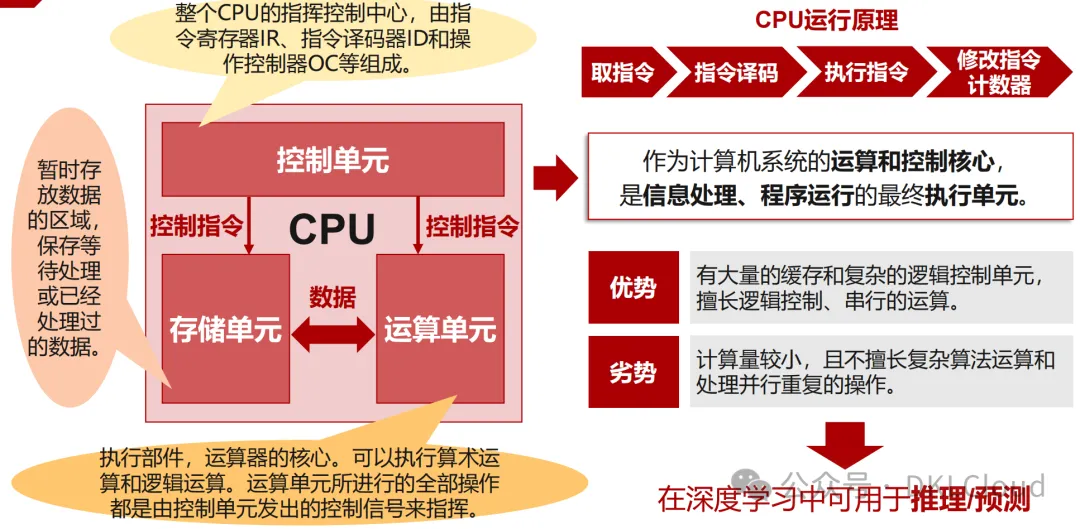

2) CPU: Can be used for inference/prediction. AI servers combine CPUs with acceleration chips to meet high-throughput interconnection needs. Optimistic about Loongson Technology, China Great Wall.

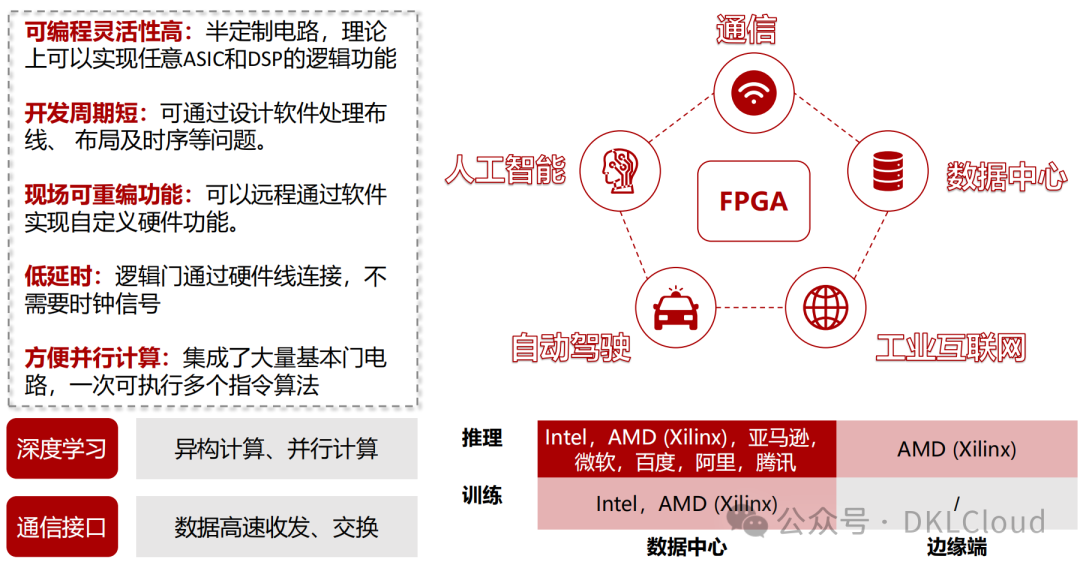

3) FPGA: Can empower large models through deep learning + distributed cluster data transmission. FPGA has advantages such as high flexibility, short development cycle, low latency, and parallel computing. Optimistic about Anlu Technology, Fudan Microelectronics, Unisoc.

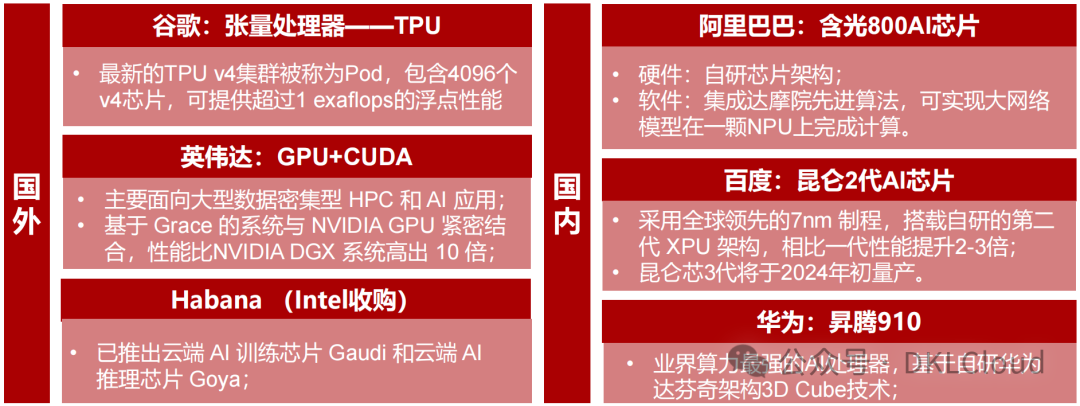

4) ASIC: Pushes performance and power efficiency to the limit. AI ASIC chips are usually designed with architectures specific to AI applications, offering advantages in power consumption, reliability, and integration. Optimistic about Cambricon, Lattice Semiconductor.

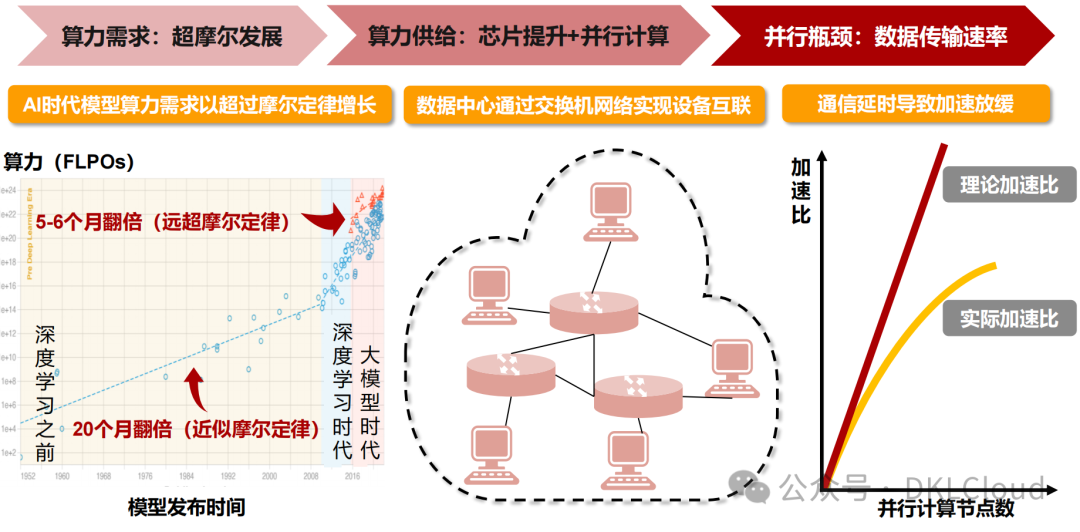

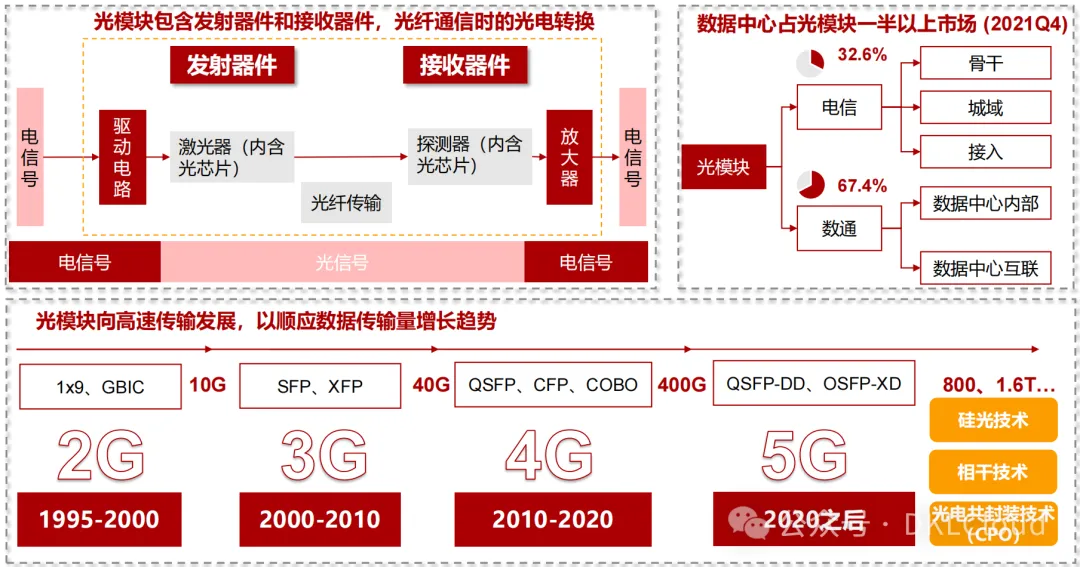

5) Optical modules: An easily overlooked bottleneck in computing power. As data transmission volumes grow, demand for optical modules, which interconnect devices within data centers, has grown accordingly. Optimistic about Decawave, Tianfu Communication, Zhongji Xuchuang.

1. The explosion in computing power demand drives up both the quantity and price of chips.

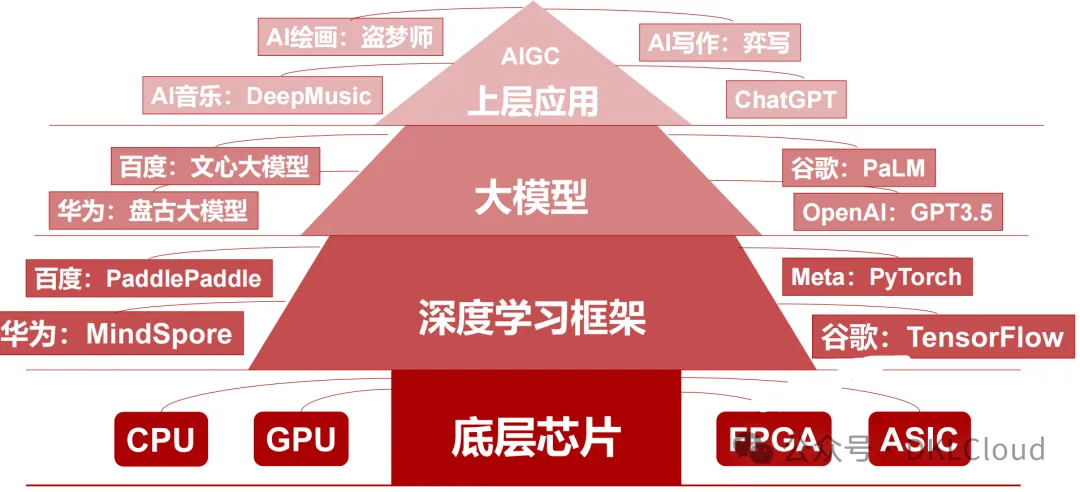

AI computing requires various chips for support.

The explosion in computing power demand leads to a simultaneous rise in chip quantity and price.

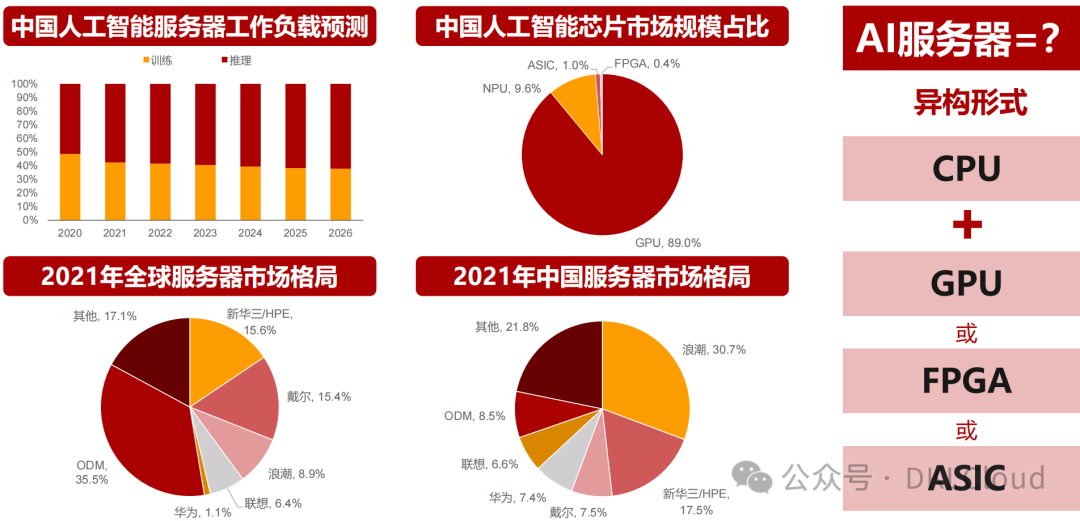

AI servers serve as the carriers of computing power.

CPU, GPU, FPGA, ASIC, and optical modules each play their own role.

1.1 The four-layer architecture of artificial intelligence, with chips as the underlying support.

1.2 Different computing tasks in artificial intelligence require various chips to achieve.

1.3 ChatGPT traffic surges, bringing significant development opportunities for AI servers.

1.4 AI servers are rapidly growing, significantly driving chip demand.

1.5 AI server chip composition: CPU + acceleration chips.

1.6 CPU excels in logical control and can be used for inference/prediction.

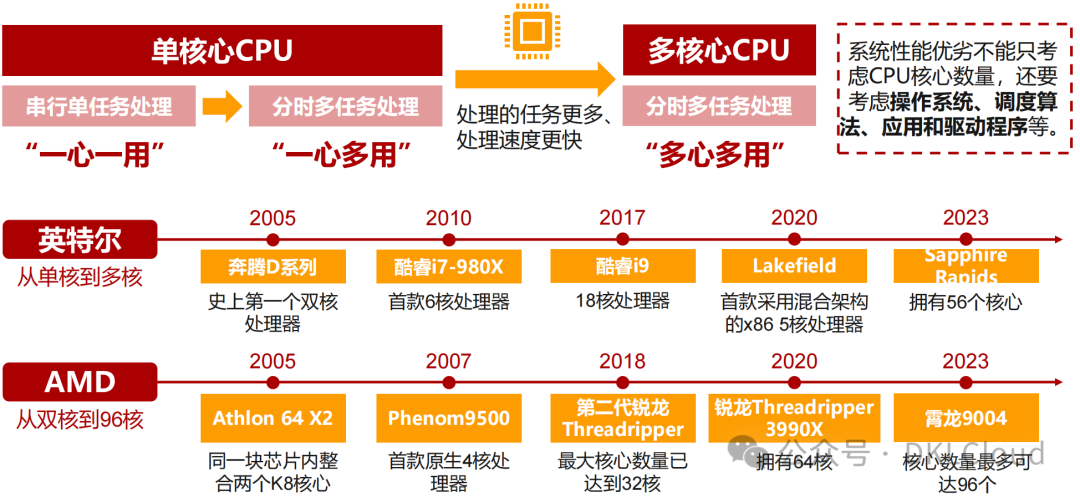

1.7 Server CPUs are evolving towards multi-core to meet the needs for increased processing power and speed.

1.7 GPU is highly compatible with AI model construction.

1.8 FPGA: Can empower large models through deep learning + distributed cluster data transmission.

1.9 ASIC can further optimize performance and power consumption, and global giants are actively positioning themselves.

Leading domestic and foreign ASIC chip makers are building out their presence.

With the development of machine learning, edge computing, and autonomous driving, a large volume of data processing tasks has emerged, raising the requirements for chip computing efficiency, computing power, and energy efficiency. ASICs have attracted widespread attention when paired with CPUs, and leading domestic and foreign manufacturers are actively positioning themselves for the arrival of the AI era.

1.10 Data transmission rate: An easily overlooked bottleneck in computing power.

1.11 Core components of data transmission: Optical modules.

2. Technological innovation leads the local industry chain to break through.

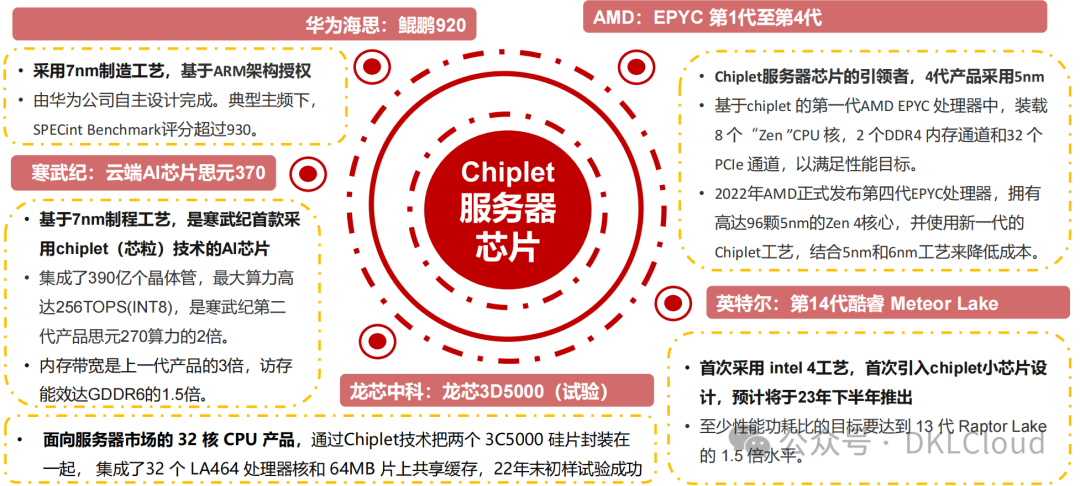

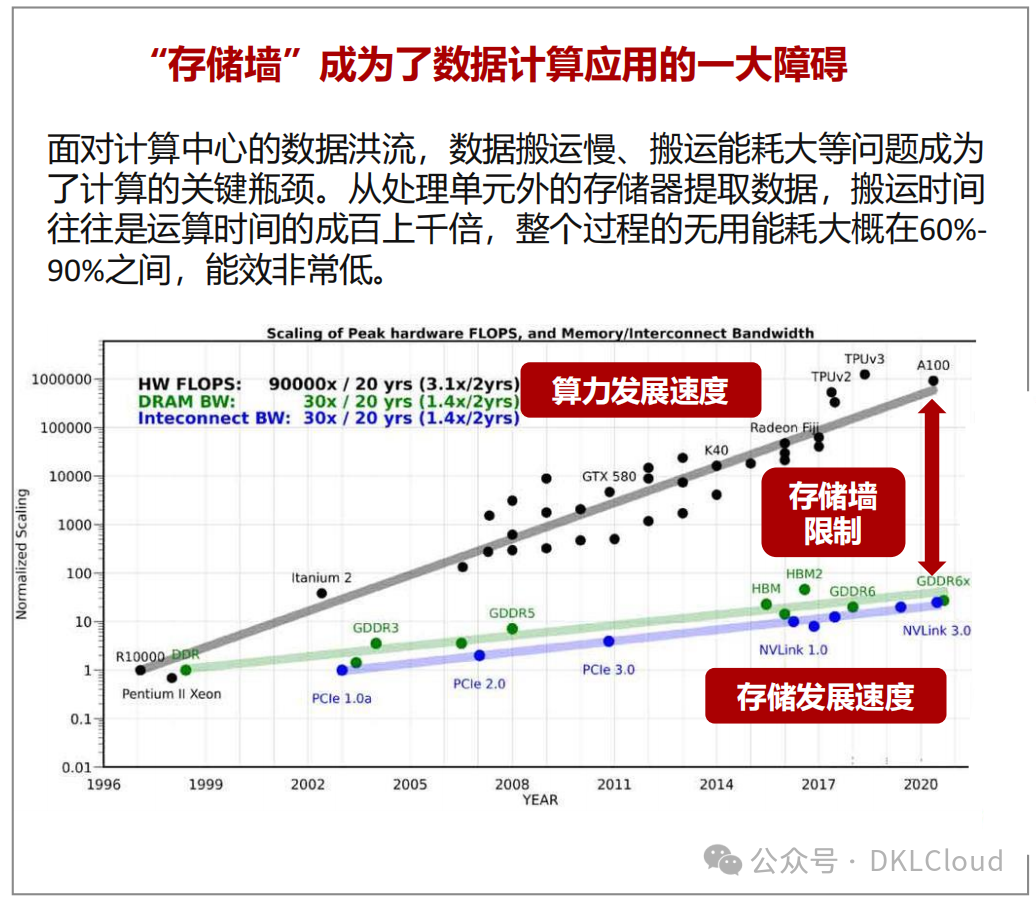

Development paths for domestic server CPUs: use Chiplet technology, which is already widely applied in server chips, to gain a foothold in advanced processes, and use storage-computing integration to break the “storage wall” limitation, reducing costs and improving efficiency.

2.1 Server CPU demand growth, three development routes for localization.

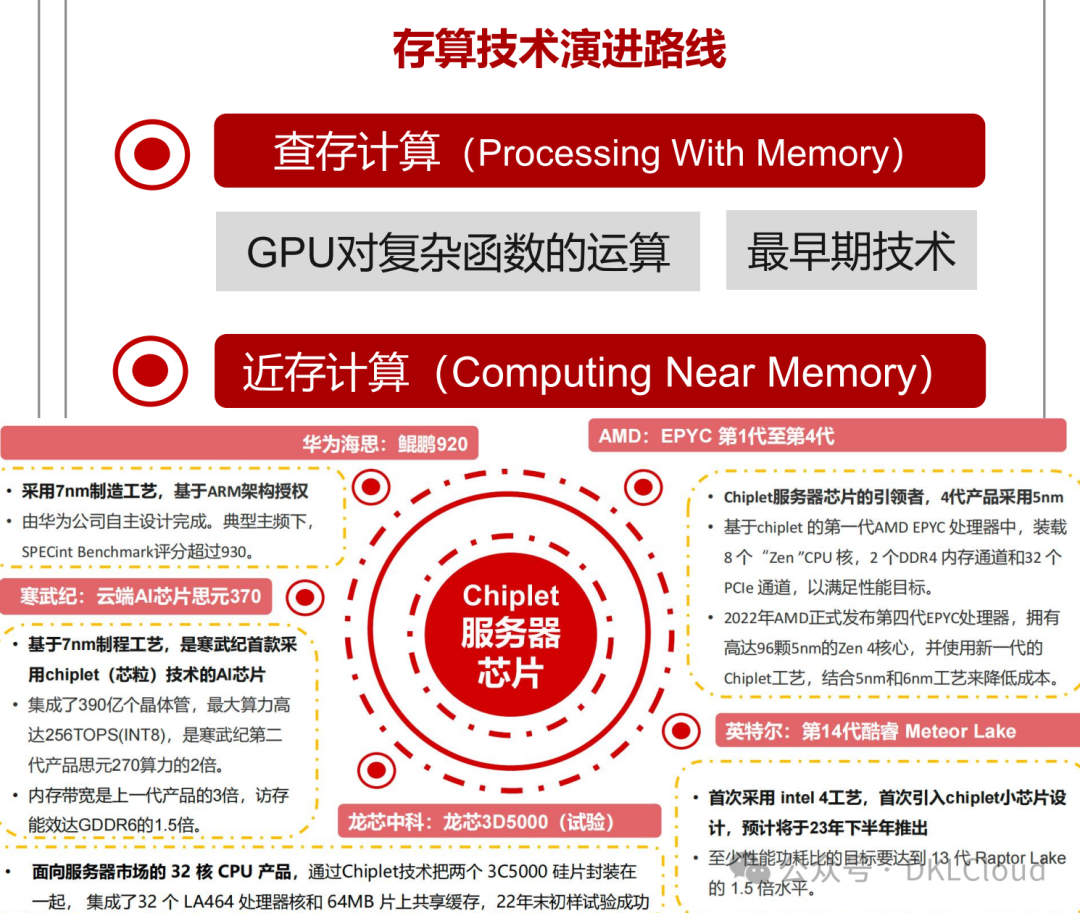

2.2 Future computing power upgrade paths: Chiplet, storage-computing integration.

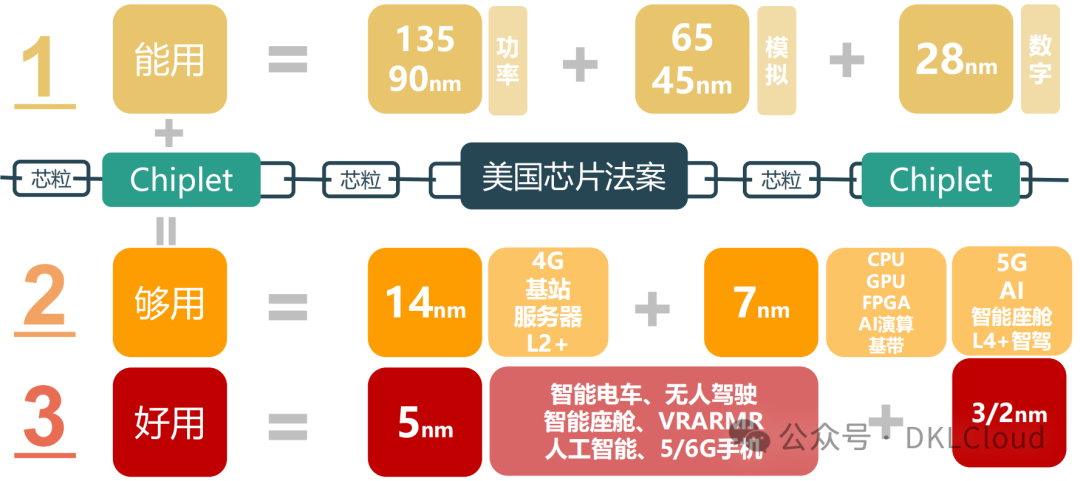

Recently, the rise of ChatGPT has driven vigorous development of artificial intelligence on the application side, which in turn places unprecedented demands on the computing power of hardware. AI chips, GPUs, CPU+FPGA combinations, and other chips already provide the underlying computing power for existing models. Facing potentially exponential growth in computing power demand, however, two approaches may become the path for future upgrades: in the short term, using Chiplet heterogeneous integration to speed up the deployment of application algorithms; in the long term, building storage-computing integrated chips, which reduce data movement on and off the chip.

2.3 Chiplet is a key technology for laying out advanced processes and accelerating computing power upgrades.

Chiplet heterogeneous integration can not only work around the blockade on advanced process nodes but also significantly improve the yield of large chips, reduce design complexity and design cost, and lower manufacturing cost. Chiplet technology accelerates computing power upgrades at the cost of some extra volume and power consumption, so it is expected to be widely used in base stations, servers, smart electric vehicles, and other fields.
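
The yield claim can be illustrated with the simple Poisson die-yield model, Y = exp(-A · D), where A is die area and D is defect density. This is a textbook approximation rather than a model taken from the report, and the area and defect-density values below are hypothetical. The sketch also assumes chiplets are tested before packaging and ignores packaging yield and die-to-die interface overhead.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under the Poisson yield model."""
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_unit(total_area_cm2: float, n_chiplets: int,
                          defects_per_cm2: float) -> float:
    """Average silicon area fabricated per good product.

    The design is split into n_chiplets equal dies. Only dies that pass
    test are assembled, so each good die costs (die area / die yield).
    """
    chiplet_area = total_area_cm2 / n_chiplets
    return total_area_cm2 / poisson_yield(chiplet_area, defects_per_cm2)


if __name__ == "__main__":
    AREA = 8.0      # hypothetical total logic area in cm^2
    DEFECTS = 0.1   # hypothetical defect density per cm^2
    for n in (1, 2, 4):
        die_yield = poisson_yield(AREA / n, DEFECTS)
        silicon = silicon_per_good_unit(AREA, n, DEFECTS)
        print(f"{n} die(s): yield per die {die_yield:.2f}, "
              f"silicon per good unit {silicon:.1f} cm^2")
```

Because yield falls exponentially with die area in this model, splitting one large die into several smaller ones wastes less silicon per working product, which is the mechanism behind the cost argument above.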

2.4 Chiplet has been widely applied in server chips.

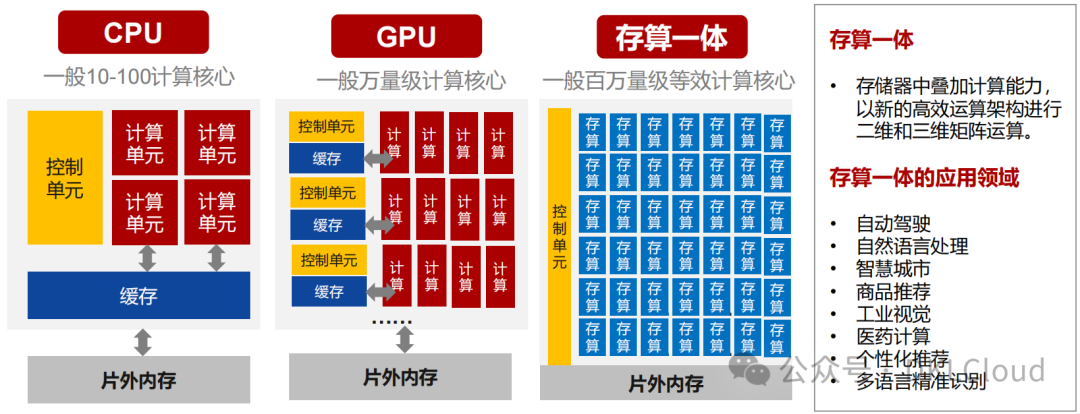

2.5 Storage-computing integration: Breaking the “storage wall” limitation, technological iteration and evolution.

2.6 Storage-computing integration: Greater computing power, higher energy efficiency, cost reduction, and efficiency improvement.

Storage-computing integration means adding compute capability inside memory, so that two-dimensional and three-dimensional matrix calculations are performed under a new, more efficient computing architecture. Its advantages include:

(1) Greater computing power (over 1000 TOPS)

(2) Higher energy efficiency (10-100 TOPS/W or more), surpassing traditional ASIC compute chips.

(3) Cost reduction and efficiency improvement (can exceed an order of magnitude).
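
To make the units concrete: TOPS/W is throughput divided by power, so the power needed to sustain a given throughput is TOPS ÷ (TOPS/W). The sketch below applies this to the figures quoted above. Pairing the 1000 TOPS figure with the 10-100 TOPS/W range in a single chip is an assumption made for illustration, since the list presents them as separate advantages.

```python
def power_watts(throughput_tops: float, efficiency_tops_per_watt: float) -> float:
    """Power needed to sustain a throughput at a given energy efficiency."""
    if efficiency_tops_per_watt <= 0:
        raise ValueError("efficiency must be positive")
    return throughput_tops / efficiency_tops_per_watt


if __name__ == "__main__":
    # Both ends of the efficiency range quoted in the list above
    for efficiency in (10.0, 100.0):
        watts = power_watts(1000.0, efficiency)
        print(f"1000 TOPS at {efficiency:.0f} TOPS/W needs {watts:.0f} W")
```

Under that assumption, the same 1000 TOPS would draw 100 W at the low end of the range and 10 W at the high end.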
