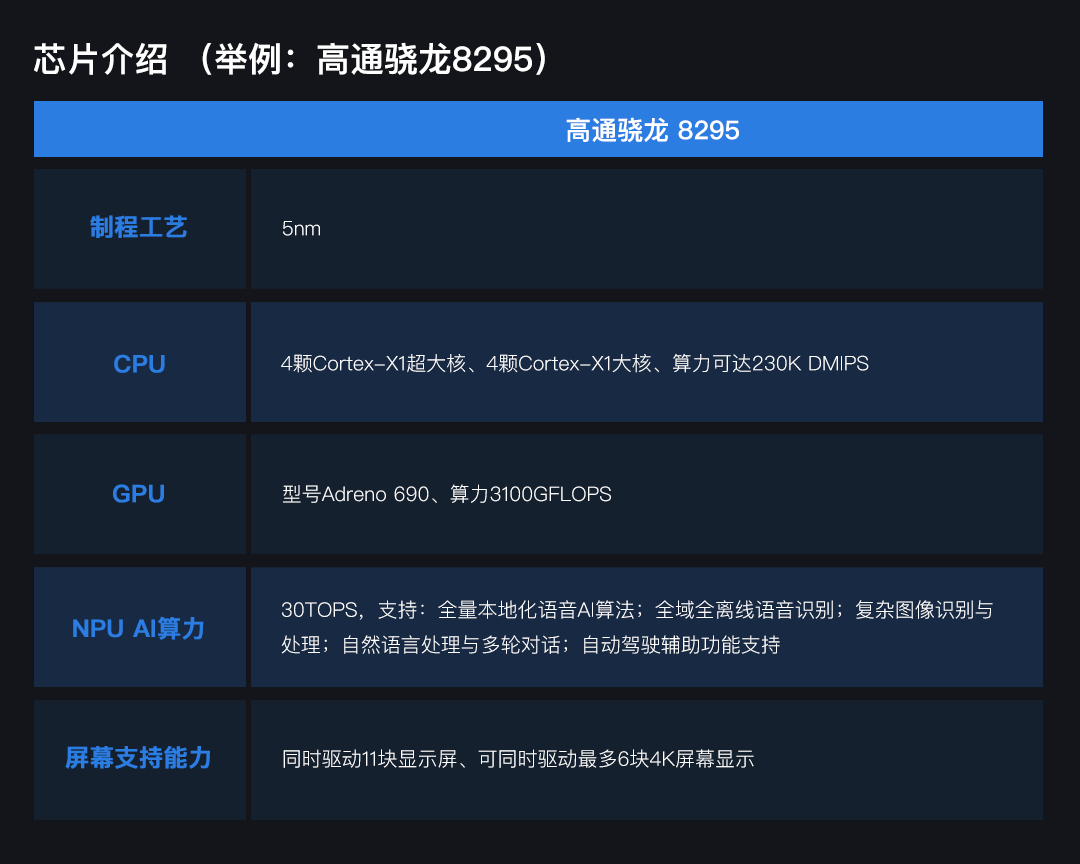

As demand for intelligent driving and immersive interaction surges, chip performance has become a core factor in user experience. Taking the Qualcomm Snapdragon 8295 as an example, this article examines how to establish reasonable performance reference indicators for a chip.

Focusing on five key scenarios (high-precision 3D graphics rendering, global 3D HMI immersive interaction, 3D intelligent driving maps, multi-screen 3D display and interaction, and voice interaction), this article dissects how chip capabilities can give technology and design teams quantifiable, optimizable performance references that improve the intelligent cockpit experience.

How to Support High-Precision 3D Graphics Rendering

Graphics Processing Speed

* Simple Rendering: When displaying basic 3D geometric shapes (such as simple cubes and spheres) or in-car icons (such as volume and air conditioning controls), the Snapdragon 8295’s GPU can process thousands of simple graphic elements per second. For example, it can draw and render 5,000 simple 3D icons per second, with an average rendering time of about 3-5 milliseconds.
* Medium Difficulty Rendering: When rendering a car’s exterior model (assumed to contain about 100,000 polygons) or interior model (assumed to contain about 50,000 polygons), the Snapdragon 8295’s multi-core CPU can load and preprocess multiple models within 1-2 seconds. During texture mapping the GPU can process about 100-200 MB of texture data per second, and for light and shadow calculations it can handle millions of interactions between rays and the model’s surface per second. For example, when rendering the metallic finish of a car’s exterior, it can quickly calculate the reflection and refraction of light on the metal surface, making the model look more realistic.
* High Difficulty Rendering: When rendering complex 3D game scenes (millions of polygons, including buildings and characters), the Snapdragon 8295’s GPU can process billions of graphic calculations per second. For instance, the particle system can generate and render hundreds of millions of particles per second, presenting details such as dust during acceleration; physical simulation can perform thousands of calculations per second to keep behaviors such as collisions realistic; global illumination can sample thousands of points per frame so that lighting appears natural.

Frame Rate Performance

* Simple Rendering: For animations that combine simple 3D elements, the frame rate stays above 60 fps (frames per second) even when 10-20 simple 3D elements are displayed simultaneously, keeping animation smooth.
* Medium Difficulty Rendering: When displaying these detailed 3D models, the frame rate stabilizes at around 30-40 fps. Users can rotate or zoom the car model and follow the changes without noticeable stuttering.
* High Difficulty Rendering: 3D game scenes hold a steady 20-30 fps, and high-precision 3D maps update with vehicle speed and position at 15-20 fps or above, keeping virtual driving smooth.

Resource Usage

* Simple Rendering: CPU and GPU usage is relatively low: the CPU may occupy only 10%-20% of core performance, and GPU usage may be around 10%.
* Medium Difficulty Rendering: CPU core usage may rise to 40%-60%, with some cores handling model loading and data preprocessing and others assisting the GPU with data transfer and simple calculations. GPU usage rises to 40%-50%, mainly for texture mapping, light and shadow calculations, and other complex graphics tasks.
* High Difficulty Rendering: CPU core usage is 70%-90%, with some cores used for game logic and physical simulation and others assisting GPU data transfer and graphics calculations; GPU usage is 70%-80% for rendering special effects; NPU usage is 20%-30% for graphics-related intelligent optimization.

How to Support Global 3D HMI Immersive Graphics Rendering

Game Interface

* Simple Rendering: A 2D puzzle game with 50 pieces (about 20 polygons per piece) on a two-color gradient background. The GPU can render 5,000 frames per second, and the CPU uses 12% to handle piece movement, rotation, and assembly. Operation response is under 10 milliseconds, and music playback and vehicle status monitoring (data refreshed at 1 Hz) run stably in parallel.
* Medium Difficulty Rendering: A 3D racing car with 3,000 polygons (512×512 textures) on a track with over 50,000 polygons (including spectators and buildings). The GPU performs 400 million calculations per second and the CPU uses 50% (partly loading resources, partly assisting the GPU), giving a frame rate of 35 fps. With the chip at 50℃, in-car navigation can run at the same time (1 Hz map updates, 5-meter accuracy).
* High Difficulty Rendering: An open-world 3D game with over 8 million polygons (including buildings and vegetation) and rich special effects. The GPU performs 3 billion calculations per second and the CPU uses 80% (logic, simulation, and assisting the GPU), giving a frame rate of 25 fps. The chip can run for 5 hours at 80℃ while maintaining some automatic driving assistance functions (lane departure warning within 0.5 seconds).

Dynamic Background

* Simple Rendering: 30 stars twinkle in the background. The GPU performs 3 million changes per second, memory bandwidth usage is 5%, average power consumption is 1 watt, and the temperature rises by 5℃ over 10 hours, creating only a slight dynamic atmosphere.
* Medium Difficulty Rendering: 2,000 wheat stalks (50 polygons each, 256×256 textures) sway in the wind. The GPU performs 40 million calculations per second and the CPU uses 20% to control wind direction fluctuations, without affecting music playback (50-millisecond volume adjustment delay).
* High Difficulty Rendering: Millions of particles for weather, plus ray tracing. The GPU performs 5 billion calculations per second, with the CPU and NPU collaborating (logic, optimization), while the vehicle’s intelligent interconnection system is maintained (over 10 Mbps).

Option-Based Stereoscopic Visual Scenes

* Simple Rendering: 10 stereoscopic icons with 30 polygons (such as volume control). Switching takes 5 milliseconds, with the CPU at 15% (data processing, instruction transmission, etc.) and the GPU at 10%, without affecting the stable operation of the vehicle’s air conditioning (±0.5℃ temperature control).
* Medium Difficulty Rendering: An indoor scene with 30,000 polygons. Switching takes 1.5 seconds (200 MB/second loading), with the CPU at 55% (loading, logic processing, etc.), the GPU at 45%, and the NPU at 10% for preloading (70% accuracy), while the vehicle’s dashboard data keeps updating (100-millisecond delay).
* High Difficulty Rendering: A virtual city with 5 million polygons. Switching takes 2.5 seconds (300 MB/second updates), with the CPU at 85%, the GPU at 75%, and the NPU at 25% (behavior prediction at 60% accuracy), while multimedia entertainment is maintained (1080p 60 fps video).

How to Support 3D Intelligent Driving Maps

Complex Intersection Navigation Scenes in Cities

* Scene Description: A vehicle in the city approaches a multi-branch intersection with traffic lights, crosswalks, lanes, tall buildings, and complex traffic signs, amid heavy pedestrian and vehicle traffic.
* Chip Support Method: The Snapdragon 8295’s GPU quickly builds a high-definition 3D intersection, accurately displaying building details and traffic elements, with signs remaining clear in bright light.
* Performance Indicators: The GPU performs hundreds of millions of calculations per second, with a frame rate of around 30 fps to ensure smoothness. The CPU uses 40%-50% to process map and traffic information. The AI unit recognizes traffic participants with an accuracy of over 95%.

Highway Automatic Driving Assistance Scenes

* Scene Description: The vehicle drives on a highway with dense traffic, roadside facilities, and changing weather, and may activate automatic driving assistance.
* Chip Support Method: The GPU renders highway scenes in real time, using LOD (level of detail) technology to present distant views, showing raindrop effects in rainy weather, and accurately identifying lanes and vehicles by integrating data.
* Performance Indicators: The GPU performs billions of calculations per second, with a frame rate of 25-30 fps. The CPU uses 60%-70%, part of it for automatic driving-related calculations. The AI unit predicts vehicle behavior with an accuracy of over 80%.

Mountain Off-Road Navigation Scenes

* Scene Description: The vehicle drives off-road in the mountains over rugged roads and complex terrain, requiring the map to display terrain, paths, and landmarks.
* Chip Support Method: The Snapdragon 8295’s GPU renders realistic mountains and highlights off-road paths, and the AI marks danger zones.
* Performance Indicators: The GPU performs hundreds of millions of calculations per second, with a frame rate of 20-25 fps. The CPU uses 70%-80% for terrain and path calculations. The AI unit identifies danger zones with an accuracy of over 90%.

How to Support Multi-Screen 3D Display and Interaction

Simple Level

* Screen Content Description: When switching between the 3D vehicle status display (for example, door opening and closing shown on a 3D car model) and 3D navigation (a simple intersection with several buildings and lane polygon models), the navigation map moves smoothly from the instrument screen to the central control screen. When 3D navigation settings (such as zoom or perspective) are adjusted on the central control screen, the operation responds quickly and the navigation prompts on the instrument screen update in sync.
* Chip Data Support: The 3D car model has about 1,500-2,000 polygons, and a simple intersection contains 5-8 buildings and 3-5 lane polygon models. The Snapdragon 8295 keeps the switching delay under 100 milliseconds; adjusting 3D navigation settings on the central control screen gets feedback in 40-60 milliseconds; the instrument screen’s navigation prompts (10-20 KB of data) update in sync within 80-100 milliseconds; and zooming and perspective switching issue about 500,000-800,000 commands per second.

Medium Difficulty Level

* Screen Content Description: In a cross-screen 3D game, the view switches from the first-person driving perspective on the central control screen to a 3D bird’s-eye view on the passenger entertainment screen, and track props marked on the passenger screen appear on the central control screen, keeping players collaborating in real time.
* Chip Data Support: The GPU handles rendering, while the CPU (4 large cores + 4 small cores, main frequency 2.84 GHz) handles interaction logic and data transmission; a 10 Gbps in-vehicle Ethernet interface keeps screen switching and interaction efficient in complex game scenes.

High Difficulty Level

* Screen Content Description: The driver can switch 3D navigation perspectives and enlarge building models on the central control screen with gestures, getting an instant response and accurate route planning; the front passenger can play 3D games, control characters smoothly, and exchange game information with the central control screen; rear passengers can zoom and rotate 3D scenic models and control 3D animations, with quick scene switching.
* Chip Data Support: With 230K DMIPS of computing power, the chip can handle multiple users’ operations simultaneously; its GPU reaches 2.9 TFLOPS (32-bit) and 5.8 TFLOPS (16-bit), supporting high-quality 3D rendering; 30 TOPS of AI computing power enables voice localization and multi-modal perception; and it is pre-integrated with a 5G platform and supports various connectivity technologies. Together these deliver a smooth experience in the high-difficulty scenario where multiple users control 3D functions in the cockpit at the same time.

How to Support Voice Interaction

Simple Level

* Function Description: 3D navigation is controlled by voice: saying “Go to a certain place” plans the route automatically, and commands such as “Zoom in/Zoom out the map” and “Switch perspective” make the 3D map respond accordingly.
* Chip Data Support:

Efficient Voice Recognition: The dual NPUs work together to improve recognition accuracy for voice commands such as “Zoom in the map” and “Switch perspective”. Voice recognition completes in as little as 500 ms and the end-to-end voice response is within 700 ms, making operation smoother.

Outstanding Graphics Processing: GPU performance is twice that of the Snapdragon 8155 and 3D rendering capability is three times as high, so map zooming, perspective switching, and other operations in the 3D navigation interface animate smoothly and naturally, and 3D roads, buildings, and other geographical elements look highly realistic.
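
The two voice latency figures above can be turned into a rough budget. The sketch below is only an illustration under stated assumptions: it treats the 500 ms recognition figure as the time already spent and the 700 ms end-to-end figure as the total, and asks how many frames fit in what is left. The function names are hypothetical, and the 30 fps and 60 fps values are borrowed from the frame rate figures earlier in the article.

```python
# Figures quoted in the article for the simple voice scenario.
RECOGNITION_MS = 500   # "as little as 500 ms" (best case)
END_TO_END_MS = 700    # "within 700 ms"


def remaining_budget_ms(recognition_ms: int = RECOGNITION_MS,
                        end_to_end_ms: int = END_TO_END_MS) -> int:
    """Time left for command handling and the first visible 3D response."""
    return end_to_end_ms - recognition_ms


def frames_in_budget(budget_ms: int, fps: int) -> int:
    """Whole frames that fit in the budget at a given frame rate.

    Integer arithmetic avoids float rounding at exact multiples.
    """
    return budget_ms * fps // 1000


budget = remaining_budget_ms()
print(budget)                        # 200
print(frames_in_budget(budget, 30))  # 6
print(frames_in_budget(budget, 60))  # 12
```

Under these assumptions, a map zoom triggered by voice has roughly six frames at 30 fps to begin animating if the overall response is to stay within 700 ms.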

Medium Difficulty Level

* Function Description: Vehicle devices are controlled by voice, for example “Set air conditioning to 25 degrees” or “Adjust seat heating level”, and the 3D car model displays the status changes in sync: the air conditioning vents show the temperature, the seat color changes to indicate the heating level, and doors and windows animate as they open and close.
* Chip Data Support:

Multi-tasking Capability: With high computing power and dual NPUs, the chip can process voice commands and vehicle device status data at the same time. When users control devices such as air conditioning and seat heating, device status is fed back quickly to the 3D vehicle model, keeping voice commands and the 3D interface display synchronized in real time.

High-Resolution Display Support: The chip supports high-quality displays at 6K resolution, clearly showing 3D car model details along with device status icons and text descriptions, so users can see the status of each vehicle device at a glance.

High Difficulty Level

* Function Description: Voice triggers 3D intelligent scenes. In “Sleep mode” the vehicle automatically adjusts the seat, lighting, and air conditioning, and the 3D interface displays a quiet sleep scene and plays soothing sounds. In “Sport mode” the interface switches to a dynamic technology style, vehicle performance is adjusted, real-time sports data is displayed, and racing scenes are simulated.
* Chip Data Support:

Powerful AI Fusion Processing Capability: By combining the dual NPUs with a powerful CPU and GPU, the chip can deeply integrate and process sensor data, voice data, and 3D scene data. For example, when switching to “Sleep mode” or “Sport mode”, it can coordinate vehicle lighting, seats, air conditioning, and power according to the scene’s requirements, and present the corresponding virtual environment and dynamic effects on the 3D interface.

Real-time Rendering of Complex Scenes: With powerful 3D rendering capabilities and high computing power, the chip can render 3D virtual environments that match each intelligent scene in real time. In “Sport mode”, for example, it can display a high-frame-rate, technology-styled sports scene, simulate the vehicle speeding along a track, and show real-time acceleration curves, G-value changes, and other data, giving users an immersive experience.
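
The scene coordination described above, where one voice phrase drives several devices plus a 3D theme, can be pictured as a lookup table. This is a minimal sketch and not the cockpit software's actual design: the `Cockpit` class, the `SCENES` table, the device names, and every setting value are hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Cockpit:
    """Hypothetical stand-in for the real vehicle and HMI interfaces."""
    devices: dict = field(default_factory=dict)
    hmi_theme: str = "default"


# Hypothetical scene table: device targets plus the 3D theme to display.
SCENES = {
    "sleep mode": {
        "devices": {"seat": "reclined", "lighting": "dim",
                    "air_conditioning": "quiet"},
        "hmi_theme": "quiet_sleep",
    },
    "sport mode": {
        "devices": {"power": "sport"},
        "hmi_theme": "dynamic_sport",
    },
}


def apply_scene(cockpit: Cockpit, phrase: str) -> bool:
    """Apply the scene named by a recognized voice phrase, if any."""
    scene = SCENES.get(phrase.strip().lower())
    if scene is None:
        return False  # unknown phrase: leave the cockpit untouched
    cockpit.devices.update(scene["devices"])
    cockpit.hmi_theme = scene["hmi_theme"]
    return True


cockpit = Cockpit()
apply_scene(cockpit, "Sleep mode")
print(cockpit.hmi_theme)  # quiet_sleep
```

Keeping device targets and the interface theme in one table entry means the devices and the 3D scene change together, which is the synchronization this level asks for.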

This article uses the Snapdragon 8295 as an example to show how chip performance indicators are designed and how tightly they couple with scene requirements. For developers, indicators are the underlying guarantee that a function can be implemented; for designers, they are the yardstick for whether an interaction idea is feasible.
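
One way to make such indicators usable by both groups is to encode them as data and check measurements against them. The sketch below uses the frame rate and CPU/GPU usage ranges quoted in the rendering section; the `Tier` type, the `check` function, and the 5-point tolerance are assumptions added for illustration, not part of any Qualcomm tooling.

```python
from typing import NamedTuple


class Tier(NamedTuple):
    fps_min: int  # lower end of the quoted frame rate range
    cpu_max: int  # upper end of the quoted CPU usage range, percent
    gpu_max: int  # upper end of the quoted GPU usage range, percent


# Ranges quoted in the article's rendering section.
REFERENCE = {
    "simple": Tier(fps_min=60, cpu_max=20, gpu_max=10),
    "medium": Tier(fps_min=30, cpu_max=60, gpu_max=50),
    "high": Tier(fps_min=20, cpu_max=90, gpu_max=80),
}


def check(tier: str, fps: float, cpu_pct: float, gpu_pct: float,
          tolerance_pct: float = 5.0) -> list:
    """Return a list of deviations from the reference tier (empty if none)."""
    ref = REFERENCE[tier]
    issues = []
    if fps < ref.fps_min:
        issues.append(f"fps {fps} below {ref.fps_min}")
    if cpu_pct > ref.cpu_max + tolerance_pct:
        issues.append(f"CPU {cpu_pct}% above {ref.cpu_max}%")
    if gpu_pct > ref.gpu_max + tolerance_pct:
        issues.append(f"GPU {gpu_pct}% above {ref.gpu_max}%")
    return issues


print(check("medium", fps=35, cpu_pct=55, gpu_pct=48))  # []
print(check("high", fps=18, cpu_pct=85, gpu_pct=90))    # two deviations
```

A designer can read the table to see which tier a proposed scene falls into, while a developer can run the check against profiling data from a prototype.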

In the future, as technologies such as AR-HUD and the integrated cockpit iterate, the one constant is the underlying methodology of “demand-driven performance definition”: only by translating chip capabilities into a quantifiable language of user experience can intelligent terminals keep breaking new ground.
