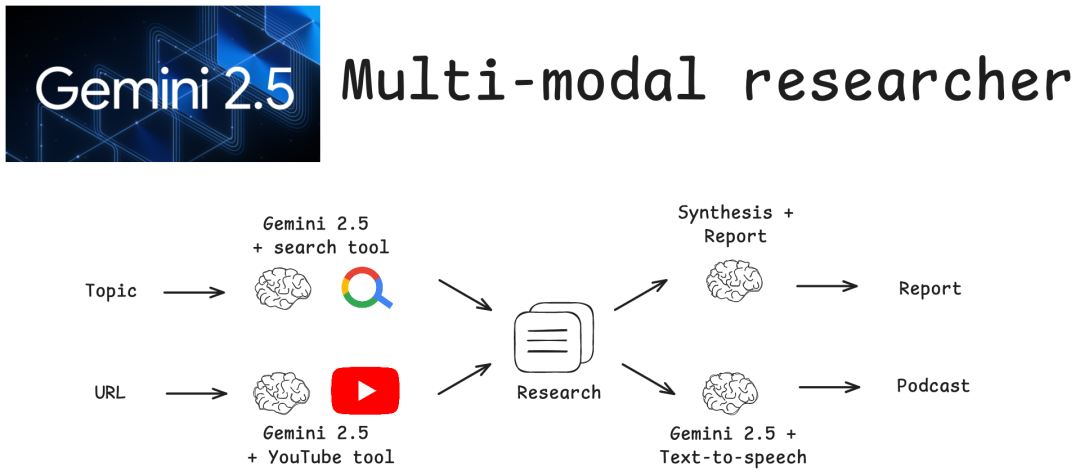

“Multi-Modal Researcher” is a simple yet efficient workflow for research and podcast generation, built on LangGraph and the Google Gemini 2.5 model family and combining three practical features of these models. Users enter a research topic and can optionally provide a YouTube video link. The system then uses search to research the topic, analyzes the video content, combines the insights from both, and finally generates a report with citations and a short podcast on the topic. The project relies on the following native Gemini capabilities:

- Video Understanding and Native YouTube Tool: Accepts a YouTube link and processes the video content directly.

- Google Search Tool: Integrates natively with Google Search to obtain real-time web results.

- Multi-Speaker Text-to-Speech: Generates natural-sounding conversations with a distinct voice for each speaker.
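The data flow described above (search, optional video analysis, combined report, podcast) can be sketched in plain Python. This is only an illustrative outline, not the project's actual code. Every name here (`ResearchState`, `search_research`, `analyze_video`, `create_report`, `create_podcast`, `run_pipeline`) is hypothetical, and the step bodies are placeholders standing in for Gemini calls. It uses neither LangGraph nor the Gemini API, but it mimics the common pattern where each step reads a shared state and returns a partial update.

```python
from typing import Callable, Dict, List, Optional, TypedDict


class ResearchState(TypedDict, total=False):
    """Shared state passed between steps (hypothetical field names)."""
    topic: str
    video_url: Optional[str]
    search_text: str
    video_text: str
    report: str
    podcast_script: str


def search_research(state: ResearchState) -> Dict[str, str]:
    # Placeholder: a real step would call Gemini with the Google Search tool.
    return {"search_text": f"[web findings about {state['topic']}]"}


def analyze_video(state: ResearchState) -> Dict[str, str]:
    # The video link is optional, so this step yields an empty result without one.
    url = state.get("video_url")
    if not url:
        return {"video_text": ""}
    # Placeholder: a real step would pass the YouTube URL to Gemini.
    return {"video_text": f"[analysis of {url}]"}


def create_report(state: ResearchState) -> Dict[str, str]:
    # Combine insights from both sources; skip the video part if it is empty.
    parts = [state["search_text"]]
    if state["video_text"]:
        parts.append(state["video_text"])
    return {"report": f"Report on {state['topic']}\n" + "\n".join(parts)}


def create_podcast(state: ResearchState) -> Dict[str, str]:
    # Placeholder: a real step would send a two-speaker script to multi-speaker TTS.
    script = (
        f"Speaker 1: Today's topic is {state['topic']}.\n"
        "Speaker 2: Here is what the research found."
    )
    return {"podcast_script": script}


# Steps run in order; each one merges its partial update into the state.
PIPELINE: List[Callable[[ResearchState], Dict[str, str]]] = [
    search_research,
    analyze_video,
    create_report,
    create_podcast,
]


def run_pipeline(topic: str, video_url: Optional[str] = None) -> ResearchState:
    state: ResearchState = {"topic": topic, "video_url": video_url}
    for step in PIPELINE:
        state.update(step(state))
    return state
```

Calling `run_pipeline("quantum computing")` runs the search-only path, while passing a YouTube URL as the second argument also folds the video analysis into the report.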

References:

- https://github.com/langchain-ai/multi-modal-researcher
