Why have tactile sensors not become widespread in the field of robotics, and why is this situation about to change?

Amazon’s Vulcan is its first robot to use tactile sensing for fine manipulation. In tightly constrained grasping and placing scenarios, tactile sensing should be a game changer.

While humans rely primarily on vision, we cannot complete every task with our eyes alone. This is in stark contrast to today’s robotic artificial intelligence. Current best practice in robotics is to make the task environment fully observable through vision, so that the robot can see everything it needs to perform the task at every moment.

Humans, on the other hand, have a rich array of senses to fall back on: proprioception, which tells us where our limbs are; a long-range context window built from memory and mental models; and, of course, our sense of touch.

In robotics research, however, touch is often overlooked. Take the well-known pi-0.5 vision-language-action model as an example. It may be the culmination of such models: trained by the team that first popularized the concept, it performs exceptionally well across numerous tasks and in previously unseen home environments. Yet even it relies solely on proprioception and onboard cameras.

As I mentioned in my summary report on ICRA 2025, though, academia has recently seen a resurgence of interest in these technologies. So I want to dig into why we so rarely see tactile sensing in use, and what researchers are doing to give robots this important sense.

Why Do We Rarely See Tactile Sensors?

Over the years, many tactile sensors have come to market, from Meta’s Digit to SynTouch’s BioTac, among others. Historically, though, these sensors have been a “well-received but underutilized” robotic technology. This is not because the sensors themselves perform poorly (in fact, they are often excellent), but because they are expensive and difficult to use in any truly valuable application.

Even when you can do interesting things with these sensors, the more common practice is to ignore their tactile properties entirely. NVIDIA’s Dextreme project is a typical example: the robotic hand is equipped with SynTouch BioTac sensors but does not use any form of tactile feedback!

Why is this the case? The answer, again, is that they are too difficult to use. While sensors like BioTac can predict force at different locations, they are extremely hard to model in simulation, and their dynamics are even harder to learn from real data. In fact, just interpreting the signals a BioTac outputs is a significant research topic in itself.

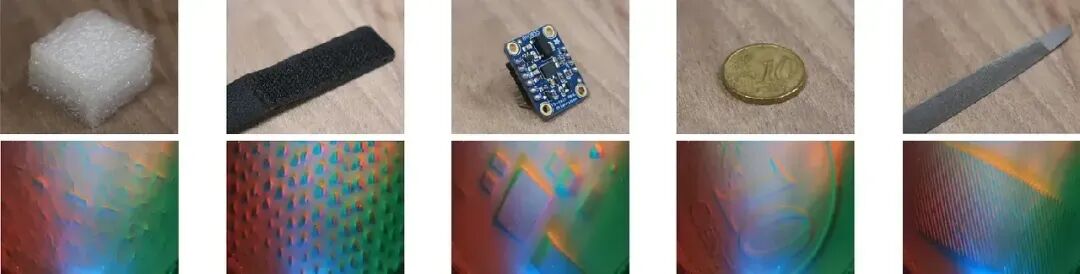

Other sensors face similar dilemmas. For example, Digit determines contact force, contact points, and object geometry by sensing the deformation of its flexible skin.

This capability is undoubtedly powerful: it generates a great deal of information with real potential for intelligent policies. The latest version of Digit, known as Digit 360, reportedly can:

Analyze spatial features as small as 7 microns, perceive normal and shear forces with resolutions of 1.01 mN and 1.27 mN, sense vibrations up to 10 kHz, and even detect heat and odors.

This range of capabilities is astonishing. The key issue, however, is that to use any of these functions, the robot must first make contact with the object… and making contact usually relies on cameras. This is where the advantages of vision-based robot learning become apparent: it is effective, technically mature, and highly scalable (it can leverage vast amounts of online data for pre-training!), and, more importantly, its sensors are reliable and inexpensive.

Vision has mature pre-trained backbones and architectures such as ViT. Tactile sensors, in contrast, are highly diverse and have no so-called standard sensor; even if one existed, it might not be meaningful. Because the sensors themselves are expensive, collecting a dataset large enough to build a tactile foundation model is exceedingly difficult. As I have emphasized before, the key to scaling robotics lies in diversity.

If a sensor cannot be widely adopted by different teams and deployed in diverse environments to collect rich task data, we cannot expect it to deliver the kind of gains we have seen from visual models.

A related and tricky issue is that tactile data struggles to benefit from imitation learning. When you teleoperate a robot, you may receive some tactile feedback, such as resistance felt through the arm, but you almost never receive fine tactile feedback. Frankly, how to convey this high-dimensional tactile information to the operator is itself an unresolved challenge.

If the human expert’s demonstrations do not contain useful tactile signals, then even a robot equipped with top-notch tactile sensors cannot learn to use them, because in the data those signals are unrelated to whether the task succeeds or fails!
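To make this argument concrete, here is a toy sketch, not a real robot pipeline. It assumes a hypothetical operator whose actions depend only on a visual feature, while a tactile channel is logged but never influences what the operator does. The function names, the synthetic data, and the two-feature linear policy are all illustrative assumptions; the least-squares fit simply stands in for behavior cloning.

```python
import random


def make_demos(n, seed=0):
    """Synthetic demonstrations: (vision, touch, action) tuples.

    Assumption: the operator reacts to vision only; touch is recorded by
    the sensor but never reaches the operator, so it cannot shape the action.
    """
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        vision = rng.gauss(0.0, 1.0)
        touch = rng.gauss(0.0, 1.0)
        action = 2.0 * vision + rng.gauss(0.0, 0.1)
        demos.append((vision, touch, action))
    return demos


def fit_two_feature_policy(samples):
    """Least-squares fit of action = w_vision * vision + w_touch * touch."""
    # Accumulate the entries of the 2x2 normal equations (X^T X) w = X^T y.
    svv = svt = stt = sva = sta = 0.0
    for vision, touch, action in samples:
        svv += vision * vision
        svt += vision * touch
        stt += touch * touch
        sva += vision * action
        sta += touch * action
    # Solve the 2x2 system with Cramer's rule.
    det = svv * stt - svt * svt
    w_vision = (sva * stt - sta * svt) / det
    w_touch = (sta * svv - sva * svt) / det
    return w_vision, w_touch


w_vision, w_touch = fit_two_feature_policy(make_demos(2000))
print(f"weight on vision: {w_vision:.3f}, weight on touch: {w_touch:.3f}")
```

On this synthetic data the fitted weight on the tactile channel comes out close to zero while the vision weight lands near the value the operator actually used. The sensor was there the whole time, but the demonstrations gave the policy no reason to listen to it.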

Thus, until recently, we have not found effective ways to leverage tactile sensors. Fortunately, two new trends in academia may be changing this situation:

- Emergence of new sensors that may make large-scale applications easier, allowing many teams to integrate tactile sensing technology into their research.

- Innovative data collection techniques that can scale to collect more valuable tactile data.

Innovation in Tactile Sensors

Tactile sensors have long been labeled “expensive” and “of varying quality.” Sensors like BioTac and Digit not only cost hundreds of dollars but are also closed-source products with fixed shapes, which makes them difficult to deploy in practical applications.

However, a new wave of open-source innovation is fundamentally changing this situation.

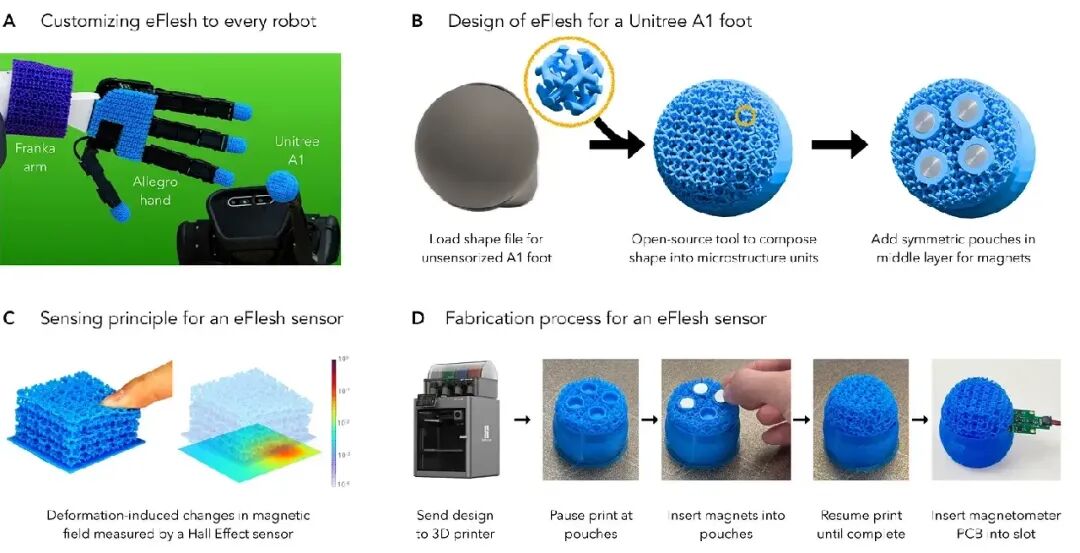

New sensors like eFlesh [2] break the limitations of past designs. They significantly reduce manufacturing costs and can be customized in shape for different robots, offering far greater flexibility than before. To make your own sensor, you only need a circuit board, a few magnets, and a consumer-grade 3D printer, plus about a minute of hands-on time (the printing itself takes longer, of course).

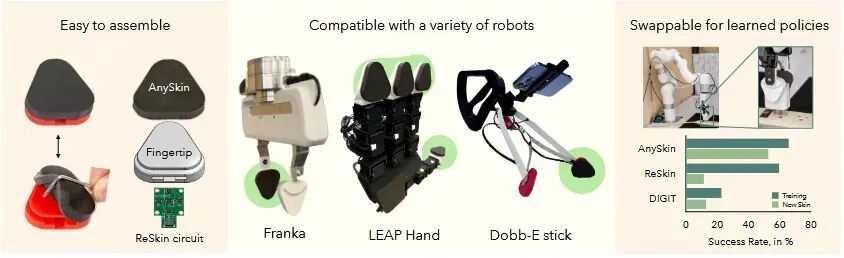

Similarly, work like AnySkin [3] has produced a flat tactile “skin” that can be attached to a variety of robotic grippers, and can even be mounted on the same Stick used in the Robot Utility Models project.

These technologies work on similar principles: all of them are magnetic-field-based tactile “skins.” Of course, how to interpret the data these sensors produce remains a core challenge. This is precisely the problem that work like Sparsh [4] aims to solve: Sparsh-skin, a method proposed by Meta, is designed to let models learn to understand tactile signals on their own through self-supervised pre-training.

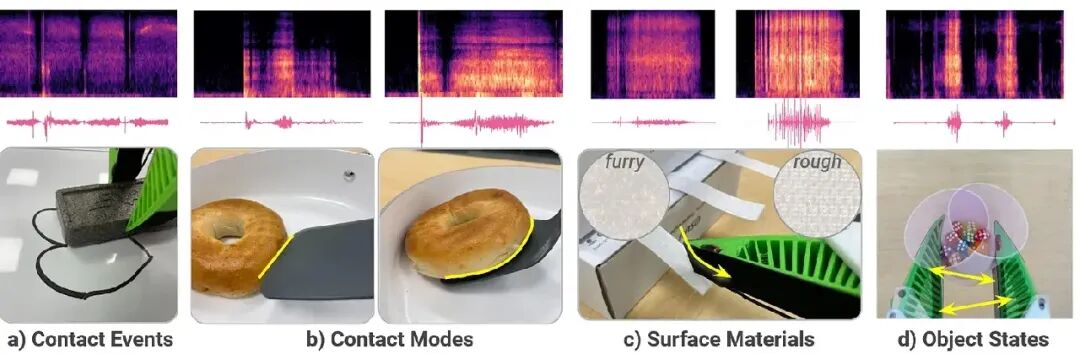

Besides magnetic tactile skin, another approach is to use audio. Microphones are ubiquitous and inexpensive, and they can provide extremely strong contact signals. For example, the ManiWAV project [5] has successfully applied audio to various manipulation tasks that require rich contact information. Another project, SonicBoom [6], uses microphone arrays to localize contact points in new environments with an error of 2.22 cm, without any tactile skin.
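To give a feel for why audio makes such a strong contact signal, here is a minimal sketch of contact-onset detection from a single microphone stream. It is not meant to represent how ManiWAV or SonicBoom work; it is a plain energy threshold, and the function names, window size, and threshold ratio are all assumptions chosen for illustration.

```python
import math


def rms(window):
    """Root-mean-square amplitude of a list of audio samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def detect_contact_onset(samples, window_size=64, threshold_ratio=5.0,
                         baseline_windows=4):
    """Return the start index of the first window that is much louder
    than the quiet baseline, or None if no such window exists.

    The baseline is the mean RMS of the first `baseline_windows` windows,
    which are assumed (hypothetically) to be contact-free.
    """
    n_windows = len(samples) // window_size
    if n_windows <= baseline_windows:
        return None
    energies = [
        rms(samples[i * window_size:(i + 1) * window_size])
        for i in range(n_windows)
    ]
    baseline = sum(energies[:baseline_windows]) / baseline_windows
    floor = max(baseline, 1e-9)  # avoid a zero threshold on silent input
    for i in range(baseline_windows, n_windows):
        if energies[i] > threshold_ratio * floor:
            return i * window_size
    return None
```

A sharp tap or scrape shows up as a sudden jump in short-time energy, which is why even a crude threshold like this can flag the moment of contact on clean recordings. Real environments are noisy, which is one reason to prefer learned audio models for anything beyond a demo.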

Scaling Tactile Data Collection

Despite advances in sensors, the biggest challenge facing tactile technology ultimately comes down to the “Bitter Lesson.” Tactile sensing is exciting and useful for many tasks… but visual data is readily available and visual simulation is mature, which makes collecting visual data at scale a breeze.

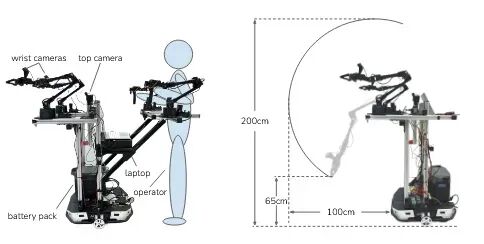

Take a typical leader-follower teleoperation system such as Mobile ALOHA, shown in the image above. The system includes two pairs of robotic arms: one pair operated by the human (the leader arms) and the other mirroring the human’s motions to interact with the environment (the follower arms). This setup cannot convey any fine tactile feedback to the operator; at most it provides some rough force feedback when the arms contact objects.

The key point is this: if the human expert’s demonstrations “ignore” tactile information, the robot model naturally cannot learn to use it.

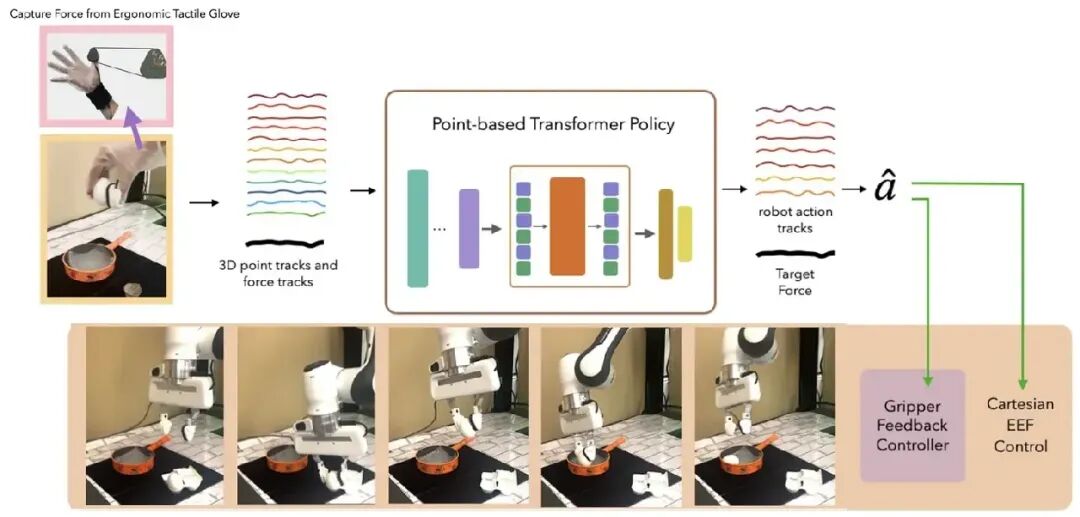

Fortunately, researchers have found some clever solutions. Projects like Feel The Force [7] and DexUMI [8] collect richer contact data by having operators wear specialized sensors. In Feel The Force, the operator wears AnySkin tactile pads on the fingertips; in DexUMI, researchers designed a wearable hand exoskeleton that mimics the movements of grippers and integrates tactile sensors. These approaches not only collect valuable tactile data but also bring an additional benefit: human users can interact with objects more naturally, which greatly improves data collection efficiency.

Conclusion

Although tactile technology is not yet widely applied, I sense that a transformation may come soon. Tactile pre-training techniques and more accessible, flexible sensor hardware should make it possible to scale tactile data quickly. Right now, the bottleneck for most robot learning research is data scale, and tactile data is precisely the hardest kind to scale.

Returning to the Amazon Vulcan case: for many tasks that demand high reliability, contact sensing is essential. Vulcan uses its contact sensing for slip detection and adaptive grip force adjustment. Unlike cameras, tactile sensors do not suffer from occlusion. They can tell whether an object is slipping by sensing shear forces, which lets the robot tighten its grip when necessary while avoiding crushing the object. Recent research already shows the power of these advantages, for example the V-HOP [9] project, which combines vision and touch to track in-hand objects precisely.
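The slip-detection idea can be sketched with a simple Coulomb friction check: an object is expected to start slipping once the shear force approaches the friction coefficient times the normal force. This is only an illustration under that assumption, not a description of how Vulcan works; the `ContactReading` type, the friction coefficient, the safety margin, and the force step are all hypothetical values.

```python
import math
from dataclasses import dataclass


@dataclass
class ContactReading:
    normal_force: float  # N, force pressing into the fingertip
    shear_x: float       # N, tangential force component
    shear_y: float       # N, tangential force component


def slip_margin(reading: ContactReading, friction_coeff: float) -> float:
    """Fraction of the friction limit still unused.

    1.0 means no shear load at all; values at or below 0.0 mean the
    Coulomb model predicts slipping.
    """
    shear = math.hypot(reading.shear_x, reading.shear_y)
    limit = friction_coeff * reading.normal_force
    if limit <= 0.0:
        return 0.0  # no normal force, so no friction to rely on
    return 1.0 - shear / limit


def adjust_grip(current_force: float, reading: ContactReading,
                friction_coeff: float = 0.5, min_margin: float = 0.2,
                step: float = 0.5, max_force: float = 20.0) -> float:
    """Raise the commanded grip force when the slip margin gets too small.

    The force is capped at max_force so the gripper never squeezes
    harder than the (assumed) crush limit of the object.
    """
    if slip_margin(reading, friction_coeff) < min_margin:
        return min(current_force + step, max_force)
    return current_force
```

For example, with a 4 N normal force and 1.8 N of shear, the default friction coefficient of 0.5 gives a 2 N friction limit, so the margin is only about 10% and `adjust_grip` bumps the commanded force by one step. With 0.5 N of shear the grip is left alone. The cap on `max_force` is the "avoid crushing the object" half of the story.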

Additionally, I am very optimistic about reinforcement learning, despite its limitations. I believe a severely underestimated factor holding back tactile sensing is that existing human data collection methods are inherently ill-suited to making effective use of tactile signals. But if robots can collect the data themselves, this problem will be easily resolved!

In this setting, we need not worry too much about the inherent flaws of reinforcement learning: we can assume the robot already has a good base policy, and our goal is merely to refine that policy with the newly added tactile sensors through self-learning until it reaches a near-perfect success rate.

There are many exciting prospects ahead, but there are also many open questions to be answered. While we have yet to find that “standard answer” tactile sensor, the immense potential of this field is undeniable, and I look forward to its next leap forward.
