Step 1: Apply to Ascend for a device and obtain access to an Atlas 800 9000 server. Log in with the account and password that Ascend provides, and make sure you can reach the server.

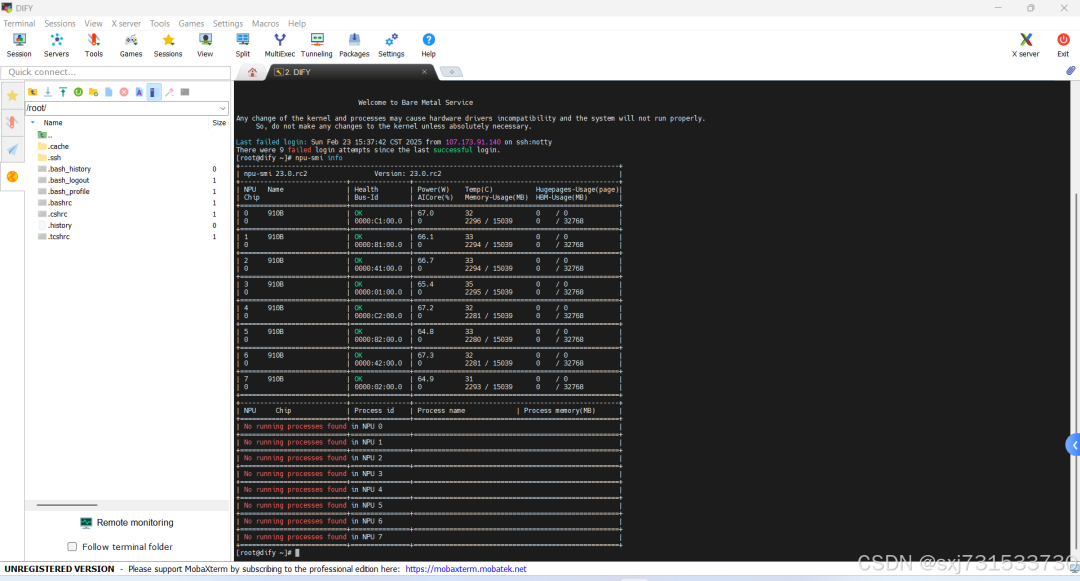

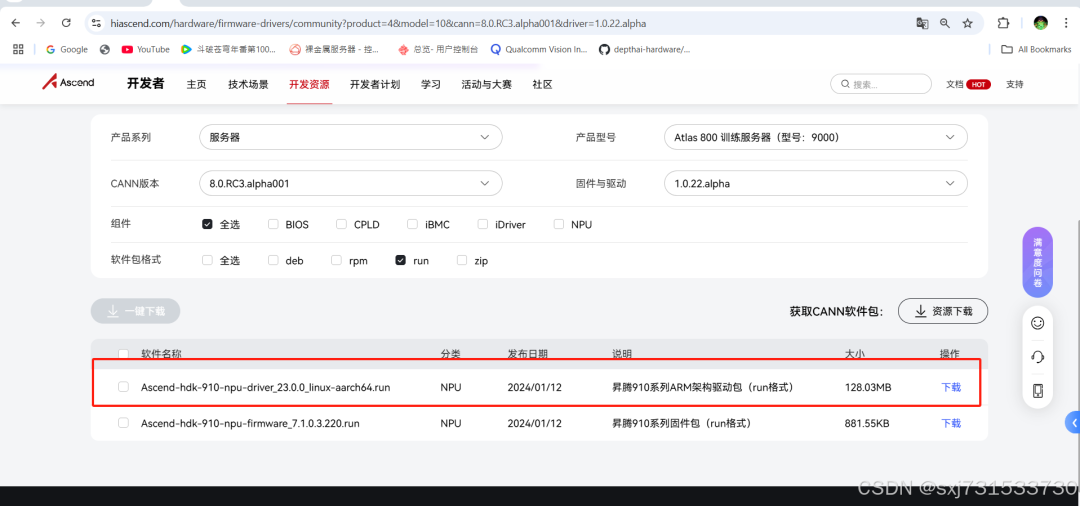

(1) Update the driver. The image provided by Ascend requires a specific driver and firmware version, so upgrade the driver from 23.0.rc2 to 23.0.0. Download link: Community Edition – Firmware and Drivers – Ascend Community

Install the update, then restart the device. Always follow the latest driver releases and announcements from Ascend.

[root@dify HwHiAiUser]# pwd

/home/HwHiAiUser

[root@dify HwHiAiUser]# ls -l

total 131112

-rw------- 1 root root 134251528 Dec 7 16:16 Ascend-hdk-910-npu-driver_23.0.0_linux-aarch64.run

[root@dify HwHiAiUser]# chmod 777 Ascend-hdk-910-npu-driver_23.0.0_linux-aarch64.run

[root@dify HwHiAiUser]# ls

Ascend-hdk-910-npu-driver_23.0.0_linux-aarch64.run

[root@dify HwHiAiUser]# sudo ./Ascend-hdk-910-npu-driver_23.0.0_linux-aarch64.run --full --force

Verifying archive integrity… 100% SHA256 checksums are OK. All good.

Uncompressing ASCEND DRIVER RUN PACKAGE 100%

[Driver] [2025-02-23 15:46:26] [INFO]Start time: 2025-02-23 15:46:26

[Driver] [2025-02-23 15:46:26] [INFO]LogFile: /var/log/ascend_seclog/ascend_install.log

[Driver] [2025-02-23 15:46:26] [INFO]OperationLogFile: /var/log/ascend_seclog/operation.log

[Driver] [2025-02-23 15:46:26] [INFO]base version is 23.0.rc2.

[Driver] [2025-02-23 15:46:26] [WARNING]Do not power off or restart the system during the installation/upgrade

[Driver] [2025-02-23 15:46:26] [INFO]set username and usergroup, HwHiAiUser:HwHiAiUser

[Driver] [2025-02-23 15:46:26] [INFO]Driver package has been installed on the path /usr/local/Ascend, the version is 23.0.rc2, and the version of this package is 23.0.0, do you want to continue? [y/n]

y

[Driver] [2025-02-23 15:46:36] [INFO]driver install type: Direct

[Driver] [2025-02-23 15:46:36] [INFO]upgradePercentage:10%

[Driver] [2025-02-23 15:46:40] [INFO]upgradePercentage:30%

[Driver] [2025-02-23 15:46:40] [INFO]upgradePercentage:40%

[Driver] [2025-02-23 15:46:42] [INFO]upgradePercentage:90%

[Driver] [2025-02-23 15:46:45] [INFO]upgradePercentage:100%

[Driver] [2025-02-23 15:46:45] [INFO]Driver package installed successfully! Reboot needed for installation/upgrade to take effect!

[Driver] [2025-02-23 15:46:45] [INFO]End time: 2025-02-23 15:46:45

[root@dify HwHiAiUser]# sudo reboot

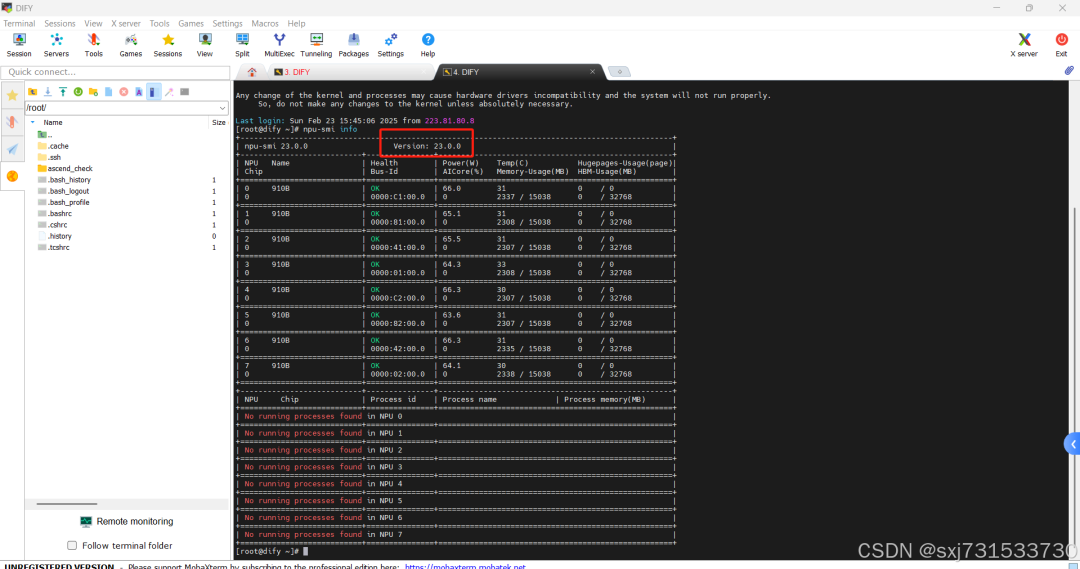

The update is complete, and the driver version is now 23.0.0.
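If you manage several servers, the installed driver version can be checked from a script. A minimal sketch, assuming /usr/local/Ascend/driver/version.info (the same file docker_run.sh mounts later) holds KEY=VALUE lines with a Version key; check your own file, since that format is an assumption. It also assumes a release candidate such as 23.0.rc2 sorts before the final release 23.0.0. The helper names are hypothetical.

```python
import re
from pathlib import Path

# Assumed location and format: KEY=VALUE lines, e.g. "Version=23.0.0".
VERSION_INFO = Path("/usr/local/Ascend/driver/version.info")

# Matches "23.0.0" (major.minor.patch) or "23.0.rc2" (major.minor.rcN).
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(?:rc(\d+)|(\d+))", re.IGNORECASE)


def parse_version_info(text: str) -> dict:
    """Parse KEY=VALUE lines into a dict, skipping blanks and comments."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        info[key.strip()] = value.strip()
    return info


def version_key(version: str) -> tuple:
    """Sortable key in which an rcN build sorts before the final release."""
    m = _VERSION_RE.match(version.strip())
    if m is None:
        raise ValueError("unrecognised version string: {!r}".format(version))
    major, minor, rc, patch = m.groups()
    if rc is not None:
        # Pre-release: patch level 0, release flag 0, then the rc number.
        return (int(major), int(minor), 0, 0, int(rc))
    # Final release: flag 1 ranks it above every rc of the same patch level.
    return (int(major), int(minor), int(patch), 1, 0)


def needs_upgrade(installed: str, target: str) -> bool:
    """True if the installed driver is older than the target version."""
    return version_key(installed) < version_key(target)


if __name__ == "__main__":
    if VERSION_INFO.exists():
        installed = parse_version_info(VERSION_INFO.read_text()).get("Version", "")
        print("installed driver:", installed or "<no Version key>")
        if installed:
            print("upgrade to 23.0.0 needed:", needs_upgrade(installed, "23.0.0"))
    else:
        print(VERSION_INFO, "not found")
```

Treating rcN as a pre-release keeps 23.0.rc2 below 23.0.0, which a plain string comparison would get wrong.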

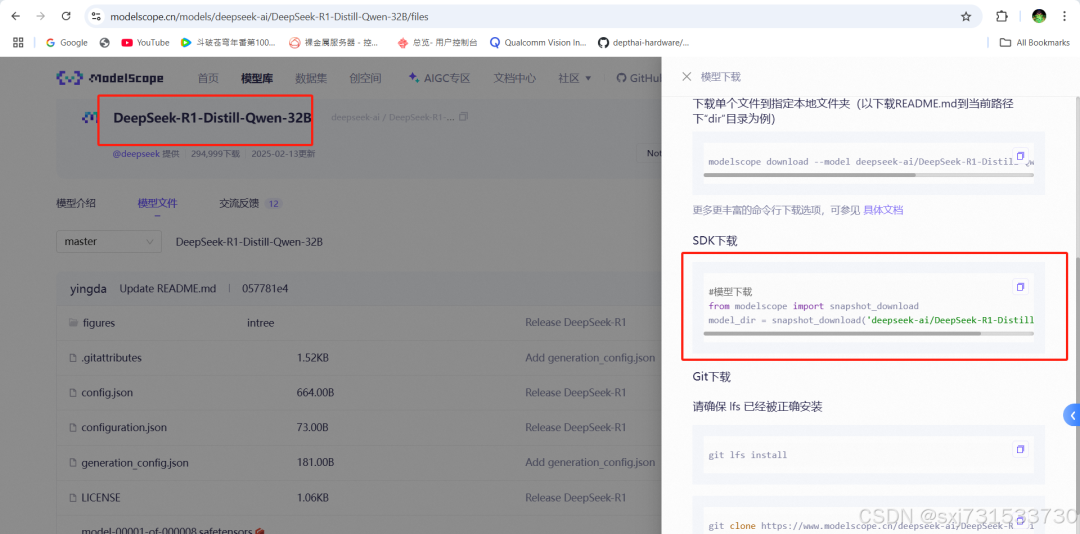

(2) Download the base model first. Later we will also mount an embedding model and a re-ranking model alongside this inference model. Download DeepSeek-R1-Distill-Qwen-32B from the ModelScope community, following the official guide for the download method.

Use a Python script to download the model.

[root@dify HwHiAiUser]# pwd

/home/HwHiAiUser

[root@dify HwHiAiUser]# pip3 install modelscope==1.18.0 -i https://mirrors.tuna.tsinghua.edu.cn/pypi/web/simple

[root@dify HwHiAiUser]# python3

Python 3.7.0 (default, May 11 2024, 10:32:14)

[GCC 7.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import modelscope

>>> exit()

[root@dify HwHiAiUser]# cat down.py

# Model download

from modelscope import snapshot_download

model_dir = snapshot_download('deepseek-ai/DeepSeek-R1-Distill-Qwen-32B', cache_dir=".")

[root@dify HwHiAiUser]# python3 down.py

Downloading [figures/benchmark.jpg]: 100%|██████████████████████████████████████████████████████████████████████| 759k/759k [00:00<00:00, 1.78MB/s]

Downloading [config.json]: 100%|██████████████████████████████████████████████████████████████████████████████████| 664/664 [00:00<00:00, 2.10kB/s]

Downloading [configuration.json]: 100%|███████████████████████████████████████████████████████████████████████████| 73.0/73.0 [00:00<00:00, 233B/s]

Downloading [generation_config.json]: 100%|█████████████████████████████████████████████████████████████████████████| 181/181 [00:00<00:00, 686B/s]

Downloading [LICENSE]: 100%|██████████████████████████████████████████████████████████████████████████████████| 1.04k/1.04k [00:00<00:00, 2.92kB/s]

Downloading [model-00001-of-000008.safetensors]: 0%| | 1.00M/8.19G [00:00<59:21, 2.47MB/s]

Downloading [model-00001-of-000008.safetensors]: 0%| | 16.0M/8.19G [00:00<03:43, 39.3MB/s]

Once the download completes, check the weight directory.

[root@dify HwHiAiUser]# pwd

/home/HwHiAiUser

[root@dify HwHiAiUser]# tree -L 2

├── Ascend-hdk-910-npu-driver_23.0.0_linux-aarch64.run

├── deepseek-ai

│ ├── DeepSeek-R1-Distill-Qwen-32B

└── down.py

3 directories, 3 files
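A large download can stop partway, so it is worth confirming that every weight shard arrived before moving on. A minimal sketch, assuming the downloaded directory follows the usual Hugging Face sharded safetensors layout, where model.safetensors.index.json maps each tensor name to a shard file under weight_map. The function name is hypothetical.

```python
import json
from pathlib import Path

# Directory created by down.py (snapshot_download with cache_dir=".").
MODEL_DIR = Path("/home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B")


def missing_shards(model_dir) -> list:
    """Return shard files referenced by the index but absent from model_dir."""
    root = Path(model_dir)
    with (root / "model.safetensors.index.json").open(encoding="utf-8") as f:
        index = json.load(f)
    # weight_map is {tensor_name: shard_file}; many tensors share one shard.
    shards = sorted(set(index["weight_map"].values()))
    return [name for name in shards if not (root / name).is_file()]


if __name__ == "__main__":
    if (MODEL_DIR / "model.safetensors.index.json").is_file():
        missing = missing_shards(MODEL_DIR)
        print("all shards present" if not missing else "missing: {}".format(missing))
    else:
        print("no safetensors index found under", MODEL_DIR)
```

This only checks that the files exist; it does not verify their size or checksums.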

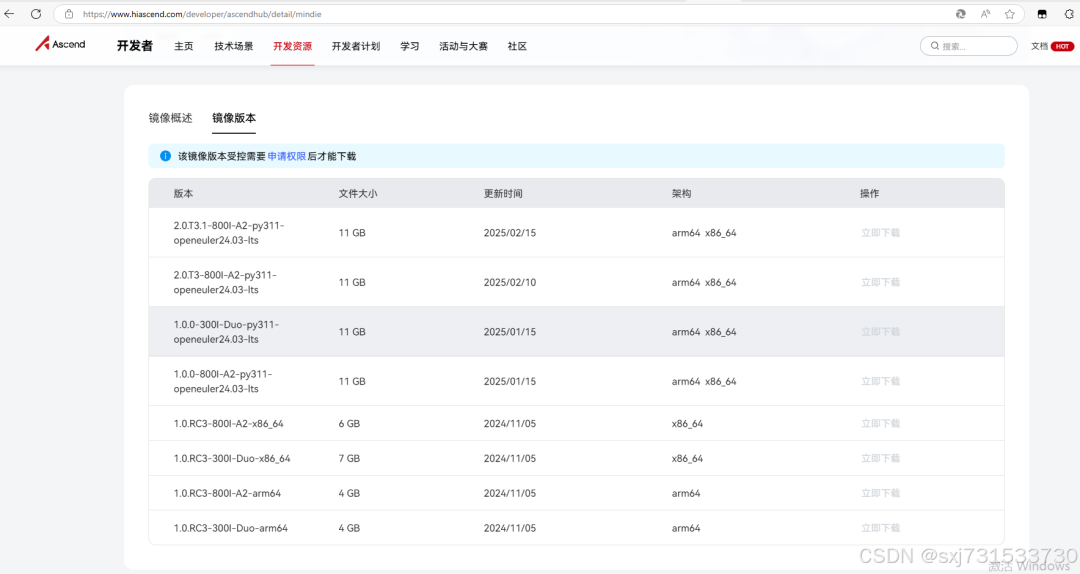

Step 2: Build the Ascend MindIE environment from the official image; see the Ascend image repository (https://www.hiascend.com/developer/ascendhub/detail/mindie). Because we plan to test the DeepSeek-R1-Distill-Qwen-32B-W8A8 model on two NPUs, create a container that mounts two cards.

(1) Pull the image for the Atlas 800 9000. Pulling from the official source is recommended; choose the image that matches your device model, and follow the official guidelines throughout. The Qingdao image used below is based on the official image, with minor modifications that do not affect operation.

You can also pull the image from the public link below to create a dual-card container.

[root@dify HwHiAiUser]# yum install docker

[root@dify HwHiAiUser]# docker pull swr.cn-east-317.qdrgznjszx.com/sxj731533730/mindie:atlas_800_9000

Error response from daemon: Get https://swr.cn-east-317.qdrgznjszx.com/v2/: x509: certificate signed by unknown authority

[root@dify HwHiAiUser]#

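The pull fails because Docker does not trust the registry's certificate. Fix this in the Docker daemon configuration: add the MindIE registry as an insecure registry and add a registry mirror.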

Solution: edit /etc/docker/daemon.json.

[root@dify HwHiAiUser]# vim /etc/docker/daemon.json

Fill in the following content (if the file already has settings, merge these keys into it):

{ "insecure-registries": ["https://swr.cn-east-317.qdrgznjszx.com"], "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"] }

Save and exit, then restart Docker and pull the image again.

[root@dify HwHiAiUser]# systemctl restart docker.service

[root@dify HwHiAiUser]# docker pull swr.cn-east-317.qdrgznjszx.com/sxj731533730/mindie:atlas_800_9000

atlas_800_9000: Pulling from qd-aicc/mindie

edab87ea811e: Pull complete

72906c864c93: Pull complete

98f62a370e96: Pull complete

Digest: sha256:6ceefe4506f58084717ec9bed7df75e51032fdd709d791a627084fe4bd92abea

Status: Downloaded newer image for swr.cn-east-317.qdrgznjszx.com/qd-aicc/mindie:atlas_800_9000

[root@dify HwHiAiUser]#
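Editing daemon.json by hand can overwrite settings that are already there. A minimal sketch of a merge instead, assuming the file is plain JSON: it keeps existing keys and adds the registry and mirror only if they are missing. It stores the registry as a bare host name, the host[:port] form the Docker documentation shows for insecure-registries. The function name is hypothetical; restart Docker afterwards as above.

```python
import json
from pathlib import Path

DAEMON_JSON = Path("/etc/docker/daemon.json")


def merge_registry_settings(config: dict, insecure_registry: str, mirror: str) -> dict:
    """Return a copy of config with the registry and mirror added once each."""
    merged = dict(config)
    # insecure-registries takes host[:port], so drop any scheme prefix.
    host = insecure_registry.split("://", 1)[-1].rstrip("/")
    registries = list(merged.get("insecure-registries", []))
    if host not in registries:
        registries.append(host)
    merged["insecure-registries"] = registries
    mirrors = list(merged.get("registry-mirrors", []))
    if mirror not in mirrors:
        mirrors.append(mirror)
    merged["registry-mirrors"] = mirrors
    return merged


if __name__ == "__main__":
    text = DAEMON_JSON.read_text() if DAEMON_JSON.exists() else ""
    current = json.loads(text) if text.strip() else {}
    updated = merge_registry_settings(
        current,
        "https://swr.cn-east-317.qdrgznjszx.com",
        "https://docker.mirrors.ustc.edu.cn",
    )
    # Print instead of writing, so the result can be reviewed first.
    print(json.dumps(updated, indent=2))
```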

Next, create a container and enter it. We will run inference for the DeepSeek-R1-Distill-Qwen-32B-W8A8 model on two Ascend NPUs, so the container mounts two cards: cards 6 and 7. The remaining cards (0-5) can run the text embedding and re-ranking models. Create the container startup script:

[root@dify ~]# cd /home/HwHiAiUser/

[root@dify HwHiAiUser]# ls

Ascend-hdk-910-npu-driver_23.0.0_linux-aarch64.run deepseek-ai down.py

[root@dify HwHiAiUser]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

swr.cn-east-317.qdrgznjszx.com/sxj731533730/mindie atlas_800_9000 69f30d0c15be 5 weeks ago 16.5GB

[root@dify HwHiAiUser]# vim docker_run.sh

[root@dify HwHiAiUser]# cat docker_run.sh

#!/bin/bash

docker_images=swr.cn-east-317.qdrgznjszx.com/sxj731533730/mindie:atlas_800_9000

model_dir=/home/HwHiAiUser # Modify the mount directory according to actual conditions

docker run -it --name qdaicc --ipc=host --net=host \

--device=/dev/davinci6 \

--device=/dev/davinci7 \

--device=/dev/davinci_manager \

--device=/dev/devmm_svm \

--device=/dev/hisi_hdc \

-v /usr/local/dcmi:/usr/local/dcmi \

-v /usr/local/bin/npu-smi:/usr/local/bin/npu-smi \

-v /usr/local/Ascend/driver/lib64/common:/usr/local/Ascend/driver/lib64/common \

-v /usr/local/Ascend/driver/lib64/driver:/usr/local/Ascend/driver/lib64/driver \

-v /etc/ascend_install.info:/etc/ascend_install.info \

-v /etc/vnpu.cfg:/etc/vnpu.cfg \

-v /usr/local/Ascend/driver/version.info:/usr/local/Ascend/driver/version.info \

-v ${model_dir}:${model_dir} \

-v /var/log/npu:/usr/slog ${docker_images} \

/bin/bash

[root@dify HwHiAiUser]#

Save the script with the content above, then run it to start the container.

[root@dify HwHiAiUser]# bash docker_run.sh

(Python310) root@dify:/usr/local/Ascend/atb-models# cd /home/HwHiAiUser/

(Python310) root@dify:/home/HwHiAiUser# ls

Ascend-hdk-910-npu-driver_23.0.0_linux-aarch64.run deepseek-ai docker_run.sh down.py

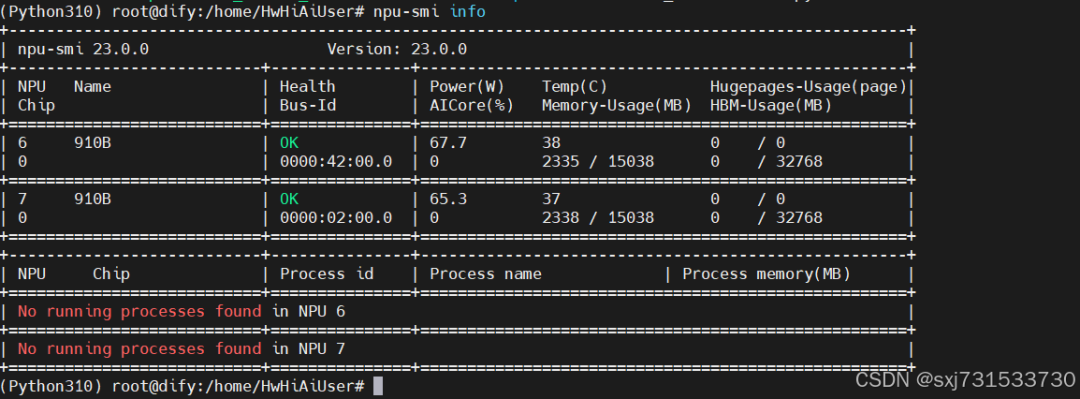

Because /home/HwHiAiUser/ is mounted into the container, the weights downloaded on the host are visible inside it. Also check that the container sees two cards.
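docker_run.sh hard-codes cards 6 and 7. If you later start containers for the embedding and re-ranking models on other cards, it can help to generate the same command from a list of card IDs. A minimal sketch that reproduces the flags of the script above; the function name is hypothetical. Inside the container the mounted cards are presumably addressed as logical devices starting from 0, which is consistent with the npuDeviceIds value of [[0,1]] used in the MindIE configuration.

```python
import shlex

# Host paths that docker_run.sh mounts at the same location inside the container.
DRIVER_MOUNTS = [
    "/usr/local/dcmi",
    "/usr/local/bin/npu-smi",
    "/usr/local/Ascend/driver/lib64/common",
    "/usr/local/Ascend/driver/lib64/driver",
    "/etc/ascend_install.info",
    "/etc/vnpu.cfg",
    "/usr/local/Ascend/driver/version.info",
]


def build_docker_run(name: str, image: str, card_ids: list, model_dir: str) -> list:
    """Return the docker run argument list for a container that sees card_ids only."""
    args = ["docker", "run", "-it", "--name", name, "--ipc=host", "--net=host"]
    # One /dev/davinciN node per NPU card, plus the shared management devices.
    args += ["--device=/dev/davinci{}".format(i) for i in card_ids]
    args += ["--device=/dev/davinci_manager", "--device=/dev/devmm_svm", "--device=/dev/hisi_hdc"]
    for path in DRIVER_MOUNTS:
        args += ["-v", "{0}:{0}".format(path)]
    args += ["-v", "{0}:{0}".format(model_dir), "-v", "/var/log/npu:/usr/slog"]
    args += [image, "/bin/bash"]
    return args


if __name__ == "__main__":
    cmd = build_docker_run(
        "qdaicc",
        "swr.cn-east-317.qdrgznjszx.com/sxj731533730/mindie:atlas_800_9000",
        [6, 7],
        "/home/HwHiAiUser",
    )
    # shlex.join needs Python 3.8+, and the host runs 3.7, so quote manually.
    print(" ".join(shlex.quote(a) for a in cmd))
```

Printing the command rather than running it keeps the sketch side-effect free; paste the output into a shell once it looks right.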

(2) Quantize the model. Following the Ascend/ModelZoo-PyTorch – Gitee.com guide (https://gitee.com/ascend/ModelZoo-PyTorch/tree/master/MindIE/LLM/DeepSeek/DeepSeek-R1-Distill-Qwen-32B), go straight to the weight quantization step, since the image already has the environment configured. Download the quantization source code (msit) on the host, outside the container, then enter the container to run the quantization; if anything is missing during installation, install it with pip. An 8-card container is recommended for this step: quantizing on the NPUs of a dual-card container runs out of NPU memory, and the alternative is to convert the model on the CPU. I did not want to create another container, so I used CPU quantization.

[root@dify HwHiAiUser]# pwd

/home/HwHiAiUser

[root@dify HwHiAiUser]# git clone https://gitee.com/ascend/msit.git

Cloning into 'msit'...

remote: Enumerating objects: 81125, done.

remote: Total 81125 (delta 0), reused 0 (delta 0), pack-reused 81125

Receiving objects: 100% (81125/81125), 71.73 MiB | 12.14 MiB/s, done.

Resolving deltas: 100% (59704/59704), done.

[root@dify HwHiAiUser]# cd msit/

.git/ .gitee/ msit/ msmodelslim/ msserviceprofiler/

[root@dify Qwen]# docker start b5399c4da202

b5399c4da202

[root@dify Qwen]# docker exec -it b5399c4da202 /bin/bash

(Python310) root@dify:/home/HwHiAiUser/msit# cd msmodelslim/

(Python310) root@dify:/home/HwHiAiUser/msit/msmodelslim# bash install.sh

# Installation succeeded; install anything that is missing with pip

(Python310) root@dify:/home/HwHiAiUser# cd /home/HwHiAiUser/msit/msmodelslim/example/Qwen

# Quantize the model

(Python310) root@dify:/home/HwHiAiUser/msit/msmodelslim/example/Qwen# python3 quant_qwen.py --model_path /home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B/ --save_directory /home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B-W8A8 --calib_file ../common/boolq.jsonl --w_bit 8 --a_bit 8 --device_type npu

2025-02-23 18:15:25,404 - msmodelslim-logger - WARNING - The current CANN version does not support LayerSelector quantile method.

# Or quantize on the CPU

(Python310) root@dify:/home/HwHiAiUser/msit/msmodelslim/example/Qwen# python3 quant_qwen.py --model_path /home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B/ --save_directory /home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B-W8A8 --calib_file ../common/boolq.jsonl --w_bit 8 --a_bit 8 --device_type cpu

2025-02-23 18:25:10,776 - msmodelslim-logger - WARNING - The current CANN version does not support LayerSelector quantile method.

2025-02-23 18:25:10,783 - msmodelslim-logger - WARNING - `cpu` is set as `dev_type`, `dev_id` cannot be specified manually!
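Rough arithmetic shows why quantizing on the NPUs of a dual-card container runs short of memory. This is a back-of-the-envelope sketch under stated assumptions: a nominal 32 billion parameters, 2 bytes per parameter for the float16/bfloat16 source weights, 1 byte for the W8A8 weights, and 32 GiB of device memory per Ascend 910 card (check your own cards with npu-smi). It counts weights only and ignores activations, calibration data, and framework overhead, all of which make things tighter.

```python
GIB = 2 ** 30  # bytes per GiB


def weight_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for the weights alone, in GiB."""
    return num_params * bytes_per_param / GIB


if __name__ == "__main__":
    params = 32e9    # nominal parameter count (assumption)
    card_gib = 32    # assumed device memory per Ascend 910 card, in GiB
    fp16 = weight_gib(params, 2)  # float16/bfloat16: 2 bytes per weight
    int8 = weight_gib(params, 1)  # W8A8: 1 byte per weight
    print("fp16 weights: {:.1f} GiB vs {} GiB on two cards".format(fp16, 2 * card_gib))
    print("W8A8 weights: {:.1f} GiB, about {:.1f} GiB per card at worldSize 2".format(int8, int8 / 2))
```

Under these assumptions the float16 weights alone take about 59.6 GiB of the 64 GiB on two cards, leaving little room for calibration, while the quantized W8A8 weights need roughly half that.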

After conversion, the quantized weights are written to the DeepSeek-R1-Distill-Qwen-32B-W8A8 directory.

(Python310) root@dify:/home/HwHiAiUser/deepseek-ai# cd /home/HwHiAiUser/msit/msmodelslim/example/Qwen

(Python310) root@dify:/home/HwHiAiUser/msit/msmodelslim/example/Qwen# ls /home/HwHiAiUser/deepseek-ai/

DeepSeek-R1-Distill-Qwen-32B DeepSeek-R1-Distill-Qwen-32B-W8A8

(Python310) root@dify:/home/HwHiAiUser/msit/msmodelslim/example/Qwen#

The Atlas 800 9000 does not support bf16, so change the data type (torch_dtype) in the quantized model's config.json from bfloat16 to float16. For other devices, refer to the Ascend manual.

(Python310) root@dify:/home/HwHiAiUser/msit/msmodelslim/example/Qwen# vim /home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B-W8A8/config.json

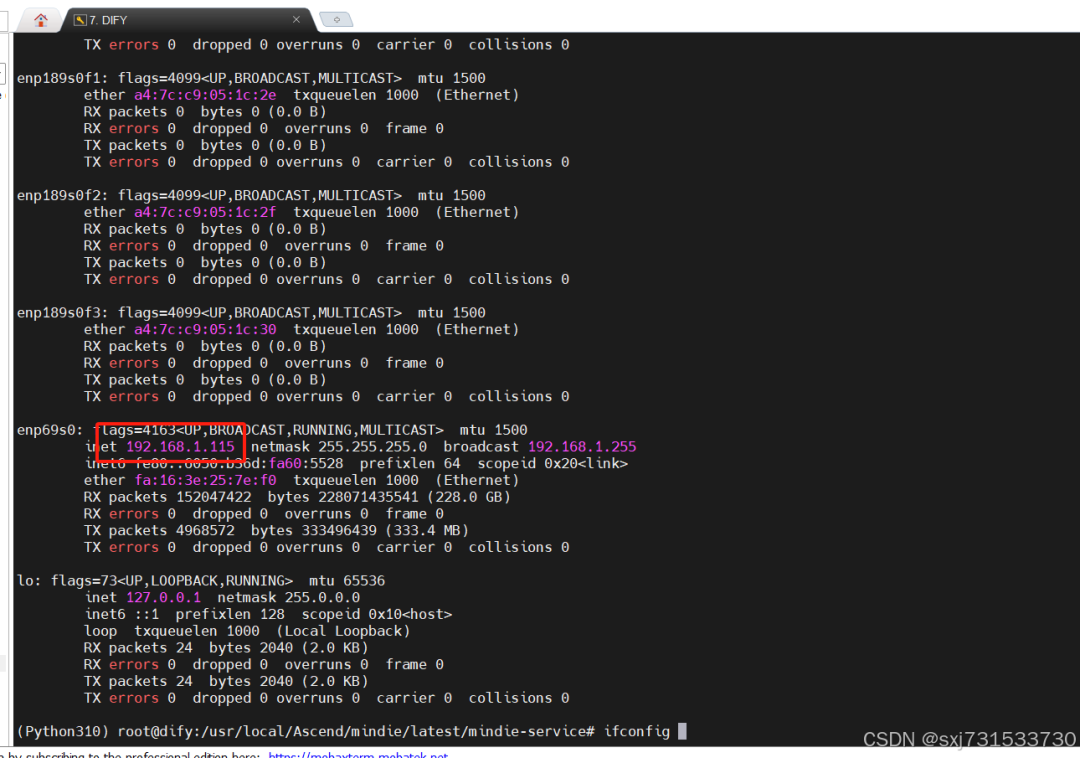
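The same edit can be scripted, which helps if the quantized weights are regenerated. A minimal sketch, assuming the dtype is stored in the Hugging Face-style torch_dtype field of config.json (compare with image 7 and the Ascend manual for your device). The function name is hypothetical; it keeps a .bak copy before writing.

```python
import json
import shutil
from pathlib import Path

CONFIG = Path("/home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B-W8A8/config.json")


def set_torch_dtype(config_path: Path, dtype: str = "float16") -> str:
    """Set torch_dtype in config.json and return the previous value ('' if unset)."""
    config = json.loads(config_path.read_text(encoding="utf-8"))
    previous = config.get("torch_dtype", "")
    if previous != dtype:
        # Keep a copy of the original file next to it before rewriting.
        shutil.copy2(config_path, config_path.with_name(config_path.name + ".bak"))
        config["torch_dtype"] = dtype
        config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return previous


if __name__ == "__main__":
    if CONFIG.exists():
        old = set_torch_dtype(CONFIG)
        print("torch_dtype: {} -> float16".format(old or "<unset>"))
    else:
        print(CONFIG, "not found")
```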

(3) Start the MindIE service. First, record the local IP address, model path, and model name.

Model weight path: /home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B-W8A8/

Model name: DeepSeek-R1-Distill-Qwen-32B-W8A8

Modify the configuration file.

(Python310) root@dify:/usr/local/Ascend/mindie/latest/mindie-service# pwd

/usr/local/Ascend/mindie/latest/mindie-service

(Python310) root@dify:/usr/local/Ascend/mindie/latest/mindie-service# vim conf/config.json

Notes on the changes: ipAddress matters most, because the Dify setup later uses this address to reach the inference engine. For the other fields, see the MindIE manual:

MindSpore Models Service Usage – MindSpore Models Usage – Model Inference Usage Process – MindIE LLM Development Guide – Large Model Development – MindIE1.0.0 Development Documentation – Ascend Community

https://www.hiascend.com/document/detail/zh/mindie/100/mindiellm/llmdev/mindie_llm0012.html

Single-machine inference – Configure MindIE Server – Configure MindIE-MindIE Installation Guide – Environment Preparation – MindIE1.0.0 Development Documentation – Ascend Community

https://www.hiascend.com/document/detail/zh/mindie/100/envdeployment/instg/mindie_instg_0026.html

"ipAddress" : "192.168.1.115", (change this to the local address)

"httpsEnabled" : false,

"npuDeviceIds" : [[0,1]],

"modelName" : "DeepSeek-R1-Distill-Qwen-32B-W8A8",

"modelWeightPath" : "/home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B-W8A8/",

"maxInputTokenLen" : 4096,

"maxIterTimes" : 4096,

"truncation" : true,

The complete file after modification:

(Python310) root@dify:/usr/local/Ascend/mindie/latest/mindie-service# cat conf/config.json

{

"Version" : "1.0.0",

"LogConfig" :

{

"logLevel" : "Info",

"logFileSize" : 20,

"logFileNum" : 20,

"logPath" : "logs/mindie-server.log"

},

"ServerConfig" :

{

"ipAddress" : "192.168.1.115",

"managementIpAddress" : "127.0.0.2",

"port" : 1025,

"managementPort" : 1026,

"metricsPort" : 1027,

"allowAllZeroIpListening" : false,

"maxLinkNum" : 1000,

"httpsEnabled" : false,

"fullTextEnabled" : false,

"tlsCaPath" : "security/ca/",

"tlsCaFile" : ["ca.pem"],

"tlsCert" : "security/certs/server.pem",

"tlsPk" : "security/keys/server.key.pem",

"tlsPkPwd" : "security/pass/key_pwd.txt",

"tlsCrlPath" : "security/certs/",

"tlsCrlFiles" : ["server_crl.pem"],

"managementTlsCaFile" : ["management_ca.pem"],

"managementTlsCert" : "security/certs/management/server.pem",

"managementTlsPk" : "security/keys/management/server.key.pem",

"managementTlsPkPwd" : "security/pass/management/key_pwd.txt",

"managementTlsCrlPath" : "security/management/certs/",

"managementTlsCrlFiles" : ["server_crl.pem"],

"kmcKsfMaster" : "tools/pmt/master/ksfa",

"kmcKsfStandby" : "tools/pmt/standby/ksfb",

"inferMode" : "standard",

"interCommTLSEnabled" : true,

"interCommPort" : 1121,

"interCommTlsCaPath" : "security/grpc/ca/",

"interCommTlsCaFiles" : ["ca.pem"],

"interCommTlsCert" : "security/grpc/certs/server.pem",

"interCommPk" : "security/grpc/keys/server.key.pem",

"interCommPkPwd" : "security/grpc/pass/key_pwd.txt",

"interCommTlsCrlPath" : "security/grpc/certs/",

"interCommTlsCrlFiles" : ["server_crl.pem"],

"openAiSupport" : "vllm"

},

"BackendConfig" : {

"backendName" : "mindieservice_llm_engine",

"modelInstanceNumber" : 1,

"npuDeviceIds" : [[0,1]],

"tokenizerProcessNumber" : 8,

"multiNodesInferEnabled" : false,

"multiNodesInferPort" : 1120,

"interNodeTLSEnabled" : true,

"interNodeTlsCaPath" : "security/grpc/ca/",

"interNodeTlsCaFiles" : ["ca.pem"],

"interNodeTlsCert" : "security/grpc/certs/server.pem",

"interNodeTlsPk" : "security/grpc/keys/server.key.pem",

"interNodeTlsPkPwd" : "security/grpc/pass/mindie_server_key_pwd.txt",

"interNodeTlsCrlPath" : "security/grpc/certs/",

"interNodeTlsCrlFiles" : ["server_crl.pem"],

"interNodeKmcKsfMaster" : "tools/pmt/master/ksfa",

"interNodeKmcKsfStandby" : "tools/pmt/standby/ksfb",

"ModelDeployConfig" :

{

"maxSeqLen" : 2560,

"maxInputTokenLen" : 4096,

"truncation" : true,

"ModelConfig" : [

{

"modelInstanceType" : "Standard",

"modelName" : "DeepSeek-R1-Distill-Qwen-32B-W8A8",

"modelWeightPath" : "/home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B-W8A8/",

"worldSize" : 2,

"cpuMemSize" : 5,

"npuMemSize" : -1,

"backendType" : "atb",

"trustRemoteCode" : false

}

]

},

"ScheduleConfig" :

{

"templateType" : "Standard",

"templateName" : "Standard_LLM",

"cacheBlockSize" : 128,

"maxPrefillBatchSize" : 50,

"maxPrefillTokens" : 8192,

"prefillTimeMsPerReq" : 150,

"prefillPolicyType" : 0,

"decodeTimeMsPerReq" : 50,

"decodePolicyType" : 0,

"maxBatchSize" : 200,

"maxIterTimes" : 4096,

"maxPreemptCount" : 0,

"supportSelectBatch" : false,

"maxQueueDelayMicroseconds" : 5000

}

}

}
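The handful of fields changed above can also be set from a script, leaving the rest of the file untouched. A minimal sketch based only on the structure of the config.json shown above (ServerConfig, then BackendConfig containing ModelDeployConfig, ModelConfig and ScheduleConfig). The function name is hypothetical, and deriving worldSize from the number of device IDs is an assumption that matches this two-card setup, not a documented MindIE rule.

```python
import json
from pathlib import Path

CONFIG = Path("/usr/local/Ascend/mindie/latest/mindie-service/conf/config.json")


def patch_mindie_config(cfg: dict, ip: str, devices: list, name: str, weight_path: str) -> dict:
    """Apply the edits listed above to a parsed MindIE config.json, in place."""
    if not devices:
        raise ValueError("at least one NPU device ID is required")
    server = cfg["ServerConfig"]
    server["ipAddress"] = ip
    server["httpsEnabled"] = False
    backend = cfg["BackendConfig"]
    # One model instance spanning all listed (container-local) device IDs.
    backend["npuDeviceIds"] = [list(devices)]
    deploy = backend["ModelDeployConfig"]
    deploy["maxInputTokenLen"] = 4096
    deploy["truncation"] = True
    model = deploy["ModelConfig"][0]
    model["modelName"] = name
    model["modelWeightPath"] = weight_path
    # Assumption of this sketch: worldSize equals the number of cards used.
    model["worldSize"] = len(devices)
    backend["ScheduleConfig"]["maxIterTimes"] = 4096
    return cfg


if __name__ == "__main__":
    if CONFIG.exists():
        cfg = json.loads(CONFIG.read_text(encoding="utf-8"))
        patch_mindie_config(
            cfg,
            "192.168.1.115",  # change to the local address
            [0, 1],
            "DeepSeek-R1-Distill-Qwen-32B-W8A8",
            "/home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B-W8A8/",
        )
        # Print for review; write it back only after checking the result.
        print(json.dumps(cfg, indent=4))
```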

Modify the model permissions and start the service.

(Python310) root@dify:/usr/local/Ascend/mindie/latest/mindie-service# chmod -R 750 /home/HwHiAiUser/deepseek-ai/DeepSeek-R1-Distill-Qwen-32B-W8A8/

(Python310) root@dify:/usr/local/Ascend/mindie/latest/mindie-service# ./bin/mindieservice_daemon

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

[2025-02-23 19:04:44,279] [89160] [281464373506464] [llm] [INFO][logging.py-227] : Skip binding cpu.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Daemon start success!

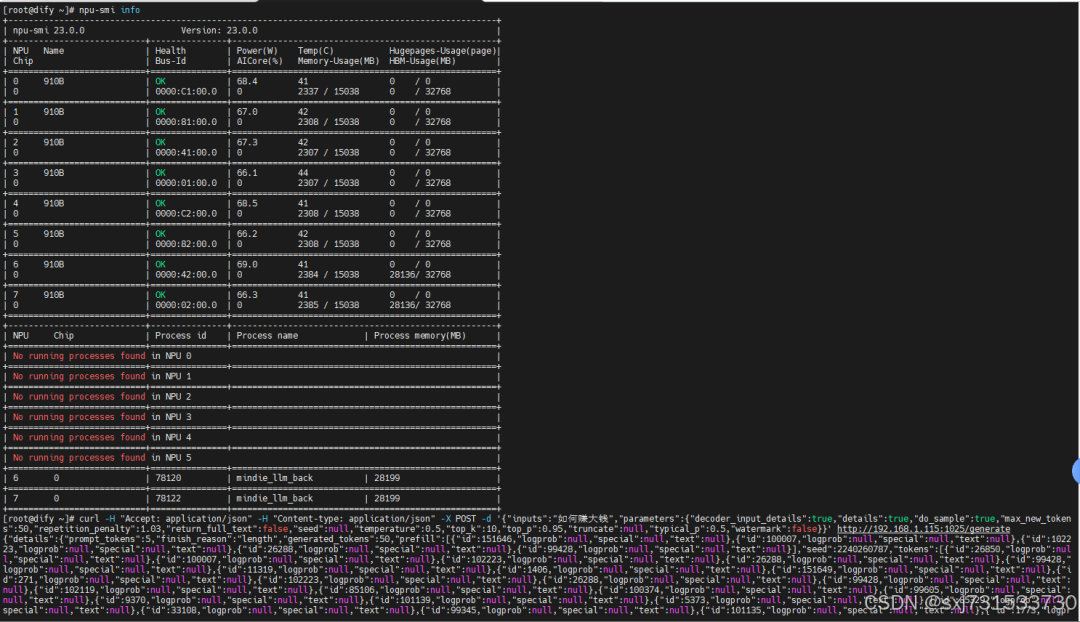
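When scripting the startup, for example before pointing Dify at the service, it can help to wait until the HTTP port accepts connections. A minimal standard-library sketch, assuming the ipAddress and port 1025 from the config above. The function name is hypothetical, and an open port only shows that the server is listening, not that a generation request will succeed.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 300.0, interval: float = 2.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, wait_for_port("192.168.1.115", 1025) returns True once the daemon is listening, or False after five minutes.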

Open a second terminal and check NPU usage (for example with npu-smi info).

Local test:

[root@dify ~]# curl -H "Accept: application/json" -H "Content-type: application/json" -X POST -d '{"inputs":"How to make big money","parameters":{"decoder_input_details":true,"details":true,"do_sample":true,"max_new_tokens":50,"repetition_penalty":1.03,"return_full_text":false,"seed":null,"temperature":0.5,"top_k":10,"top_p":0.95,"truncate":null,"typical_p":0.5,"watermark":false}}' http://192.168.1.115:1025/generate

{"details":{"prompt_tokens":5,"finish_reason":"length","generated_tokens":50,"prefill":[{"id":151646,"logprob":null,"special":null,"text":null},{"id":100007,"logprob":null,"special":null,"text":null},{"id":102223,"logprob":null,"special":null,"text":null},{"id":26288,"logprob":null,"special":null,"text":null},{"id":99428,"logprob":null,"special":null,"text":null}],"seed":2240260787,"tokens":[{"id":26850,"logprob":null,"special":null,"text":null},{"id":100007,"logprob":null,"special":null,"text":null},{"id":102223,"logprob":null,"special":null,"text":null},{"id":26288,"logprob":null,"special":null,"text":null},{"id":99428,"logprob":null,"special":null,"text":null},{"id":11319,"logprob":null,"special":null,"text":null},{"id":1406,"logprob":null,"special":null,"text":null},{"id":151649,"logprob":null,"special":null,"text":null},{"id":271,"logprob":null,"special":null,"text":null},{"id":102223,"logprob":null,"special":null,"text":null},{"id":26288,"logprob":null,"special":null,"text":null},{"id":99428,"logprob":null,"special":null,"text":null},{"id":102119,"logprob":null,"special":null,"text":null},{"id":85106,"logprob":null,"special":null,"text":null},{"id":100374,"logprob":null,"special":null,"text":null},{"id":99605,"logprob":null,"special":null,"text":null},{"id":9370,"logprob":null,"special":null,"text":null},{"id":101139,"logprob":null,"special":null,"text":null},{"id":5373,"logprob":null,"special":null,"text":null},{"id":85329,"logprob":null,"special":null,"text":null},{"id":33108,"logprob":null,"special":null,"text":null},{"id":99345,"logprob":null,"special":null,"text":null},{"id":101135,"logprob":null,"special":null,"text":null},{"id":1773,"logprob":null,"special":null,"text":null},{"id":87752,"logprob":null,"special":null,"text":null},{"id":99639,"logprob":null,"special":null,"text":null},{"id":97084,"logprob":null,"special":null,"text":null},{"id":102716,"logprob":null,"special":null,"text":null},{"id":39907,"logprob":null,"special":null,"text":null},{"id":48443,"logprob":null,"special":null,"text":null},{"id":14374,"logprob":null,"special":null,"text":null},{"id":220,"logprob":null,"special":null,"text":null},{"id":16,"logprob":null,"special":null,"text":null},{"id":13,"logprob":null,"special":null,"text":null},{"id":3070,"logprob":null,"special":null,"text":null},{"id":99716,"logprob":null,"special":null,"text":null},{"id":102447,"logprob":null,"special":null,"text":null},{"id":1019,"logprob":null,"special":null,"text":null},{"id":256,"logprob":null,"special":null,"text":null},{"id":481,"logprob":null,"special":null,"text":null},{"id":3070,"logprob":null,"special":null,"text":null},{"id":104023,"logprob":null,"special":null,"text":null},{"id":5373,"logprob":null,"special":null,"text":null},{"id":100025,"logprob":null,"special":null,"text":null},{"id":334,"logprob":null,"special":null,"text":null},{"id":5122,"logprob":null,"special":null,"text":null},{"id":67338,"logprob":null,"special":null,"text":null},{"id":101930,"logprob":null,"special":null,"text":null},{"id":99716,"logprob":null,"special":null,"text":null},{"id":101172,"logprob":null,"special":null,"text":null}]}}
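The same smoke test can be written in Python with only the standard library, which is easier to adapt than a one-line curl. A minimal sketch that posts the same /generate payload as the curl command above. The helper names are hypothetical, and the only response fields it relies on are the ones visible above (details.generated_tokens and details.finish_reason).

```python
import json
import urllib.request


def build_generate_request(host: str, port: int, prompt: str, max_new_tokens: int = 50) -> urllib.request.Request:
    """Build a POST to /generate with the same parameters as the curl test."""
    payload = {
        "inputs": prompt,
        "parameters": {
            "decoder_input_details": True,
            "details": True,
            "do_sample": True,
            "max_new_tokens": max_new_tokens,
            "repetition_penalty": 1.03,
            "return_full_text": False,
            "seed": None,
            "temperature": 0.5,
            "top_k": 10,
            "top_p": 0.95,
            "truncate": None,
            "typical_p": 0.5,
            "watermark": False,
        },
    }
    return urllib.request.Request(
        "http://{}:{}/generate".format(host, port),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )


def generate(host: str, port: int, prompt: str, timeout: float = 120.0) -> dict:
    """Send the request and return the parsed JSON response."""
    req = build_generate_request(host, port, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, generate("192.168.1.115", 1025, "How to make big money")["details"]["generated_tokens"] reads the token count; in the run above it was 50, with finish_reason "length".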