1.What is Multi-Agent

- What is an agent?

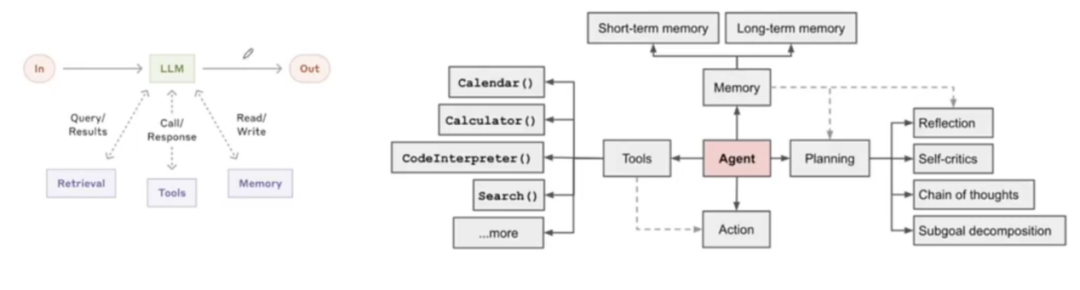

An agent is an entity or program that can autonomously perceive its environment, analyze information, and take actions to achieve specific goals. Current agentic systems are usually divided into two camps, workflows and agents, which differ architecturally:

- Workflows: Systems that orchestrate large language models and tools through predefined code paths.

- Agents: Systems in which large language models dynamically direct their own processes and tool usage, keeping control over how they complete tasks.

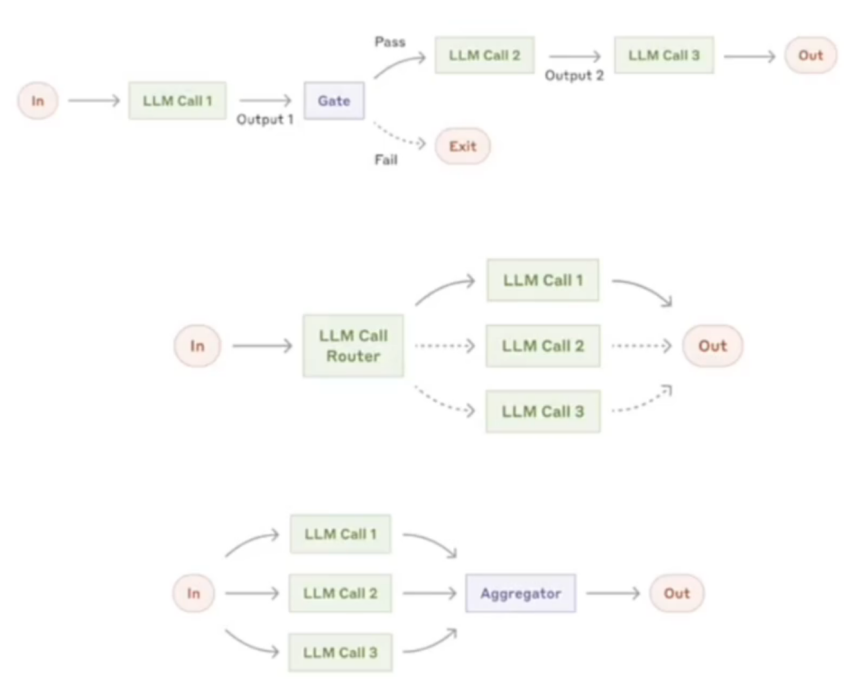

Workflow: The core idea is to run manually orchestrated serial and parallel tasks that implement a set of business rules. The three common patterns are shown in the figure below:

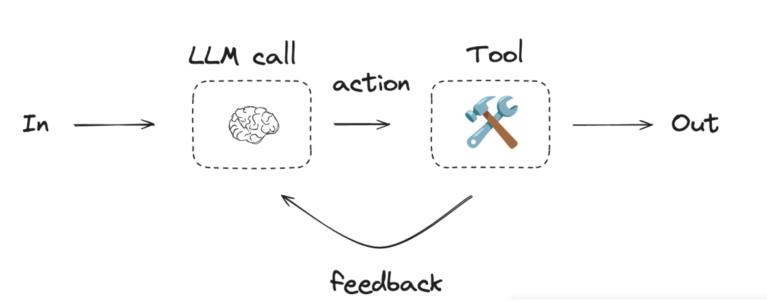

Agent: More flexible than workflows, allowing large models to autonomously choose the appropriate tools.
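The difference between the two styles can be sketched in a few lines. This is a minimal illustration, not a real framework: `searchTool`, `summarizeTool`, `registry`, `Decision`, and `fakeModel` are hypothetical names, and `fakeModel` is a hard-coded stand-in for an LLM call.

```typescript
// A tool is a named function from text to text.
type Tool = (input: string) => string;

const searchTool: Tool = (query) => `results for "${query}"`;
const summarizeTool: Tool = (text) => `summary of [${text}]`;

// Workflow: the developer fixes the path in code (search, then summarize).
function runWorkflow(query: string): string {
  return summarizeTool(searchTool(query));
}

// Agent: a model chooses the next tool at run time.
type Decision = { tool: string; input: string } | { done: string };

const registry: { [name: string]: Tool | undefined } = {
  search: searchTool,
  summarize: summarizeTool,
};

// Hypothetical stand-in for an LLM: decides from the task and what it has seen so far.
function fakeModel(task: string, observations: string[]): Decision {
  if (observations.length === 0) return { tool: "search", input: task };
  const last = observations[observations.length - 1] ?? "";
  if (observations.length === 1) return { tool: "summarize", input: last };
  return { done: last };
}

function runAgent(task: string, maxSteps = 5): string {
  const observations: string[] = [];
  for (let step = 0; step < maxSteps; step++) {
    const decision = fakeModel(task, observations);
    if ("done" in decision) return decision.done;
    const tool = registry[decision.tool];
    if (!tool) throw new Error(`unknown tool: ${decision.tool}`);
    observations.push(tool(decision.input));
  }
  throw new Error("step limit reached");
}
```

In `runWorkflow` the order of calls lives in the source code; in `runAgent` it lives in the model's decisions, and the loop only enforces a step limit and a tool whitelist.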

- From single-agent to multi-agent

Multi-Agent systems consist of multiple agents with a certain degree of autonomy and interaction capabilities. These agents collaborate, compete, or negotiate to jointly complete complex tasks or solve specific problems.

1. Each agent in a Multi-Agent system only needs to focus on its own role and the information relevant to it; it does not need to hold the full history. A Single-Agent such as AutoGPT must remember all historical information, so it places higher demands on memory capacity (the sequence length the large model supports) when tasks are complex and histories are long. Multi-Agent has a clear advantage here.

2. Role-playing narrows each agent to the perspective of its role, which makes the large model's output more stable. A single agent that mixes many tasks cannot achieve this.

3. Multi-Agent scales better, while the scalability of Single-Agent depends on token-saving memory strategies. As tasks grow more complex, the context fed to the large model gets longer, which risks degraded performance (for example, large models may lose key information when processing long sequences). Multi-Agent collaboration largely avoids this problem, because each agent completes only a specific sub-task, which generally does not produce a long context.

4. Multi-Agent can explore multiple solutions in parallel and then select the best one. A single agent either lacks this ability or makes it relatively cumbersome to implement.
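Points 1, 3, and 4 can be made concrete with a small sketch. It assumes a hypothetical `Message` record tagged with the sub-task it belongs to; `singleAgentContext`, `scopedContext`, and `pickBest` are illustrative helpers, not part of any framework.

```typescript
interface Message {
  subtask: string;
  text: string;
}

// Single agent: every step carries the full history in its context.
function singleAgentContext(log: Message[]): string[] {
  return log.map((m) => m.text);
}

// Multi-agent: each agent sees only the messages for its own sub-task.
function scopedContext(log: Message[], subtask: string): string[] {
  return log.filter((m) => m.subtask === subtask).map((m) => m.text);
}

// Parallel exploration: several agents propose candidates, the best-scored one is kept.
function pickBest<T>(candidates: T[], score: (candidate: T) => number): T {
  if (candidates.length === 0) throw new Error("no candidates");
  return candidates.reduce((best, c) => (score(c) > score(best) ? c : best));
}
```

The scoped context grows with the size of one sub-task rather than with the whole conversation, which is the scalability argument made above.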

2.Examples of Multi-Agent: Manus

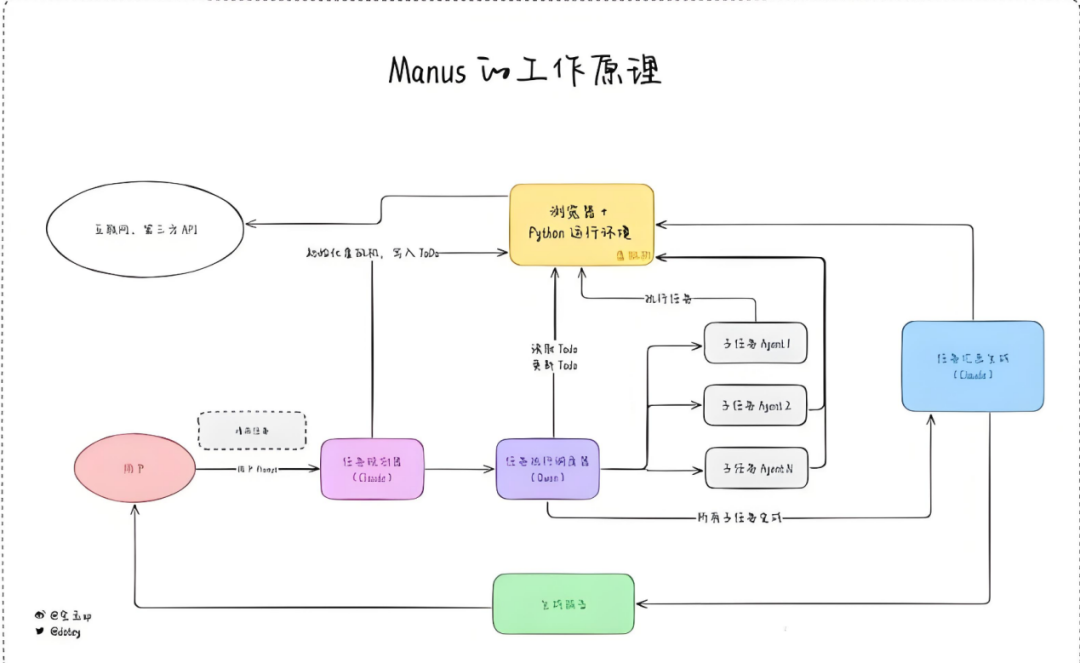

Manus workflow: A complete closed loop from demand to result.

- Task reception: Users submit task requirements, such as “Help me write a market analysis report”.

- Task understanding: The planning module analyzes user input, combining user preferences and historical data from the memory module to accurately understand the task objectives.

- Task decomposition: Break down the task into sub-tasks such as “data collection”, “trend analysis”, “report writing”.

- Planning: Develop execution plans for each sub-task, specifying the required tools and resources.

- Autonomous execution: The multi-agent system starts, with data collection agents gathering data, analysis agents processing data, and writing agents generating reports.

- Quality inspection: The system monitors execution quality in real-time and self-corrects when necessary.

- Result integration: Integrate the outputs of each agent into a complete market analysis report.

- Delivery to users: Provide the final results to users and optimize based on user feedback.
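The loop above can be sketched as a small pipeline. This is only an illustration of the described steps, not Manus's implementation: `PLAN`, `passesCheck`, and `runPipeline` are hypothetical, the plan is hard-coded where a planning module would generate it, and each worker is a string function standing in for an LLM-backed agent.

```typescript
type Worker = (input: string) => string;

interface SubTask {
  name: string;
  run: Worker;
}

// Task decomposition and planning: fixed here; a planner would derive it from the task.
const PLAN: SubTask[] = [
  { name: "data collection", run: (task) => `data for ${task}` },
  { name: "trend analysis", run: (data) => `trends in ${data}` },
  { name: "report writing", run: (analysis) => `report on ${analysis}` },
];

// Quality inspection: a trivial check standing in for a real evaluator.
function passesCheck(output: string): boolean {
  return output.trim().length > 0;
}

function runPipeline(task: string, maxRetries = 1): string {
  const sections: string[] = [];
  let input = task;
  for (const step of PLAN) {
    let output = step.run(input);
    // Self-correction: retry a step whose output fails the check.
    for (let retry = 0; retry < maxRetries && !passesCheck(output); retry++) {
      output = step.run(input);
    }
    if (!passesCheck(output)) throw new Error(`step failed: ${step.name}`);
    sections.push(`${step.name}: ${output}`);
    input = output; // each sub-task feeds the next one
  }
  // Result integration: merge the per-agent outputs into one deliverable.
  return sections.join("\n");
}
```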

3.Communication between Multi-Agents

In such a system, multiple autonomous agents work together, and their interaction patterns resemble the dynamics of human groups solving a problem. The core question is how LLM-based multi-agent (LLM-MA) systems stay coordinated with their operating environment and achieve the collective goals they were designed for.

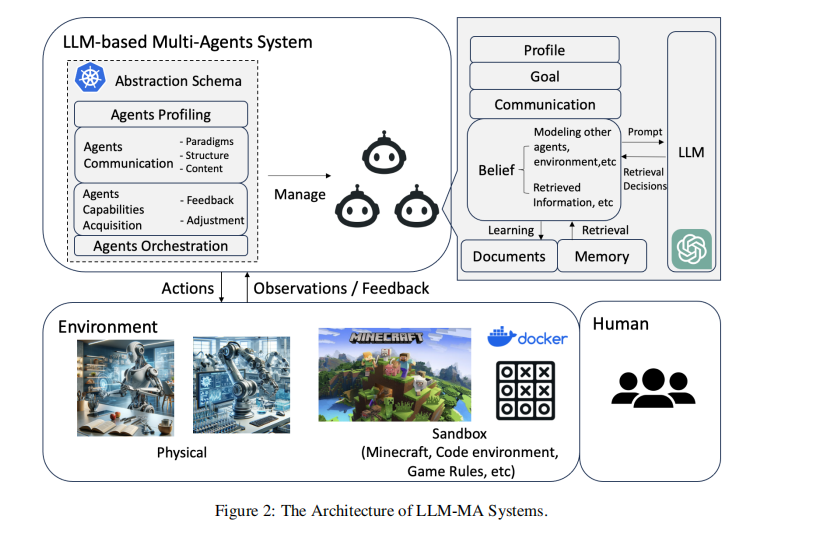

LLM-MA systems can be characterized along four key aspects: the agent–environment interface, agent configuration, agent communication, and agent capability acquisition.

(1) Agent–Environment Interface

LLM-based agents perceive and act in an environment, and the environment in turn influences their behavior and decisions. The agent–environment interface is the way agents perceive and interact with that environment. Through it, agents understand their surroundings, make decisions, and learn from the outcomes of their actions.
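A perceive-act loop makes this interface concrete. The sketch below is a toy under stated assumptions: the `Environment` interface, `CounterEnv`, `policy`, and `runEpisode` are all hypothetical, and `policy` is a fixed rule standing in for an LLM's decision.

```typescript
type Move = "inc" | "dec";

// Hypothetical interface: the environment exposes observations and accepts actions.
interface Environment<Obs, Act> {
  observe(): Obs;
  act(action: Act): number; // returns feedback for the action
}

// Toy environment: move a counter toward a target value.
class CounterEnv implements Environment<number, Move> {
  private value: number;
  private readonly target: number;

  constructor(start: number, target: number) {
    this.value = start;
    this.target = target;
  }

  // Observation: signed distance from the current value to the target.
  observe(): number {
    return this.target - this.value;
  }

  // Feedback: +1 if the action moved closer to the target, -1 if it moved away.
  act(action: Move): number {
    const before = Math.abs(this.target - this.value);
    this.value += action === "inc" ? 1 : -1;
    return before - Math.abs(this.target - this.value);
  }
}

// Policy stub standing in for an LLM: decide from the observation alone.
function policy(distance: number): Move {
  return distance > 0 ? "inc" : "dec";
}

function runEpisode(env: Environment<number, Move>, maxSteps: number): number {
  let totalFeedback = 0;
  for (let i = 0; i < maxSteps && env.observe() !== 0; i++) {
    totalFeedback += env.act(policy(env.observe()));
  }
  return totalFeedback;
}
```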

(2) Agent Configuration

In LLM-MA systems, agents are defined by their characteristics, actions, and skills, all tailored to meet specific goals. In different systems, agents take on different roles, each with a comprehensive description, including features, capabilities, behaviors, and constraints. In predefined cases, agent configuration is explicitly defined by the system designer.
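A predefined configuration of this kind is often just a structured record that gets rendered into a system prompt. The following is a minimal sketch; `AgentProfile`, its field names, `toSystemPrompt`, and the `reviewer` example are assumptions made for illustration.

```typescript
interface AgentProfile {
  agentName: string;
  role: string;
  capabilities: string[];
  constraints: string[];
}

// Render the designer-supplied profile into the text an LLM would receive.
function toSystemPrompt(profile: AgentProfile): string {
  return [
    `You are ${profile.agentName}, acting as ${profile.role}.`,
    `Capabilities: ${profile.capabilities.join("; ")}.`,
    `Constraints: ${profile.constraints.join("; ")}.`,
  ].join("\n");
}

const reviewer: AgentProfile = {
  agentName: "Rae",
  role: "a code reviewer",
  capabilities: ["read diffs", "suggest fixes"],
  constraints: ["do not modify files", "cite the line being discussed"],
};
```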

(3) Agent Communication

Communication between agents is the key infrastructure that supports collective intelligence in LLM-MA systems. We analyze it from three perspectives: 1) communication paradigm: the style and method of interaction between agents; 2) communication structure: the organization and architecture of the communication network within the multi-agent system; and 3) communication content: the information exchanged between agents.

Communication Paradigm: Current LLM-MA systems mainly adopt three communication paradigms: cooperation, debate, and competition. Cooperative agents work together to achieve shared goals, often exchanging information to enhance collective solutions. The debate paradigm is used when agents engage in argumentative interactions, presenting and defending their viewpoints or solutions while critiquing others. This paradigm is suitable for reaching consensus or refining solutions. Competitive agents strive to achieve their own goals, which may conflict with those of other agents.

Communication Structure: The figure illustrates four typical communication structures in LLM-MA systems.

- Hierarchical: Communication is structured in layers, with agents at each level having different roles and interacting primarily within their own level or with adjacent levels. Liu et al. (2023) introduced the Dynamic LLM-Agent Network (DyLAN), a framework that organizes agents in a multi-layer feedforward network. This setup supports dynamic interactions and includes agent selection during reasoning and early stopping, which together improve cooperation efficiency among agents.

- Decentralized: Communication runs over a peer-to-peer network in which agents talk to each other directly. This structure is common in world simulation applications.

- Centralized: A central agent or a group of central agents coordinates the system's communication, and the other agents interact primarily through this central node.

- Shared message pool: Proposed by MetaGPT (Hong et al., 2023). The system maintains a shared pool where agents post messages and subscribe to the messages relevant to them based on their configurations, which improves communication efficiency.
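The shared message pool is essentially publish/subscribe. Below is a minimal sketch of the idea; it is not MetaGPT's actual API, and `PoolMessage`, `MessagePool`, and their methods are hypothetical names.

```typescript
interface PoolMessage {
  topic: string;
  sender: string;
  content: string;
}

class MessagePool {
  private readonly messages: PoolMessage[] = [];
  private readonly subscriptions: { [agent: string]: string[] | undefined } = {};

  // An agent declares which topics it cares about (part of its configuration).
  subscribe(agent: string, topics: string[]): void {
    this.subscriptions[agent] = topics;
  }

  // Any agent can post to the pool; nothing is addressed to a specific receiver.
  publish(message: PoolMessage): void {
    this.messages.push(message);
  }

  // Messages on the agent's subscribed topics, excluding its own posts.
  inbox(agent: string): PoolMessage[] {
    const topics = this.subscriptions[agent] || [];
    return this.messages.filter(
      (m) => m.sender !== agent && topics.indexOf(m.topic) !== -1,
    );
  }
}
```

Compared with peer-to-peer messaging, senders do not need to know who will consume a message, and each agent's context contains only the topics it subscribed to.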

Communication Content: In LLM-MA systems, communication content typically exists in text form. The specific content varies widely depending on the particular application. For example, in software development, agents may communicate about code snippets.

(4) Agent Capability Acquisition

There are two basic concepts: what types of feedback agents should learn from to enhance their capabilities, and how agents adjust their strategies to effectively solve complex problems.

Feedback: Feedback is key information received by agents about the outcomes of their actions, helping them understand the potential impacts of their actions and adapt to complex and dynamic problems. In most studies, the format of feedback provided to agents is text. Depending on the source from which agents receive such feedback, it can be classified into four types: feedback from the environment, feedback from agent interactions, feedback from humans, and no feedback.

Adjustment of agents to complex problems: To enhance their capabilities, agents in LLM-MA systems can adjust through three main solutions.

1) Memory. Most LLM-MA systems utilize memory modules to adjust agent behavior. Agents store information from previous interactions and feedback in their memory. When executing actions, they can retrieve relevant and valuable memories, especially those containing successful actions from past similar goals, as emphasized by Wang et al. (2023b). This process helps improve their current actions.

2) Self-evolution. Unlike memory-based solutions, which rely only on historical records to choose the next action, self-evolving agents modify themselves (e.g., changing their initial goals and planning strategies) and train themselves on feedback or communication logs.

3) Dynamic generation. In certain scenarios, the system can generate new agents on the fly during its operation. This lets the system scale and adapt, because it can introduce agents designed specifically for current needs and challenges.
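The memory approach in 1) can be sketched as "store outcomes, then recall successful actions for similar goals". This is a simplified illustration: `MemoryRecord`, `similarity`, and `recall` are hypothetical, and word-overlap (Jaccard) similarity is swapped in where a real system would more likely use embeddings.

```typescript
interface MemoryRecord {
  goal: string;
  action: string;
  success: boolean;
}

function uniqueWords(text: string): string[] {
  const words = text.toLowerCase().split(/\W+/).filter((w) => w.length > 0);
  return words.filter((w, i) => words.indexOf(w) === i);
}

// Jaccard similarity over word sets: |A ∩ B| / |A ∪ B|.
function similarity(a: string, b: string): number {
  const wa = uniqueWords(a);
  const wb = uniqueWords(b);
  const shared = wa.filter((w) => wb.indexOf(w) !== -1).length;
  const union = wa.length + wb.length - shared;
  return union === 0 ? 0 : shared / union;
}

// Return up to k successful past records, most similar goal first.
function recall(memory: MemoryRecord[], goal: string, k: number): MemoryRecord[] {
  return memory
    .filter((m) => m.success)
    .map((m) => ({ record: m, score: similarity(m.goal, goal) }))
    .filter((scored) => scored.score > 0)
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((scored) => scored.record);
}
```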

4.Agent to Agent Protocol

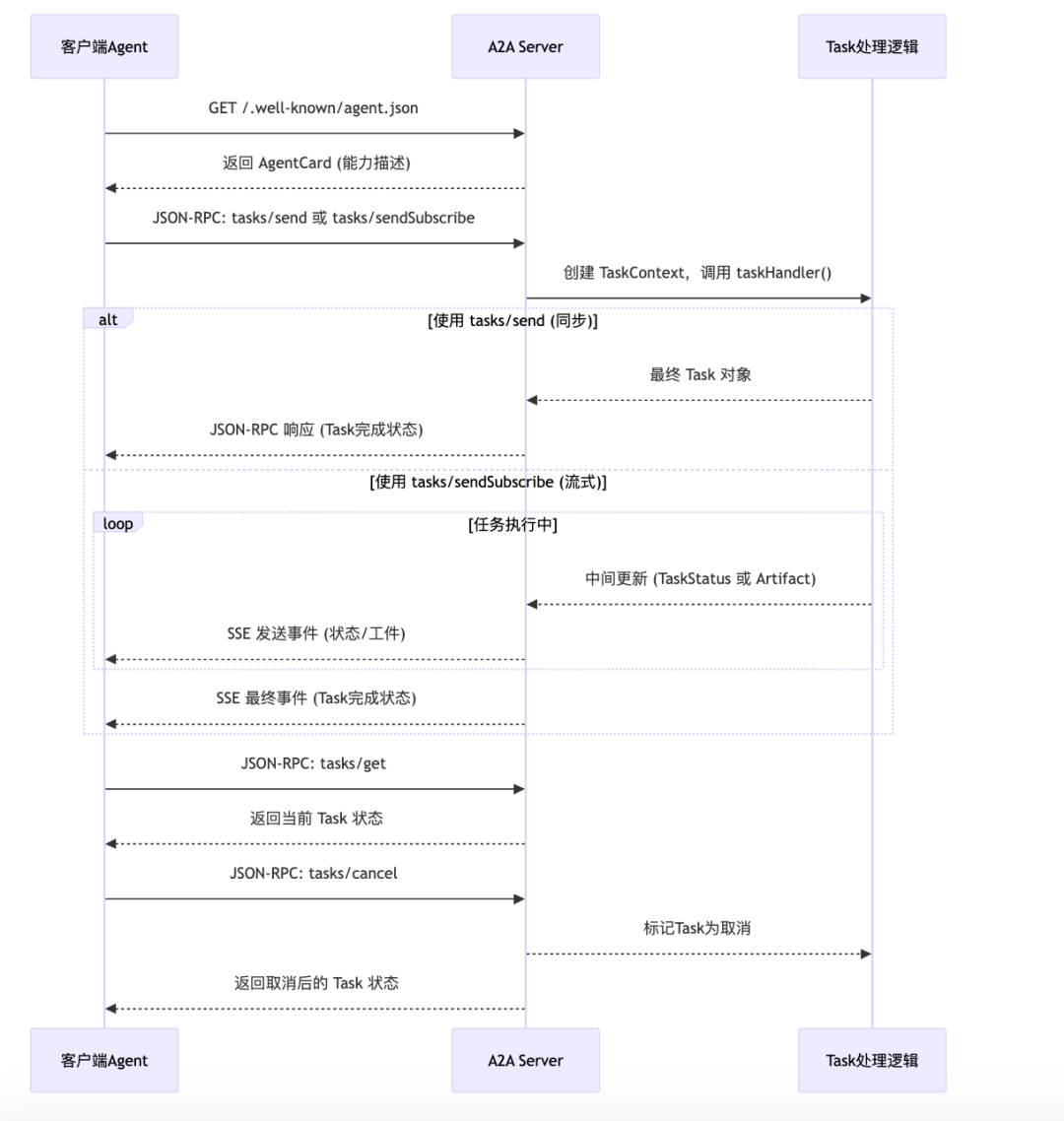

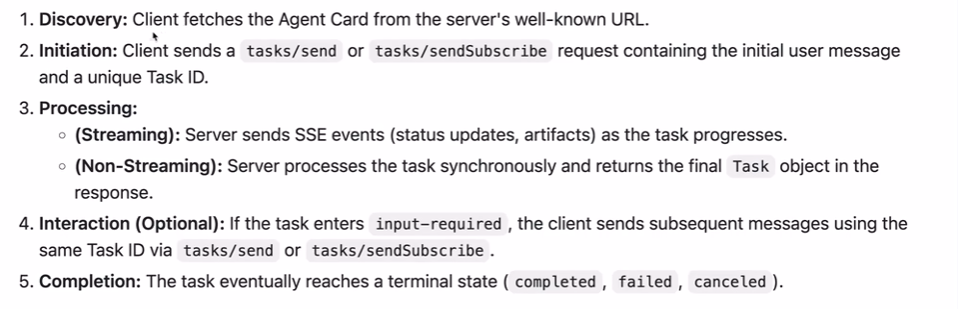

Definition: The A2A protocol (Agent-to-Agent Protocol) is a set of open standards that defines a consistent communication interface so that agents can interoperate.

A2A protocol mainly specifies:

Interface Specification: how client agents call server agents (e.g., /tasks/send, /tasks/sendSubscribe, /tasks/get, /tasks/cancel)

Data Exchange Format (based on JSON-RPC 2.0 plus standardized Task/Message/Artifact structures)

Interaction Mode (supports synchronous calls and asynchronous streaming via SSE push)

Discovery Mechanism: a client that connects to multiple server agents learns what each one can do by fetching its Agent capability description (the AgentCard) from the standard path /.well-known/agent.json.

```typescript
/**
 * An AgentCard conveys key information:
 * - Overall details (version, name, description, uses)
 * - Skills: A set of capabilities the agent can perform
 * - Default modalities/content types supported by the agent.
 * - Authentication requirements
 */
export interface AgentCard {
  /**
   * Human readable name of the agent.
   * @example "Recipe Agent"
   */
  name: string;
  /**
   * A human-readable description of the agent. Used to assist users and
   * other agents in understanding what the agent can do.
   * @example "Agent that helps users with recipes and cooking."
   */
  description: string;
  /** A URL to the address the agent is hosted at. */
  url: string;
  /** A URL to an icon for the agent. */
  iconUrl?: string;
  /** The service provider of the agent */
  provider?: AgentProvider;
  /**
   * The version of the agent - format is up to the provider.
   * @example "1.0.0"
   */
  version: string;
  /** A URL to documentation for the agent. */
  documentationUrl?: string;
  /** Optional capabilities supported by the agent. */
  capabilities: AgentCapabilities;
  /** Security scheme details used for authenticating with this agent. */
  securitySchemes?: { [scheme: string]: SecurityScheme };
  /** Security requirements for contacting the agent. */
  security?: { [scheme: string]: string[] }[];
  /**
   * The set of interaction modes that the agent supports across all skills. This can be overridden per-skill.
   * Supported media types for input.
   */
  defaultInputModes: string[];
  /** Supported media types for output. */
  defaultOutputModes: string[];
  /** Skills are a unit of capability that an agent can perform. */
  skills: AgentSkill[];
  /**
   * true if the agent supports providing an extended agent card when the user is authenticated.
   * Defaults to false if not specified.
   */
  supportsAuthenticatedExtendedCard?: boolean;
}
```

Authentication and Error Handling (interfaces may require authentication; errors are uniformly returned in JSON-RPC error format)
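The client side of these pieces can be sketched as follows. The envelope fields (`jsonrpc`, `id`, `method`, `params`, `result`, `error`) follow the JSON-RPC 2.0 specification, and the `tasks/send` method name and the well-known path come from the list above. Everything else is an assumption: `agentCardUrl`, `buildSendTask`, `unwrap`, and especially the `SendTaskParams` layout are hypothetical and may not match the A2A version you target, so check the specification before relying on them.

```typescript
// JSON-RPC 2.0 envelopes.
interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params: P;
}

interface JsonRpcError {
  code: number;
  message: string;
  data?: unknown;
}

interface JsonRpcResponse<R> {
  jsonrpc: "2.0";
  id: number | string | null;
  result?: R;
  error?: JsonRpcError;
}

// Discovery: the agent card is served from a well-known path under the agent's base URL.
function agentCardUrl(baseUrl: string): string {
  return baseUrl.replace(/\/+$/, "") + "/.well-known/agent.json";
}

// ASSUMED params layout for tasks/send (illustrative only).
interface SendTaskParams {
  id: string;
  message: { role: "user" | "agent"; parts: { type: "text"; text: string }[] };
}

function buildSendTask(requestId: number, taskId: string, text: string): JsonRpcRequest<SendTaskParams> {
  return {
    jsonrpc: "2.0",
    id: requestId,
    method: "tasks/send",
    params: { id: taskId, message: { role: "user", parts: [{ type: "text", text }] } },
  };
}

// Error handling: failures arrive as a JSON-RPC error object instead of a result.
function unwrap<R>(response: JsonRpcResponse<R>): R {
  if (response.error) {
    throw new Error(`A2A error ${response.error.code}: ${response.error.message}`);
  }
  if (response.result === undefined) {
    throw new Error("response has neither result nor error");
  }
  return response.result;
}
```

A client would fetch `agentCardUrl(...)` to read the server agent's skills, then POST the serialized request from `buildSendTask` to the `url` given in the card.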


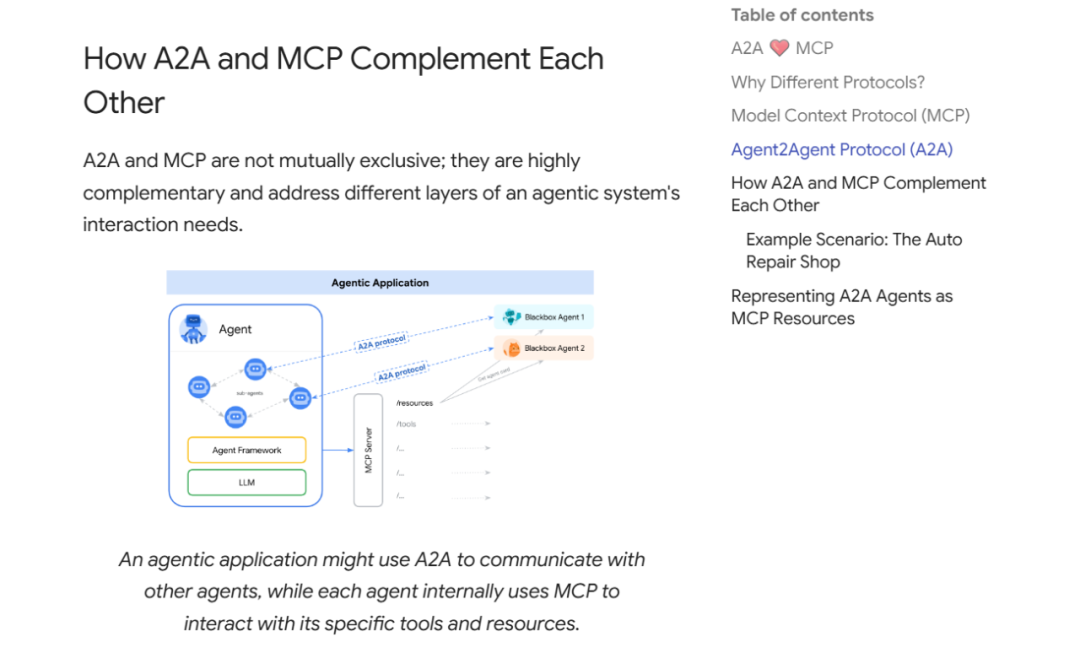

A2A vs MCP

A2A is a protocol for communication between agents; MCP is a protocol for connecting an agent to tools.

The two are not mutually exclusive and can be used together.

5.Financial Industry Case of Multi-Agent

Source: TradingAgents: Multi-Agents LLM Financial Trading Framework (AAAI 2025)

TradingAgents is a stock trading framework that mimics the collaborative processes within real trading firms. It comprises role-specific agents powered by large language models (LLMs), including fundamental analysts, sentiment analysts, technical analysts, and traders with different risk tolerances.

Seven specialized agent roles are defined: fundamental analyst, sentiment analyst, news analyst, technical analyst, researcher, trader, and risk manager. Each agent has a unique name, a specific role description, work objectives, and operational constraints, and is equipped with corresponding tools and techniques. For example, the sentiment analyst uses web searches and sentiment analysis algorithms to assess market sentiment, while the technical analyst focuses on coding and analyzing trading patterns. The analysis team, for instance, consists of specialized agents that collect and analyze market data to support trading decisions. This division of labor helps improve the overall efficiency and accuracy of the system.

Evaluation Metrics:

Cumulative Return (CR), Annual Return (AR), Sharpe Ratio (SR), Maximum Drawdown (MDD).
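For reference, the four metrics can be computed from a series of portfolio values as sketched below. These are the common textbook definitions and may differ in detail from the paper's exact formulas. The function names, the 252-trading-day year, the zero default risk-free rate, and the annualization of the Sharpe ratio by the square root of the periods per year are assumptions.

```typescript
// values[0] is the starting capital; each later entry is the value after one period.
function checkSeries(values: number[]): void {
  if (values.length < 2) throw new Error("need at least two portfolio values");
}

// CR: total growth over the whole window.
function cumulativeReturn(values: number[]): number {
  checkSeries(values);
  return values[values.length - 1] / values[0] - 1;
}

// AR: cumulative return compounded to a one-year horizon.
function annualReturn(values: number[], periodsPerYear = 252): number {
  const periods = values.length - 1;
  return Math.pow(1 + cumulativeReturn(values), periodsPerYear / periods) - 1;
}

function periodReturns(values: number[]): number[] {
  checkSeries(values);
  return values.slice(1).map((v, i) => v / values[i] - 1);
}

// SR: mean excess return divided by the sample standard deviation, annualized.
function sharpeRatio(values: number[], riskFreePerPeriod = 0, periodsPerYear = 252): number {
  const returns = periodReturns(values);
  if (returns.length < 2) throw new Error("need at least two returns");
  const mean = returns.reduce((sum, r) => sum + r, 0) / returns.length;
  const variance =
    returns.reduce((sum, r) => sum + (r - mean) * (r - mean), 0) / (returns.length - 1);
  return ((mean - riskFreePerPeriod) / Math.sqrt(variance)) * Math.sqrt(periodsPerYear);
}

// MDD: largest peak-to-trough decline, as a fraction of the peak.
function maxDrawdown(values: number[]): number {
  checkSeries(values);
  let peak = values[0];
  let worst = 0;
  for (const v of values) {
    peak = Math.max(peak, v);
    worst = Math.max(worst, (peak - v) / peak);
  }
  return worst;
}
```

For the series `[100, 110, 99, 121]`, the cumulative return is 21% and the maximum drawdown is 10% (the fall from 110 to 99).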

Results:

TradingAgents significantly outperformed existing rule-based trading benchmarks in profitability, achieving at least 23.21% cumulative return and 24.90% annual return, exceeding the best benchmark by at least 6.1 percentage points.