Comprehensive report from Electronic Enthusiasts Network: An Edge AI box is a hardware device that integrates high-performance chips, AI algorithms, and data processing capabilities. It is deployed at the edge, close to data sources such as factories, shopping malls, and traffic intersections. Because it collects, preprocesses, and analyzes data and makes decisions locally, without uploading all data to the cloud, it provides efficient and intelligent solutions for various industries. It is a key edge device required for Edge AI.

The Role of Edge AI Boxes in Edge AI Systems

Data Processing Center: The Edge AI box can connect to various sensors and devices to collect data in real time, performing local data cleaning, feature extraction, and other preprocessing operations before feeding the processed data into AI models for inference and analysis. For example, in smart factories, the Edge AI box can receive data such as temperature, pressure, and vibration from sensors on the production line, preprocess this data, and use built-in AI models for fault prediction and quality inspection.

Intelligent Decision Engine: Based on the inference results from AI models, the Edge AI box can make decisions and exercise control in real time. It can control devices or issue alerts based on preset rules or strategies. For instance, in smart security systems, when the Edge AI box detects abnormal behavior through video analysis, it can immediately trigger an alarm and notify relevant personnel.

Communication Hub: Edge AI boxes typically have multiple communication interfaces, such as Ethernet, Wi-Fi, and 4G/5G, enabling communication with the cloud, other edge devices, and terminal devices. They can upload processing results to the cloud for further analysis and storage, receive commands and model updates from the cloud, and collaborate with other edge devices.

Edge AI boxes collaborate with other edge devices (such as smart cameras, industrial sensors, and smart appliances) to form an Edge AI system. Smart cameras are responsible for collecting image data, industrial sensors collect various physical parameters, and the Edge AI box processes and analyzes this data to achieve intelligent functions. For example, in smart home systems, video data collected by smart cameras and environmental data (such as temperature, humidity, and light) collected by various sensors are transmitted to the Edge AI box, which analyzes this data to achieve intelligent control of home devices.

Data generated by other edge devices is aggregated into the Edge AI box, which performs centralized processing and analysis. As the core node for data processing, the Edge AI box can improve the overall efficiency and response speed of the system. For instance, in smart traffic systems, traffic data collected by sensors distributed along the road (such as geomagnetic sensors and cameras) is transmitted to nearby Edge AI boxes, which process and analyze this data in real time to provide decision support for traffic management and control.
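The data-processing and decision roles described above can be sketched as a small pipeline. This is only an illustration under stated assumptions: the function names, the baseline values, and the alert threshold are hypothetical, and a simple z-score check stands in for a real AI model.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Decision:
    alert: bool      # whether the box should raise a local alarm
    score: float     # anomaly score from the stand-in model
    summary: dict    # compact result that could be uploaded instead of raw data


def preprocess(readings):
    """Data cleaning: drop missing samples (represented here as None)."""
    return [r for r in readings if r is not None]


def extract_features(samples):
    """Feature extraction: reduce a window of samples to a few statistics."""
    return {"mean": mean(samples), "std": pstdev(samples), "peak": max(samples)}


def infer(features, baseline_mean, baseline_std):
    """Stand-in for model inference: z-score of the window peak (assumption)."""
    return abs(features["peak"] - baseline_mean) / baseline_std


def process_window(readings, baseline_mean=50.0, baseline_std=2.0, threshold=3.0):
    """Run one window of sensor data through clean -> features -> infer -> decide."""
    samples = preprocess(readings)
    if not samples:
        raise ValueError("no valid samples in this window")
    features = extract_features(samples)
    score = infer(features, baseline_mean, baseline_std)
    alert = score > threshold  # preset rule: alert when the score exceeds the threshold
    summary = {"count": len(samples), "mean": round(features["mean"], 2), "alert": alert}
    return Decision(alert=alert, score=score, summary=summary)


# Hypothetical temperature windows: one normal, one with a spike.
normal = process_window([50.1, None, 49.8, 50.3])
faulty = process_window([50.0, 51.0, 62.0])
print(normal.alert, faulty.alert)
```

Only the small `summary` dictionary would need to leave the device in this sketch, which mirrors the point that the box uploads processing results rather than all raw data.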

Main Edge AI Box Chips and Their Characteristics

Edge AI boxes use several types of AI chip, including ASICs, FPGAs, and low-power GPUs, all of which must combine high performance, low power consumption, and real-time processing capabilities. Major manufacturers include NVIDIA (Jetson series), Huawei (Ascend series), Cambricon, Rockchip, Sophgo, Intel, and Qualcomm. Each chip strikes a different balance among performance, power consumption, and target application scenarios.

NVIDIA's Jetson series, based on NVIDIA's GPU architecture, has powerful parallel computing capabilities and can efficiently process complex AI algorithms and models. It is also equipped with dedicated AI acceleration cores, further enhancing AI inference performance. It is widely used in smart robotics, drones, autonomous driving, and industrial automation. For example, in smart robotics, Jetson series chips can achieve real-time object detection, path planning, and voice interaction; in autonomous driving, they can be used for vehicle perception, decision-making, and control.

Huawei's Ascend series, built on Huawei's self-developed Da Vinci architecture, features high computing power and low power consumption. It supports computation at multiple precisions, meeting AI computing needs in different scenarios. Additionally, Huawei provides a comprehensive software ecosystem and development tools, facilitating application development. The series is widely applied in smart cities, intelligent transportation, and financial security. For instance, in smart city construction, it can be used for video surveillance, smart security, and traffic flow analysis; in financial security, it can enable facial recognition and behavior analysis.

Rockchip's RK series integrates a high-performance CPU, GPU, and NPU, offering high integration and cost-effectiveness. It supports various operating systems and development frameworks, making development convenient and enabling rapid product deployment. It is commonly used in smart retail, smart homes, and smart education. For example, in smart retail, it can be used for product recognition and customer flow statistics; in smart homes, it can enable voice interaction and image recognition functions for smart speakers and cameras.

Cambricon's MLU series focuses on AI computing and processes AI algorithms efficiently. It adopts advanced architectures and process nodes and excels in energy efficiency. Additionally, Cambricon provides a rich software stack and toolchain, supporting mainstream deep learning frameworks. It is suitable for data centers, cloud computing, and smart security scenarios. In data centers, it can serve as an AI acceleration card to enhance the AI computing capabilities of servers; in smart security, it can be used for large-scale video analysis and processing.

Sophgo's BM series features high performance and low power consumption, meeting the real-time and low-power requirements of edge devices. It supports various AI models and algorithms, demonstrating strong versatility. It has applications in smart communities, intelligent energy, and industrial quality inspection. For example, in smart communities, it can be used for personnel and vehicle recognition and access management; in industrial quality inspection, it can enable product defect detection and classification.

Technical Challenges and Solutions for Edge AI Boxes

As key devices that bring AI computing capabilities to the edge, Edge AI computing boxes face numerous technical challenges in practical applications.

The first challenge is limited hardware resources. Edge devices are typically small and low-power, with limited CPU, memory, and storage, making it difficult to run complex AI models. Large deep learning models (such as ResNet and BERT) require substantial computing resources, and deploying them directly on edge devices can lead to performance degradation or failure to run.

This can be addressed through model compression and optimization, dedicated hardware acceleration, and lightweight model design. Model compression and optimization: using techniques such as quantization, pruning, and knowledge distillation to reduce model size. Dedicated hardware acceleration: using AI chips (such as ASICs and FPGAs) to enhance computing efficiency. Lightweight model design: developing lightweight models suitable for edge scenarios (such as MobileNet and ShuffleNet).

The second challenge is energy consumption and heat dissipation. Edge devices often rely on battery power or limited power sources, making power consumption and heat dissipation critical issues. AI computing requires significant energy, which may shorten device battery life or necessitate frequent recharging. High power consumption can also lead to device overheating, affecting stability and lifespan.

Therefore, Edge AI boxes require low-power design, dynamic power management, and heat dissipation optimization. Low-power design: optimizing hardware architecture (such as heterogeneous computing) and reducing operating voltage and frequency. Dynamic power management: adjusting power consumption modes dynamically based on task load. Heat dissipation optimization: using efficient heat dissipation materials or structural designs.

The third challenge is balancing model accuracy and performance, which is often difficult on resource-constrained edge devices. This manifests as either a decrease in accuracy or insufficient performance. Accuracy decline: model compression or simplification may lead to reduced recognition accuracy. Insufficient performance: even with model simplification, hardware limitations may still prevent meeting real-time requirements.

Thus, mixed precision computing, edge-cloud collaboration, and adaptive inference can be employed. Mixed precision computing: using lower precision (such as INT8) for some calculations to enhance speed while preserving accuracy. Edge-cloud collaboration: offloading complex computing tasks to the cloud, with edge devices only responsible for preliminary processing. Adaptive inference: dynamically adjusting model accuracy or computing resources based on task requirements.

The fourth challenge is the adaptation of heterogeneous hardware and software. Edge devices come in various hardware types (such as ARM, x86, and AI chips), and software must adapt to different platforms. High development complexity: custom code must be written for different hardware, increasing development costs. Performance differences: the same model may perform significantly differently on different hardware.

A unified development framework can be established, using frameworks that support multiple hardware platforms (such as TensorFlow Lite and ONNX Runtime). Middleware can mask hardware differences, simplifying the development process. Tools can be used to automatically adjust models to fit different hardware.
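The quantization mentioned above under model compression and mixed precision computing can be illustrated with a minimal sketch. It assumes symmetric per-tensor INT8 quantization, which is one common scheme among several; the weight values and function names are hypothetical, and real toolchains (including those of the frameworks named above) apply more elaborate calibration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]


# Hypothetical layer weights, for illustration only.
weights = [0.52, -1.27, 0.003, 0.9, -0.41]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w, ) if False else abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight stored as an 8-bit integer takes one byte instead of the four bytes of a 32-bit float, which is where the size reduction comes from. The cost is the rounding error measured by `max_error`, which is the accuracy-versus-performance trade-off described under the third challenge.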

In Conclusion

Edge AI computing boxes face multiple challenges in hardware resources, energy consumption, security, adaptability, network environment, and cost. Addressing these challenges requires optimization from multiple dimensions, including hardware optimization, software adaptation, security mechanisms, and cloud-edge collaboration, to promote technological advancement and the improvement of the industrial ecosystem. In the future, as technology matures, Edge AI computing boxes will play a key role in more scenarios.

Disclaimer: This article is a comprehensive report from Electronic Enthusiasts. Please cite the source above when reprinting.

More Hot Articles to Read

-

Domestic DRAM leader initiates IPO! Valuation nearly 140 billion

-

Transform in 1 second! Zhi Hui Jun launches “Nezha” wheeled robot, humanoid robot industry chain poised for takeoff

-

Domestic GPU makes another breakthrough, collectively breaking through NVIDIA + AMD

-

Market value nearly 4 trillion! NVIDIA’s GB300 server officially shipped

-

Suspension of work and production! Former charging treasure champion “Roma Shi” faces bankruptcy rumors? Official response

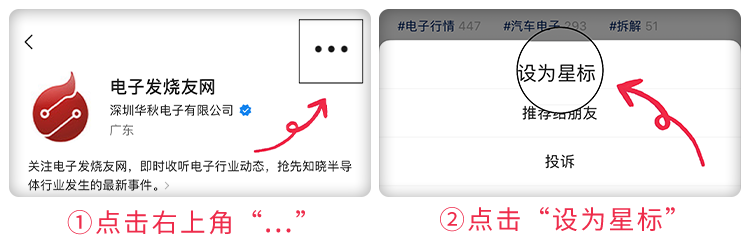

Click to Follow and Star Us

Set us as a star to not miss any updates!