Edge AI works by deploying artificial intelligence algorithms and models on edge devices close to the data source, so that these devices can process data, analyze it, and make decisions locally without transmitting it to remote cloud servers. The goal is to bring AI capabilities down to the edge devices themselves.

Principles and Core Aspects of Edge AI Implementation

Edge AI adopts a distributed computing architecture, moving computing tasks from centralized cloud servers out to edge devices. Edge devices include smartphones, smart cameras, industrial sensors, and smart home devices. They have enough computing capability to process collected data locally, which reduces reliance on cloud computing resources.

Edge devices complete data collection, preprocessing, analysis, and decision-making locally. Because data is processed near its source, the network latency and bandwidth pressure of transmitting large amounts of data to the cloud are avoided. For example, in smart security monitoring, cameras can analyze video locally to identify abnormal behavior and issue an alert as soon as a suspicious situation is detected, without uploading all video data to the cloud.

Model Lightweighting: Because edge devices have limited computing resources, storage capacity, and power budgets, large and complex AI models cannot run efficiently on them. Models are therefore made lightweight through techniques such as model compression, pruning, and quantization. Model compression reduces the number of parameters in the model, lowering storage space and computational complexity; model pruning removes unimportant neurons or connections to improve operational efficiency; model quantization converts floating-point parameters to low-precision fixed-point or integer values, reducing computational load and memory usage.

Deployment on Edge Devices: The lightweight AI models are then deployed on edge devices. This requires consideration of the hardware architecture, operating system, and development environment of each device. Different edge devices may use different processor architectures (such as ARM or x86), so the model must be optimized and adapted for each architecture to run efficiently. In addition, applications or software frameworks need to be developed to invoke and manage the AI models on the device.

Data Collection and Preprocessing: Edge devices collect data through various sensors (such as cameras, microphones, temperature sensors, and accelerometers). The raw data often contains noise, redundancy, and inconsistencies, so it must be preprocessed. Preprocessing includes data cleaning, feature extraction, and data normalization. Data cleaning removes noisy data and outliers; feature extraction derives the features the AI model needs from the raw data, reducing data dimensionality; data normalization maps data to a specific range, which improves the model's convergence speed and accuracy.

Real-time Inference and Decision-making: The preprocessed data is fed into the AI model deployed on the edge device for real-time inference. The model analyzes the input and outputs a result, and the edge device makes a decision based on that result and executes the corresponding action. For example, in autonomous vehicles, edge devices (such as onboard computing platforms) process data from cameras, radars, and LiDAR sensors in real time, using AI models for environmental perception, target detection, and path planning, and then control the vehicle’s speed, direction, and braking based on the inference results.

Cloud Collaboration and Updates: Although Edge AI emphasizes local processing, edge devices still need to collaborate with the cloud in some cases. For instance, when edge devices encounter complex problems or need to process large amounts of data, they can upload some of that data to the cloud for further analysis. In the other direction, the cloud can push updated AI models, algorithms, and knowledge bases to edge devices, enabling remote updates and optimization that improve the performance and adaptability of the Edge AI system.
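
The quantization step described under Model Lightweighting can be illustrated with a small sketch. It assumes a simple affine (scale plus zero-point) mapping from floating-point values to 8-bit integers; the function names and the sample weights are hypothetical, and production toolchains apply more elaborate schemes (per-channel scales, calibration data) than shown here.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers with an affine (scale + zero-point) scheme."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    # The represented range must include 0 so that zero maps to an exact integer.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float values from the stored integers."""
    return [(x - zero_point) * scale for x in q]


# Hypothetical weights, chosen only for illustration.
weights = [-0.82, -0.1, 0.0, 0.35, 1.27]
q, scale, zp = quantize(weights)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is now stored in one byte instead of four, at the cost of a rounding error bounded by roughly half the scale step. Whether that error is acceptable depends on the model and task, which is why quantized models are normally re-evaluated before deployment.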

Edge AI Hardware Devices

The implementation of Edge AI relies on various edge devices, each with different characteristics and functions to suit diverse application scenarios. Common types of edge devices used for Edge AI include:

Smart terminal devices, such as smartphones, smart cameras, and smart wearable devices.

Smartphones have powerful computing capabilities, a variety of sensors (such as cameras, microphones, accelerometers, and gyroscopes), and good communication capabilities (supporting Wi-Fi, 4G/5G, etc.). In Edge AI applications they can be used for image recognition (such as photo translation and QR code recognition), voice interaction (such as smart voice assistants), and real-time health monitoring (combined with sensor data). For example, when a user takes a photo to identify tourist information while traveling, they are using the Edge AI capabilities of their smartphone.

Smart cameras are equipped with image sensors and a certain amount of computing capability, allowing them to collect image data in real time and process it locally. They are widely used in security monitoring, smart traffic, and other fields. In security monitoring, smart cameras can perform facial recognition and behavior analysis (such as detecting abnormal running or loitering) through Edge AI and issue alerts in a timely manner; in smart traffic, they can be used for vehicle recognition and traffic flow statistics.

Smart wearable devices, such as smartwatches and smart bands, are compact and portable, and are typically equipped with various sensors (such as heart rate sensors and sleep monitoring sensors) and low-power processors. They are mainly used for health monitoring and activity tracking. For example, smartwatches can monitor users’ heart rates, blood pressure, and sleep quality in real time, and perform preliminary analysis using Edge AI algorithms to provide health recommendations.

Industrial devices, such as industrial sensors, industrial gateways, and industrial robot controllers.

Industrial sensors collect parameters such as temperature, pressure, flow, and vibration in real time during industrial production. Some industrial sensors have a certain amount of data processing capability. In industrial automation, industrial sensors can analyze collected data through Edge AI to achieve real-time monitoring of equipment status and fault warning. For example, by analyzing vibration data from equipment, potential faults can be detected in advance, preventing production losses caused by equipment downtime.

Industrial gateways act as a bridge between industrial site devices and the cloud. They have computing and communication capabilities, can connect devices that use different industrial protocols, and can preprocess collected data and perform edge computing on it. They can integrate and analyze data collected from different industrial devices, enabling remote monitoring and management of equipment. For example, in a factory, industrial gateways can collect device data from various production lines and analyze it using Edge AI algorithms to optimize production processes and improve efficiency.

Industrial robot controllers are responsible for controlling the movement and operation of industrial robots, which requires high computing performance and real-time capabilities. By integrating Edge AI technology, industrial robot controllers can give robots smarter perception and decision-making capabilities. For example, on an assembly line, robots can use Edge AI to identify the shape and position of parts, automatically adjust assembly actions, and improve accuracy and efficiency.

Smart home devices, such as smart speakers and smart appliances.

Smart speakers integrate microphone arrays, speakers, and voice recognition chips to provide voice interaction, and can recognize and process voice commands through Edge AI. Users can control smart home devices with voice commands, such as turning on lights or adjusting the air conditioning temperature. Smart speakers can also provide music playback, news, and other services.

Smart appliances, such as smart refrigerators, smart air conditioners, and smart washing machines, are equipped with sensors and microprocessors and can monitor device operating status and environmental information in real time. Through Edge AI technology, smart appliances can achieve intelligent control and optimized operation. For example, smart refrigerators can suggest shopping lists based on the state of the food stored inside; smart air conditioners can automatically adjust operating modes and temperatures based on indoor and outdoor temperatures and human activity.

Smart traffic devices, such as onboard computing platforms and smart roadside devices.

Onboard computing platforms have high-performance computing capabilities and low-latency communication capabilities, meeting the needs of complex applications such as autonomous driving. In autonomous vehicles, onboard computing platforms use Edge AI to process data from cameras, radars, and LiDAR sensors in real time, achieving environmental perception, target detection, path planning, and decision control to ensure safe driving.

Smart roadside devices, such as smart traffic lights and roadside units (RSUs), are installed along roads. They can collect traffic flow, vehicle speed, and other information, and communicate with vehicles. Through Edge AI technology, smart roadside devices can achieve real-time monitoring and optimization control of traffic flow. For example, adjusting the duration of traffic lights based on real-time traffic flow can improve road efficiency; at the same time, they can send road condition information to vehicles, guiding them to choose the best driving route.
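
As a rough illustration of the traffic light example above, the sketch below splits a fixed signal cycle across the approaches of a junction in proportion to the number of waiting vehicles. Everything in it is an assumption made for illustration: the function name, the 90-second cycle, the 10-second minimum green time, and the vehicle counts, which in practice would come from a detection model running on the roadside device. Real signal controllers also account for yellow and all-red intervals, pedestrian phases, and coordination between junctions.

```python
def allocate_green_times(vehicle_counts, cycle_s=90, min_green_s=10):
    """Split a fixed signal cycle across approaches in proportion to queue size."""
    n = len(vehicle_counts)
    spare = cycle_s - n * min_green_s  # time left once every approach has its minimum
    if spare < 0:
        raise ValueError("cycle too short for the minimum green times")
    total = sum(vehicle_counts.values())
    plan = {}
    for approach, count in vehicle_counts.items():
        # Share the spare time by demand; split it equally if the junction is empty.
        share = count / total if total else 1 / n
        plan[approach] = min_green_s + spare * share
    return plan


# Hypothetical per-approach vehicle counts from an on-device detector.
counts = {"north": 12, "south": 8, "east": 30, "west": 10}
plan = allocate_green_times(counts)
```

Because the whole calculation runs on the roadside device, the timing can be refreshed every cycle without a round trip to a central server.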

Setting Up Edge AI Device Environment and Model Deployment

Hardware Device Selection: Choose edge device hardware based on the size and computational requirements of the model. For models with high computational requirements, edge computing boxes equipped with high-performance accelerators (such as GPUs or TPUs) can be selected; for simpler tasks, ordinary embedded processors (such as the ARM Cortex series) may be sufficient.

Operating System and Development Environment Configuration: Install an appropriate operating system on the edge device, such as Linux or Android, and configure the corresponding development environment, including compilers and debugging tools. Also install a framework that supports AI model inference, such as TensorFlow Lite, PyTorch Mobile, or ONNX Runtime, so that trained models can be deployed to the device and run efficiently.

Model Conversion: Convert the trained model into a format supported by the edge device. For example, if a model is trained using TensorFlow, the TensorFlow Lite Converter can be used to convert it to .tflite format for running on the TensorFlow Lite framework.

Model Deployment: Deploy the converted model to the edge device. Model files can be transferred to the device's storage over a wired connection (such as USB or Ethernet) or a wireless one (such as Wi-Fi or Bluetooth).

System Integration: Integrate the deployed model with the other software modules on the edge device to form a complete pipeline of data collection, preprocessing, model inference, and result output. For example, write a data collection program that passes sensor data to the model for inference in real time and controls the corresponding actuators based on the inference results.
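
The System Integration step can be sketched as a small loop that chains collection, preprocessing, inference, and actuation. This is a minimal sketch under stated assumptions: the sensor, the threshold "model", and the fan actuator are all hypothetical stand-ins, and a real deployment would replace `infer` with a call into an inference runtime such as the ones named above.

```python
from statistics import mean


def read_sensor(samples):
    """Stand-in for a sensor driver: yields raw temperature readings in Celsius."""
    yield from samples


def preprocess(window, lo=0.0, hi=100.0):
    """Clip outliers to [lo, hi], average the window, and min-max normalize to [0, 1]."""
    clipped = [min(max(x, lo), hi) for x in window]
    return (mean(clipped) - lo) / (hi - lo)


def infer(feature, threshold=0.7):
    """Placeholder model: a fixed threshold stands in for a deployed AI model."""
    return "overheat" if feature > threshold else "normal"


def actuate(label):
    """Stand-in for an actuator command derived from the inference result."""
    return "fan_on" if label == "overheat" else "fan_off"


def run_pipeline(samples, window_size=3):
    """Collect readings, and run preprocess -> infer -> actuate once per full window."""
    window, actions = [], []
    for reading in read_sensor(samples):
        window.append(reading)
        if len(window) == window_size:
            actions.append(actuate(infer(preprocess(window))))
            window.clear()
    return actions


# Hypothetical readings; 250.0 is a sensor glitch that preprocessing clips to 100.0.
actions = run_pipeline([61.0, 63.5, 62.0, 78.0, 81.5, 250.0])
```

Keeping each stage behind its own function makes it straightforward to swap the placeholder model for a converted model file later without touching the collection or actuation code.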

Disclaimer: This article is reported by Electronic Enthusiasts. Please indicate the source when reprinting.

More Hot Articles to Read

-

Huawei Harmony Foldable PC Global Debut, Major Breakthrough for Domestic Operating Systems!

-

The Robotics Industry Becomes a Capital Darling: Completing 3 Financing Rounds in 5 Months, with a Peak Market Value of 15 Billion Yuan

-

Significant Progress on AEB Mandatory National Standards! Official Implementation in 2028, Industry Chain Celebration

-

AI Toys or AI Tools?

-

Computing Power Soars! China Launches 2800 Computing Power Satellites, Companies Behind the Scenes Revealed

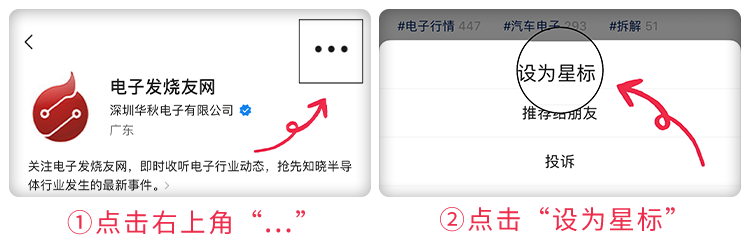

Click to Follow and Star Us

Set us as a star to not miss any updates!