Introduction

Are you still confused about the differences between CPU, GPU, FPGA, ASIC, DPU, and NPU?

In modern computing, there are various specialized processor chips, each with unique characteristics serving different application needs.

With the rise of AI and the semiconductor industry, these chips have gone from niche, high-end topics to household names, yet readers without specialized knowledge often find it hard to grasp how they differ.

This article gives an overview of CPU, GPU, FPGA, ASIC, DPU, and NPU chips by casting each one as a "workplace persona" at a mobile phone company. (There are three surprises at the end.)

Think of these chips as employees in a company, and you can easily understand their roles. For instance, the CEO (CPU) excels at multitasking coordination, while the production line worker (GPU) specializes in physical tasks, and the flexible contractor (FPGA) can switch roles as needed…

Their design structures differ, which directly determines what tasks they can perform, how quickly, and how efficiently.

CPU: Central Processing Unit (CEO)

Let’s start with the well-known CPU.

The CPU (Central Processing Unit) is built around a multi-stage pipeline and a rich, general-purpose instruction set. It excels at quickly processing complex logic and diverse tasks, such as operating system scheduling, application execution, and real-time decision-making.

Thanks to its multi-core design, the CPU can run multiple threads in parallel, but its parallel computing capability is relatively limited, so it is inefficient at massive data-parallel computation. It remains the general-purpose processor at the heart of personal computers, servers, and mobile devices. Intel and AMD are the main x86 CPU vendors, while Arm licenses the core designs used in most mobile chips.

A CPU has relatively few cores (mainstream parts have roughly 4 to 16), but each core is a highly educated elite (a complex computation unit).

In workplace terms, you can think of it as the CEO of the company, who can handle over 100 decisions daily (system scheduling/file editing/web browsing), but each task is considered carefully, and only one document can be signed at a time.
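As a rough illustration of the "CEO" style of work, the sketch below dispatches a few unrelated, branch-heavy tasks to a small pool of workers. The task kinds, the `handle` function, and the cap of 16 workers are hypothetical names and values chosen for this example. Python threads share one interpreter lock, so this shows how varied work is dispatched rather than a real speedup.

```python
import os
from concurrent.futures import ThreadPoolExecutor

def handle(task):
    """Branch-heavy, heterogeneous work: each task kind takes a different code path."""
    kind, payload = task
    if kind == "schedule":
        return sorted(payload)              # e.g. order jobs by priority
    if kind == "edit":
        return payload.strip().title()      # e.g. tidy up a line of text
    if kind == "decide":
        return "approve" if payload >= 0.5 else "reject"
    raise ValueError(f"unknown task kind: {kind}")

tasks = [
    ("schedule", [3, 1, 2]),
    ("edit", "  quarterly report "),
    ("decide", 0.8),
    ("decide", 0.1),
]

# A CPU has only a handful of cores, so the pool is small (hypothetical cap of 16).
workers = min(16, os.cpu_count() or 1)
with ThreadPoolExecutor(max_workers=workers) as pool:
    results = list(pool.map(handle, tasks))

print(results)
```

Each task follows its own logic, which is the kind of work a few sophisticated cores handle well.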

GPU: Graphics Processing Unit (Production Line Worker)

When highly parallel tasks need to be processed, the GPU shows its unique advantages. The GPU (Graphics Processing Unit) uses SIMD (Single Instruction, Multiple Data) / SIMT (Single Instruction, Multiple Threads) architecture, integrating thousands of simplified computation units.

Originally designed solely for graphics rendering, the GPU’s thousands of computing cores can simultaneously execute a large number of similar operations, such as matrix multiplication or pixel processing, making it an ideal choice for deep learning training and scientific computing.

NVIDIA’s CUDA platform, the open OpenCL standard, and AMD’s ROCm stack further expanded the GPU’s applications in general computing, but its high power consumption and limitations in complex logic processing make it difficult to replace the CPU’s core position.

The GPU has a vast number of cores; for instance, the full GH100 die behind NVIDIA’s highly sought-after H100 has 18,432 CUDA cores (shipping H100 products enable somewhat fewer, 16,896 on the SXM version), but each core only performs simple arithmetic.

In workplace terms, you can think of it as a Foxconn production line worker. Although each person only tightens screws (pixel computation), with 5,000 people working simultaneously, the speed of assembling an iPhone on the production line far surpasses that of a CEO working alone: more people (more cores) means greater power.
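The SIMT idea can be sketched in plain Python: every "thread" runs the same tiny function on its own element. The names `saxpy_kernel` and `launch` are made up for this example, and the loop only emulates what a real GPU would run in parallel across its cores.

```python
def saxpy_kernel(thread_id, alpha, x, y, out):
    """Work done by ONE thread: a single multiply-add on its own element."""
    if thread_id < len(x):                  # guard threads that fall past the data
        out[thread_id] = alpha * x[thread_id] + y[thread_id]

def launch(kernel, num_threads, *args):
    """Emulated launch: a real GPU runs these threads in parallel, not in a loop."""
    for thread_id in range(num_threads):
        kernel(thread_id, *args)

x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)

launch(saxpy_kernel, 8, 2.0, x, y, out)     # 8 threads launched, only 4 have data
print(out)
```

Each thread does almost nothing on its own; the throughput comes from running thousands of them at once.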

FPGA: Field-Programmable Gate Array (Contractor)

If higher flexibility and hardware-level optimization are required, the FPGA offers a middle ground between general-purpose processors (CPU, GPU) and fixed-function chips.

FPGA (Field-Programmable Gate Array) allows users to reconfigure hardware circuits through programmable logic blocks and wiring resources, enabling specific algorithms to be executed directly at hardware speed. This reconfigurable feature shines in communication base stations, edge AI inference, and prototype validation.

However, FPGA development requires expertise in hardware description languages, and the design cycle is long and costly. The main competitors in this field are Xilinx (now part of AMD) and Altera (Intel's FPGA business).

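From a structural perspective, an FPGA's logic has no fixed function until it is configured. Because the algorithm runs directly in hardware, latency for well-suited workloads can be around 5 to 10 times lower than on a CPU, although the actual gain depends heavily on the task.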

In workplace terms, FPGAs are like a flexible outsourcing team. Today, you can have them assemble phones (image processing), and tomorrow switch to soldering circuit boards (signal encryption). They can be moved wherever needed, but each time they switch roles, they require retraining (programming).
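One way to picture a programmable logic block is as a small lookup table (LUT) whose stored bits decide which logic function it computes. The sketch below is a simplified model under that assumption; the class name `Lut2` and its methods are hypothetical and do not correspond to any vendor tool.

```python
class Lut2:
    """A 2-input lookup table: four configuration bits define its logic function."""

    def __init__(self, truth_table):
        self.configure(truth_table)

    def configure(self, truth_table):
        """'Reprogram' the block by loading a new 4-entry truth table."""
        if len(truth_table) != 4 or any(bit not in (0, 1) for bit in truth_table):
            raise ValueError("need exactly four 0/1 entries")
        self.table = list(truth_table)

    def evaluate(self, a, b):
        """The two inputs select one of the four stored bits."""
        return self.table[(a << 1) | b]

AND = [0, 0, 0, 1]
XOR = [0, 1, 1, 0]

lut = Lut2(AND)
as_and = [lut.evaluate(a, b) for a in (0, 1) for b in (0, 1)]

lut.configure(XOR)                          # same hardware, new job
as_xor = [lut.evaluate(a, b) for a in (0, 1) for b in (0, 1)]

print(as_and, as_xor)
```

Loading a new table is the "retraining" step: the block itself does not change, only its configuration.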

ASIC (TPU as one example): Application-Specific Integrated Circuit (Accountant)

In contrast to the flexibility of FPGAs, ASICs (Application-Specific Integrated Circuits) are customized for a single algorithm and cannot be modified after fabrication.

ASICs achieve extreme performance and energy efficiency through streamlined circuits, but their high R&D costs and non-modifiable nature limit their application scope, typically only being economically viable in large-scale production scenarios (such as AI acceleration in data centers or mining).

Examples include the SHA-256 acceleration chips in Bitcoin mining machines and Google’s TPU (Tensor Processing Unit), which was built to accelerate neural network workloads and was originally paired with TensorFlow.

Structurally, an ASIC's circuits are fixed, with no redundancy; for instance, the ASIC in a Bitcoin mining machine is often quoted as having an energy efficiency on the order of 1,000 times that of a CPU.
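To make the mining example concrete, here is a toy version of the loop that a mining ASIC hardwires: hash a block header with double SHA-256 and try nonces until the result falls below a target. The header bytes, the `difficulty_bits` parameter, and the big-endian comparison are simplifications assumed for this sketch and do not follow Bitcoin's real block format.

```python
import hashlib

def double_sha256(data: bytes) -> bytes:
    """SHA-256 applied twice, the hash that mining hardware is built around."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()

def mine(header: bytes, difficulty_bits: int, max_nonce: int = 1_000_000):
    """Try nonces until the hash, read as an integer, falls below the target."""
    target = 1 << (256 - difficulty_bits)
    for nonce in range(max_nonce):
        digest = double_sha256(header + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce, digest.hex()
    return None                             # no nonce found within the budget

result = mine(b"toy block header", difficulty_bits=12)
print(result)
```

A CPU runs this as general-purpose instructions, while an ASIC implements only this hash pipeline in silicon, which is where the efficiency gap comes from.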

You can think of ASICs as the specialized accountants in your company’s finance team, who can process calculations (algorithm processing speed) 1,000 times faster than the CEO, but ask them to assemble phones (graphics rendering)? They would immediately collapse, thinking: “Don’t you know what role does what work?”

TPUs are optimized for matrix multiplication, using systolic arrays to reduce data movement, akin to a senior accountant who is the best in the company at this one task.
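The data-reuse idea behind such arrays (usually called systolic arrays) can be emulated in a few lines. In this toy model, each cell (i, j) keeps one running sum, and operands arrive on a skewed schedule as values of A move right and values of B move down. It is an output-stationary sketch chosen for clarity, not a description of the dataflow in Google's actual hardware, and `systolic_matmul` is a made-up name.

```python
def systolic_matmul(a, b):
    """Emulate an n x m grid of multiply-accumulate cells computing a @ b."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    acc = [[0] * m for _ in range(n)]       # one accumulator per cell
    # The last operand pair reaches cell (n-1, m-1) at step n + m + k - 3.
    for step in range(n + m + k - 2):
        for i in range(n):
            for j in range(m):
                s = step - i - j            # operand pair reaching cell (i, j) now
                if 0 <= s < k:
                    acc[i][j] += a[i][s] * b[s][j]
    return acc

product = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product)
```

Each input value is fetched once and then passed from neighbor to neighbor, so the cells spend their time multiplying rather than waiting on memory.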

DPU: Data Processing Unit (Logistics Coordinator)

In recent years, with the increasing complexity of data centers, DPUs (Data Processing Units) have gradually emerged. These chips integrate RDMA (Remote Direct Memory Access) and virtualization acceleration engines, focusing on offloading network, storage, and security tasks from the CPU.

DPUs grew out of smart network cards (SmartNICs). They combine multi-core Arm processors with hardware acceleration modules to speed up data forwarding and improve virtualization performance, and they have become core components of cloud computing and software-defined networking. Examples include the AWS Nitro System and NVIDIA's BlueField series.

They function like dedicated logistics coordinators in a company, sorting incoming packages (network data packets) so that the CEO doesn’t have to personally handle the logistics (CPU offloading), speeding up the company’s inventory and shipping processes.

NPU: Neural Processing Unit (Design Team)

Meanwhile, NPUs (Neural Processing Units) have emerged in edge computing, capable of optimizing for convolutional neural networks and supporting INT8 low-precision calculations.

NPUs integrate arrays of MAC (multiply-accumulate) units and specialize in workloads such as image recognition, for example real-time image processing in phone camera systems or visual recognition in autonomous driving.
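A minimal sketch of what INT8 multiply-accumulate means in practice: floats are mapped to 8-bit integers with a scale factor, the dot product is accumulated in integers, and the result is scaled back. The scale values and function names here are assumptions for illustration; real NPUs and their toolchains choose scales through calibration.

```python
def quantize(values, scale):
    """Map floats to INT8: divide by the scale, round, clamp to [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]

def int8_dot(qa, qb):
    """Multiply-accumulate over INT8 inputs; the running sum uses a wider integer."""
    acc = 0
    for x, w in zip(qa, qb):
        acc += x * w
    return acc

activations = [0.5, -1.0, 0.25, 2.0]
weights = [1.0, 0.5, -2.0, 0.75]
a_scale = w_scale = 2.0 / 127               # hypothetical per-tensor scales

qa = quantize(activations, a_scale)
qw = quantize(weights, w_scale)

approx = int8_dot(qa, qw) * a_scale * w_scale
exact = sum(x * w for x, w in zip(activations, weights))
print(approx, exact)
```

The integer result lands close to the floating-point one, which is why low-precision arithmetic is usually good enough for inference while costing far less power.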

For instance, Huawei's Ascend AI processors and the Neural Engine in Apple's A-series chips are NPUs, which achieve efficient AI inference at low power through hardware acceleration for operations like convolution and pooling.

They are akin to the design team in our mobile company, who can produce posters at an impressive speed, but ask them to prepare financial reports (data calculations) or assemble phones (graphics rendering)? They would simply refuse, thinking, “Whoever wants to do it can do it.”

Final Thoughts (Three Surprises)

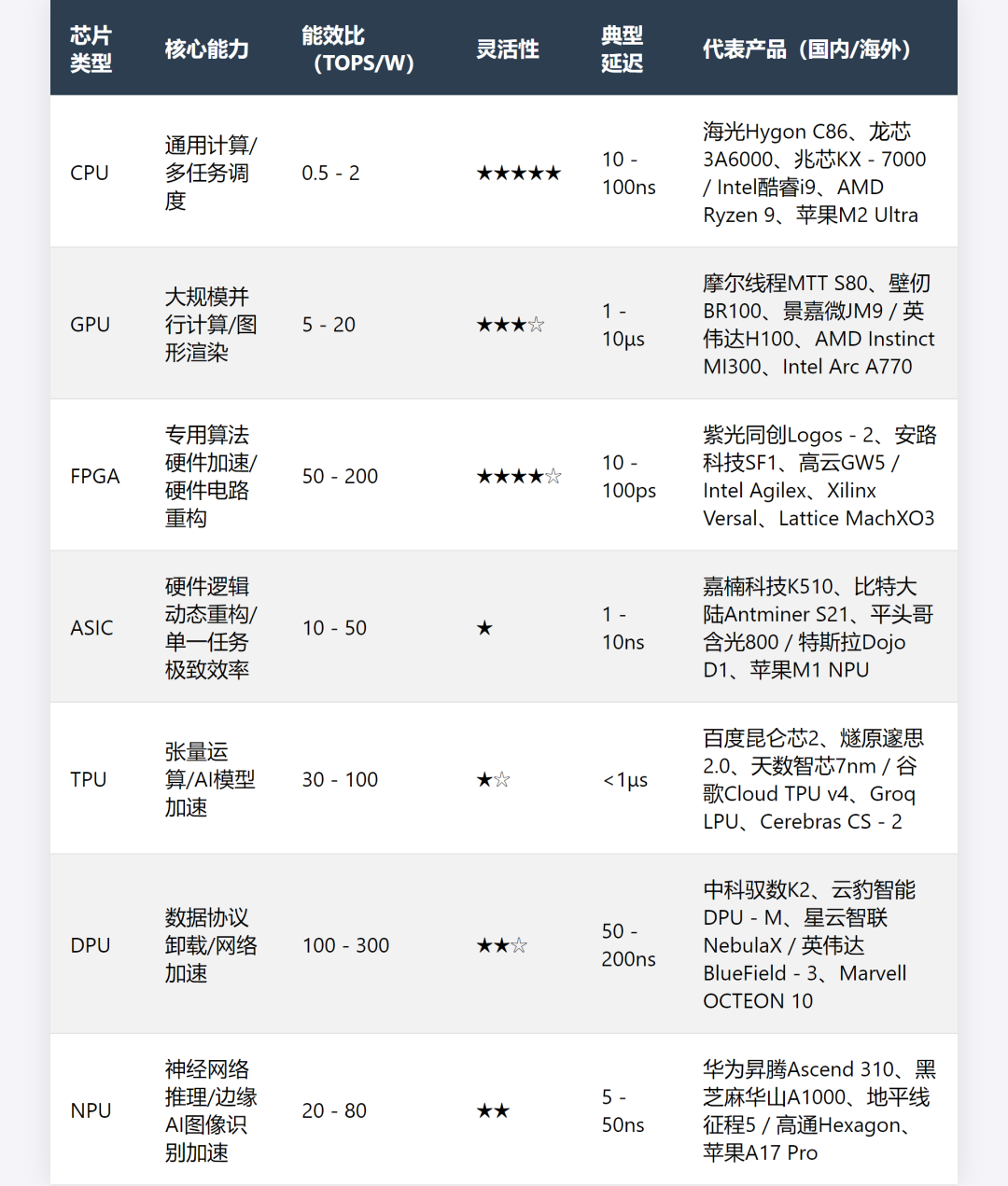

Finally, we will summarize with a chip capability table, a workplace comparison reference chart, and a simple selection tongue twister to help everyone understand more clearly.

If you find this article useful, please share and like it so more people can see it.

Chip/Workplace Comparison (No offense intended, just for reference):

- CEO (CPU): Can do everything but inefficient → Suitable for daily office work
- Worker (GPU): Only good at heavy lifting but many in number → Suitable for gaming/AI training
- Outsourcing (FPGA): Flexible but costly → Suitable for military/communication base stations
- Accountant (ASIC): Specialized but rigid → Suitable for mining/mobile chips
- Senior Accountant (TPU): Arithmetic genius among ASICs but specialized → For Google/Baidu large models
- Logistics Department (DPU): Allows the CEO not to handle logistics personally → Essential for cloud computing data centers
- Design Team (NPU): Image editing wizard → Mobile photography/autonomous driving vision

Selection Tongue Twister:

For flexible needs, choose FPGA; for energy efficiency, ASIC is the first choice. For AI training, TPU is fast; for daily office work, CPU is suitable. For gaming graphics, GPU is cool; for cloud computing, use DPU. For photo optimization, NPU is strong; domestic chips are just starting to shine!

We welcome everyone to share and discuss their views in the comments section, and we hope domestic chips continue to improve. We will keep covering the differences between mainstream international and domestic chips, so don’t forget to star and follow us.
