If you have been following Intel’s and AMD’s latest CPUs, you may have noticed that new products, whether Intel’s Core Ultra or AMD’s Ryzen 8000G, now include an NPU module to meet the demands of the AI era. You might ask: what is an NPU, and why do I need one?

Neural Networks and Neural Network Algorithms

NPU stands for “Neural Processing Unit.” To understand the NPU, then, you first need to know what a neural network is and what role it plays in AI technologies and applications.

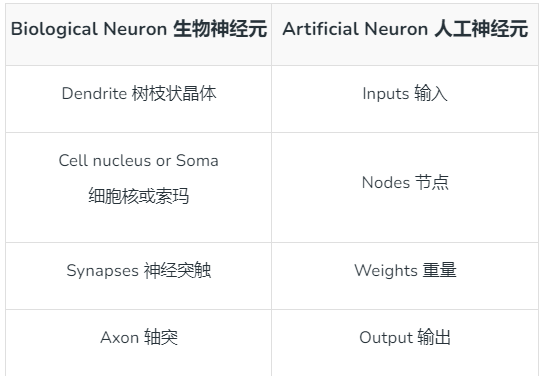

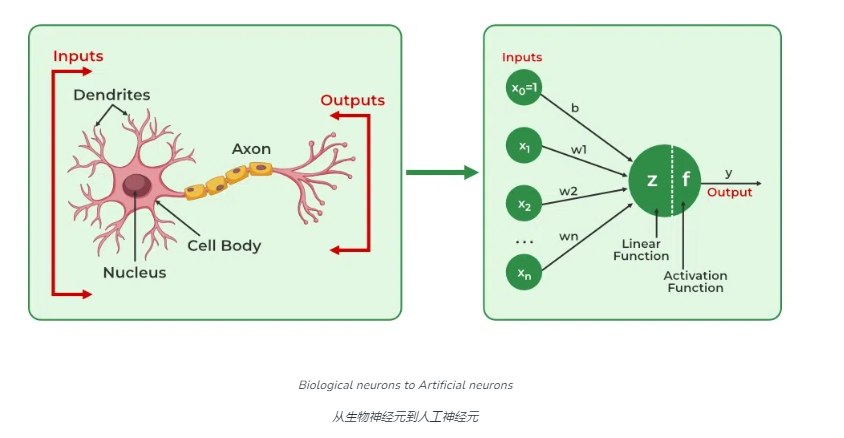

A neural network is a computational model that mimics the structure of neurons in the human brain, used for pattern recognition and processing complex data. It consists of many nodes (similar to neurons) that interact through connections (similar to synapses).

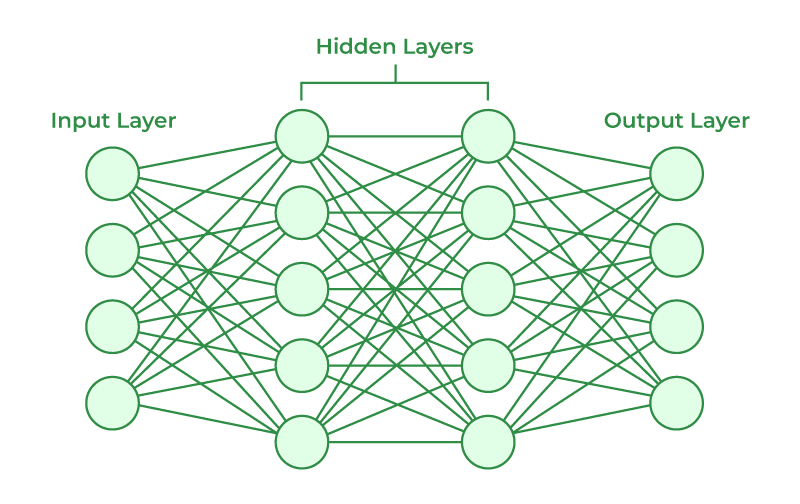

Artificial neural networks are built from artificial neurons, called units or nodes. These units are arranged in layers, which together form the complete network. A layer may contain only a few dozen units or millions of them, depending on how complex the hidden patterns are that the network must learn from the dataset.

Typically, an artificial neural network has an input layer, an output layer, and one or more hidden layers in between. The input layer receives the external data that the network needs to analyze or learn from. This data then passes through the hidden layers, which transform it into representations the output layer can use. Finally, the output layer produces the network’s response to the input data.

Each connection has a weight that represents how strongly information is passed along it. By adjusting these weights, the neural network learns and stores information, which it then uses to classify, recognize, and make predictions about input data.
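The flow described above (data entering the input layer, passing through weighted connections into a hidden layer, and reaching the output layer) can be sketched in a few lines of plain Python. The layer sizes, weight values, and the sigmoid activation function are illustrative assumptions, not details from the text.

```python
import math

def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """One fully connected layer: each unit takes a weighted sum of every
    input, adds its bias, and applies the activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]

# Hypothetical network: 3 inputs -> 2 hidden units -> 1 output unit.
hidden_weights = [[0.2, -0.4, 0.1],
                  [0.5, 0.3, -0.2]]
hidden_biases = [0.0, 0.1]
output_weights = [[0.7, -0.6]]
output_biases = [0.05]

def forward(inputs):
    hidden = dense_layer(inputs, hidden_weights, hidden_biases)  # hidden layer
    return dense_layer(hidden, output_weights, output_biases)    # output layer

x = [1.0, 0.5, -1.0]   # data arriving at the input layer
print(forward(x))      # a single value between 0 and 1
```

Every unit is just a weighted sum followed by an activation; changing the weights changes the output, and that is what training adjusts.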

For comparison, in the human brain, each neuron’s cell body (soma), which contains the nucleus, integrates the impulses it receives. If the combined impulse reaches a threshold, the neuron generates an action potential that travels along its axon. Learning is widely thought to rely on synaptic plasticity: the ability of synapses to strengthen or weaken over time based on their activity.
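The biological picture maps onto a classic artificial neuron: weights play the role of synapse strengths, the weighted sum stands in for the cell body integrating impulses, and a step function mimics the firing threshold. The sketch below assumes a simple threshold unit (perceptron-style); modern networks usually replace the hard threshold with a smooth activation such as sigmoid or ReLU.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) only if the weighted input reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))  # integrate incoming "impulses"
    return 1 if total >= threshold else 0

# Hypothetical example: two inputs with equal connection strength.
weights = [0.6, 0.6]
print(threshold_neuron([1, 0], weights, threshold=1.0))  # 0: one input alone is too weak
print(threshold_neuron([1, 1], weights, threshold=1.0))  # 1: the combined input fires

# Analogue of plasticity: strengthening one connection changes the behavior.
weights[0] = 1.2
print(threshold_neuron([1, 0], weights, threshold=1.0))  # 1: input 0 alone now suffices
```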

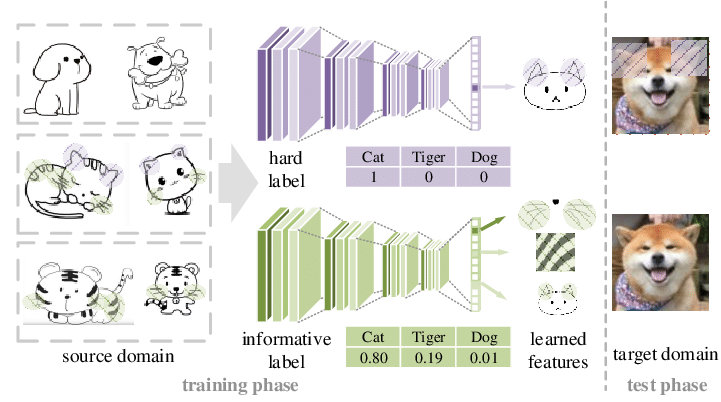

In artificial neural networks, learning is commonly done with backpropagation, a technique that adjusts the weights between nodes based on the error, that is, the difference between the predicted and actual results. Tuning the weights this way lets the network perform specific tasks, such as image recognition, speech recognition, and natural language processing, more accurately. Deep learning, an important branch of neural network algorithms, uses multi-layer (deep) network structures to handle more complex data and tasks.
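Backpropagation is easier to follow with the arithmetic written out. The following is a minimal sketch in plain Python, assuming a tiny 2-2-1 network with sigmoid activations, a squared-error loss, a learning rate of 0.5, and a toy dataset (logical OR); all of these are illustrative choices. Deep-learning frameworks compute the same gradients automatically for much larger networks.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

random.seed(0)  # reproducible starting weights
# Hypothetical 2-2-1 network: 2 inputs, 2 hidden units, 1 output unit.
W1 = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(2)]  # W1[j][i]: input i -> hidden j
b1 = [0.0, 0.0]
W2 = [random.uniform(-1, 1) for _ in range(2)]                      # W2[j]: hidden j -> output
b2 = 0.0

def forward(x):
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y

def dataset_loss(data):
    """Mean squared error between predictions and targets."""
    return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)

def train_step(x, t, lr=0.5):
    """One backpropagation + gradient-descent update for a single example."""
    global b2
    h, y = forward(x)
    # Error signal at the output: d(loss)/d(pre-activation),
    # with loss = (y - t)**2 and sigmoid'(z) = y * (1 - y).
    delta_out = 2 * (y - t) * y * (1 - y)
    # Propagate it back through each hidden unit's outgoing weight
    # (computed before W2 is modified).
    delta_hidden = [delta_out * W2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    # Move every weight a small step against its gradient.
    for j in range(len(W2)):
        W2[j] -= lr * delta_out * h[j]
    b2 -= lr * delta_out
    for j, row in enumerate(W1):
        for i in range(len(row)):
            row[i] -= lr * delta_hidden[j] * x[i]
        b1[j] -= lr * delta_hidden[j]

data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]  # toy task: logical OR
before = dataset_loss(data)
for epoch in range(2000):
    for x, t in data:
        train_step(x, t)
after = dataset_loss(data)
print(f"loss before training: {before:.4f}, after: {after:.4f}")
```

The key step is `delta_hidden`: the output error is sent backwards through the same weights that carried the signal forward, which is where the name “backpropagation” comes from.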

In the field of artificial intelligence, especially in generative AI, neural networks and neural network algorithms play a crucial role. Generative AI refers to AI systems that can create new content, for example by writing text, painting, or composing music. These systems typically rely on deep neural networks that learn from large numbers of data samples and then generate new content resembling real-world examples.

Confused? Let’s take an example.

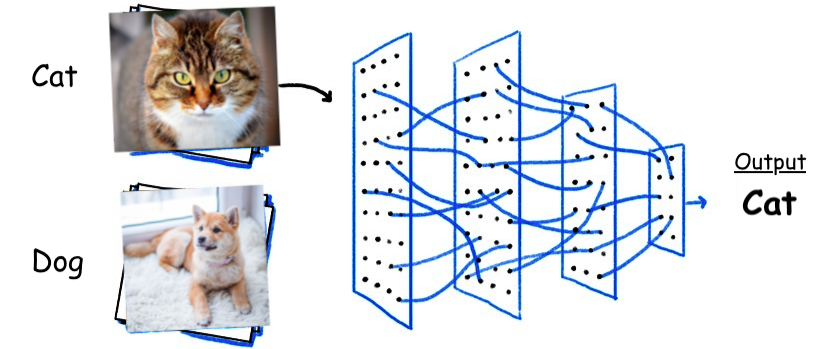

Suppose you want to teach an AI to recognize cats using an artificial neural network. You train the network by showing it thousands of different cat images. Once it has been sufficiently trained, it needs to be tested to see whether it can correctly identify cats.

To test it, the network is asked to classify a set of images, deciding for each one whether it shows a cat. Its answers are then checked against human-provided labels stating whether each image really is a cat. If the network misidentifies an image, backpropagation is used to adjust what it learned during training.
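Checking the network’s answers against human labels amounts to a simple comparison. The sketch below assumes the network’s output has already been turned into a yes/no answer per image; the predictions and labels are made-up values for illustration.

```python
def evaluate(predictions, labels):
    """Compare the network's cat / not-cat answers with human-provided labels.

    Returns the error rate and the indices of the misclassified images,
    i.e. the cases where the network still goes wrong.
    """
    if len(predictions) != len(labels):
        raise ValueError("need exactly one label per prediction")
    wrong = [i for i, (p, t) in enumerate(zip(predictions, labels)) if p != t]
    return len(wrong) / len(labels), wrong

# Hypothetical answers for five test images (True = "this is a cat").
predictions = [True, False, True, True, False]
labels      = [True, False, False, True, True]   # human-provided ground truth
error_rate, misclassified = evaluate(predictions, labels)
print(error_rate, misclassified)  # 0.4 [2, 4]
```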

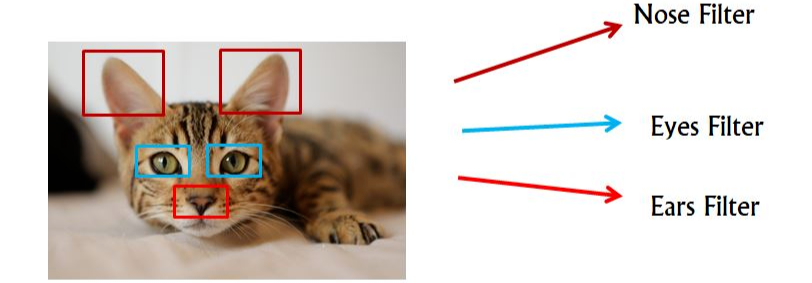

A single neuron may specialize in recognizing certain features of a pattern and “communicate” with other nodes.

You can think of the learning process as a game: each time the network correctly identifies a cat, it scores a point; each time it is wrong, it scores nothing or loses points. The network combines information from images processed by different neurons, continually distilling the features that characterize a “cat,” discarding irrelevant image information, and striving to identify cats correctly, score highly, and recognize them faster. This process continues until the network can identify cats in images with a minimal error rate.
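In practice, the “score” in this analogy is a loss function, and training minimizes a penalty rather than maximizing points. A common choice for yes/no tasks like cat recognition is binary cross-entropy, sketched below; the text does not name a specific loss, so this choice is an assumption.

```python
import math

def binary_cross_entropy(label: int, prob_cat: float, eps: float = 1e-12) -> float:
    """Penalty for predicting prob_cat when the true label is 1 (cat) or 0 (not cat).

    Confident correct answers cost almost nothing; confident wrong answers
    cost a lot. eps keeps log() away from zero.
    """
    p = min(max(prob_cat, eps), 1 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

print(round(binary_cross_entropy(1, 0.9), 3))  # 0.105: confident and right
print(round(binary_cross_entropy(1, 0.5), 3))  # 0.693: unsure
print(round(binary_cross_entropy(1, 0.1), 3))  # 2.303: confident and wrong
```

Because the penalty grows sharply for confident mistakes, minimizing it pushes the network to be both correct and appropriately certain.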

Similarly, by training neural networks, generative AI can compose new music, write articles, or generate realistic images. In generative AI, the role of the neural network is to learn the underlying structure of the data and then generate new data instances based on that structure.
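Real generative networks are far too large to show here, but the core idea (learn the structure of the training data, then sample new instances from it) can be illustrated with a much simpler stand-in. The example below uses a character-level bigram model, which is a counting method and not a neural network, trained on a hypothetical string. An autoregressive neural language model replaces the count table with learned weights but likewise generates text one step at a time.

```python
import random
from collections import Counter, defaultdict

def learn_bigrams(text):
    """'Training': count which character tends to follow which."""
    counts = defaultdict(Counter)
    for current, nxt in zip(text, text[1:]):
        counts[current][nxt] += 1
    return counts

def generate(counts, start, length, rng):
    """'Generation': repeatedly sample the next character from the learned counts."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # no known continuation: stop early
            break
        chars, weights = zip(*followers.items())
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)

corpus = "the cat sat on the mat. the cat ate the rat."  # hypothetical training data
model = learn_bigrams(corpus)
print(generate(model, "t", 30, random.Random(42)))      # new text in a similar style
```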

The Emergence of the NPU

The Neural Processing Unit (NPU) is a processor specifically designed to accelerate neural network computations. Unlike traditional Central Processing Units (CPUs) and Graphics Processing Units (GPUs), NPUs are optimized at the hardware level for AI computations to enhance performance and energy efficiency.

Intel’s NPU architecture

The NPU uses its purpose-built hardware to perform the mathematical operations at the heart of neural network algorithms, such as matrix multiplication and convolution. These operations are the core workloads of both training and inference. Because its hardware is optimized for them, an NPU can execute these operations more efficiently and with lower energy consumption.
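Both operations reduce to long runs of multiply-accumulate (MAC) steps, which is the pattern NPUs are typically built to execute in bulk. The plain-Python sketch below spells out those loops and counts the MACs; it only illustrates the arithmetic and says nothing about how any particular NPU schedules it. The convolution follows the common deep-learning convention of not flipping the kernel (strictly, cross-correlation), with no padding and stride 1.

```python
def matmul(a, b):
    """Plain matrix multiplication, counting multiply-accumulate (MAC) steps."""
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0] * cols for _ in range(rows)]
    macs = 0
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                out[i][j] += a[i][k] * b[k][j]  # one multiply-accumulate
                macs += 1
    return out, macs

def conv2d(image, kernel):
    """'Valid' 2-D convolution (no padding, stride 1), counting MAC steps."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0] * ow for _ in range(oh)]
    macs = 0
    for y in range(oh):
        for x in range(ow):
            for dy in range(kh):
                for dx in range(kw):
                    out[y][x] += image[y + dy][x + dx] * kernel[dy][dx]
                    macs += 1
    return out, macs

product, n = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product, n)  # [[19, 22], [43, 50]] 8

features, m = conv2d([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 0], [0, -1]])
print(features, m)  # [[-4, -4], [-4, -4]] 16
```

Even these toy inputs need 8 and 16 MACs; a real network layer needs millions or billions, which is why dedicated MAC hardware pays off.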

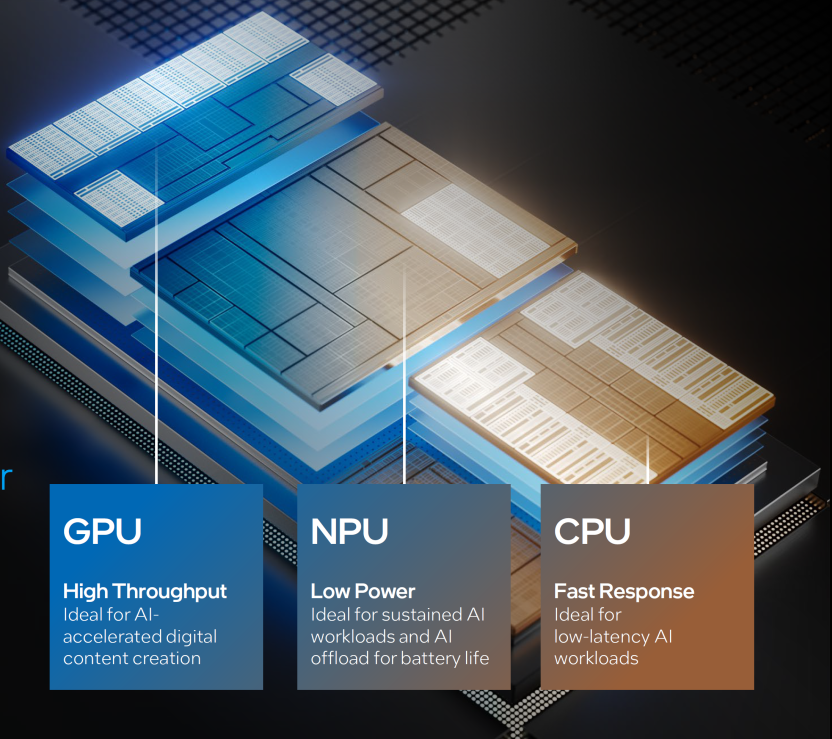

Differences Between NPU, CPU, and GPU

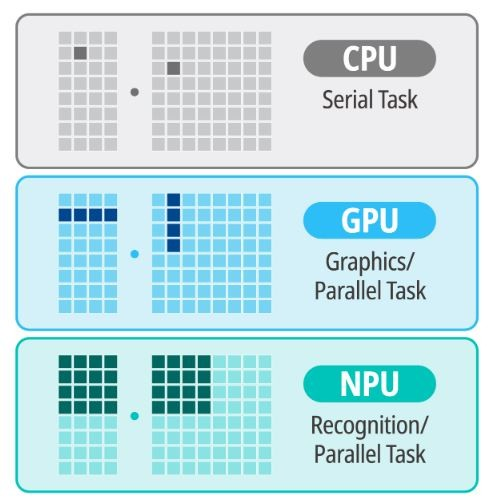

The CPU is a general-purpose processor designed to execute a wide range of computational tasks. It has strong flexibility and programmability but may not be efficient for specific tasks (like AI computations).

The GPU was originally designed for graphics and video rendering. It excels at parallel computing, which is why it is widely used in AI. However, GPUs are not designed specifically for AI computations and may be less efficient than NPUs for certain types of AI tasks.

Put simply, the diagram above illustrates the differences among the three: CPUs execute tasks (instructions) linearly and serially, trading efficiency for generality; GPUs excel at parallel processing, especially the specialized parallel work of graphics, and achieve higher efficiency on it; NPUs perform “parallel cognitive processing,” achieving higher efficiency on AI and machine learning workloads.
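The serial-versus-parallel distinction is easy to see in matrix multiplication: every output element is an independent dot product, so the work can be spread across as many compute units as the hardware offers. The sketch below only demonstrates that independence, using Python’s `concurrent.futures` thread pool as a stand-in for parallel hardware; because of CPython’s global interpreter lock, it is not a way to obtain real speedups.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, col):
    return sum(r * c for r, c in zip(row, col))

def matmul_serial(a, b):
    """One output element after another, in a fixed order (CPU-style)."""
    cols = list(zip(*b))
    return [[dot(row, col) for col in cols] for row in a]

def matmul_parallel(a, b, workers=4):
    """All dot products submitted at once; none depends on another's result."""
    cols = list(zip(*b))
    jobs = [(i, j) for i in range(len(a)) for j in range(len(cols))]
    rows_in = [a[i] for i, _ in jobs]
    cols_in = [cols[j] for _, j in jobs]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(dot, rows_in, cols_in))  # results come back in job order
    n = len(cols)
    return [flat[i * n:(i + 1) * n] for i in range(len(a))]

a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(matmul_serial(a, b))                          # [[58, 64], [139, 154]]
print(matmul_serial(a, b) == matmul_parallel(a, b))  # True
```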

Compared to CPUs and GPUs, NPUs have significant advantages in the following areas:

1. **Performance**: NPUs are specifically optimized for AI computations and can deliver higher performance on those workloads.

2. **Energy Efficiency**: NPUs are typically more energy-efficient than CPUs and GPUs when executing AI tasks.

3. **Area Efficiency**: NPUs have a compact design that delivers substantial computational capability in a small chip area.

4. **Dedicated Hardware Acceleration**: NPUs often include dedicated hardware accelerators, such as tensor accelerators and convolution accelerators, which can significantly speed up AI task processing.

The Significance of NPU in CPUs

The emergence of NPUs is significant for advancing artificial intelligence, especially generative AI. As AI applications grow in number and sophistication, their demand for computing resources keeps increasing. Integrating an NPU into the CPU offers an efficient, energy-saving solution that lets AI run on a wide range of devices, including smartphones, autonomous vehicles, and smart home products. It also takes AI work off the CPU and GPU, so that each processor can focus on the tasks it handles best.

The high performance and low power consumption of NPUs enable AI workloads to run in real time on mobile devices, giving users a smoother, more natural interactive experience. NPUs also help lower the cost of deploying AI applications, allowing more enterprises and developers to use AI to create new value.

In summary, as one of the core technologies of the AI era, NPUs not only promote the advancement of artificial intelligence technologies but also have a profound impact on various industries. With the continuous progress and optimization of NPU technology, we have reason to believe that future AI applications will become more intelligent, efficient, and widespread.

Editor: Xiong Le
