Core Logic:

ASIC AI chips are designed to optimize specific algorithms. When executing those tasks they deliver more computing power and efficiency than GPUs, while consuming less power and costing less. Major overseas companies such as Google, Meta, and Amazon are investing heavily in ASIC chips, and domestic companies are following suit. According to Marvell’s forecast, the market for AI ASIC (custom AI) chips will grow from $6.6 billion in 2023 to $55.4 billion by 2028, a compound annual growth rate (CAGR) of 53%. Companies across the industry chain stand to gain broad development opportunities.

Related Companies:

Chipone Technology, Aojie Technology, Cambricon, Canxin Technology, Huidian Technology, Xingsen Technology, and Placo New Materials, etc.

01 Basic Concept of ASIC Chips

ASIC chips (Application-Specific Integrated Circuits) are integrated circuits designed and manufactured to meet specific user needs and electronic system requirements. Unlike general-purpose chips such as CPUs and GPUs, ASICs are built for a single task. Dedicated audio and video processors and AI chips, for example, all fall into the ASIC category. Their main advantages include small size, low power consumption, high reliability, strong performance, strong confidentiality, and low cost. They are used in fields such as consumer electronics, network communications, cryptocurrency mining, automotive customization, and medical devices.

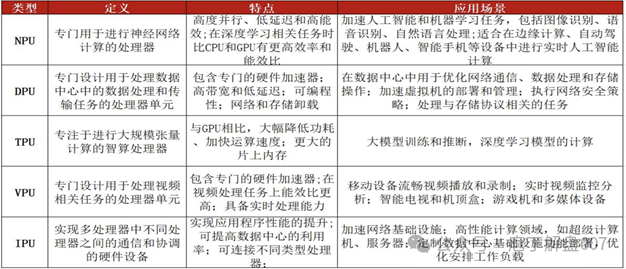

Based on their purpose and function, ASIC chips can be further divided into TPUs, NPUs, DPUs, and other ASICs.

- The TPU is a high-performance computing chip designed specifically for machine learning and artificial intelligence, and it can efficiently process large-scale matrix operations.
- The NPU mimics the way human neurons and synapses work in order to handle deep learning tasks.
- The DPU is mainly used for packet processing and security functions in data centers and network equipment, providing efficient data processing and security.

ASICs also include other special-purpose chips, such as those used for cryptocurrency mining.

Figure 1: Comparison of Different Types of ASIC Chips

Source: Zhongtai Securities

02 Advantages of AI ASIC Chips Compared to GPUs

(1) ASICs deliver superior computing power on specific algorithms

ASIC chips can be optimized for specific algorithms, so they deliver strong computing power on the tasks they target. In AI deep learning in particular, ASICs can perform matrix operations and data processing efficiently. GPUs have excellent parallel computing capabilities and many computing cores, but on certain specific tasks they may not match an ASIC’s computing power. Moreover, an ASIC’s compute resources are matched closely to the target algorithm, and the entire chip architecture is customized to execute that task efficiently.

(2) ASIC chips consume less power per unit of computing power

Compared with CPUs and GPUs, ASIC chips consume less energy per unit of computing power. Because they are designed for specific tasks, they avoid unnecessary energy use. GPU designs are more general-purpose, which can waste some energy when executing a single task. For example, a GPU consumes about 4 watts per unit of computing power, while an ASIC consumes only about 2 watts. Similarly, Microsoft’s Maia 100 has an energy efficiency ratio of 1.60, higher than the 1.41 of NVIDIA’s H200.
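The comparison above reduces to simple ratios. The Python sketch below is an illustrative calculation that takes the quoted figures at face value; the text does not state the units behind "unit of computing power" or the efficiency ratio. It shows that 2 W versus 4 W means half the energy for the same work, and that 1.60 versus 1.41 is roughly a 13.5% efficiency advantage.

```python
"""Ratio arithmetic for the power-efficiency figures quoted in the text.

Assumption: the figures are used exactly as quoted; the source does not
state the units behind "unit of computing power" or "efficiency ratio".
"""


def relative_gain(value: float, baseline: float) -> float:
    """Return how much larger `value` is than `baseline`, as a fraction."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return value / baseline - 1.0


GPU_WATTS_PER_UNIT = 4.0   # quoted: GPU, about 4 W per unit of compute
ASIC_WATTS_PER_UNIT = 2.0  # quoted: ASIC, about 2 W per unit of compute

# Share of the GPU's energy that the ASIC needs for the same amount of work.
asic_energy_share = ASIC_WATTS_PER_UNIT / GPU_WATTS_PER_UNIT

MAIA_100_EFFICIENCY = 1.60  # quoted efficiency ratio, Microsoft Maia 100
H200_EFFICIENCY = 1.41      # quoted efficiency ratio, NVIDIA H200

maia_advantage = relative_gain(MAIA_100_EFFICIENCY, H200_EFFICIENCY)

if __name__ == "__main__":
    print(f"ASIC energy for equal work: {asic_energy_share:.0%} of the GPU's")
    print(f"Maia 100 efficiency vs H200: +{maia_advantage:.1%}")
```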

(3) ASICs have lower inference costs

Because ASIC hardware is built for specific tasks, it leaves out general-purpose acceleration logic that those tasks do not need. As a result, its cost per unit of computing power is lower than a GPU’s. For example, the unit compute costs of Google’s TPUv5 and Amazon’s Trainium 2 are only 70% and 60% of NVIDIA’s H100’s, respectively, which offers significant cost savings for suitable applications.
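A lower unit cost translates directly into more compute for the same budget. If a unit of compute costs a fraction f of the H100’s cost, the same spending buys 1/f times as much. The illustrative sketch below applies this to the two quoted fractions; it gives about 1.43x for 70% and about 1.67x for 60%.

```python
"""How far a fixed budget goes when compute costs a fraction of the H100's.

The cost fractions are the ones quoted in the text; the H100 is the 1.0
baseline. If a unit of compute costs f times the baseline, the same budget
buys 1 / f times as much compute.
"""

COST_FRACTION_VS_H100 = {
    "Google TPU v5": 0.70,      # quoted: 70% of the H100's unit cost
    "Amazon Trainium 2": 0.60,  # quoted: 60% of the H100's unit cost
}


def compute_per_budget(cost_fraction: float) -> float:
    """Compute bought per dollar, relative to an H100 baseline of 1.0."""
    if cost_fraction <= 0:
        raise ValueError("cost_fraction must be positive")
    return 1.0 / cost_fraction


if __name__ == "__main__":
    for chip, fraction in COST_FRACTION_VS_H100.items():
        print(f"{chip}: {compute_per_budget(fraction):.2f}x the compute per dollar")
```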

(4) ASICs excel in inference applications

In the inference stage, the AI model has already been fully trained and must quickly predict and classify input data. At this stage the chip’s precision requirements are moderate, but the demands on computing speed, energy efficiency, and cost are strict. An ASIC’s highly customized design can be optimized for inference to achieve high-speed computation at low power. In large-scale deployments, its cost advantage becomes increasingly pronounced.

03 Mainstream Companies’ Progress in Developing ASIC Chips

Google began exploring ASICs in 2006 and unveiled its first TPU at Google I/O in 2016. It then iterated to TPU v5p in 2023 and TPU v6e in 2024, consistently leading with advanced process nodes and high computing power. At Google Cloud Next 25 in April 2025, Google officially released its seventh-generation TPU, Ironwood, which set a new industry record with 42.5 EFLOPS of computing power (for a full 9,216-chip pod) and lower training costs. OpenAI has recently been reported to be renting Google TPUs to power ChatGPT and other products and to reduce inference costs. This is a milestone in the acceptance of compute ASICs by top AI developers.
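The 42.5 EFLOPS figure is a pod-level number. Google’s Ironwood announcement gives it as the peak of a full pod of 9,216 chips; this chip count is taken from that announcement, not from the text above. Dividing the two gives the implied per-chip peak of roughly 4.6 PFLOPS. A minimal sketch of the arithmetic:

```python
"""Implied per-chip peak compute for a TPU Ironwood pod.

POD_EFLOPS comes from the text. CHIPS_PER_POD (9,216) is taken from
Google's Ironwood announcement, not from the text.
"""

POD_EFLOPS = 42.5      # peak compute of one full pod, in exaFLOPS
CHIPS_PER_POD = 9_216  # chips in a full Ironwood pod

EXA_TO_PETA = 1_000    # 1 EFLOPS = 1,000 PFLOPS

per_chip_pflops = POD_EFLOPS * EXA_TO_PETA / CHIPS_PER_POD

if __name__ == "__main__":
    print(f"Implied peak per chip: {per_chip_pflops:.2f} PFLOPS")
```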

Amazon’s first-generation Trainium became generally available in 2022, and the Trainium2, released in 2024, focuses on improving cloud energy efficiency. Trainium2 is currently used to train large models by Anthropic, an AI startup in which Amazon has invested. Amazon’s Trainium3 is reportedly scheduled for mass production by the end of 2025.

Meta unveiled its in-house AI computing chip, MTIA v1, in May 2023. It uses the RISC-V architecture and TSMC’s 7nm process and delivers 51.2 TFLOPS of FP16 compute. Meta showcased its first-generation MTIA at the Hot Chips 2024 conference. Nomura Securities recently reported that Meta is expected to launch its next-generation AI ASIC, the MTIA T-V1, in the fourth quarter of 2025. The chip is designed by Broadcom and reportedly features a 36-layer PCB and hybrid cooling, with specifications said to exceed those of NVIDIA’s next-generation AI chip “Rubin”. Meta may also launch the MTIA T-V1.5 (V1.5) ASIC in mid-2026, which is expected to be significantly more powerful than V1. Its interposer could be twice the size of V1’s and exceed 5 times the reticle size, similar to or slightly larger than that of NVIDIA’s next-generation Rubin GPU.
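To put "more than 5 times the reticle size" in perspective, a standard lithography scanner exposes a field of at most 26 mm x 33 mm, or 858 mm². This is an industry figure, not one stated in the text. Under that assumption, the reported multiples imply an interposer of more than about 4,290 mm² for V1.5. If V1.5 is exactly twice V1, they also imply more than about 2,145 mm² for V1. A rough sketch:

```python
"""Rough interposer-area arithmetic for the reported MTIA T-V1.5 figures.

Assumption: a full-field reticle of 26 mm x 33 mm (a standard scanner
limit, not stated in the text). The multiples come from the report.
"""

RETICLE_WIDTH_MM = 26.0
RETICLE_HEIGHT_MM = 33.0
reticle_area_mm2 = RETICLE_WIDTH_MM * RETICLE_HEIGHT_MM  # 858 mm^2

V15_RETICLE_MULTIPLE = 5.0  # reported: "exceeding 5 times the reticle size"
V15_TO_V1_RATIO = 2.0       # reported: about twice the size of V1's interposer

# Lower bound on the V1.5 interposer area.
v15_min_area_mm2 = V15_RETICLE_MULTIPLE * reticle_area_mm2
# If V1.5 is exactly twice V1, V1's interposer exceeds half of that bound.
v1_implied_area_mm2 = v15_min_area_mm2 / V15_TO_V1_RATIO

if __name__ == "__main__":
    print(f"Reticle field: {reticle_area_mm2:.0f} mm^2")
    print(f"V1.5 interposer: > {v15_min_area_mm2:.0f} mm^2")
    print(f"Implied V1 interposer: > {v1_implied_area_mm2:.0f} mm^2")
```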

Microsoft also released the Maia 100 in 2023, an AI ASIC designed for cloud training and inference. It is Microsoft’s first chip designed for training and running large language models in Microsoft Cloud, and it is built on TSMC’s 5nm process with 105 billion transistors. However, recent reports indicate that Microsoft’s next-generation Maia AI chip has been postponed from 2025 to 2026.

04 Market Size and Growth Rate of AI ASIC Chips

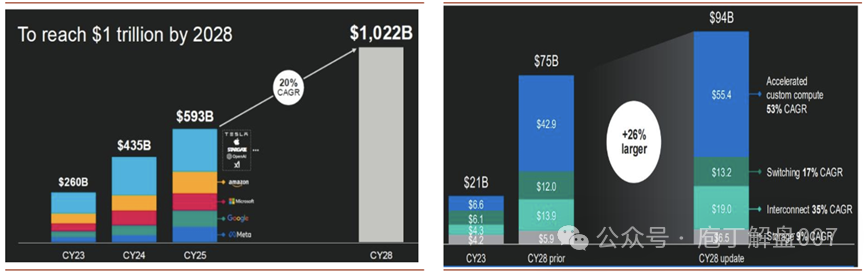

According to Marvell’s latest forecasts, capital expenditure by the four major US cloud service providers (CSPs) is expected to grow at a 46% CAGR from 2023 to 2025, reaching $327 billion in 2025. Including fast-growing demand from companies such as Apple, Tesla, and OpenAI, total data center capex is expected to grow at a 51% CAGR to $593 billion in 2025 and reach $1.022 trillion by 2028.
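Backing out the quoted growth rates shows what these forecasts imply. The sketch below assumes that both the 46% and 51% CAGRs cover 2023-2025, which is two compounding years; the text states this period explicitly only for the 46% figure. On that basis, the implied 2023 bases are about $153 billion for the four CSPs and about $260 billion for all data centers. Going from $593 billion to $1.022 trillion over 2025-2028 implies growth slowing to roughly 20% a year.

```python
"""Back out the implied 2023 bases and the post-2025 growth rate.

Assumption: both quoted CAGRs (46% and 51%) cover 2023-2025, i.e. two
compounding years; the text states this period explicitly only for the 46%.
Values are in $ billions.
"""


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


def implied_start(end: float, rate: float, years: int) -> float:
    """Starting value that grows to `end` at `rate` per year."""
    return end / (1 + rate) ** years


csp_capex_2023 = implied_start(327, 0.46, 2)  # four major US CSPs
dc_capex_2023 = implied_start(593, 0.51, 2)   # total data center capex
dc_cagr_2025_2028 = cagr(593, 1022, 3)        # $593B (2025) -> $1,022B (2028)

if __name__ == "__main__":
    print(f"Implied 2023 CSP capex: ${csp_capex_2023:.0f}B")
    print(f"Implied 2023 data center capex: ${dc_capex_2023:.0f}B")
    print(f"Implied 2025-2028 CAGR: {dc_cagr_2025_2028:.1%}")
```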

Marvell also raised its forecast for the data center market to $94 billion by 2028, up 26% from the $75 billion it projected in April 2024. The revision includes a 37% increase in its forecast for custom compute chips (XPUs and XPU supporting chips). Custom AI chips are the fastest-growing segment. Their market is expected to grow from $6.6 billion in 2023 to $55.4 billion in 2028, a CAGR of 53% and 29% above the previous forecast. Within this total, the XPU market is expected to grow from $6 billion in 2023 to $40.8 billion in 2028, a 47% CAGR. The market for XPU supporting chips (memory interfaces, network interconnects, power management chips, etc.) is expected to grow from $600 million in 2023 to $14.6 billion in 2028, a 90% CAGR.
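The segment figures can be cross-checked against the totals. The sketch below takes the 2028 supporting-chip value as $14.6 billion. With that value, the two segments add up to the $55.4 billion total ($6.0B + $0.6B = $6.6B in 2023), and the CAGRs come out at about 47% for XPUs, about 89% for supporting chips, and about 53% overall. A 2028 value of $1.46 billion would instead imply only about 19.5% a year.

```python
"""Check Marvell's custom AI chip forecast: segment sums and CAGRs.

Values are in $ billions for 2023 and 2028, as quoted. The supporting-chip
2028 value is taken as 14.6: with that value the segments add up to the
$55.4B total and give the stated ~90% CAGR.
"""
import math


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


SEGMENTS = {
    "XPU": (6.0, 40.8),
    "XPU supporting chips": (0.6, 14.6),
}
YEARS = 2028 - 2023

total_2023 = sum(start for start, _ in SEGMENTS.values())
total_2028 = sum(end for _, end in SEGMENTS.values())

# The segments should reproduce the quoted totals of $6.6B and $55.4B.
assert math.isclose(total_2023, 6.6) and math.isclose(total_2028, 55.4)

if __name__ == "__main__":
    for name, (start, end) in SEGMENTS.items():
        print(f"{name}: {cagr(start, end, YEARS):.1%} CAGR")
    print(f"Total: {cagr(total_2023, total_2028, YEARS):.1%} CAGR")
```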

Regarding ASIC pricing, some institutions expect the overall average selling price (ASP) of ASICs to rise 92% in fiscal 2026 (ending October 2026) and a further 25% in fiscal 2027. The main drivers are larger die sizes and newer memory technologies, as ASIC specifications gradually approach those of AI GPUs.
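The two forecast increases compound rather than add. With the fiscal 2025 ASP normalized to 1.0, a 92% rise followed by a 25% rise gives 1.92 x 1.25, or about 2.4 times the starting level. A minimal sketch:

```python
"""Cumulative effect of the two forecast ASP increases.

Growth rates are as quoted (fiscal 2026 and fiscal 2027); the fiscal 2025
ASP is normalized to 1.0.
"""

ASP_GROWTH = {
    "FY2026": 0.92,  # quoted: +92%
    "FY2027": 0.25,  # quoted: +25%
}


def compound_index(growth_rates, base=1.0):
    """Apply successive growth rates to a base value and return the result."""
    level = base
    for rate in growth_rates:
        level *= 1 + rate
    return level


asp_index_fy2027 = compound_index(ASP_GROWTH.values())

if __name__ == "__main__":
    print(f"FY2027 ASP vs FY2025: {asp_index_fy2027:.2f}x")
```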

Figure 2: Capital Expenditure and Market Size Forecast for Data Centers

Source: Guosen Securities

05 Global Supply Pattern of AI ASIC Chips

In the ASIC segment, the two US IC design giants Broadcom and Marvell together hold more than 60% of the market.

(1) Broadcom

Broadcom’s predecessor is Avago, which acquired the former ASIC giant LSI Logic in 2014 and so laid the foundation for its ASIC business. Avago later acquired Broadcom Corporation in 2016 and took the Broadcom name. In 2016, Broadcom designed the first-generation TPU for Google and has since worked with Google on rapid TPU iterations. It later partnered with Meta, Intel, OpenAI, and others, and its number of major customers is expected to rise to 7 by 2027.

Broadcom currently ranks first in the ASIC market with a 55%-60% share. Its core strengths in AI chips are custom ASICs and high-speed data switching chips. Its solutions are widely used in data centers, cloud computing, high-performance computing, and 5G infrastructure.

According to its latest earnings report, Broadcom posted record quarterly revenue of $15.004 billion in the second quarter of fiscal 2025 (ended May 4, 2025), and its AI chip revenue exceeded $4.4 billion. The company expects AI chip revenue to grow to $5.1 billion in the third quarter, driven mainly by ASICs.
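Two simple ratios put these results in context. Using $4.4 billion as the AI figure (the text says it was exceeded), AI chips made up roughly 29% of quarterly revenue. The $5.1 billion guidance implies about 16% sequential growth in AI revenue. A short sketch:

```python
"""Simple ratios from Broadcom's fiscal Q2 2025 figures quoted above ($ billions)."""

Q2_TOTAL_REVENUE = 15.004
Q2_AI_REVENUE = 4.4        # quoted as "exceeding $4.4 billion"; 4.4 is used here
Q3_AI_REVENUE_GUIDE = 5.1  # company guidance for Q3 AI revenue

ai_share_q2 = Q2_AI_REVENUE / Q2_TOTAL_REVENUE        # AI share of Q2 revenue
ai_qoq_growth = Q3_AI_REVENUE_GUIDE / Q2_AI_REVENUE - 1  # implied Q2 -> Q3 growth

if __name__ == "__main__":
    print(f"AI share of Q2 revenue: {ai_share_q2:.1%}")
    print(f"Implied Q3 AI revenue growth: {ai_qoq_growth:.1%}")
```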

(2) Marvell

Marvell has also expanded its ASIC R&D capabilities through acquisitions. In 2019, it acquired Avera Semi, the former ASIC business of GlobalFoundries, whose clients included Amazon, Intel, and Microsoft.

Marvell’s current ASIC revenue is estimated to come mainly from Amazon’s Trainium 2 and Google’s Axion Arm CPU. Its Inferentia ASIC project with Amazon is expected to enter mass production in 2025 (Marvell’s fiscal 2026). Its Microsoft Maia project is expected to launch in 2026 (fiscal 2027).

According to Marvell, the company has won 18 custom chip projects and has more than 50 further opportunities under discussion. Its share of the market for custom compute and supporting components (custom XPUs and supporting components) was below 5% in 2023. That share is expected to reach 13% in 2024 and rise to 20% by 2028.

Among domestic companies, major internet firms such as Alibaba, Baidu, and Tencent are also actively developing ASIC chips. For example, Alibaba’s T-Head launched the Hanguang 800 AI chip, Baidu has its Kunlun series of AI chips, and Tencent has developed dedicated chips for AI inference, video transcoding, and smart network cards. ByteDance has also reportedly partnered with Broadcom to develop an AI chip on a 5nm process. In addition, Huawei’s Ascend 910 series and Cambricon’s SiYuan series fall into the ASIC category and are currently the most mainstream AI chips in China.

06 AI ASIC Chip-Related Companies

Based on our review, the following A-share companies are involved in AI ASIC chips:

——Chipone Technology:

The company is a leading provider of SoC and ASIC design services in China, with integrated, platform-based custom chip design capabilities. It continuously supplies custom chip solutions to giants such as ByteDance, Alibaba, and Baidu. Its 2024 ASIC revenue is expected to be about 725 million yuan, up 47.18% year on year and accounting for more than 30% of total revenue. The company has multiple processor IPs, including GPU, NPU, and VPU, plus more than 1,600 mixed-signal IPs. It is investing heavily in AI and chiplet technology and is expected to become a leader in China’s ASIC industry.

——Aojie Technology:

The company is actively building out a custom ASIC chip business, and its chip customization and semiconductor IP licensing businesses are growing rapidly. Its 2024 chip customization revenue is expected to be 336 million yuan, up 48.7% year on year and accounting for nearly 10% of total revenue.

——Huidian Technology:

Because a single ASIC delivers less performance than a GPU, ASIC servers require more frequent data transmission and place higher demands on thermal management. Their PCB specifications are therefore higher than those of GPU platforms, which significantly increases PCB value. The company supplies ASIC chip substrates directly to Google and Meta, positioning it to benefit most from the growth of the ASIC industry.

——Cambricon:

The company is one of the few cloud AI chip makers in China. It offers a range of AI chip products and platform-based system software spanning cloud, edge, and device, with both training and inference capabilities. Its cloud product line mainly provides cloud AI chips, accelerator cards, and training systems for model training and inference, and it has now reached the SiYuan 590 series.

——Canxin Technology:

The company is a one-stop supplier of custom chips and IP, with extensive experience in ASIC chip design and customization.

——Xingsen Technology:

The company’s FCBGA packaging substrates can be used to package high-end chips such as CPUs, GPUs, FPGAs, and ASICs.

——Placo New Materials:

The company’s chip inductors can be used in the front-end power supply of ASIC chips and offer miniaturization and high current capacity.
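The revenue estimates for Chipone and Aojie above also imply a few other figures. The illustrative sketch below derives them. Chipone’s 725 million yuan at +47.18% implies about 493 million yuan in 2023. Because this exceeds 30% of revenue, Chipone’s total 2024 revenue is below about 2.42 billion yuan. Aojie’s 336 million yuan at +48.7% implies about 226 million yuan in 2023. Because this is nearly (just under) 10% of revenue, Aojie’s total is above about 3.36 billion yuan.

```python
"""Implied figures behind the Chipone and Aojie revenue estimates (million yuan).

Inputs are the 2024 estimates quoted in the text. "Over 30%" of revenue
caps Chipone's total; "nearly 10%" sets a floor under Aojie's total.
"""


def prior_year(current: float, yoy_growth: float) -> float:
    """Prior-year value implied by a current value and its YoY growth rate."""
    return current / (1 + yoy_growth)


def total_from_share(segment: float, share: float) -> float:
    """Total revenue implied by a segment and its share of the total."""
    return segment / share


chipone_asic_2023 = prior_year(725, 0.4718)
chipone_total_ceiling = total_from_share(725, 0.30)  # share > 30% => total below this
aojie_custom_2023 = prior_year(336, 0.487)
aojie_total_floor = total_from_share(336, 0.10)      # share < 10% => total above this

if __name__ == "__main__":
    print(f"Chipone ASIC revenue, 2023: ~{chipone_asic_2023:.0f}")
    print(f"Chipone total revenue, 2024: < {chipone_total_ceiling:.0f}")
    print(f"Aojie customization revenue, 2023: ~{aojie_custom_2023:.0f}")
    print(f"Aojie total revenue, 2024: > {aojie_total_floor:.0f}")
```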

Disclaimer: This article is for personal learning purposes only and does not constitute investment advice. Much of the information quoted here is compiled from publicly available online sources. All judgments are based on personal subjective assumptions, so errors and omissions are inevitable. Please act at your own risk. The stock market carries risks; invest with caution!