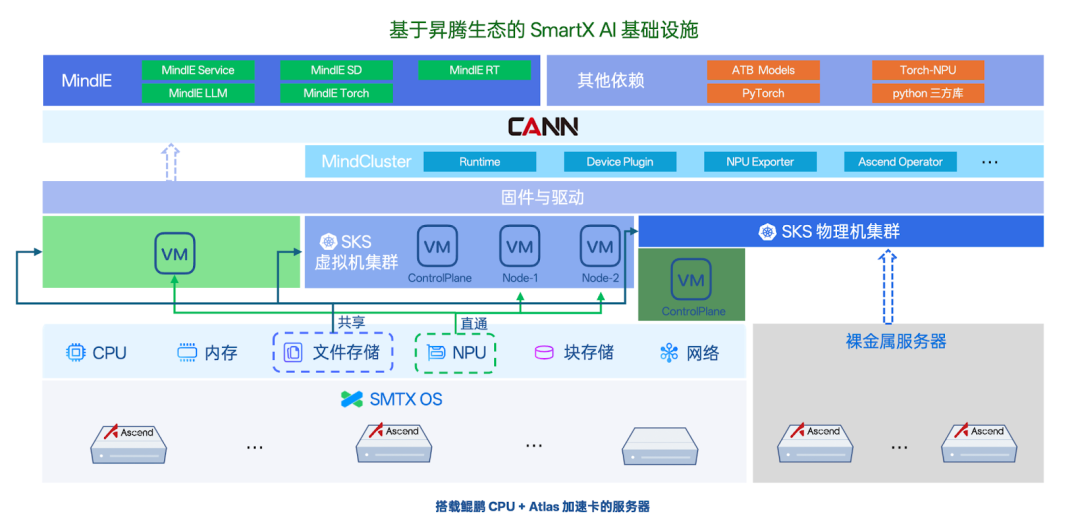

Recently, SmartX launched a DeepSeek solution built on its AI infrastructure. It provides a single infrastructure resource stack for both large AI models and other enterprise business systems, helping enterprises move large models through implementation and validation quickly. Users in industries such as finance and healthcare also have data compliance and domestic-substitution requirements, so they need to test and use large AI models in an independently controllable environment. To address this, SmartX AI infrastructure now supports the Ascend NPU and the MindIE platform, and we have evaluated performance in both hyper-converged (virtual machine) and SMTX Kubernetes Service (SKS) environments.

Download the eBook “Building Enterprise AI Infrastructure: Technical Trends, Product Solutions, and Testing Validation” to learn more about enterprise deployment validation and application practices in AI large models.


Using Ascend NPU and MindIE platform in SmartX AI infrastructure

SmartX AI infrastructure is now fully compatible with the Ascend ecosystem. Users can deploy and use the Ascend NPU and the MindIE platform on virtual machines, virtualized Kubernetes, or bare-metal Kubernetes, and quickly build an AI infrastructure that uses domestic hardware and software across the full stack.

Solution Features

- High-performance heterogeneous computing: Supports both general-purpose computing and AI-specific workloads in one heterogeneous resource pool.
- Flexible deployment options: Supports deployment on virtual machines, virtualized Kubernetes, and bare-metal Kubernetes. The bare-metal Kubernetes option avoids virtualization overhead to deliver the best performance.
- Efficient resource utilization: DRS and virtual machine placement groups enable dynamic allocation and horizontal scaling of virtual machine resources, adapting to fluctuating business loads and improving resource utilization.
- Unified management and simplified operations: A single control plane manages virtual machines, containers, and physical nodes, simplifying day-to-day operations.
- Full-stack domestic support: From the CPU to the Ascend NPU, and from SmartX AI infrastructure to the MindIE inference framework, hardware and software are adapted to domestic platforms across the full stack, fully meeting Xinchuang (IT application innovation) requirements.

Deployment Solutions

Deployment based on virtual machines

In the SmartX hyper-converged virtual machine environment, users can quickly deploy Ascend NPU and MindIE by passing Ascend inference hardware through to a virtual machine and running MindIE from its container image. This builds an AI inference framework based on the Ascend ecosystem for running large language models such as DeepSeek. The brief steps are as follows:

1. Infrastructure preparation:

- Ascend inference hardware passthrough: Use SmartX hyper-convergence to pass Atlas 300I Pro / Atlas 300I Duo cards through so that virtual machines can use them directly.
- Install the Ascend driver: Install the Ascend driver package in the virtual machine to provide hardware support.
- Install Docker: Install the Docker runtime in the virtual machine to provide the runtime environment for model inference.

2. Inference framework installation:

-

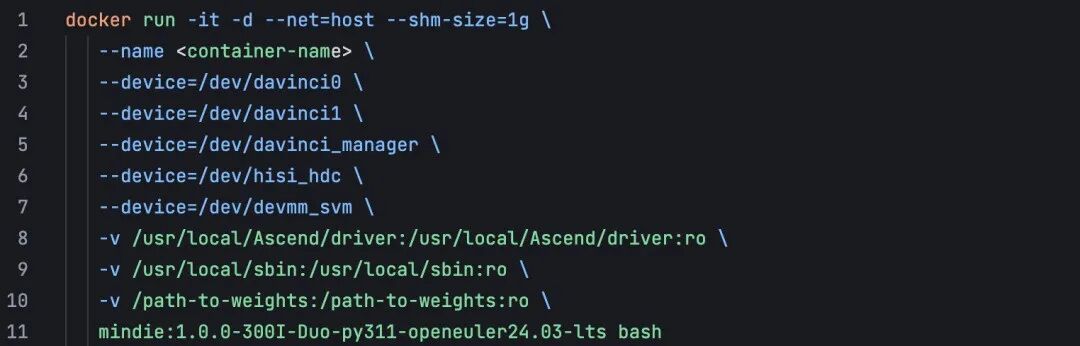

Pull MindIE image: The Ascend image repository provides the MindIE base image, and users can pull the corresponding image file based on their hardware. This image has the basic environment required for model operation, including: CANN, FrameworkPTAdapter, MindIE, and ATB-Models, enabling quick inference of models.

-

Start MindIE image: Users can start the MindIE runtime environment based on their hardware and model files.

3. Model inference startup:

- Configure model inference: Set the model's maximum input and output lengths as needed. For example, for a maximum input of 1024 tokens and a maximum output of 4096 tokens:
  - maxIterTimes = 4096
  - maxInputTokenLen = 1024
  - maxSeqLen = maxIterTimes + maxInputTokenLen = 5120
- Start the inference model: Enter the container and run the MindIE startup command to serve large language models such as DeepSeek.
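The length settings above follow one rule: a sequence must hold the full prompt plus the full generation, so maxSeqLen is the sum of the input and output budgets. The sketch below derives the three values and writes them into a config dictionary. The section names it writes to (BackendConfig.ModelDeployConfig and BackendConfig.ScheduleConfig) are an assumption about the MindIE config.json layout; check them against the config.json shipped in your own image before relying on this.

```python
import json

# Minimal sketch: derive MindIE length limits from input/output token budgets.
# ASSUMPTION: these nested key locations mirror a typical MindIE config.json;
# verify them against the file in your own MindIE image.
KEY_PATHS = {
    "maxSeqLen": ("BackendConfig", "ModelDeployConfig"),
    "maxInputTokenLen": ("BackendConfig", "ModelDeployConfig"),
    "maxIterTimes": ("BackendConfig", "ScheduleConfig"),
}


def seq_len_budget(max_input_tokens: int, max_output_tokens: int) -> dict:
    """Return the three length parameters for the given token budgets."""
    if max_input_tokens <= 0 or max_output_tokens <= 0:
        raise ValueError("token budgets must be positive integers")
    return {
        "maxIterTimes": max_output_tokens,     # upper bound on generated tokens
        "maxInputTokenLen": max_input_tokens,  # upper bound on prompt tokens
        # A sequence must fit the whole prompt plus the whole generation.
        "maxSeqLen": max_input_tokens + max_output_tokens,
    }


def apply_budget(config: dict, budget: dict) -> dict:
    """Write each parameter into its (assumed) section, creating missing sections."""
    for key, path in KEY_PATHS.items():
        node = config
        for section in path:
            node = node.setdefault(section, {})
        node[key] = budget[key]
    return config


if __name__ == "__main__":
    budget = seq_len_budget(max_input_tokens=1024, max_output_tokens=4096)
    print(json.dumps(apply_budget({}, budget), indent=2))
```

In practice you would load the existing config.json, call apply_budget on it, and write it back, so that all other settings in the file are preserved.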

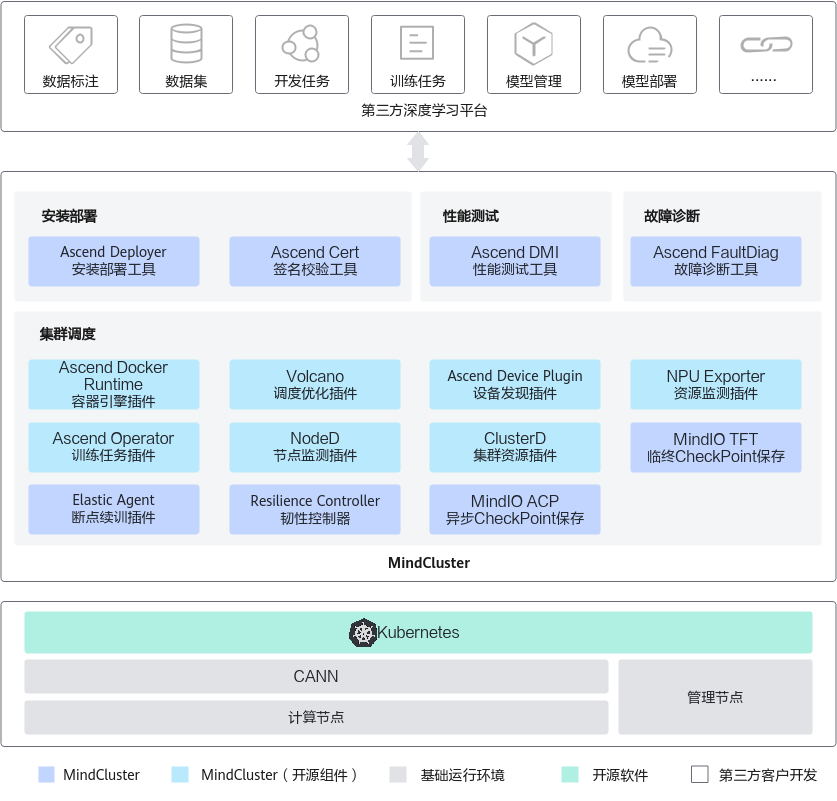
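For the virtual machine steps above (hardware passthrough, driver installation, and starting the MindIE container), a quick sanity check is whether the passed-through NPUs show up as device nodes inside the VM, and a container then needs those nodes mapped in. The sketch below lists /dev/davinciN nodes and assembles a docker run command from them. The control device names (davinci_manager, devmm_svm, hisi_hdc) and driver mount paths follow common Ascend conventions but are assumptions here; the image name and model directory are hypothetical placeholders. Follow the official MindIE container instructions for the authoritative command.

```python
import glob
import os
import re
import shlex
import sys

# ASSUMPTION: typical Ascend control devices and host driver paths; adjust to your install.
CONTROL_DEVICES = ["/dev/davinci_manager", "/dev/devmm_svm", "/dev/hisi_hdc"]
DRIVER_MOUNTS = ["/usr/local/Ascend/driver", "/usr/local/dcmi", "/usr/local/bin/npu-smi"]


def list_npu_indices(dev_dir: str = "/dev") -> list[int]:
    """Return sorted N for every <dev_dir>/davinciN node (davinci_manager is skipped)."""
    indices = []
    for path in glob.glob(os.path.join(dev_dir, "davinci*")):
        match = re.fullmatch(r"davinci(\d+)", os.path.basename(path))
        if match:
            indices.append(int(match.group(1)))
    return sorted(indices)


def build_run_command(image: str, model_dir: str, npu_indices: list[int]) -> list[str]:
    """Assemble a detached docker run command exposing the given NPUs to the container."""
    if not npu_indices:
        raise RuntimeError("no /dev/davinciN nodes found; check passthrough and driver")
    cmd = ["docker", "run", "-it", "-d", "--net=host", "--name", "mindie"]
    cmd += [f"--device=/dev/davinci{i}" for i in npu_indices]
    cmd += [f"--device={dev}" for dev in CONTROL_DEVICES]
    for mount in DRIVER_MOUNTS:
        cmd += ["-v", f"{mount}:{mount}:ro"]  # reuse the host driver read-only
    cmd += ["-v", f"{model_dir}:{model_dir}:ro", image, "bash"]
    return cmd


if __name__ == "__main__":
    found = list_npu_indices()
    if not found:
        sys.exit("no NPU device nodes visible in this VM")
    # "mindie:placeholder-tag" and "/data/models" are hypothetical placeholders.
    print(shlex.join(build_run_command("mindie:placeholder-tag", "/data/models", found)))
```

Printing the command rather than executing it keeps the sketch side-effect free, so you can review the device list before starting the container.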

Deployment based on SKS

With SKS, users can deploy Ascend NPU and MindIE either in a Kubernetes cluster running on SmartX hyper-converged virtual machines (using Ascend inference hardware passthrough) or in a bare-metal Kubernetes cluster (using bare-metal management services). Combined with the MindCluster cluster scheduling component, this quickly builds a cloud-native AI inference framework based on the Ascend ecosystem for running large language models such as DeepSeek. The brief steps are as follows:

1. Infrastructure preparation:

- Create an Ascend inference workload cluster: Users can quickly create an inference workload cluster with Ascend NPUs in SKS.
- Install the Ascend driver: Install the Ascend driver package on the worker nodes of the SKS workload cluster to provide hardware support.

2. Deployment of the MindCluster cluster scheduling component:

- Pull the MindCluster component images: The Ascend image repository provides images for the MindCluster components, and users pull the images for the components they plan to install.
- Deploy the MindCluster components: The MindCluster package provided by Ascend includes required and optional modules, and users choose which to install based on actual business needs.

3. Model inference startup:

- Build the MindIE MS image: Users build a MindIE MS image for their Ascend hardware by following the guidance on the Ascend official website.
- Configure the MindIE MS deployment script: Ascend provides a MindIE MS deployment script in which users set the NPU type, NPU quantity, model files, model parameters, and so on.
- Start the inference model: Running the deployment script automatically delivers the service configuration and startup scripts, generates a ranktable describing the devices on each node, and schedules Pods onto compute nodes to run large language models such as DeepSeek.
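Whatever the MindIE MS deployment script generates, the scheduling step above ultimately comes down to Pods that request NPUs as a Kubernetes extended resource, which the Ascend device plugin shipped with MindCluster advertises on each worker node. The hypothetical sketch below builds such a Pod manifest as a Python dictionary. The resource name "huawei.com/Ascend310P" (for Atlas 300I Pro/Duo class cards), the image, the hostPath, and all object names are assumptions for illustration, not the script's actual output.

```python
import json


def mindie_pod(name: str, image: str, npu_count: int, model_host_path: str,
               npu_resource: str = "huawei.com/Ascend310P") -> dict:
    """Build a minimal Pod that asks the device plugin for `npu_count` NPUs.

    ASSUMPTION: `npu_resource` is the extended resource name advertised by the
    Ascend device plugin in your cluster; check `kubectl describe node`.
    """
    if npu_count < 1:
        raise ValueError("npu_count must be at least 1")
    npus = str(npu_count)
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "mindie"}},
        "spec": {
            "containers": [{
                "name": "mindie",
                "image": image,
                # Placeholder that keeps the container alive; replace with the
                # MindIE service start command.
                "command": ["sleep", "infinity"],
                "resources": {
                    # Extended resources cannot be overcommitted, so request == limit.
                    "requests": {npu_resource: npus},
                    "limits": {npu_resource: npus},
                },
                "volumeMounts": [{"name": "models", "mountPath": "/models", "readOnly": True}],
            }],
            "volumes": [{"name": "models", "hostPath": {"path": model_host_path}}],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests, e.g. `python pod.py | kubectl apply -f -`.
    pod = mindie_pod("deepseek-r1-32b", "mindie:placeholder-tag", 2, "/data/models")
    print(json.dumps(pod, indent=2))
```

The scheduler places such a Pod only on a node with enough free NPUs of that resource type, which is the behavior the deployment script relies on when it "schedules Pods to compute nodes".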

Deployment Validation – Integrating with Open WebUI

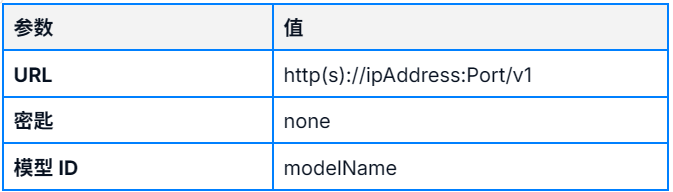

MindIE supports several RESTful API styles, including an OpenAI-compatible interface that users can use to integrate with Open WebUI. The integration requires the IP address, port, and model name of the MindIE service endpoint, which are defined in the config.json file as “ipAddress”, “port”, and “modelName”, respectively.
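As a sketch of that integration, the snippet below derives the OpenAI-compatible base URL that Open WebUI needs from config.json and sends one chat request to confirm the endpoint answers. The locations of the three fields (ipAddress and port under ServerConfig, modelName under BackendConfig.ModelDeployConfig.ModelConfig[0]) and the use of plain HTTP without TLS are assumptions; the standard OpenAI /v1/chat/completions route is assumed to be the one MindIE exposes.

```python
import json
import sys
import urllib.request


def endpoint_from_config(cfg: dict) -> tuple[str, str]:
    """Return (base_url, model_name) from a MindIE config dict (assumed layout)."""
    server = cfg["ServerConfig"]
    model = cfg["BackendConfig"]["ModelDeployConfig"]["ModelConfig"][0]["modelName"]
    return f"http://{server['ipAddress']}:{server['port']}/v1", model


def chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (not sent here)."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_mindie.py /path/to/config.json
    with open(sys.argv[1], encoding="utf-8") as f:
        base_url, model = endpoint_from_config(json.load(f))
    print("Open WebUI base URL:", base_url, "| model:", model)
    with urllib.request.urlopen(chat_request(base_url, model, "Hello"), timeout=120) as resp:
        print(json.load(resp)["choices"][0]["message"]["content"])
```

The printed base URL and model name are what you would enter in Open WebUI's OpenAI API connection settings.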

The effect of the integrated DeepSeek-R1 model through Open WebUI is as follows:

Performance of Ascend NPU deployed on SmartX AI infrastructure

Environment Configuration

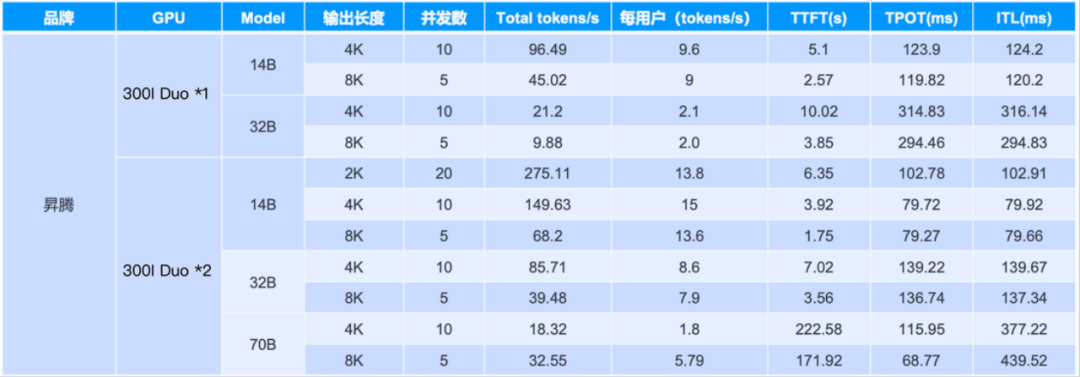

We built a 3-node SmartX HCI hyper-converged cluster, set up the MindIE inference platform on Atlas 300I Duo inference cards in a virtual machine environment, and deployed the DeepSeek-R1-14B, 32B, and 70B models for performance testing.

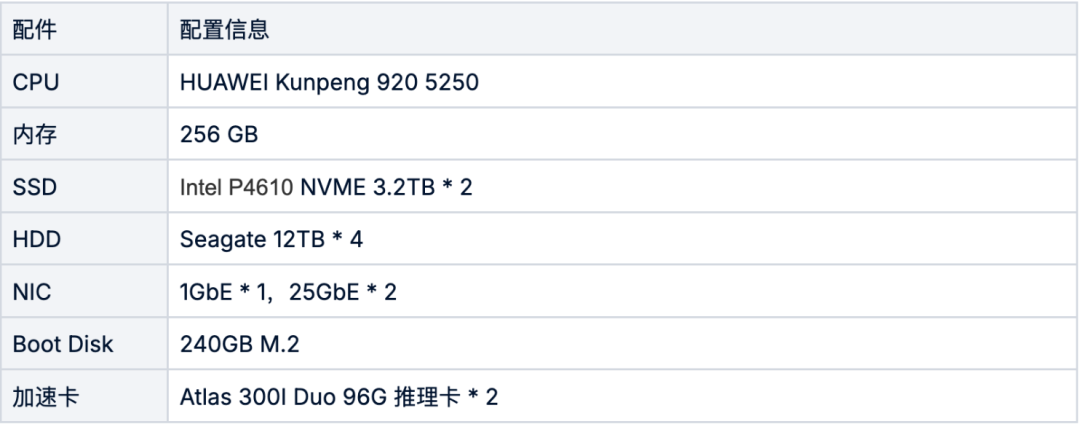

SMTX OS cluster hardware configuration (per node)

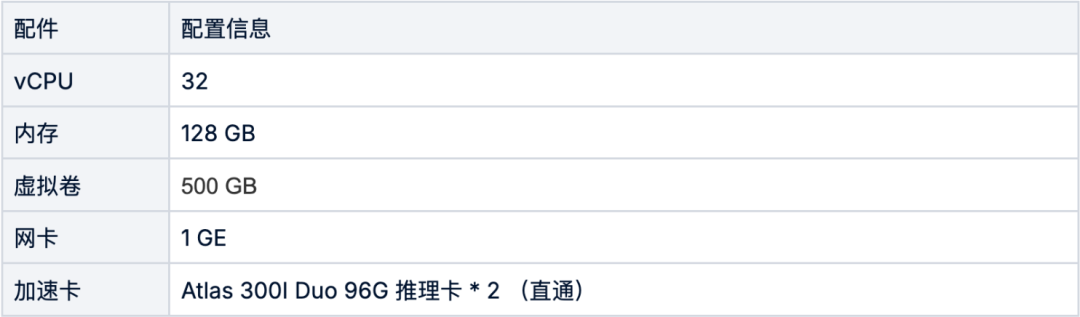

MindIE virtual machine configuration

Test Results

The performance data above is for reference only. Based on these results, we recommend:

- DeepSeek-R1-32B requires 2 Atlas 300I Duo inference cards. For higher concurrency, configure 4 cards.
- DeepSeek-R1-70B requires 4 Atlas 300I Duo inference cards. For higher concurrency, configure 8 cards.
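The recommendations above come from the measured tests. For a rough first check on other model sizes, the weights alone set a lower bound: at 2 bytes per parameter (FP16/BF16, unquantized), a 32B model needs about 64 GB and a 70B model about 140 GB just for weights. The sketch below turns that into a minimum card count. The bytes per parameter, the per-card memory (you must supply it from your hardware spec), and the reserved fraction are all assumptions, and the KV cache that grows with concurrent requests is not modeled, which is why real deployments, especially high-concurrency ones, need more cards than this bound.

```python
import math


def weight_memory_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes); 2 bytes/param assumes FP16/BF16."""
    return params_billion * bytes_per_param


def min_cards(params_billion: float, card_memory_gb: float,
              reserve_fraction: float = 0.2, bytes_per_param: float = 2.0) -> int:
    """Lower bound on cards needed to hold the weights only (KV cache not included).

    `reserve_fraction` is a hypothetical headroom share kept free for runtime buffers.
    """
    if card_memory_gb <= 0:
        raise ValueError("card_memory_gb must be positive")
    if not 0 <= reserve_fraction < 1:
        raise ValueError("reserve_fraction must be in [0, 1)")
    usable_gb = card_memory_gb * (1 - reserve_fraction)
    return math.ceil(weight_memory_gb(params_billion, bytes_per_param) / usable_gb)
```

Treat the result as a floor for capacity planning, not a replacement for load testing on the target hardware.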



Reference Documents:

1. Ascend Open Docker Image Repository, Providing Ascend Software Docker Images. https://www.hiascend.com/developer/ascendhub
2. mind-cluster Release – Gitee.com. https://gitee.com/ascend/mind-cluster/releases
3. Creating MindIE Images – Containerized Installation and Image Creation – Installing MindIE – MindIE Installation Guide – Environment Preparation – MindIE 1.0.0 Development Documentation – Ascend Community. https://www.hiascend.com/document/detail/zh/mindie/100/envdeployment/instg/mindie_instg_0023.html#ZH-CN_TOPIC_0000002186809165__section21214544562