MAS-GPT introduces a generative design paradigm for Multi-Agent Systems (MAS): from a single query, it automatically generates a complete, executable, and well-organized MAS. This greatly simplifies building MAS, making it as easy as chatting with ChatGPT. This work was presented at the International Conference on Machine Learning (ICML) 2025.

Generative Multi-Agent Systems

Introduction

OpenAI has named “Organizational AI” as the fifth key stage on the path to AGI, in which AI handles complex tasks and coordinates large-scale operations like an efficiently collaborating organization. Multi-Agent Systems (MAS) are an important direction toward this goal. However, existing methods suffer from poor adaptability, high cost, and weak generalization, which severely limit the widespread application of MAS.

MAS-GPT, developed jointly by the School of Artificial Intelligence at Shanghai Jiao Tong University, Shanghai Artificial Intelligence Laboratory, and the University of Oxford, proposes a generative MAS design paradigm that can “one-click generate” a complete, executable, and well-organized MAS from just a single query!

Innovations of MAS-GPT

The core innovation of MAS-GPT is to recast the task of “designing a MAS” as a language generation task. The user inputs a single query, and MAS-GPT outputs a complete multi-agent system that can be executed directly. The generated MAS is expressed entirely in Python code:

- Agent prompts: Python variables, clear and concise
- Agent responses: LLM function calls, the intelligent core
- Agent interaction: string concatenation, simple and efficient
- Agent tool invocation: Python functions, freely extensible
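To make these four building blocks concrete, here is a minimal, hypothetical sketch of what a generated MAS might look like in Python. It is not actual MAS-GPT output: the prompt texts, the `forward` entry point, the `call_llm` parameter, and the `extract_last_number` tool are all illustrative assumptions, and a stub function stands in for a real LLM so the sketch runs offline.

```python
import re
from typing import Callable, Optional

# Hypothetical LLM interface: takes a prompt string and returns the model's reply.
LLM = Callable[[str], str]

# Agent prompts: plain Python variables.
SOLVER_PROMPT = "Solve the problem step by step and end with the final answer.\n"
JUDGE_PROMPT = "Compare the candidate solutions and state the single best final answer.\n"


def extract_last_number(text: str) -> Optional[str]:
    """Agent tool: an ordinary Python function that returns the last number in `text`."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text)
    return matches[-1] if matches else None


def forward(query: str, call_llm: LLM, n_solvers: int = 3) -> str:
    """One illustrative MAS: several solver agents followed by a judge agent."""
    # Agent responses: each agent's turn is a single LLM call.
    solutions = [call_llm(SOLVER_PROMPT + "Problem: " + query) for _ in range(n_solvers)]
    # Agent interaction: earlier outputs are passed on by string concatenation.
    transcript = "".join(f"\nSolution {i + 1}: {s}" for i, s in enumerate(solutions))
    verdict = call_llm(JUDGE_PROMPT + "Problem: " + query + transcript)
    # Tool invocation: post-process the judge's message with a Python function.
    answer = extract_last_number(verdict)
    return answer if answer is not None else verdict


if __name__ == "__main__":
    # Stub model so the sketch runs offline; a real system would call an actual LLM here.
    def fake_llm(prompt: str) -> str:
        return "The answer is 42"

    print(forward("What is 6 * 7?", fake_llm))  # → 42
```

Because the whole system is ordinary Python, swapping the stub for a real model client, adding agents, or adding tools only means editing this function, which is the property that lets MAS design be treated as code generation.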

MAS are no longer written by hand; they are generated by the model!

Training Method

MAS-GPT is not trained by rote memorization. Instead, a carefully designed data construction pipeline teaches the model “what kind of MAS to design for what kind of query.” The steps are as follows:

- Data pool construction: collect queries from a wide range of domains such as mathematics, coding, and general Q&A, and compile more than 40 basic MAS code structures.
- Data pair evaluation: automatically evaluate and annotate every “Query-MAS” combination in detail.
- Data pair selection: following the principle of inter-consistency, match similar queries to the best-performing MAS.
- Data pair refinement: following the principle of intra-consistency, use large models to rewrite each MAS and add reasoning explanations so that it aligns closely with the query's logic.
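The selection step can be sketched as follows, under loudly stated assumptions: evaluation has produced a score for every query-MAS pair, similar queries have already been grouped (for example by clustering query embeddings), and “best-performing” means the highest mean score within a group. The group labels, the MAS names `cot` and `debate`, and the function `select_pairs` are hypothetical, and the paper's actual selection criterion may differ.

```python
from collections import defaultdict
from statistics import mean


def select_pairs(
    scores: dict[str, dict[str, float]],
    group_of: dict[str, str],
) -> dict[str, str]:
    """Assign every query the MAS with the best mean score over its group of similar queries.

    scores[query][mas] is an evaluation score (e.g. accuracy) of running `mas` on `query`;
    group_of[query] is a hypothetical similarity-group label (e.g. from embedding clustering).
    """
    # Gather, for each group, the scores every MAS obtained on that group's queries.
    group_scores: dict[str, dict[str, list[float]]] = defaultdict(lambda: defaultdict(list))
    for query, per_mas in scores.items():
        for mas, score in per_mas.items():
            group_scores[group_of[query]][mas].append(score)

    # Pick the MAS with the highest mean score within each group.
    best_for_group = {
        group: max(per_mas, key=lambda mas: mean(per_mas[mas]))
        for group, per_mas in group_scores.items()
    }
    # Similar queries (same group) are all paired with the same MAS.
    return {query: best_for_group[group_of[query]] for query in scores}


scores = {
    "q1": {"cot": 1.0, "debate": 0.0},
    "q2": {"cot": 0.6, "debate": 0.8},
    "q3": {"cot": 0.2, "debate": 0.9},
}
group_of = {"q1": "algebra", "q2": "algebra", "q3": "coding"}
print(select_pairs(scores, group_of))
# → {'q1': 'cot', 'q2': 'cot', 'q3': 'debate'}
```

On its own, `q2` scores higher with `debate`, yet it is paired with `cot` because that MAS has the best mean (0.8 vs 0.4) across its group. Giving similar queries the same MAS is one plausible reading of inter-consistency: the model sees a learnable query-to-MAS mapping rather than noisy per-query choices.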

This pipeline yields 11K high-quality samples, and MAS-GPT is then trained by simple supervised fine-tuning (SFT) of an open-source model on them.
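As a sketch of what one training sample might look like, the function below packs a refined pair into a chat-style JSON line, with the query as the input and the reasoning explanation plus MAS code as the target. The `messages`/`role`/`content` layout is a common fine-tuning convention assumed here for illustration; the source does not specify MAS-GPT's actual data format, and `to_sft_record` is a hypothetical helper.

```python
import json


def to_sft_record(query: str, reasoning: str, mas_code: str) -> str:
    """Serialize one refined query-MAS pair as a chat-style SFT example (one JSON line).

    The "messages"/"role"/"content" layout is an assumed, commonly used convention,
    not necessarily the format used to train MAS-GPT.
    """
    record = {
        "messages": [
            # Input: the user's query.
            {"role": "user", "content": query},
            # Target: the reasoning explanation followed by the executable MAS code.
            {"role": "assistant", "content": reasoning + "\n\n" + mas_code},
        ]
    }
    # json.dumps escapes newlines, so each record fits on a single line (JSONL-friendly).
    return json.dumps(record, ensure_ascii=False)


line = to_sft_record(
    "What is 6 * 7?",
    "A single arithmetic step needs only one solver agent.",
    "def forward(query, call_llm):\n    return call_llm(query)",
)
print(json.loads(line)["messages"][1]["role"])  # → assistant
```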

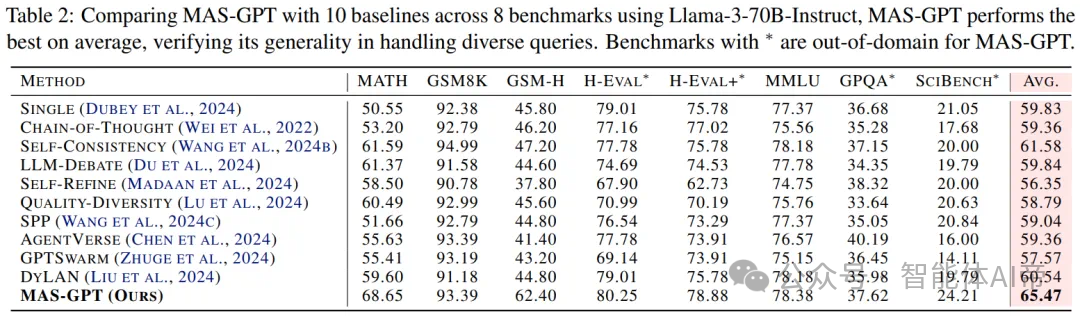

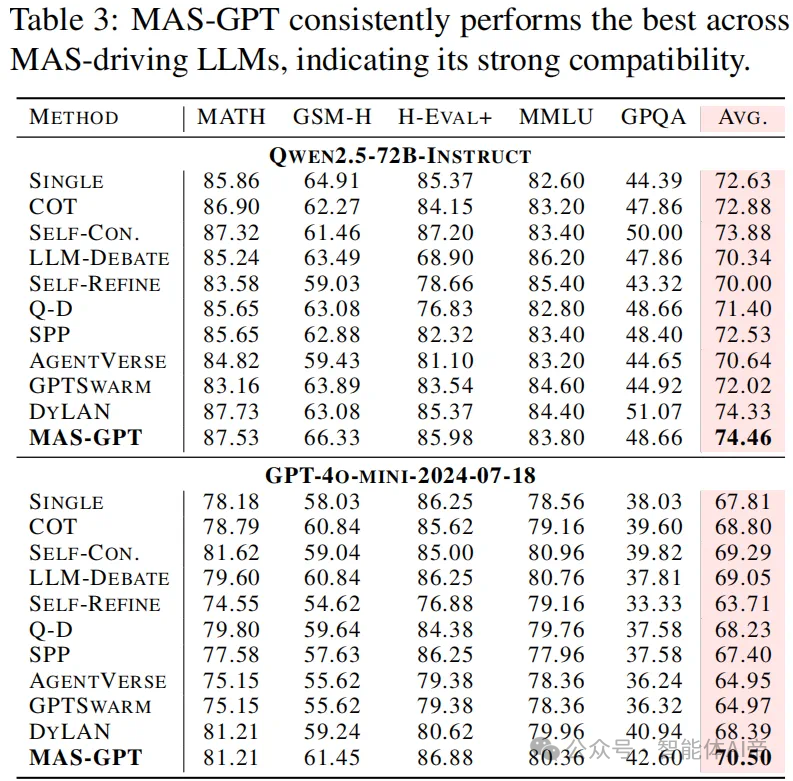

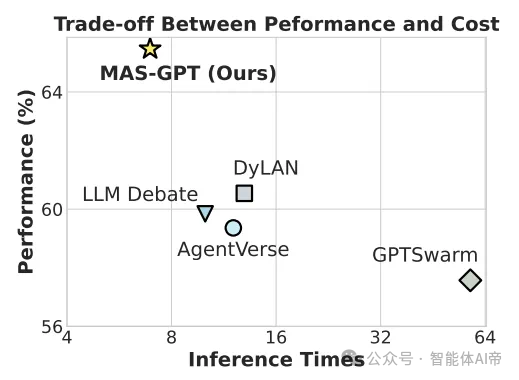

Experimental Results

The research team systematically compared MAS-GPT with more than 10 existing methods across 8 benchmarks and 5 mainstream LLMs. The results show that MAS-GPT:

- Is more accurate: it leads in average accuracy, improving on the strongest existing baseline by 3.89%!
- Generalizes better: it remains robust on tasks not seen during training, such as GPQA and SciBench.
- Is more cost-effective: at inference time, it outperforms DyLAN, GPTSwarm, and similar methods at nearly half their inference cost!
- Is highly compatible: the MAS it generates delivers consistent performance gains regardless of which LLM drives it!

Extensibility

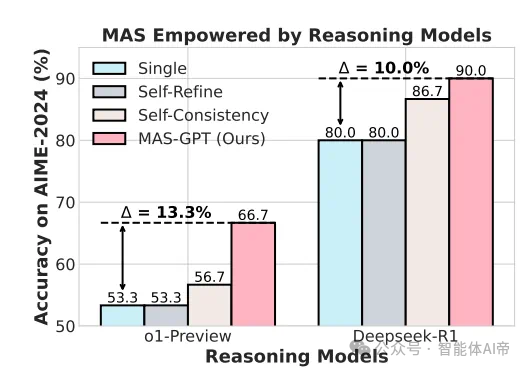

The MAS generated by MAS-GPT are not limited to chatbot LLMs; they can also help stronger reasoner LLMs reason. When strong reasoning models such as OpenAI o1 and DeepSeek-R1 are combined with the MAS-GPT structure, the results on the AIME-2024 math benchmark are:

- o1 + MAS-GPT: improved by 13.3%
- DeepSeek-R1 + MAS-GPT: improved by 10.0%

MAS-GPT truly has the ability to “organize strong models to work together”!
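One way to read these numbers: the commonly used AIME 2024 set has 30 problems (15 each from AIME I and AIME II), so a single problem is worth about 3.33 percentage points. Assuming the reported figures are absolute percentage-point gains on the full set (the text does not state this), they correspond to 4 and 3 additional problems solved. The constant and the helper `problems_gained` below are hypothetical illustrations of that arithmetic.

```python
AIME_2024_PROBLEMS = 30  # assumed: AIME 2024 I and II, 15 problems each


def problems_gained(gain_points: float, n_problems: int = AIME_2024_PROBLEMS) -> int:
    """Convert an absolute accuracy gain (percentage points) into a count of extra problems solved."""
    return round(gain_points / 100 * n_problems)


# Each problem is worth 100 / 30, about 3.33 points, so gains move in steps of about 3.3 points.
for model, gain in [("o1", 13.3), ("DeepSeek-R1", 10.0)]:
    print(model, problems_gained(gain))
# → o1 4
# → DeepSeek-R1 3
```

That both gains are close to multiples of 3.33 is consistent with this reading, but it remains an inference rather than something the source confirms.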

- MASWorks: https://github.com/MASWorks
- MAS-2025: https://mas-2025.github.io/MAS-2025/