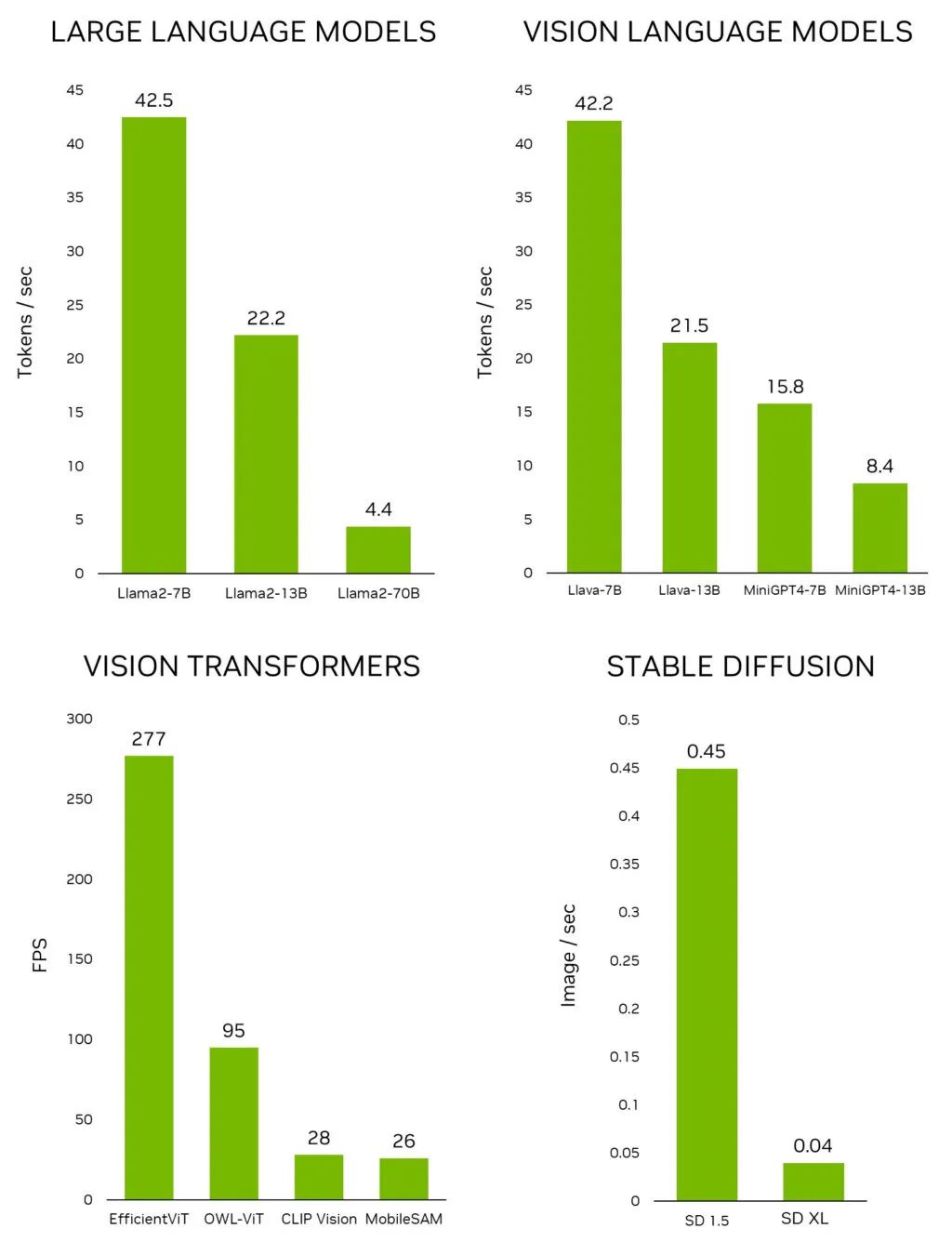

Recently, NVIDIA launched the Jetson Generative AI Lab, enabling developers to explore the endless possibilities of generative AI in the real world through NVIDIA Jetson edge devices. Unlike other embedded platforms, Jetson can run large language models (LLMs), vision transformers, and Stable Diffusion locally, including the Llama-2-70B model running interactively on Jetson AGX Orin.

Figure 1. Inference performance of leading generative AI models on Jetson AGX Orin

To quickly test the latest models and applications on Jetson, use the tutorials and resources provided by the Jetson Generative AI Lab. You can then focus on uncovering the untapped potential of generative AI in the physical world.

This article will explore the exciting generative AI applications that can be run and experienced on Jetson devices, all of which are also explained in the lab’s tutorials.

Edge Generative AI

In the rapidly evolving field of AI, generative models are drawing particular attention, including the following:

- LLMs capable of engaging in human-like conversations.
- Vision language models (VLMs) enabling LLMs to perceive and understand the real world through cameras.
- Diffusion models that can transform simple textual instructions into stunning images.

These significant advancements in AI have sparked the imagination of many. However, if you look at the infrastructure that serves inference for these cutting-edge models, you’ll find it is often “tethered” to the cloud, relying on the processing power of data centers. This cloud-centric approach has largely hindered the development of edge applications that require high-bandwidth, low-latency data processing.

Video 1. NVIDIA Jetson Orin brings powerful generative AI models to the edge

Running LLMs and other generative models in local environments is a growing trend in the developer community. Thriving online communities, such as r/LocalLLaMA on Reddit, give enthusiasts a place to discuss the latest advancements in generative AI technology and its practical applications. Numerous technical articles on platforms like Medium delve into the complexities of running open-source LLMs in local setups, and some mention the use of NVIDIA Jetson.

The Jetson Generative AI Lab is the hub for discovering the latest generative AI models and applications, as well as learning how to run them on Jetson devices. As the field rapidly evolves, new LLMs are appearing almost daily, and the development of quantization libraries has also reshaped benchmarks overnight. NVIDIA recognizes the importance of providing the latest information and effective tools. Thus, we offer easy-to-learn tutorials and pre-built containers.

What makes all this possible is jetson-containers, a well-designed and maintained open-source project aimed at building containers for Jetson devices. This project uses GitHub Actions to build 100 containers in a CI/CD manner. These containers allow you to quickly test the latest AI models, libraries, and applications on Jetson without the hassle of configuring underlying tools and libraries.

With the Jetson Generative AI Lab and jetson-containers, you can focus on exploring the endless possibilities of generative AI in the real world with Jetson.

Demonstration

Here are some exciting generative AI applications from the Jetson Generative AI Lab that run on NVIDIA Jetson devices.

stable-diffusion-webui

Figure 2. Stable Diffusion interface

A1111’s stable-diffusion-webui provides a user-friendly interface for Stability AI’s Stable Diffusion. You can use it to perform various tasks, including:

- Text-to-image conversion: Generate images based on textual instructions.
- Image-to-image conversion: Generate images based on input images and corresponding textual instructions.
- Image inpainting: Fill in missing or occluded parts of input images.
- Image extension: Extend the original boundaries of input images.

The web application will automatically download the Stable Diffusion v1.5 model upon first launch, so you can start generating images right away. If you have a Jetson Orin device, you can easily execute the following commands as per the tutorial.

    git clone https://github.com/dusty-nv/jetson-containers
    cd jetson-containers
    ./run.sh $(./autotag stable-diffusion-webui)

For more information on running stable-diffusion-webui, refer to the Jetson Generative AI Lab tutorials. The Jetson AGX Orin can also run the newer Stable Diffusion XL (SDXL) model, and the theme image at the beginning of this article was generated using that model.

text-generation-webui

Figure 3. Interacting with Llama-2-13B on Jetson AGX Orin

Oobabooga’s text-generation-webui is a popular Gradio-based web interface for running LLMs in local environments. Although the official repository provides one-click installers for various platforms, jetson-containers offers a simpler method.

Through this interface, you can easily download models from the Hugging Face model repository. As a rule of thumb, with 4-bit quantization, Jetson Orin Nano can typically accommodate a 7-billion-parameter model, Jetson Orin NX 16GB can run a 13-billion-parameter model, and Jetson AGX Orin 64GB can run an astonishing 70-billion-parameter model.
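As a rough check on these sizes, the weight footprint of a quantized model is approximately the parameter count times the bits per weight, divided by eight. The sketch below works through that arithmetic. The function name is illustrative, and the estimate covers weights only: it ignores the KV cache, activations, and runtime overhead, so real memory use is higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int = 4) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Parameter counts from the rule of thumb above.
for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights at 4-bit")
```

By this estimate, a 70-billion-parameter model needs about 35 GB for its weights at 4-bit, which leaves headroom within the 64 GB of Jetson AGX Orin, whereas the same model at 16-bit would need about 140 GB.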

Many developers are currently experimenting with Llama-2, an open-source large language model from Meta that is freely available for research and commercial use. The Llama-2 chat models were trained with techniques such as supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF). Some even claim it outperforms GPT-4 on certain benchmarks.

Text-generation-webui ships with extensions and also lets you develop your own, which you can use to integrate your applications, as the llamaspeak example later in this article shows. It also supports multimodal VLMs such as Llava and image chat.

Figure 4. Response of the quantized Llava-13B VLM to image queries

For more information on running text-generation-webui, refer to the Jetson Generative AI Lab tutorial: https://www.jetson-ai-lab.com/tutorial_text-generation.html

llamaspeak

Figure 5. Voice conversation with LLM using Riva ASR/TTS

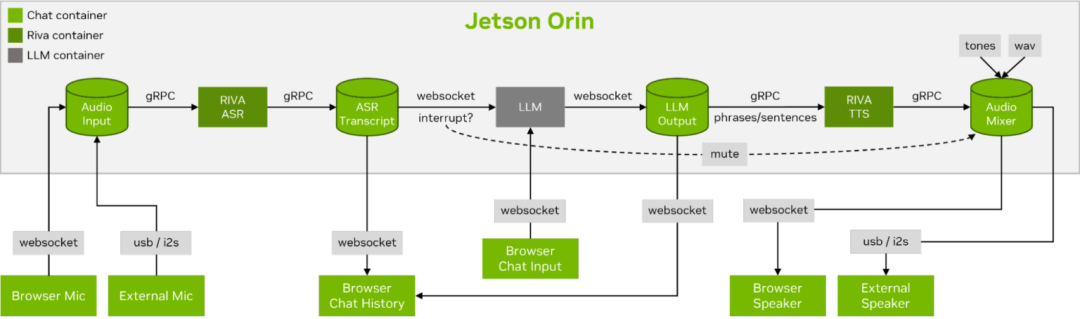

Llamaspeak is an interactive chat application that allows voice conversations with locally running LLMs through real-time NVIDIA Riva ASR/TTS. Llamaspeak has now become part of jetson-containers.

For a smooth and seamless voice conversation, it is crucial to minimize the time to the LLM’s first output token. Llamaspeak not only shortens this time but also handles interruptions in conversation, so you can start speaking while llamaspeak is still running TTS on the generated response. Container microservices are available for Riva, the LLM, and the chat server.
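One common way to keep perceived latency low in a streaming pipeline is to hand text to TTS sentence by sentence as tokens arrive, and to stop as soon as the user interrupts. The following is a minimal sketch of that idea, not llamaspeak’s actual implementation; the function name, the `interrupted` callback, and the sentence-boundary rule are all assumptions made for illustration.

```python
from typing import Callable, Iterable, Iterator


def stream_sentences(tokens: Iterable[str],
                     interrupted: Callable[[], bool]) -> Iterator[str]:
    """Group streamed LLM tokens into sentences for TTS, stopping on interruption."""
    buffer = ""
    for token in tokens:
        if interrupted():
            return  # the user started speaking: drop the rest of the response
        buffer += token
        # Assumed boundary rule: a sentence ends at '.', '!' or '?'.
        if buffer.rstrip().endswith((".", "!", "?")):
            yield buffer.strip()
            buffer = ""
    if buffer.strip():
        yield buffer.strip()  # flush any trailing text without punctuation


tokens = ["Hello", " there", ".", " How", " are", " you", "?"]
print(list(stream_sentences(tokens, interrupted=lambda: False)))
```

With no interruption, this prints `['Hello there.', 'How are you?']`, so the first sentence can be synthesized while the LLM is still generating the second.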

Figure 6. Real-time conversation control flow with the streaming ASR/LLM/TTS pipeline to the web client

Llamaspeak features a responsive interface that can transmit low-latency audio streams from the browser microphone or a microphone connected to the Jetson device. For more information on running it yourself, refer to the jetson-containers documentation: https://github.com/dusty-nv/jetson-containers/tree/master/packages/llm/llamaspeak

NanoOWL

Open World Localization with Vision Transformers (OWL-ViT) is an open vocabulary detection method developed by Google Research. This model allows you to perform object detection by providing textual prompts for target objects.

For example, when detecting people and cars, you would use a textual prompt describing the category:

    prompt = "a person, a car"

This kind of detection is very useful because it enables rapid development of new applications without training new models. To unlock edge applications, our team developed a project called NanoOWL, which optimizes the model with NVIDIA TensorRT to achieve real-time performance on the NVIDIA Jetson Orin platform (image encoding at approximately 95 FPS on Jetson AGX Orin). This performance means you can run well above the frame rates of ordinary cameras.

The project also includes a new tree detection pipeline that combines the accelerated OWL-ViT model with CLIP, enabling zero-shot detection and classification at any level. For example, to detect faces and distinguish happy ones from sad ones, you would use the following prompt:

    prompt = "[a face (happy, sad)]"

If you want to first detect faces and then detect facial features within each detected region, you would use the following prompt:

    prompt = "[a face [an eye, a nose, a mouth]]"

Combining the two:

    prompt = "[a face (happy, sad)[an eye, a nose, a mouth]]"

There are countless examples like this. The model may work better for some objects or classes than for others, but because development is so easy, you can quickly try different combinations and see whether they suit your use case. We look forward to seeing the amazing applications you develop!
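To make the nesting in these prompts explicit, here is a minimal sketch that reads a bracketed prompt as a tree: square brackets are treated as detection steps and parentheses as classification steps, each applied to the label just before them. This is an illustrative parser, not NanoOWL’s own implementation; the function names and the dictionary layout are assumptions, and it only handles prompts that start with a square bracket.

```python
def parse_group(text, i):
    """Parse one bracketed group starting at text[i]; return (node, next_index)."""
    kind = "detect" if text[i] == "[" else "classify"
    closer = "]" if text[i] == "[" else ")"
    i += 1
    items, label, children = [], "", []
    while i < len(text):
        ch = text[i]
        if ch in "[(":
            # A nested group applies to the label that precedes it.
            child, i = parse_group(text, i)
            children.append(child)
        elif ch in ",])":
            if ch != "," and ch != closer:
                raise ValueError(f"mismatched {ch!r} at position {i}")
            if label.strip():
                items.append({"label": label.strip(), "children": children})
            label, children = "", []
            i += 1
            if ch == closer:
                return {"kind": kind, "items": items}, i
        else:
            label += ch
            i += 1
    raise ValueError("unbalanced prompt: missing closing bracket")


def parse_prompt(prompt):
    """Parse a bracketed prompt such as "[a face (happy, sad)]" into a nested dict."""
    prompt = prompt.strip()
    if not prompt.startswith("["):
        raise ValueError("this sketch only handles prompts that start with '['")
    node, end = parse_group(prompt, 0)
    if end != len(prompt):
        raise ValueError("unexpected text after the closing bracket")
    return node


def describe(node, depth=0):
    """Print the tree, one step per line, indented by nesting depth."""
    for item in node["items"]:
        print("  " * depth + f"{node['kind']}: {item['label']}")
        for child in item["children"]:
            describe(child, depth + 1)


describe(parse_prompt("[a face (happy, sad)[an eye, a nose, a mouth]]"))
```

For the combined prompt, this prints `detect: a face` at the top level, followed by the indented steps `classify: happy`, `classify: sad`, `detect: an eye`, `detect: a nose`, and `detect: a mouth`, all of which apply within each detected face.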

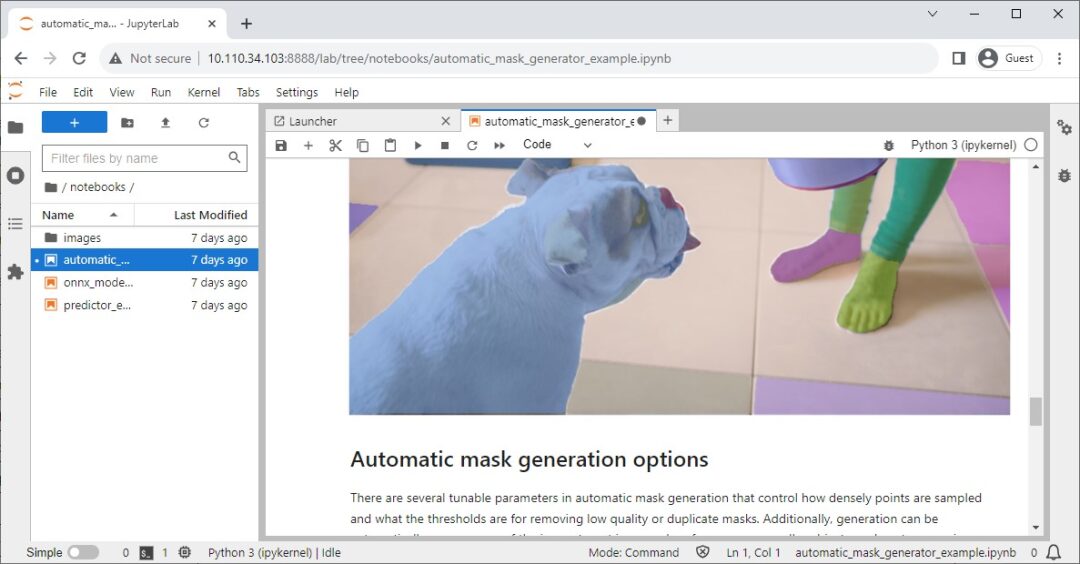

Segment Anything Model

Figure 8. Jupyter notebook for Segment Anything Model (SAM)

Meta has released the Segment Anything Model (SAM), an advanced image segmentation model capable of precisely identifying and segmenting objects in images, regardless of their complexity or context.

Its official repository also includes Jupyter notebooks that make it easy to see what the model can do, and jetson-containers provides a convenient container with Jupyter Lab built in.

NanoSAM

Figure 9. Real-time tracking and segmentation of a computer mouse by NanoSAM

Segment Anything (SAM) is a remarkable model that turns points into segmentation masks. Unfortunately, it does not run in real time, which limits its usefulness in edge applications.

To overcome this limitation, we recently released a new project, NanoSAM, which distills the SAM image encoder into a lightweight model. We also optimized this model with NVIDIA TensorRT to achieve real-time performance on the NVIDIA Jetson Orin platform. Now, you can easily turn existing bounding-box or keypoint detectors into instance segmentation models without any additional training.

Track Anything Model

As the team’s paper (https://arxiv.org/abs/2304.11968) puts it, the Track Anything Model (TAM) is “Segment Anything meets videos.” In its Gradio-based open-source interface, you can click on a frame of the input video to specify anything to be tracked and segmented. TAM even has an additional feature that removes tracked objects through image inpainting.

Figure 10. Track Anything interface

NanoDB

Video 2. Hello AI World: Real-time multimodal VectorDB on NVIDIA Jetson

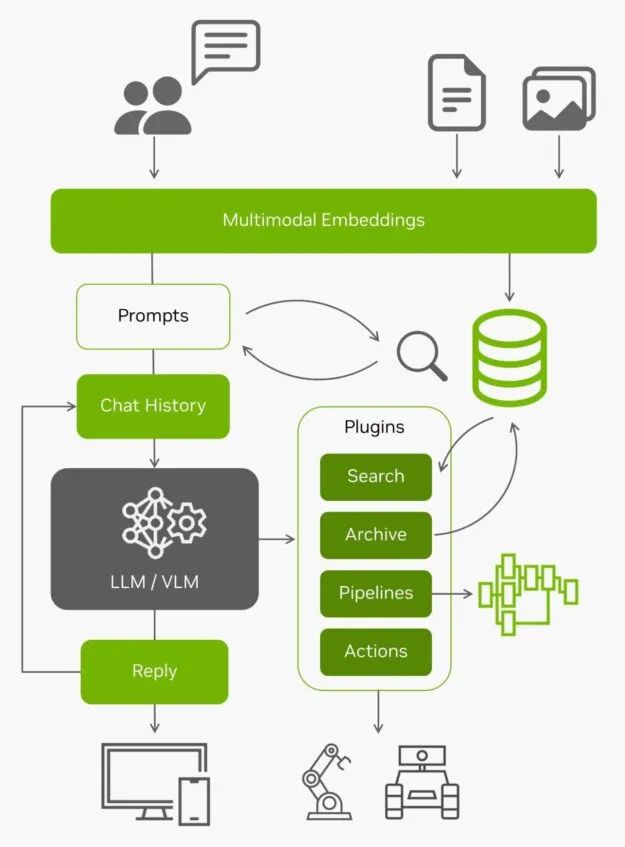

NanoDB is a multimodal vector database that runs in real time on Jetson. In addition to effectively indexing and searching data at the edge, vector databases like this are often used together with LLMs for retrieval-augmented generation (RAG), giving the model long-term memory beyond its built-in context length (4096 tokens for Llama-2 models). Vision language models also use the same embeddings as input.
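The retrieval step in RAG can be sketched as a nearest-neighbor search over embeddings, with the best matches placed into the LLM prompt as context. The example below is a minimal pure-Python illustration, not NanoDB’s API: the function names, the toy three-dimensional vectors, and the prompt template are all assumptions. In practice the embeddings would come from an embedding model such as CLIP.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, index, k=2):
    """Return the k (similarity, text) pairs most similar to the query embedding."""
    scored = [(cosine_similarity(query, vec), text) for text, vec in index]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


# Toy index of (text, embedding) pairs; the vectors are made up for illustration.
index = [
    ("a dog playing in the park", [0.9, 0.1, 0.0]),
    ("a cat sleeping on a sofa", [0.1, 0.9, 0.0]),
    ("a car parked on the street", [0.0, 0.1, 0.9]),
]
query = [0.8, 0.2, 0.0]  # hypothetical embedding of the user's question

retrieved = [text for _, text in top_k(query, index, k=2)]
prompt = "Context:\n" + "\n".join(retrieved) + "\n\nQuestion: What animals were seen?"
print(prompt)
```

Only the retrieved entries are placed in the prompt, which is how the database can hold far more than the model’s context window while each query stays within it.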

Figure 11. Architecture diagram centered around LLM/VLM

With all the real-time data from the edge and the ability to understand this data, AI applications become agents capable of interacting with the real world. To try NanoDB on your own images and datasets, refer to the lab tutorial: https://www.jetson-ai-lab.com/tutorial_nanodb.html

Conclusion

As you can see, exciting generative AI applications are emerging. You can easily run and experience them on Jetson Orin by following these tutorials. To witness the amazing capabilities of generative AI running locally, please visit the Jetson Generative AI Lab: https://www.jetson-ai-lab.com/

If you have created your own generative AI applications on Jetson and want to share your ideas, be sure to showcase your creations in the Jetson Projects forum: https://forums.developer.nvidia.com/c/agx-autonomous-machines/jetson-embedded-systems/jetson-projects/78

Join us for a webinar on November 8, 2023, from 1-2 AM Beijing time, to delve into several topics discussed in this article and participate in a live Q&A!

In this webinar, you will learn about:

- Performance characteristics and quantization methods of open-source LLM APIs
- Accelerating open vocabulary vision transformers like CLIP, OWL-ViT, and SAM
- Multi-modal vision agents, vector databases, and retrieval-augmented generation
- Real-time multilingual conversation and dialogue through NVIDIA Riva ASR/NMT/TTS
