Hardware-in-the-Loop (HIL) testing is a powerful tool for validating and verifying the performance of complex systems, including robotics and computer vision. This article explores how HIL testing is applied in these fields using the NVIDIA Isaac platform.

The NVIDIA Isaac platform consists of NVIDIA Isaac Sim and NVIDIA Isaac ROS. The former is a simulator that provides a testing environment for robotic algorithms, while the latter is hardware-accelerated software optimized for NVIDIA Jetson, which includes machine learning, computer vision, and localization algorithms. By conducting HIL testing based on the NVIDIA Isaac platform, you can validate and optimize the performance of your robotic software stack, resulting in safer, more reliable, and more efficient products.

The following sections discuss the components of the HIL system, including the software and hardware of the NVIDIA Isaac platform, and examine how they work together to optimize the performance of robotic and computer vision algorithms. We also explore the benefits of conducting HIL testing with the NVIDIA Isaac platform and compare it with other testing methods.

NVIDIA Isaac Sim

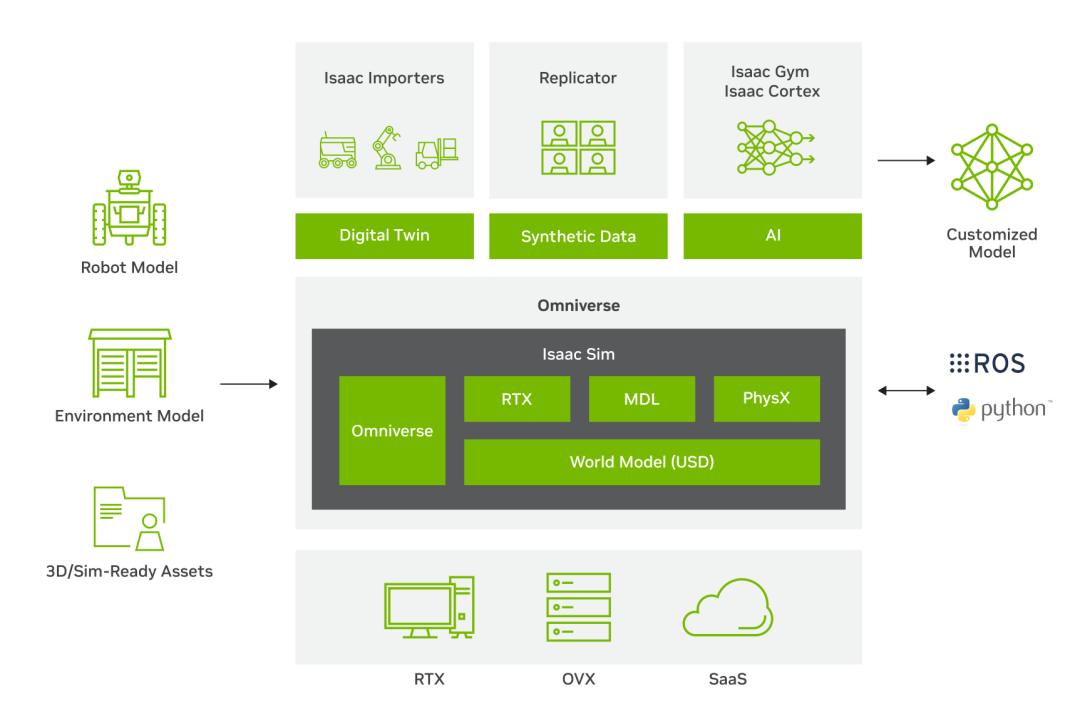

NVIDIA Isaac Sim, based on Omniverse, provides a photo-realistic and physically accurate virtual environment for testing robotic and computer vision algorithms, so you can fine-tune performance without the risk of damaging physical hardware. The simulator is also highly customizable, making it well suited to testing a wide range of scenarios and use cases.

You can leverage NVIDIA Isaac Sim to create smarter and more advanced robots. The platform provides a suite of tools and technologies that enable you to build complex algorithms, allowing robots to perform intricate tasks.

With Omniverse Nucleus and Omniverse Connectors, you can easily collaborate on, share, and import environments and robot models in the Universal Scene Description (USD) format in NVIDIA Isaac Sim. Isaac ROS/ROS 2 interfaces, full-featured Python scripting, and plugins for importing robot and environment models make robotic simulation more efficient and effective.

Figure 1. NVIDIA Isaac Sim Stack

You can interact with NVIDIA Isaac Sim using ROS, ROS 2, or Python. You can also run NVIDIA Isaac Gym and NVIDIA Isaac Cortex, generate synthetic data, or use the simulator for digital twins.

Internally, NVIDIA Isaac Sim uses a customized version of ROS Noetic, built with roscpp for the ROS bridge, so that it works seamlessly with the Omniverse framework and Python 3.7. This version is compatible with ROS Melodic.

NVIDIA Isaac Sim currently supports ROS 2 Foxy and Humble with the ROS 2 Bridge, and it is recommended to use Ubuntu 20.04 for ROS 2.

For more detailed information, please refer to NVIDIA Isaac Sim (https://developer.nvidia.com/isaac-sim).

NVIDIA Isaac ROS

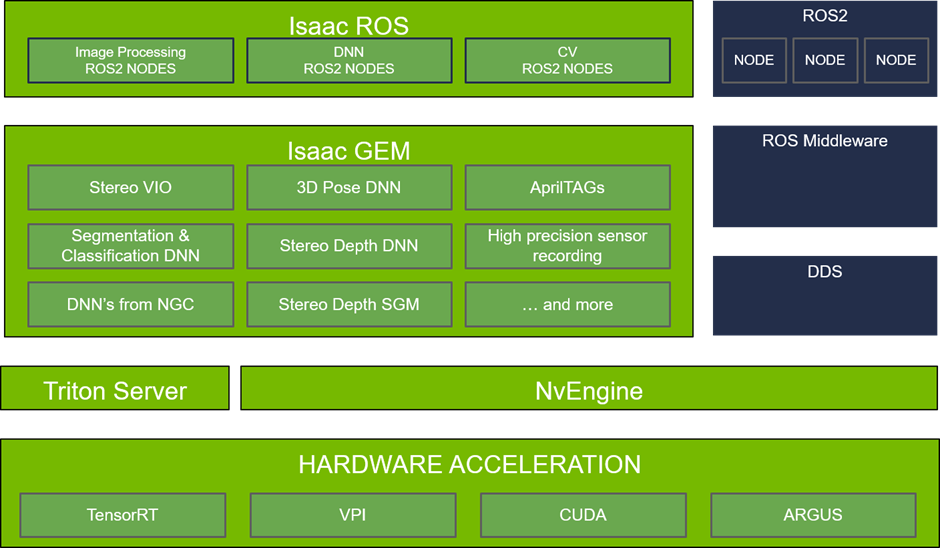

NVIDIA Isaac ROS is built on top of the Robot Operating System (ROS) and provides a range of advanced features and tools to help you create smarter and more powerful robots. These features include advanced mapping and localization, as well as object detection and tracking. For more information on the latest features, please refer to Isaac ROS Developer Preview 3 (https://developer.nvidia.com/blog/build-high-performance-robotic-applications-with-nvidia-isaac-ros-developer-preview-3/).

By using Isaac ROS from the NVIDIA Isaac platform, you can create complex robotic applications that execute intricate tasks with precision. With powerful computer vision and localization algorithms, Isaac ROS is a valuable tool for any developer looking to create advanced robotic applications.

Figure 2. Isaac ROS and Software Layers

Isaac GEMs for ROS is a set of GPU-accelerated ROS 2 packages released for the robotics community, which is part of the NVIDIA Jetson platform.

Isaac ROS provides a suite of software packages for perception and AI, along with a complete pipeline known as NVIDIA Isaac Transport for ROS (NITROS). These packages have been optimized for NVIDIA GPU and Jetson platforms, featuring capabilities for image processing and computer vision.

In this article, we include examples of how to run HIL for the following packages:

- NVIDIA Isaac ROS vslam
- NVIDIA Isaac ROS apriltag
- NVIDIA Isaac ROS nvblox
- NVIDIA Isaac ROS Proximity segmentation

For more information on other Isaac ROS packages and the latest Isaac ROS Developer Preview 3, please refer to NVIDIA Isaac ROS (https://developer.nvidia.com/isaac-ros).

Hardware Specifications and Setup

For this testing, you will need a workstation or laptop, along with an NVIDIA Jetson platform:

- x86/64 computer running Ubuntu 20.04
- NVIDIA graphics card with NVIDIA RTX
- Monitor
- Keyboard and mouse
- NVIDIA Jetson AGX Orin or NVIDIA Jetson Orin NX
- NVIDIA JetPack 5+ (tested with 5.1.1)
- Router
- Ethernet cable

Figure 3. Hardware Setup

When transferring large amounts of data between devices (such as the NVIDIA Jetson module and the computer), it is generally preferred to use a wired Ethernet connection rather than Wi-Fi. This is because Ethernet connections provide faster and more reliable data transfer rates, which are especially important for real-time data processing and machine learning tasks.
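To get a feel for why link speed matters, the following sketch estimates the raw bandwidth of an uncompressed camera stream. The resolution, pixel format, and frame rate are hypothetical values chosen for illustration. They are not the settings used by the demo, and a real ROS 2 transport adds its own overhead.

```python
def stream_bandwidth_mbps(width, height, bytes_per_pixel, fps, num_cameras=1):
    """Return the raw (uncompressed) bandwidth of an image stream in Mbit/s."""
    bytes_per_second = width * height * bytes_per_pixel * fps * num_cameras
    return bytes_per_second * 8 / 1_000_000


# Hypothetical stereo pair: two 640x480 RGB cameras at 30 frames per second.
stereo_mbps = stream_bandwidth_mbps(640, 480, 3, 30, num_cameras=2)
print(f"Raw stereo stream: {stereo_mbps:.1f} Mbit/s")
```

Under these assumptions, the stereo pair alone needs roughly 442 Mbit/s. That fits within the nominal rate of a gigabit Ethernet link, but it is a demanding load for many wireless setups.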

To establish an Ethernet connection between the Jetson module and the computer, follow these steps:

- Prepare an Ethernet cable and a router with free Ethernet ports.
- Plug one end of the cable into the Ethernet port of the device (the Jetson module or the computer).
- Plug the other end of the cable into an unused Ethernet port on the router.
- Power on the device and wait for it to boot up completely.
- Check the Ethernet connection by looking for the Ethernet icon or by using a network diagnostic tool such as ifconfig (Linux) or ipconfig (Windows).
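Once both devices report an address, a quick sanity check is to confirm that they sit on the same subnet. The sketch below uses only the Python standard library. The two addresses and the /24 prefix length are hypothetical placeholders, so substitute the values reported on your own machines.

```python
import ipaddress


def same_subnet(host_a, host_b, prefix_length=24):
    """Return True when both IPv4 addresses fall in the same network."""
    network = ipaddress.ip_network(f"{host_a}/{prefix_length}", strict=False)
    return ipaddress.ip_address(host_b) in network


# Hypothetical addresses, as they might be reported by ifconfig on each machine.
workstation_ip = "192.168.1.10"
jetson_ip = "192.168.1.42"
print(same_subnet(workstation_ip, jetson_ip))
```

If the check fails, the two devices are probably not attached to the same router or have received addresses from different networks.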

Once the computer and NVIDIA Jetson are ready and connected, please follow the instructions at /NVIDIA-AI-IOT/isaac_demo (https://github.com/NVIDIA-AI-IOT/isaac_demo) to proceed.

Run Demo and Drivers

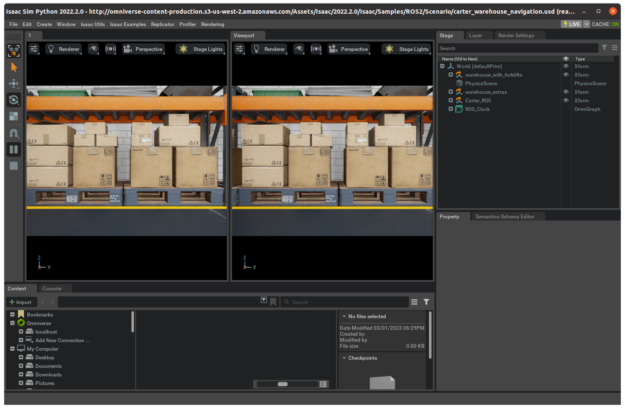

First, run NVIDIA Isaac Sim on the workstation. Use the ./isaac_ros.sh script to run a demo of the Carter robot.

Carter 1.0 is a robotic platform built around a Segway differential drive base, a Velodyne VLP-16 for 3D range scanning, a ZED camera, an IMU, and a Jetson module as the core of the system. Together with a custom mounting bracket, it provides a robust demonstration platform for the NVIDIA Isaac navigation stack.

As the simulation begins, you can see the stereo camera output of NVIDIA Isaac Sim. With two cameras, the robot is ready to receive inputs from Isaac ROS running on the NVIDIA Jetson module.

Figure 4. Carter on NVIDIA Isaac Sim

Try Isaac ROS Packages in the Demo

In this article, we explore NVIDIA Isaac ROS packages used for autonomous mobile robots (AMRs) or wheeled robots. We focus on packages for localization, mapping, and AprilTag detection, and you can modify the repository as needed to test other packages.

Isaac ROS Visual SLAM

NVIDIA Isaac ROS Visual SLAM combines visual odometry with simultaneous localization and mapping (SLAM) techniques.

Visual odometry estimates the position of the camera relative to its starting position. The technique works iteratively: it analyzes two consecutive input frames or stereo pairs to identify a set of key points in each. By matching the key points from both sets, the translation of the camera and the relative rotation between frames can be determined.
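To make the matching step concrete, here is a minimal 2D sketch that recovers the rotation and translation between two sets of already-matched key points using a closed-form least-squares fit. It is a toy illustration under strong assumptions (planar motion, perfect matches, no outliers) and is not how Isaac ROS Visual SLAM is implemented. The function names and sample points are hypothetical.

```python
import math


def estimate_rigid_motion_2d(points_prev, points_curr):
    """Least-squares rotation angle and translation mapping points_prev onto points_curr."""
    n = len(points_prev)
    cx_p = sum(x for x, _ in points_prev) / n
    cy_p = sum(y for _, y in points_prev) / n
    cx_c = sum(x for x, _ in points_curr) / n
    cy_c = sum(y for _, y in points_curr) / n

    dot = 0.0
    cross = 0.0
    for (xp, yp), (xc, yc) in zip(points_prev, points_curr):
        ax, ay = xp - cx_p, yp - cy_p  # previous point, centered
        bx, by = xc - cx_c, yc - cy_c  # current point, centered
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    theta = math.atan2(cross, dot)
    tx = cx_c - (math.cos(theta) * cx_p - math.sin(theta) * cy_p)
    ty = cy_c - (math.sin(theta) * cx_p + math.cos(theta) * cy_p)
    return theta, (tx, ty)


def apply_motion(points, theta, translation):
    """Rotate points by theta and shift them by translation (used to fake a second frame)."""
    c, s = math.cos(theta), math.sin(theta)
    tx, ty = translation
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


keypoints_prev = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (2.0, 1.5)]
keypoints_curr = apply_motion(keypoints_prev, math.radians(10.0), (0.3, -0.1))
theta, (tx, ty) = estimate_rigid_motion_2d(keypoints_prev, keypoints_curr)
print(round(math.degrees(theta), 3), round(tx, 3), round(ty, 3))
```

The estimate describes how the matched points moved between frames. The camera's own motion is the inverse of that transform. A real pipeline works with 3D geometry from stereo pairs and has to reject bad matches.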

SLAM builds on visual odometry and improves its accuracy by incorporating knowledge of the previously estimated trajectory. By detecting whether the current scene has been seen before (a loop closure in the camera motion), the previously estimated camera poses can be optimized.
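The effect of a loop closure can be illustrated with a deliberately simplified sketch. It assumes the last pose of a 2D trajectory revisits the first one, treats the gap between them as accumulated drift, and spreads that error linearly over the path. This is a stand-in for the pose optimization that SLAM systems perform, not the method used by Isaac ROS Visual SLAM, and the function name and trajectory are hypothetical.

```python
def distribute_closure_error(trajectory):
    """Assume the last pose revisits the first; spread the gap linearly over the path."""
    n = len(trajectory)  # requires at least two poses
    x0, y0 = trajectory[0]
    xn, yn = trajectory[-1]
    err_x, err_y = xn - x0, yn - y0  # accumulated drift exposed by the loop closure
    corrected = []
    for i, (x, y) in enumerate(trajectory):
        weight = i / (n - 1)  # 0 at the start, 1 at the revisited pose
        corrected.append((x - weight * err_x, y - weight * err_y))
    return corrected


# Hypothetical square path whose final estimate has drifted away from the start.
drifted = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.2, 0.1)]
corrected = distribute_closure_error(drifted)
print(corrected[-1])
```

After the correction, the final pose coincides with the starting pose, and intermediate poses are adjusted in proportion to how far along the loop they were recorded.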

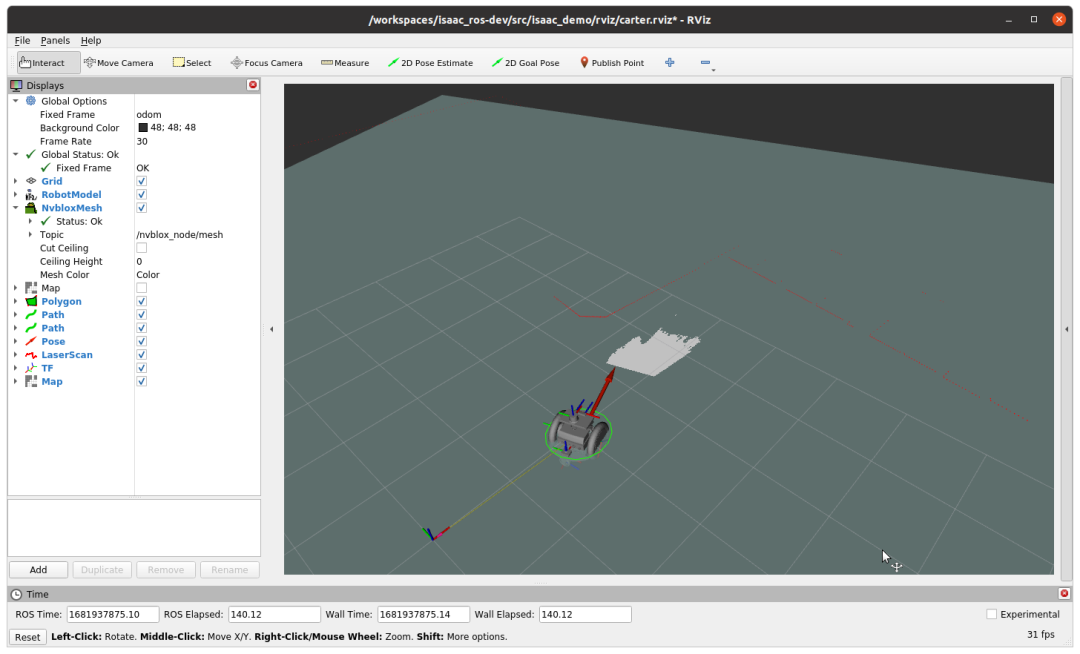

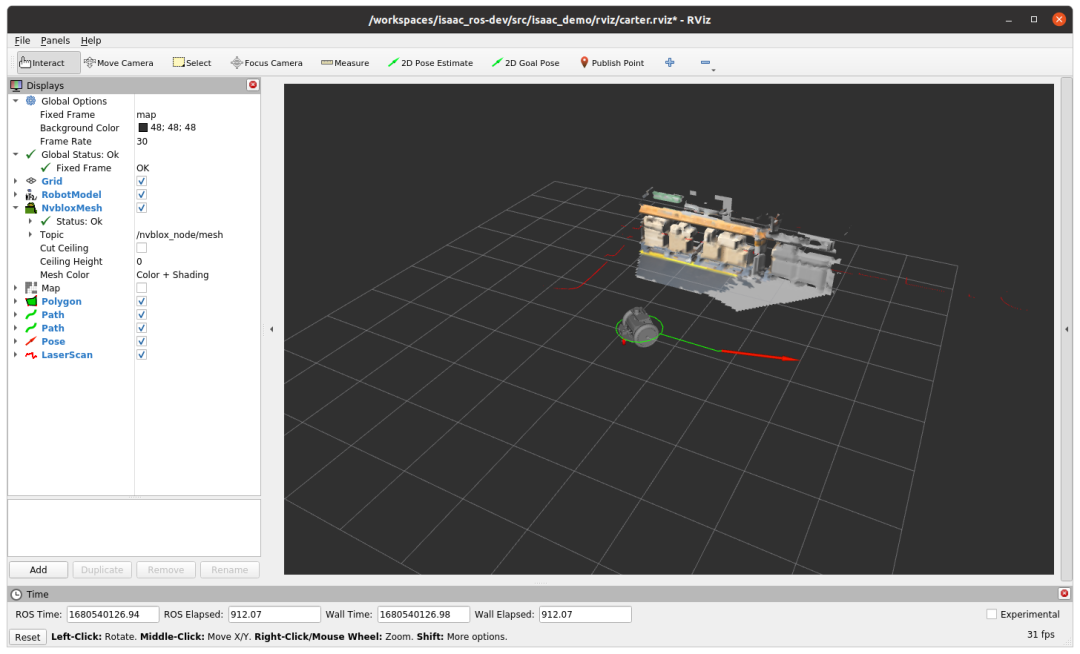

Figure 5. Isaac ROS vslam and nvblox

Figure 6. Isaac ROS vslam and nvblox Running Status

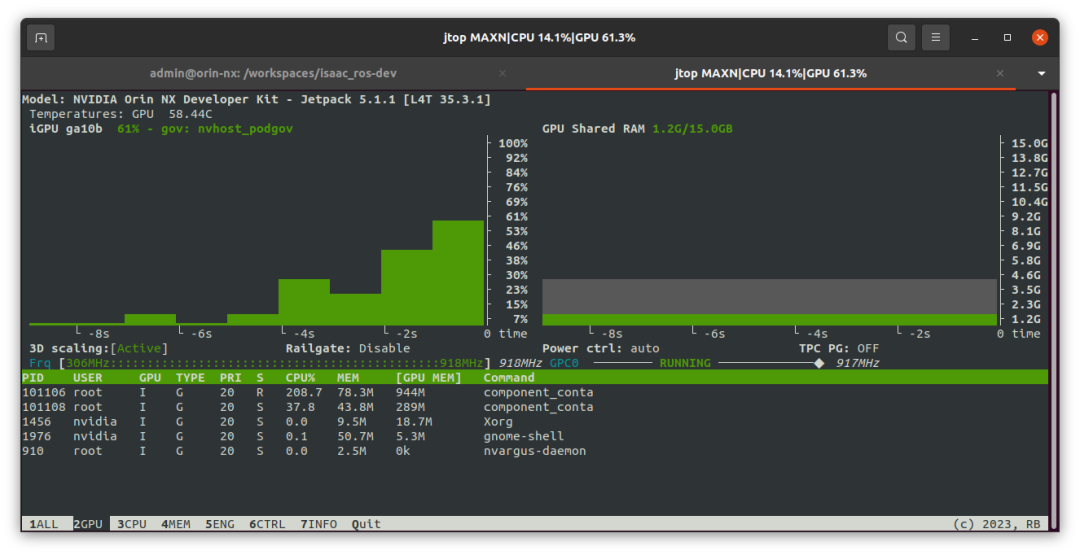

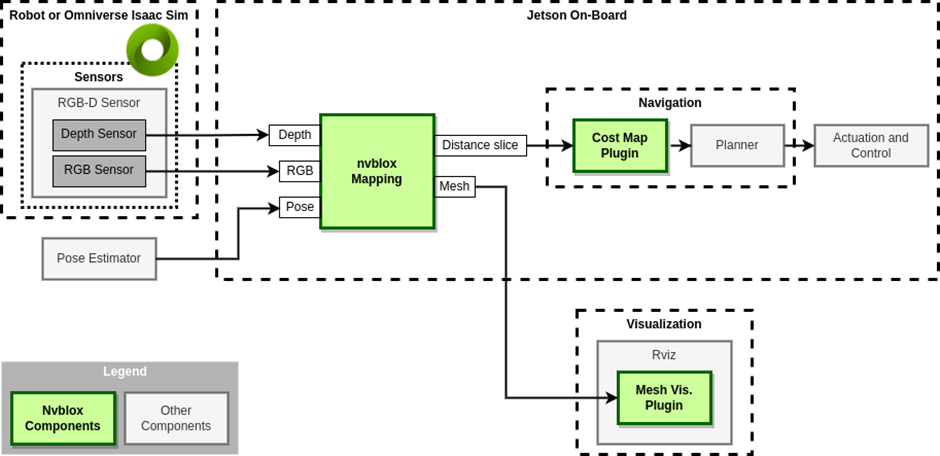

Isaac ROS nvblox

The nvblox package builds a 3D model of the environment around the robot in real-time using sensor observation data, which can then be used by path planners to create collision-free paths. The package utilizes NVIDIA CUDA technology to accelerate this process for real-time performance. This repository includes ROS 2 integration for the nvblox library.
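The idea of turning sensor observations into a map that a planner can query can be sketched in a few lines of plain Python. The example below bins 3D points into voxels and then tests whether a straight segment passes through any occupied voxel. It is a conceptual toy, not the nvblox API or its data structures. The function names, voxel size, and sample points are hypothetical, and the sampling-based check is only approximate.

```python
import math


def voxelize(points, voxel_size):
    """Map 3D points (meters) to the set of integer voxel indices they occupy."""
    return {
        (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        for x, y, z in points
    }


def segment_is_free(start, goal, occupied, voxel_size, step=None):
    """Sample a straight segment and report whether every sample avoids occupied voxels."""
    step = step or voxel_size / 2
    length = math.dist(start, goal)
    samples = max(1, math.ceil(length / step))
    for i in range(samples + 1):
        t = i / samples
        point = tuple(s + t * (g - s) for s, g in zip(start, goal))
        if voxelize([point], voxel_size) & occupied:
            return False
    return True


# Hypothetical obstacle observations and two candidate paths.
voxel_size = 0.1
occupied = voxelize([(1.05, 0.05, 0.55), (1.05, 0.15, 0.55)], voxel_size)
blocked = segment_is_free((0.0, 0.05, 0.55), (2.0, 0.05, 0.55), occupied, voxel_size)
clear = segment_is_free((0.0, 1.05, 0.55), (2.0, 1.05, 0.55), occupied, voxel_size)
print(blocked, clear)
```

The first path runs straight through the observed obstacle and is rejected, while the second path, offset by one meter, is reported as free.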

Figure 7. Isaac ROS nvblox Workflow

Figure 8. Isaac ROS vslam Output

By following the instructions at /NVIDIA-AI-IOT/isaac_demo (https://github.com/NVIDIA-AI-IOT/isaac_demo), you can run the Isaac ROS vslam package in the demo.

NVIDIA Isaac ROS apriltag

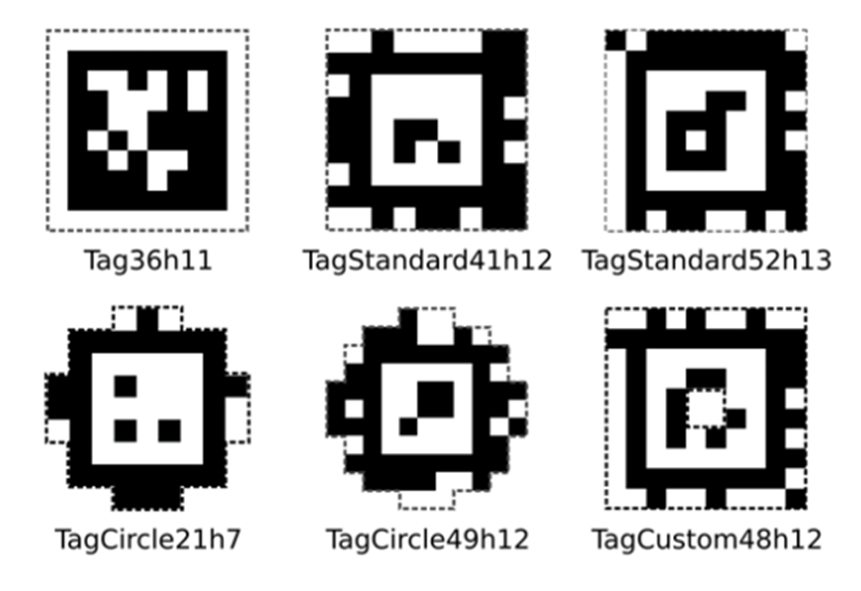

The ROS 2 apriltag package uses NVIDIA GPUs to accelerate AprilTag detection in images and publishes the pose, ID, and other metadata of each detected tag. This package is comparable to the ROS 2 node for CPU-based AprilTag detection.

These tags serve as fiducials that tell robots or manipulators to start an action or complete a task from a specific point. They are also used in augmented reality to calibrate the odometry of visors. The tags are available in many families and can be easily printed with a desktop printer, as shown in Figure 9.

Figure 9. Example of AprilTags Replacing QR Codes

Figure 10. Isaac ROS AprilTag Detection Demo

Isaac ROS proximity segmentation

The isaac_ros_bi3d package employs a Bi3D model optimized for binary classification to perform stereo depth estimation. The result is used for proximity segmentation, which determines whether an obstacle is present within a certain range and helps avoid collisions while navigating the environment.
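The near/far decision behind proximity segmentation rests on the standard stereo relation between disparity and depth, d = f * B / Z. The sketch below shows only that geometry, assuming a disparity map is already available. It does not reproduce the Bi3D network or the isaac_ros_bi3d API, and the focal length, baseline, range, and disparity values are hypothetical.

```python
def disparity_for_depth(focal_length_px, baseline_m, depth_m):
    """Stereo disparity (pixels) of a point at the given depth: d = f * B / Z."""
    return focal_length_px * baseline_m / depth_m


def proximity_mask(disparity_map, focal_length_px, baseline_m, max_range_m):
    """Label each pixel True when its disparity implies a depth closer than max_range_m."""
    threshold = disparity_for_depth(focal_length_px, baseline_m, max_range_m)
    return [[d > threshold for d in row] for row in disparity_map]


# Hypothetical camera: 500 px focal length, 0.1 m baseline, 2 m safety range.
disparities = [[10.0, 30.0], [20.0, 50.0]]
mask = proximity_mask(disparities, 500.0, 0.1, 2.0)
print(mask)
```

With these numbers, the 2 m range corresponds to a disparity of 25 pixels, so only the pixels with larger disparity are flagged as being within range.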

Figure 11. Isaac ROS Proximity Segmentation

Driving Carter with rviz

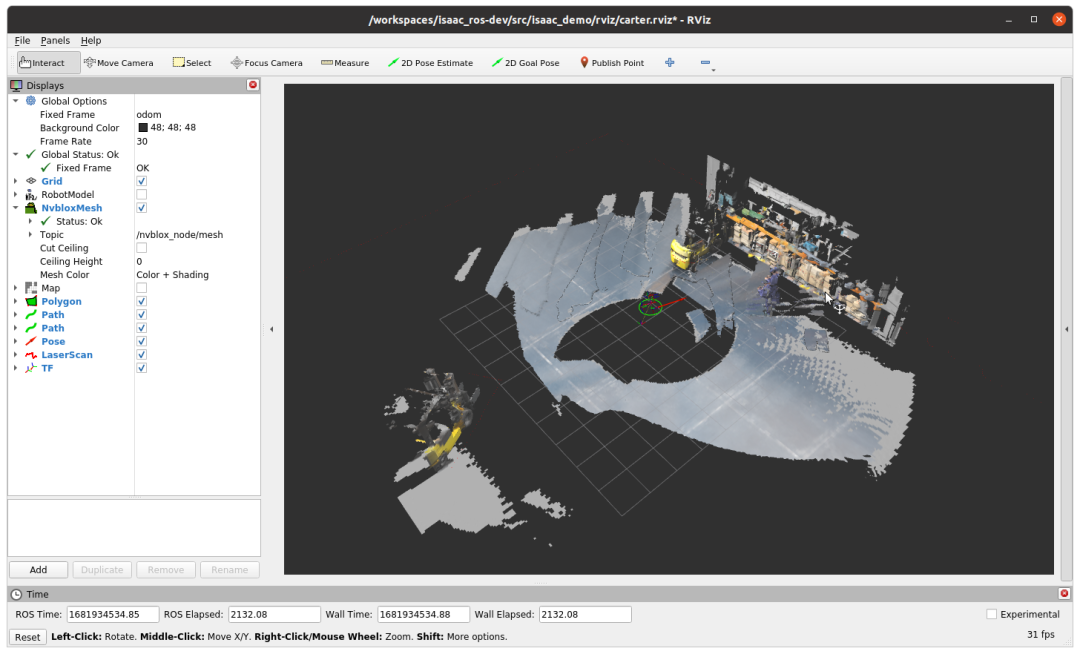

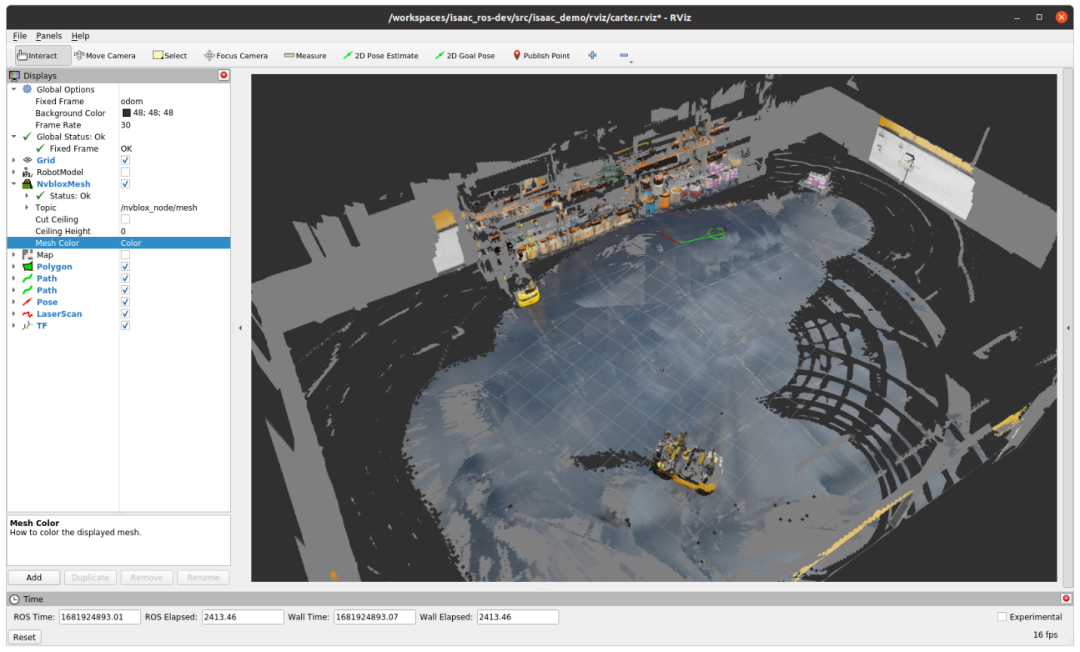

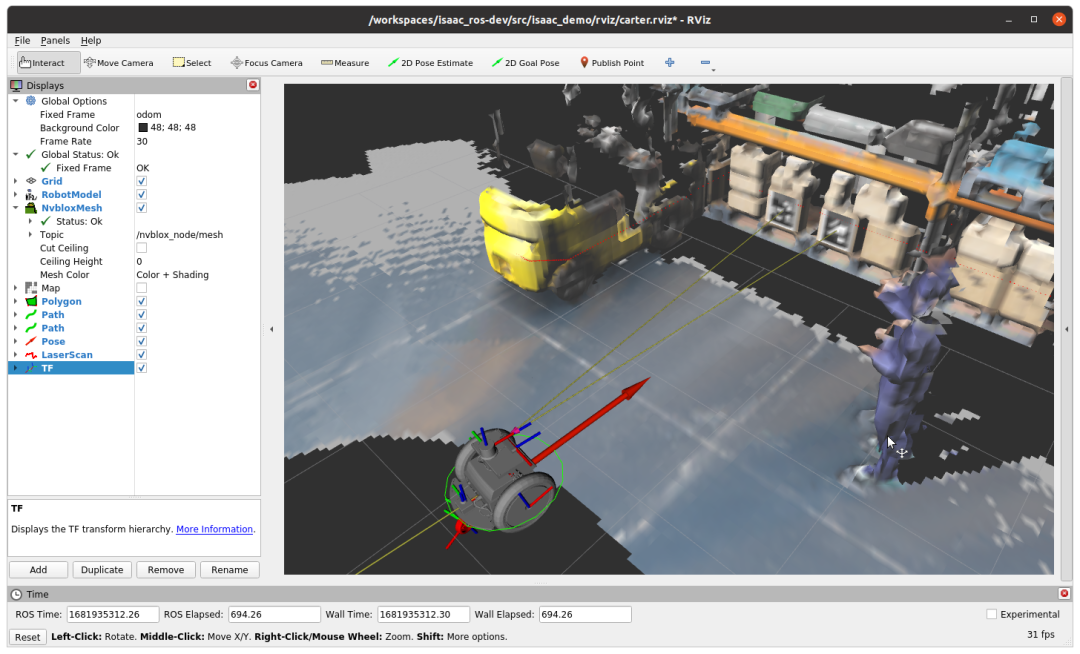

When rviz is ready and everything is running, rviz displays the output shown in Figure 12, with Carter at the center of the map, surrounded by all the blocks.

Figure 12. Using Isaac ROS vslam and Isaac ROS nvblox to Build Maps in rviz

The following video demonstrates how you can drive the robot all over the environment using rviz and view the maps generated by nvblox.

Video 1. HIL on NVIDIA Orin NX Based on Isaac ROS vslam and nvblox

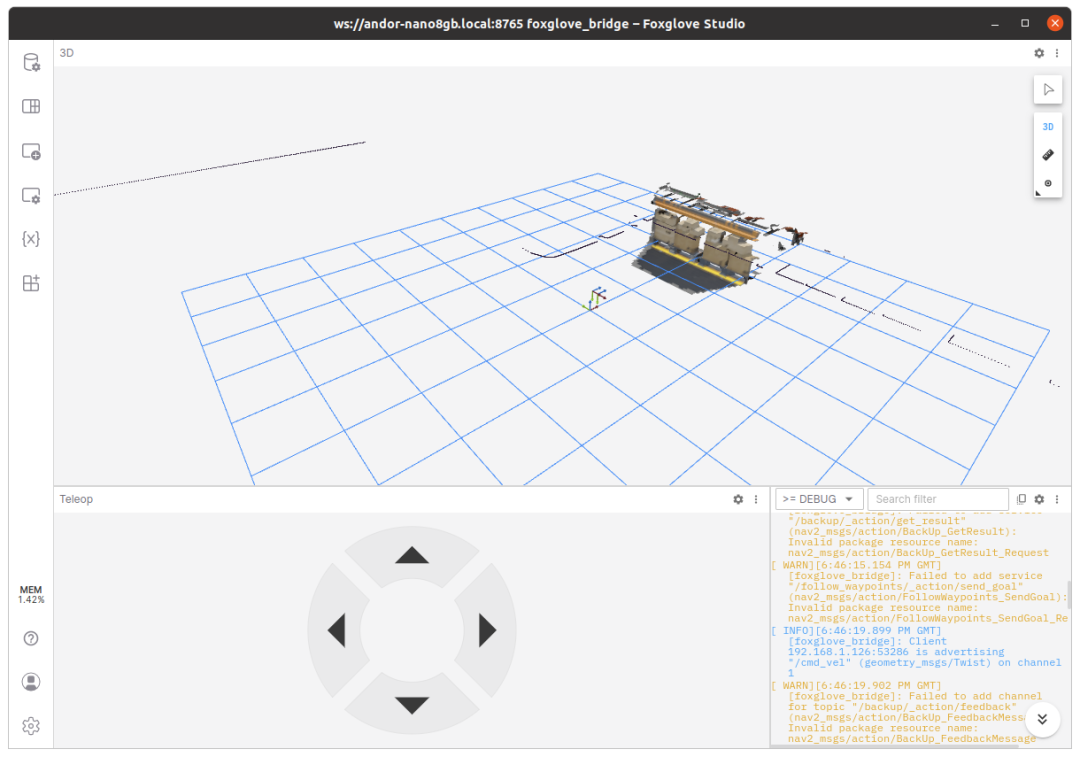

You can also use Foxglove to test the simulation.

Figure 13. Simulation Testing with Foxglove

Conclusion

In this article, we showed you how to set up HIL and test Isaac ROS on an NVIDIA Jetson module, as well as how to try out NVIDIA Isaac Sim. Remember to use a wired connection between your desktop computer and the Jetson module, because displaying all raw telemetry data requires a reliable connection.

You can also test other NVIDIA Isaac ROS packages that were just added to the /isaac-ros_dev folder. For more details, please refer to the readme.md file located at /NVIDIA-AI-IOT/isaac_demo (https://github.com/NVIDIA-AI-IOT/isaac_demo).

For more information, please refer to the Isaac ROS Webinar Series (https://gateway.on24.com/wcc/experience/elitenvidiabrill/1407606/3998202/isaac-ros-webinar-series).