On Tuesday evening, NVIDIA held an internal training session for developers taking part in the NVIDIA Jetson development competition. We have summarized the highlights below, focusing on a few key points. The specification comparison charts are especially worth saving:

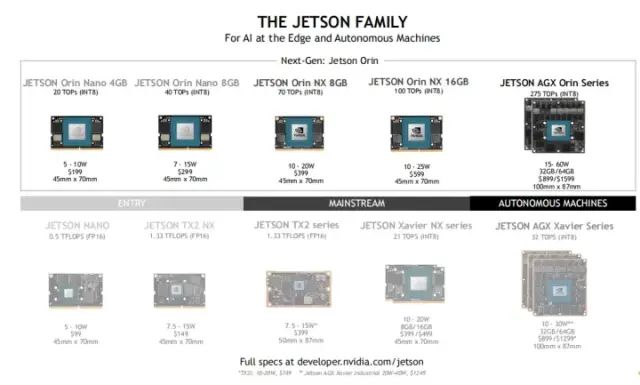

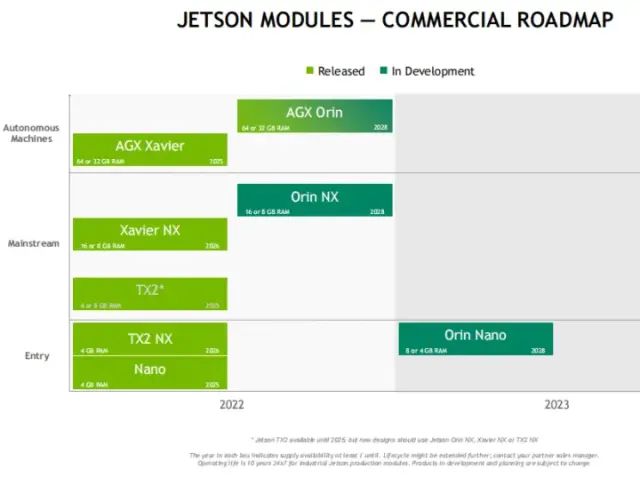

NVIDIA Jetson Module Product Line:

Developers new to NVIDIA Jetson can use the chart above as an overview: NVIDIA offers a complete range of modules, from high-end to low-end, for different AI application scenarios. Of these, the Jetson Orin NANO module is scheduled to launch in January 2023.

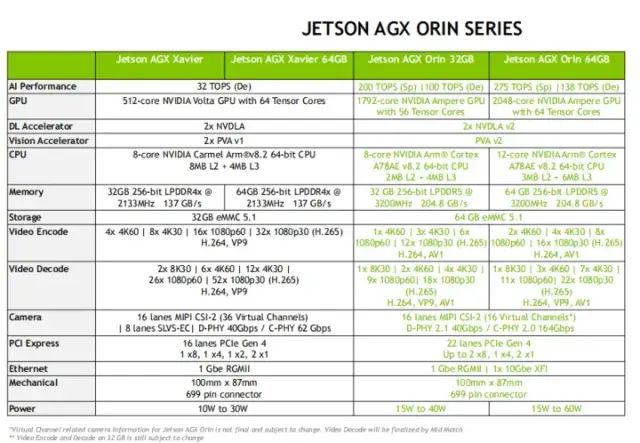

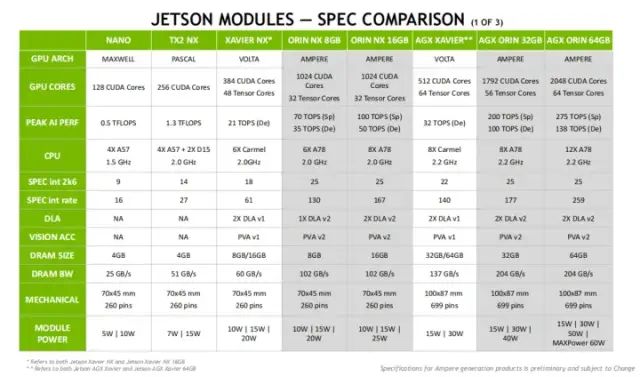

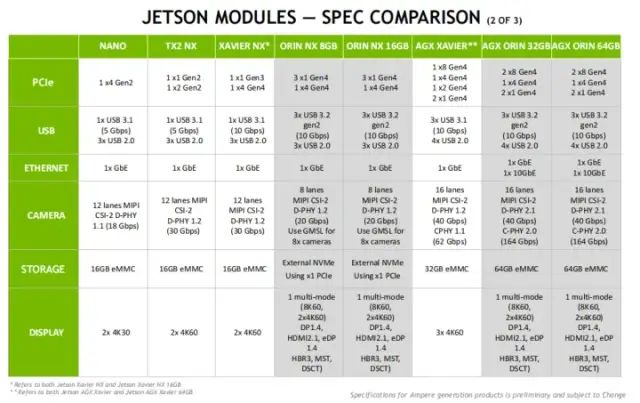

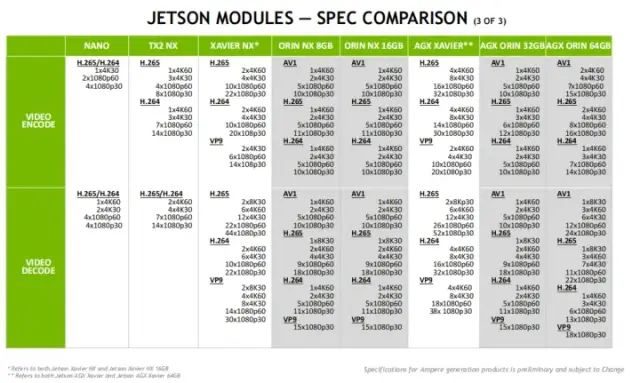

High-end Module Specifications Comparison:

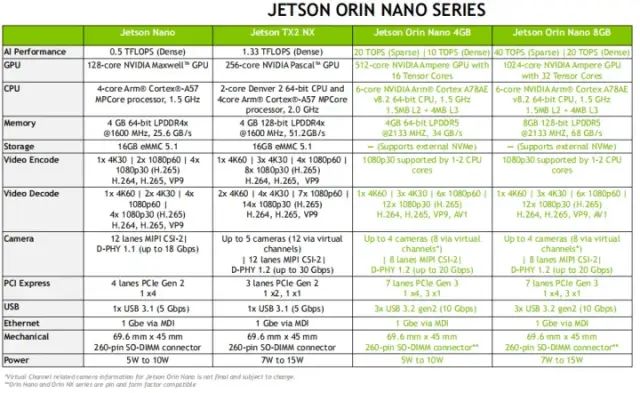

Low-end Module Specifications Comparison:

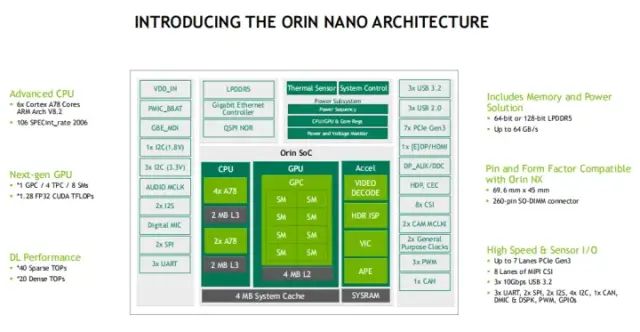

Architecture of Jetson Orin NANO

Note: The Jetson Orin NANO has no hardware video encoder, so keep this in mind when selecting a module.

Comparison of Specifications for All Series Modules (Orin NANO is missing here)

From these three images, we can see:

- All Orin series modules use the Ampere architecture and share the same GPU design, which greatly simplifies software adaptation. Across different architectures, algorithms and optimizations have to be adapted, which inevitably hurts portability. Within the Orin series this is not an issue: the only difference between Orin modules is performance, which is why the AGX Orin development kit can emulate six Orin modules.

- Orin series performance is quoted in two figures, Dense and Sparse. The distinction comes from an Ampere architecture feature called structured sparsity. Modern AI networks are large and growing, with millions or even billions of parameters. Accurate prediction and inference do not need all of them, so some parameters can be set to zero, making the model "sparse" without sacrificing accuracy. Tensor Cores can run sparse models up to 2 times faster. Sparsity benefits AI inference and can also improve training performance. For example, the Orin NX 8GB reaches 70 TOPS with sparsity and 35 TOPS without it.

- In the Orin series, both the DLA (Deep Learning Accelerator) and the PVA (Programmable Vision Accelerator) have reached version 2.

- For video encoding and decoding, both Orin NX and AGX Orin support AV1. Over the past decade, video applications have become ubiquitous on the internet, and modern devices have driven rapid growth in the consumption of high-resolution, high-quality content. Services such as video-on-demand and video conferencing are major bandwidth consumers and put significant pressure on transmission infrastructure, which increases the demand for efficient video compression. AV1 is an emerging open-source, royalty-free video compression format. Its main design goal is to achieve significant compression gains over state-of-the-art codecs while keeping decoding complexity practical and hardware implementation feasible.
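To make the Dense versus Sparse distinction concrete, here is a minimal sketch of the pruning idea. It assumes the 2:4 pattern that Ampere's structured sparsity uses (at most two non-zero values in every group of four consecutive weights), which the training summary does not spell out. The function name `prune_2_of_4` is hypothetical and only illustrates the pattern. Real workflows prune with NVIDIA's tooling and then fine-tune to recover accuracy.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each consecutive group of four.

    Illustrative only: this shows the 2:4 pattern, not a production pruning flow.
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Positions of the two largest magnitudes; these weights are kept.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
print(prune_2_of_4(weights))

# Dense and Sparse ratings differ by the "up to 2 times" factor quoted above.
dense_tops = 35                  # Orin NX 8GB, dense figure from the text
sparse_tops = 2 * dense_tops     # matches the 70 TOPS sparse figure
print(dense_tops, sparse_tops)
```

Half of the weights in every group become zero, which is the regularity the hardware needs in order to skip them. The 2x figure is an upper bound, so a pruned model should be benchmarked rather than assumed to double in speed.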

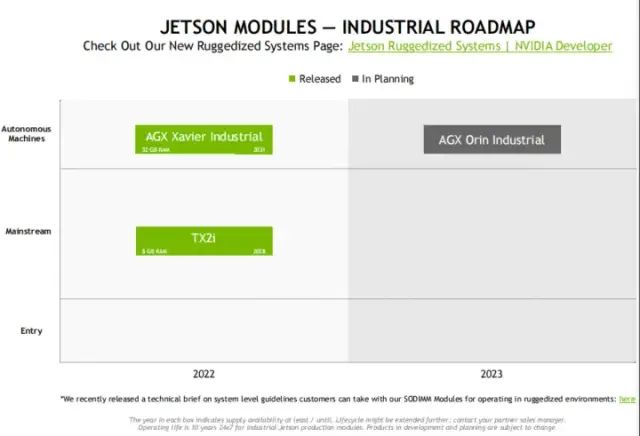

NVIDIA Jetson Module Roadmap

NVIDIA's slides show that in 2023 the company will launch an industrial-grade AGX Orin module, designed for applications that must meet wide temperature, shock, and vibration specifications to operate in harsh environments.

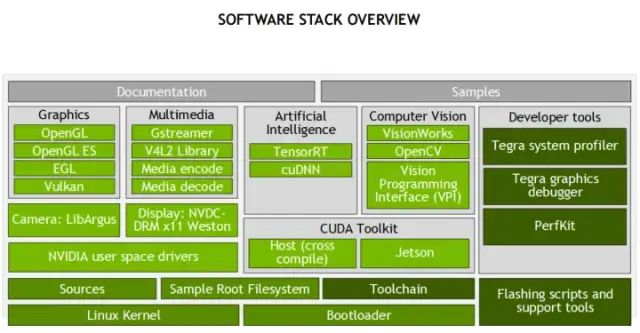

NVIDIA Jetson Software Stack

NVIDIA emphasizes that the Jetson software platform differs slightly from other embedded platforms. Those typically combine U-Boot, a kernel, and a custom-built rootfs, whereas the NVIDIA Jetson platform is packaged as JetPack, which bundles the underlying kernel, U-Boot, and the various NVIDIA libraries together with a rootfs.

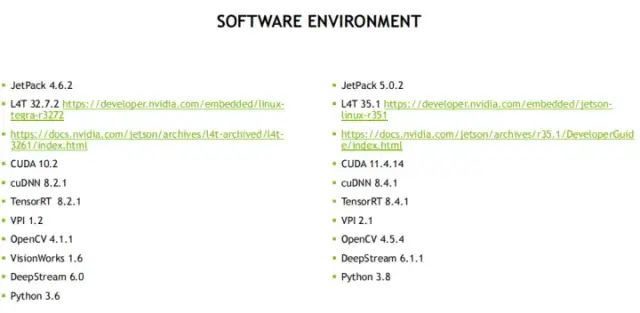

Before the release of Orin, the latest JetPack version was JetPack 4.6.2, based on Ubuntu 18.04. JetPack 4.6.2 is currently the latest version that can be flashed on Jetson NANO and Jetson TX2 NX, and these modules will not be upgraded to JetPack 5.0.

For the Orin series, JetPack can be upgraded to JetPack 5.X, based on Ubuntu 20.04.

One point to note: JetPack 5.0 and above upgrades the kernel to 5.10, while JetPack 4.6.2 uses kernel 4.9. Drivers written for kernel 5.10 are therefore easier to port to JetPack 5.0.X. For example, some Raspberry Pi peripherals have drivers based on kernel 5.X, and porting them to JetPack 4.6.X may run into problems.
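Before porting a driver, it can help to confirm which kernel line the device is actually running. The sketch below uses only the Python standard library and assumes the JetPack 5 kernel reports a 5.10 release string. The helper names `kernel_version` and `meets_minimum` are hypothetical, and the release strings in the comments are illustrative examples rather than values taken from the training.

```python
import platform
import re


def kernel_version(release):
    """Return (major, minor) parsed from a kernel release string, or None."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def meets_minimum(release, minimum=(5, 10)):
    """True if the kernel is at least `minimum` (default: the 5.10 line)."""
    version = kernel_version(release)
    return version is not None and version >= minimum


# Illustrative strings: a JetPack 5 style kernel and a JetPack 4.6 style kernel.
print(meets_minimum("5.10.104-tegra"))
print(meets_minimum("4.9.253-tegra"))

# On the device itself, check the running kernel.
release = platform.release()
print(release, "meets the 5.10 baseline:", meets_minimum(release))
```

Versions are compared as tuples so that 5.10 sorts above 5.4, which a plain string or float comparison would get wrong.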

CUDA-X SDK Resources

CUDA

▪ https://docs.nvidia.com/cuda/cuda-for-tegra-appnote/index.html#overview

cuDNN

▪ https://developer.nvidia.com/cudnn

TensorRT

▪ https://developer.nvidia.com/tensorrt

▪ Samples: https://github.com/NVIDIA/TensorRT/tree/main/samples

Jetson Multimedia

▪ https://docs.nvidia.com/jetson/l4t-multimedia/group__l4t__mm__test__group.html

▪ Samples: /usr/src/jetson_multimedia_api

DeepStream

▪ https://developer.nvidia.com/deepstream-sdk

▪ Samples: /opt/nvidia/deepstream/deepstream-6.1/sources

VPI

▪ https://docs.nvidia.com/vpi/index.html

Camera

▪ https://docs.nvidia.com/jetson/archives/l4t-archived/l4t-3261/index.html#page/Tegra%20Linux%20Driver%20Package%20Development%20Guide/camera_dev.html#
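The two local sample paths listed above exist only if the corresponding JetPack components are installed. As a quick check, a small script can report which of them are present on a device. This is a sketch: the paths are copied from the list above, while the `SAMPLE_DIRS` mapping and the `find_samples` helper are hypothetical names introduced for illustration.

```python
from pathlib import Path

# Sample locations as listed above; the DeepStream path is version-specific.
SAMPLE_DIRS = {
    "Jetson Multimedia API": "/usr/src/jetson_multimedia_api",
    "DeepStream 6.1": "/opt/nvidia/deepstream/deepstream-6.1/sources",
}


def find_samples(sample_dirs=SAMPLE_DIRS):
    """Map each SDK name to True if its sample directory exists."""
    return {name: Path(path).is_dir() for name, path in sample_dirs.items()}


for name, present in find_samples().items():
    print(f"{name}: {'found' if present else 'not installed'}")
```

If a different DeepStream release is installed, the version in the path has to be adjusted to match.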
