Abstract

Both Real-Time Operating Systems (RTOS) and general-purpose Operating Systems (OS) let you set thread priorities. A common practice is to give each thread a unique priority derived from the software functional architecture, which creates a strict hierarchy of threads. This practice can backfire, however: unique priorities affect latency, responsiveness, the risk of deadlock, and CPU utilization, and can cause a range of problems.

Core Understanding of Priority

Priority is a measure of how deterministic a thread's response latency is. When a thread needs to run, the RTOS may not run it immediately: other threads or interrupts can delay its execution for some time.

The lower the priority, the more events can intervene between the moment a response is required and the moment it actually happens. The chain of events becomes more complex, and the latency becomes harder to analyze and predict.

The higher the priority, the less likely the thread is to be interrupted by unexpected events, and the more predictable its latency. The highest-priority thread has the most predictable latency in the system: it is almost constant, because only interrupts can take the CPU away from it.
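The claim that lower-priority threads see longer and less predictable latency can be made concrete with standard fixed-priority response-time analysis (a well-known technique added here for illustration, not something this article prescribes). Assuming independent periodic tasks, deadlines equal to periods, and no blocking, the worst-case response time R_i of task i is the smallest fixed point of R_i = C_i + sum over every higher-priority task j of ceil(R_i / T_j) * C_j, where C is the worst-case execution time and T the period. A minimal C sketch of that iteration; the task_t type and the response_time function are hypothetical:

```c
#include <stddef.h>

/* Hypothetical periodic task; all times in microseconds. */
typedef struct {
    unsigned long wcet;   /* C: worst-case execution time */
    unsigned long period; /* T: period, deadline assumed equal to period */
} task_t;

/* Worst-case response time of tasks[i], assuming tasks[0..i-1] all have
 * higher priority than it. Returns 0 if the task can miss its deadline. */
unsigned long response_time(const task_t *tasks, size_t i)
{
    unsigned long r = tasks[i].wcet;
    for (;;) {
        unsigned long next = tasks[i].wcet;
        for (size_t j = 0; j < i; j++) {
            /* each higher-priority task is released ceil(r / T_j) times within r */
            unsigned long releases = (r + tasks[j].period - 1) / tasks[j].period;
            next += releases * tasks[j].wcet;
        }
        if (next > tasks[i].period)
            return 0;   /* interference pushes it past its deadline */
        if (next == r)
            return r;   /* fixed point reached: worst-case response time */
        r = next;
    }
}
```

For a hypothetical task set ordered by priority with (C, T) = (1000, 3000), (2000, 8000) and (3000, 20000) us, response_time returns 1000, 3000 and 8000 us: the lowest-priority task needs only 3 ms of CPU time, yet its worst case is 8 ms because every higher-priority release that falls in its window delays it.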

Initial Allocation of Thread Priorities

Interrupt-Related Threads (Event Types)

First, let’s clarify the components of interrupt service:

- Interrupt Latency: the time from the interrupt request to the start of interrupt service (interrupt masking + interrupt nesting + stack push over the Sbus, including bus contention + vector table loading)

- Pre-Interrupt Part: the time-critical part that must be processed quickly inside the ISR, i.e., the interrupt service function itself

- Post-Interrupt Part: the remaining work, moved out of the ISR and processed in a user task

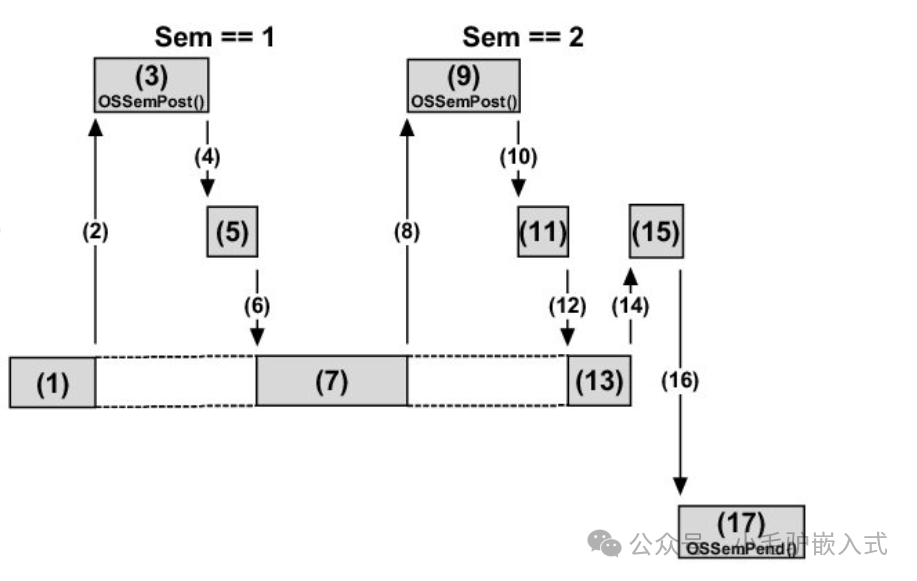

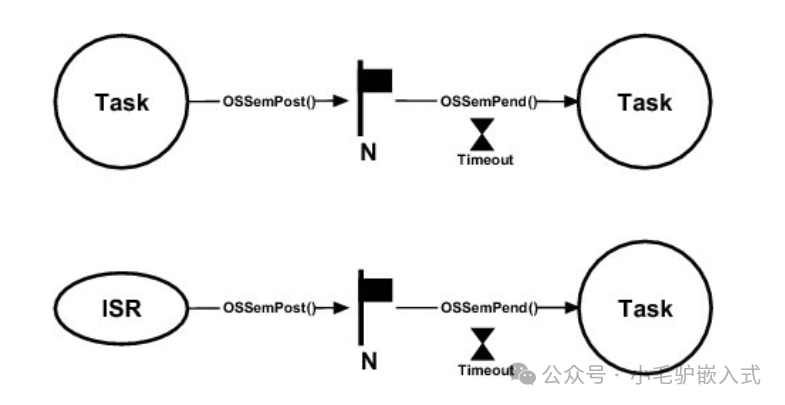
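Adding these parts together gives the end-to-end response to a single interrupt event. The sketch below is only a bookkeeping aid with hypothetical field names and no real measurements. The dispatch_wait_us term, the time the post-interrupt task waits before it is scheduled, is an assumption added here; it is the part most directly governed by that task's priority.

```c
#include <stdint.h>

/* Hypothetical worst-case budget for one interrupt-driven event (microseconds).
 * Real values must come from measurement on the target. */
typedef struct {
    uint32_t masking_us;       /* longest stretch with interrupts masked */
    uint32_t nesting_us;       /* higher-priority ISRs that may run first */
    uint32_t stacking_us;      /* stack push over the Sbus, incl. bus contention */
    uint32_t vector_fetch_us;  /* vector table loading */
    uint32_t isr_us;           /* pre-interrupt part: the ISR body */
    uint32_t dispatch_wait_us; /* wait until the post-interrupt task is scheduled */
    uint32_t task_us;          /* post-interrupt part: task execution */
} irq_budget_t;

/* Interrupt latency: from the request to the start of the ISR. */
uint32_t interrupt_latency_us(const irq_budget_t *b)
{
    return b->masking_us + b->nesting_us + b->stacking_us + b->vector_fetch_us;
}

/* From the request to completion of the post-interrupt work. */
uint32_t end_to_end_us(const irq_budget_t *b)
{
    return interrupt_latency_us(b) + b->isr_us + b->dispatch_wait_us + b->task_us;
}
```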

Interrupt-related threads perform the post-interrupt work. Interrupt events are generally urgent, so tasks that are functionally tied to an interrupt service should be given a higher priority. Such tasks are usually not scheduled periodically but are driven by event notification: once the interrupt has been serviced, a semaphore, queue, or task notification activates the task. For example, a UI interaction task driven by a communication interrupt must run at a high priority so that it responds quickly to operations on the UI: once a complete UART frame has been received, it is sent to the task through a queue, which activates the task.
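This deferred-processing pattern can be modelled on a host machine. In the minimal, single-threaded sketch below, a fixed-size FIFO stands in for the RTOS queue. FRAME_MAX, QUEUE_LEN, uart_rx_complete_isr and ui_task_drain are hypothetical names. On FreeRTOS, the ISR side would instead call xQueueSendFromISR() with its pxHigherPriorityTaskWoken flag and request a context switch on exit (portYIELD_FROM_ISR), and the task would block in xQueueReceive(). Those calls provide the synchronization that this model leaves out.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define FRAME_MAX 16 /* hypothetical maximum UART frame length */
#define QUEUE_LEN 4  /* hypothetical queue depth */

typedef struct {
    size_t len;
    unsigned char data[FRAME_MAX];
} frame_t;

/* Fixed-size FIFO standing in for an RTOS message queue (single-threaded model). */
typedef struct {
    frame_t slots[QUEUE_LEN];
    size_t head, tail, count;
} frame_queue_t;

void frame_queue_init(frame_queue_t *q) { q->head = q->tail = q->count = 0; }

static bool queue_send(frame_queue_t *q, const frame_t *f)
{
    if (q->count == QUEUE_LEN)
        return false;                 /* queue full: the frame is dropped */
    q->slots[q->tail] = *f;
    q->tail = (q->tail + 1) % QUEUE_LEN;
    q->count++;
    return true;
}

static bool queue_receive(frame_queue_t *q, frame_t *out)
{
    if (q->count == 0)
        return false;                 /* a real task would block here instead */
    *out = q->slots[q->head];
    q->head = (q->head + 1) % QUEUE_LEN;
    q->count--;
    return true;
}

/* Pre-interrupt part: called from the (hypothetical) UART ISR once a complete
 * frame has arrived. It only copies the frame and posts it; no parsing here. */
bool uart_rx_complete_isr(frame_queue_t *q, const unsigned char *buf, size_t len)
{
    frame_t f;
    if (len > FRAME_MAX)
        return false;
    f.len = len;
    memcpy(f.data, buf, len);
    return queue_send(q, &f);         /* FreeRTOS analogue: xQueueSendFromISR() */
}

/* Post-interrupt part: body of the UI task. Drains all pending frames and
 * returns the number of bytes handled (a stand-in for real UI processing). */
size_t ui_task_drain(frame_queue_t *q)
{
    frame_t f;
    size_t bytes = 0;
    while (queue_receive(q, &f))      /* FreeRTOS analogue: xQueueReceive() */
        bytes += f.len;
    return bytes;
}
```

Limiting the ISR to a copy and a post keeps interrupt masking and nesting short. The frame is parsed only in the task, so the task's priority decides how soon that happens.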

- Proximity Principle: The closer a function is to the interrupt service, the higher its thread priority, so that it responds to interrupts promptly.

- Next Proximity Principle: If one or a few tasks (typically one to three) sit between the function and the interrupt service, the function can be given a slightly lower priority. Resource-locking mechanisms are then needed to raise its priority at critical moments so that it can still handle secondary interrupt events in time.
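The article does not name the locking mechanism used to raise a task's priority at critical times. One common choice, used here for illustration, is the immediate priority-ceiling protocol. Each lock carries a ceiling equal to the highest priority of any thread that uses it, and a thread holding the lock runs at that ceiling until it releases the lock. FreeRTOS mutexes use the related priority-inheritance scheme instead. The minimal sketch below uses hypothetical types. It records the priority change but does not schedule anything, and it does not support nested locks.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Convention in this sketch: a larger number means a higher priority. */
typedef struct {
    int base_prio;      /* priority assigned at design time */
    int effective_prio; /* priority the scheduler currently uses */
} thread_t;

/* Resource lock using the immediate priority-ceiling protocol. */
typedef struct {
    int ceiling;        /* highest priority of any thread that uses the resource */
    thread_t *owner;    /* NULL while the lock is free */
    int saved_prio;     /* owner's priority before it took the lock */
} ceiling_lock_t;

bool ceiling_lock_acquire(ceiling_lock_t *l, thread_t *t)
{
    if (l->owner != NULL)
        return false;                   /* a real RTOS would block the caller */
    l->owner = t;
    l->saved_prio = t->effective_prio;
    if (l->ceiling > t->effective_prio)
        t->effective_prio = l->ceiling; /* lift the thread for the critical section */
    return true;
}

void ceiling_lock_release(ceiling_lock_t *l, thread_t *t)
{
    assert(l->owner == t);              /* only the owner may release */
    t->effective_prio = l->saved_prio;  /* drop back to the pre-lock priority */
    l->owner = NULL;
}
```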

Periodic Type Threads

Tasks that must run at a fixed frequency should take their performance indicators from the software requirements, system solution, and detailed design: throughput, maximum latency, average execution time, maximum execution time, and so on. For example:

- The refresh rate of the LED screen needs to be no less than 60 FPS and no more than 120 FPS

- The PID control algorithm for the motor needs to be calculated every 3 ms

- The watchdog safety thread must feed the watchdog at least once per second

- CPU usage must not exceed 50%
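Requirements like these translate directly into periodic-task parameters. A minimum rate gives the longest allowed period (period = 1 / rate). The sum of worst-case execution time divided by period gives the CPU utilization, which can be compared against a budget such as 50%. In the minimal sketch below, periodic_spec_t and the helper names are hypothetical, and the WCETs must come from measurement on the target.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical periodic-task spec derived from the requirements; times in us.
 * WCET values are placeholders and must come from measurement. */
typedef struct {
    const char *name;
    double period_us;
    double wcet_us;
} periodic_spec_t;

/* Longest period that still achieves a minimum rate (e.g. 60 FPS, 1 Hz). */
double period_for_min_rate_hz(double min_rate_hz)
{
    return 1e6 / min_rate_hz;
}

/* Total CPU utilization: sum of C_i / T_i. */
double total_utilization(const periodic_spec_t *s, size_t n)
{
    double u = 0.0;
    for (size_t i = 0; i < n; i++)
        u += s[i].wcet_us / s[i].period_us;
    return u;
}

/* Checks a requirement such as "CPU usage must not exceed 50%". */
bool within_cpu_budget(const periodic_spec_t *s, size_t n, double cap)
{
    return total_utilization(s, n) <= cap;
}
```

Assume hypothetical WCETs of 300 us for the 3 ms PID loop, 4000 us per LED frame at 60 FPS (period about 16667 us), and 50 us for the watchdog at 1 Hz. Utilization then comes to about 34%, inside the 50% cap. The 120 FPS ceiling gives the shortest allowed LED period, 1e6 / 120 ≈ 8333 us. In practice, the watchdog period would be chosen with some margin below 1 s.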

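Generally, tasks with shorter periods are assigned higher priorities (rate-monotonic scheduling). Under that assignment, with independent periodic tasks whose deadlines equal their periods, all tasks are guaranteed to complete on time as long as total CPU utilization does not exceed n(2^(1/n) - 1) for n tasks. This bound falls toward ln 2 ≈ 69.3% as n grows (refer to Liu and Layland, Scheduling Algorithms for Multiprogramming in a Hard-Real-Time Environment, 1973). The bound is sufficient, not necessary: a task set above it may still be schedulable.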
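The rate-monotonic rule and the Liu and Layland bound can be sketched as follows. rm_assign sorts tasks by period and assigns priorities (a larger number means a higher priority in this sketch). rm_passes_ll_test compares total utilization with n(2^(1/n) - 1). The names are hypothetical, and 2^(1/n) is computed by bisection only to avoid a libm dependency. The test is sufficient but not necessary, so a task set that fails it should be checked with an exact response-time analysis.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

typedef struct {
    int id;
    double period_us;
    double wcet_us;
    int prio;          /* assigned by rm_assign; larger = higher priority */
} rm_task_t;

static int by_period(const void *a, const void *b)
{
    const rm_task_t *x = a, *y = b;
    return (x->period_us > y->period_us) - (x->period_us < y->period_us);
}

/* Rate-monotonic assignment: shorter period gets higher priority (sorts in place). */
void rm_assign(rm_task_t *t, size_t n)
{
    qsort(t, n, sizeof *t, by_period);
    for (size_t i = 0; i < n; i++)
        t[i].prio = (int)(n - i);
}

/* x with x^n == 2, by bisection on [1, 2]; avoids a libm dependency. n >= 1. */
static double nth_root_of_2(size_t n)
{
    double lo = 1.0, hi = 2.0;
    for (int it = 0; it < 100; it++) {
        double mid = (lo + hi) / 2.0, p = 1.0;
        for (size_t k = 0; k < n; k++)
            p *= mid;
        if (p < 2.0) lo = mid; else hi = mid;
    }
    return (lo + hi) / 2.0;
}

/* Liu & Layland bound n(2^(1/n) - 1): 1.0 for n = 1, about 0.828 for n = 2,
 * falling toward ln 2 (about 0.693) as n grows. */
double ll_bound(size_t n)
{
    return (double)n * (nth_root_of_2(n) - 1.0);
}

/* Sufficient (not necessary) schedulability test under rate-monotonic priorities. */
bool rm_passes_ll_test(const rm_task_t *t, size_t n)
{
    double u = 0.0;
    for (size_t i = 0; i < n; i++)
        u += t[i].wcet_us / t[i].period_us;
    return u <= ll_bound(n);
}
```

For three tasks the bound is about 78%, so a set at roughly 34% utilization passes. The 69.3% figure is the conservative limit for large task counts.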

Subsequent Adjustments of Thread Priorities

When should thread priorities actually be changed?

Key Principles:

- Preempt only when latency requirements are unmet: Use preemption only when a thread's delay would exceed its latency or throughput requirement and preemption is truly necessary. In all other cases, do not rely on task preemption. For example, suppose a hard real-time deadline must be met, and threads A and D have similar workloads, but A must run as soon as it receives a message. In that case, A's priority must be higher than D's.

- Minimize distinct priorities: Use as few distinct priority levels as possible, and reserve a unique priority for situations that truly require preemption.

- Use equal priority when in doubt: If the relative priority of two or more tasks cannot be determined, give them equal priority and let time slicing run them in turn. This ensures fair execution and prevents task starvation. It also avoids unnecessary preemption and the context switches it causes. Note, however, that this removes only unnecessary overhead, not overhead in general. The total RTOS overhead can actually be higher than with distinct priorities: because no task is starved, every ready task keeps getting its turn, and the scheduler switches more often.

- One change affects all: Once thread priorities have been tuned, adding a new thread can disrupt the priority order of every other thread. A single change can affect the whole system.
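These trade-offs can be illustrated with a toy tick-level model of threads A and D from the first example. The code is hypothetical: it is not the scheduler of any real RTOS, and it ignores the cost of each context switch. D always has work, and A becomes ready when its message arrives. The model reports how long A waits, how many context switches occur, and the longest stretch during which D is kept off the CPU. It runs once with A at a higher priority and once with both threads at equal priority under time slicing.

```c
#include <stdbool.h>

enum { TASK_D = 0, TASK_A = 1 };

typedef struct {
    int a_latency_ticks;  /* ticks from A becoming ready until it first runs (-1: never) */
    int context_switches; /* task switches in the simulated window */
    int d_max_wait_ticks; /* longest stretch D was kept off the CPU */
} sim_result_t;

/* D always has work; A becomes ready at arrival_tick and needs work_ticks of CPU.
 * a_higher: A has strictly higher priority and preempts D at once.
 * otherwise: A and D share one priority and are time-sliced every slice_ticks. */
sim_result_t simulate(bool a_higher, int slice_ticks, int arrival_tick,
                      int work_ticks, int total_ticks)
{
    sim_result_t r = { -1, 0, 0 };
    int running = TASK_D, slice_used = 0, a_left = work_ticks, d_wait = 0;

    for (int t = 0; t < total_ticks; t++) {
        bool a_ready = t >= arrival_tick && a_left > 0;
        int next = running;

        if (a_higher) {
            next = a_ready ? TASK_A : TASK_D;   /* preemptive priority */
        } else if (running == TASK_A && !a_ready) {
            next = TASK_D;                      /* A finished its work and blocks */
        } else if (slice_used >= slice_ticks) {
            /* slice expired: rotate to the other ready task, if any */
            next = (running == TASK_D && a_ready) ? TASK_A : TASK_D;
            slice_used = 0;
        }

        if (next != running) {
            r.context_switches++;
            running = next;
            slice_used = 0;
        }

        if (running == TASK_A) {
            if (r.a_latency_ticks < 0)
                r.a_latency_ticks = t - arrival_tick;
            a_left--;
            if (++d_wait > r.d_max_wait_ticks)  /* D is waiting while A runs */
                r.d_max_wait_ticks = d_wait;
        } else {
            d_wait = 0;
        }
        slice_used++;
    }
    return r;
}
```

Consider a 4-tick slice, with A arriving at tick 5 and needing 10 ticks of CPU. At higher priority, A starts at once (latency 0, 2 switches), but D is held off for 10 ticks. At equal priority, A waits 3 ticks and the run costs 6 switches, yet D is never held off for more than 4 ticks.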

Visualizing the system's threads, for example with SEGGER SystemView, is a good way to optimize priorities; please refer to the SystemView porting and usage tutorial.