📌 1. Research Background and Challenges

1.1 Importance of Indoor Positioning

- Application Scenarios: Smart homes, AR/VR, robotic navigation, emergency rescue, etc.

- Market Value: The global market was projected to reach $11.9 billion by 2024 and to keep growing.

1.2 Advantages and Challenges of Wi-Fi RSS Fingerprint Positioning

- Advantages: No additional hardware required, good compatibility, low deployment costs.

- Challenges:

  - Environmental Noise: RSS fluctuations caused by walls, furniture, and human obstructions.

  - Device Heterogeneity: Significant differences in Wi-Fi chips, antennas, and firmware across different phones lead to substantial RSS variations at the same location.

1.3 Limitations of Existing Methods

| Method Type | Representative Models | Limitations |
| --- | --- | --- |
| Traditional ML | KNN, SVM, DNN, CNN | Assumes RSS noise has a Euclidean structure, ignores spatial topology, and generalizes poorly |
| Graph Neural Networks (GNN) | GNN-KNN, GCN-ED, GCLoc | Suffers from the “GNN blind spot” problem, where information transmission fails in high-dimensional graphs; cannot model non-Euclidean noise structures |
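
To make the Euclidean assumption of the traditional baselines concrete, here is a minimal sketch of KNN fingerprint positioning. The function name `knn_locate`, the RSS values, and the reference-point coordinates are all hypothetical illustrations, not data or code from the paper.

```python
import numpy as np

def knn_locate(query_rss, fingerprints, positions, k=3):
    """Estimate a position as the mean of the k nearest reference points.

    Nearness is plain Euclidean distance in RSS space, i.e. every AP
    contributes to the distance in the same uniform way.
    """
    dists = np.linalg.norm(fingerprints - query_rss, axis=1)
    nearest = np.argsort(dists)[:k]
    return positions[nearest].mean(axis=0)

# Hypothetical database: 4 reference points (RPs), 3 access points (APs), RSS in dBm.
fingerprints = np.array([
    [-40.0, -70.0, -90.0],
    [-50.0, -60.0, -85.0],
    [-70.0, -50.0, -70.0],
    [-90.0, -45.0, -55.0],
])
# Hypothetical RP coordinates in meters, laid out along a corridor.
positions = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]])

query = np.array([-48.0, -62.0, -86.0])  # a scan taken near the second RP

est_k1 = knn_locate(query, fingerprints, positions, k=1)
est_k2 = knn_locate(query, fingerprints, positions, k=2)
print(est_k1, est_k2)
```

Because the distance treats all APs identically, a device-specific RSS offset or a single obstructed AP shifts every distance at once, which is the kind of weakness the table attributes to these methods.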

📌 2. Motivation and Goals for the GATE Framework

To address the following three major issues, this paper proposes the GATE framework:

| Issue | Description | GATE Solution |
| --- | --- | --- |
| Environmental Noise | Irregular RSS fluctuations, non-uniform noise distribution | AHV retains non-Euclidean structure |
| Device Heterogeneity | Significant measurement differences across devices | MDHV aggregates contextual information to enhance robustness |
| GNN Blind Spot | Information transmission failure in high-dimensional graphs | RTEC dynamically constructs edges to avoid redundant connections |

📌 3. Structure and Core Innovations of the GATE Framework

3.1 Framework Overview

- Two-Stage Process:

  - Offline Stage: Construct the graph and train the GCN.

  - Online Stage: RTEC dynamically connects new nodes to the graph for real-time positioning.

- Three Core Components:

  - AHV (Attention Hyperspace Vector): Retains the non-uniform influence of each AP feature, modeling non-Euclidean structures.

  - MDHV (Multi-Dimensional Hyperspace Vector): Fuses raw fingerprints, contextual information (MSG), and feature-level attention (AHV).

  - RTEC (Real-Time Edge Construction): Dynamically selects neighboring nodes in the online stage, avoiding the redundant or erroneous connections of a static graph.
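
The fusion of raw fingerprint, MSG, and AHV into one node vector can be sketched roughly as follows. This is only an illustration under stated assumptions: the scoring functions (scaled dot product for node-level attention, negative absolute difference for feature-level attention), the concatenation, and the name `build_mdhv` are stand-ins chosen here, not the paper's exact expressions.

```python
import numpy as np

def softmax(z, axis=0):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def build_mdhv(x, neighbors):
    """Fuse one node's fingerprint with context from its neighbors.

    x:         (F,)   normalized RSS fingerprint of the node
    neighbors: (K, F) fingerprints of its K neighbors
    returns:   (3F,)  concatenation [raw fingerprint, MSG, AHV]
    """
    # Node-level attention: one scalar weight per neighbor (assumed scoring).
    node_scores = neighbors @ x / np.sqrt(x.size)
    node_weights = softmax(node_scores)            # (K,)
    msg = node_weights @ neighbors                 # (F,) weighted neighbor average

    # Feature-level attention: a separate weight per neighbor and per AP,
    # so each AP keeps its own, non-uniform influence (assumed scoring).
    feat_scores = -np.abs(neighbors - x)           # (K, F)
    feat_weights = softmax(feat_scores, axis=0)    # each column sums to 1
    ahv = (feat_weights * neighbors).sum(axis=0)   # (F,)

    return np.concatenate([x, msg, ahv])

# Hypothetical normalized fingerprints over 3 APs.
x = np.array([0.8, 0.3, 0.1])
neighbors = np.array([
    [0.7, 0.4, 0.1],
    [0.9, 0.2, 0.2],
    [0.2, 0.8, 0.6],
])
mdhv = build_mdhv(x, neighbors)
print(mdhv.shape)
```

The point of the sketch is the contrast between the two attention levels: MSG collapses each neighbor to a single weight, while AHV keeps a full weight matrix over neighbors and APs.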

3.2 Key Technical Details

| Module |

Function |

Mathematical Expression |

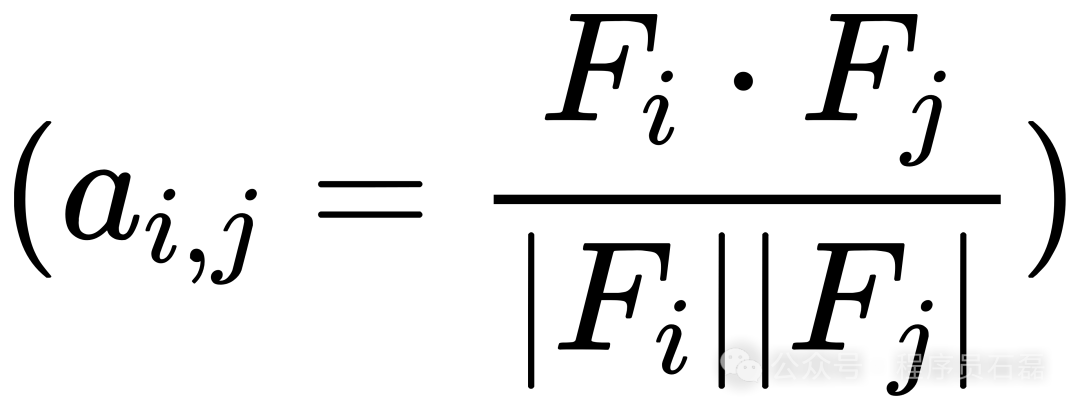

| Attention Mechanism |

Calculates similarity between nodes |

|

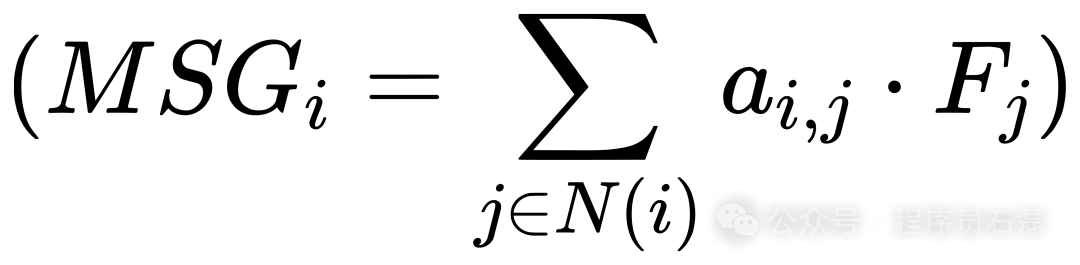

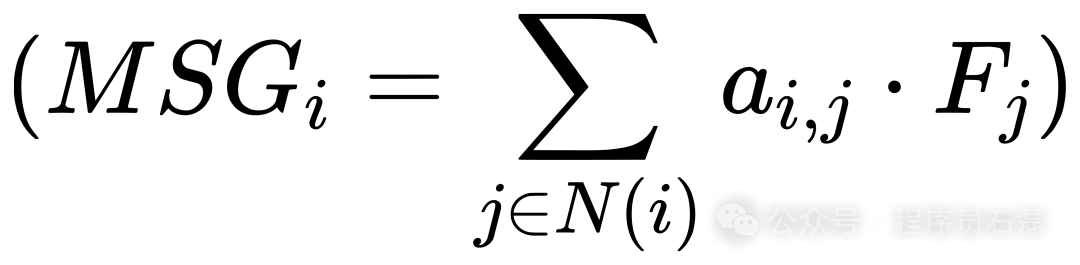

| MSG Vector |

Aggregates neighbor features |

|

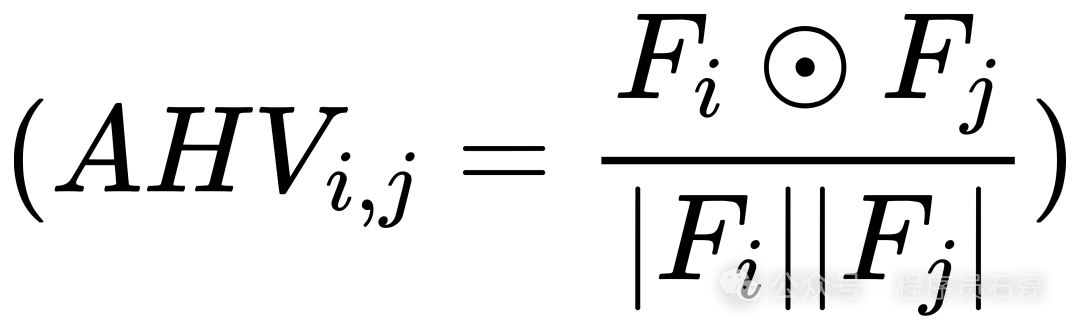

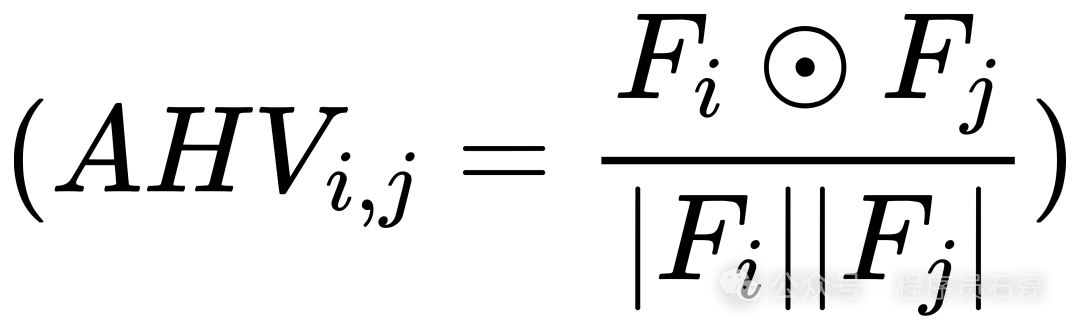

| AHV Vector |

Feature-level attention |

|

| RTEC |

Edge construction in the online stage |

Calculates attention similarly, selects Top-K neighboring nodes |
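
The RTEC step (score a new fingerprint against existing nodes, then keep only the Top-K) might look like the sketch below. Cosine similarity is used here as an assumed stand-in for the paper's attention score, and `rtec_connect` and the sample data are hypothetical.

```python
import numpy as np

def rtec_connect(query, node_features, k):
    """Attach a new node to its k most similar existing nodes.

    query:         (F,)   fingerprint observed online
    node_features: (N, F) fingerprints of the nodes already in the graph
    returns:       list of (new_node_id, neighbor_id) edges and their
                   softmax-normalized weights
    """
    q = query / np.linalg.norm(query)
    n = node_features / np.linalg.norm(node_features, axis=1, keepdims=True)
    scores = n @ q                                  # cosine similarity per node

    top_k = np.argsort(-scores)[:k]                 # indices of the k best scores
    weights = np.exp(scores[top_k] - scores[top_k].max())
    weights /= weights.sum()                        # normalize over kept edges only

    new_id = node_features.shape[0]                 # the new node gets the next id
    edges = [(new_id, int(j)) for j in top_k]
    return edges, weights

# Hypothetical graph with 5 nodes and 4 APs (normalized RSS).
nodes = np.array([
    [0.9, 0.1, 0.1, 0.2],
    [0.8, 0.3, 0.1, 0.1],
    [0.1, 0.9, 0.7, 0.2],
    [0.2, 0.8, 0.9, 0.1],
    [0.1, 0.2, 0.3, 0.9],
])
query = np.array([0.15, 0.85, 0.8, 0.15])

edges, weights = rtec_connect(query, nodes, k=2)
print(edges, weights)
```

Limiting the new node to K edges, rather than connecting it to every node above a fixed threshold, is what keeps the number of connections bounded as AP density grows.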

📌 4. Experimental Validation and Performance Evaluation

4.1 Experimental Setup

- 5 Buildings: Path lengths of 48–88 meters, RP density of 48–88, AP density of 78–339.

- 7 Types of Phones: Including Moto Z2, Pixel 4a, OnePlus 3T, covering high/mid/low-end devices.

- Training Configuration: 5 samples collected per RP, GCN compression rate H=50%, number of neighbors NB=10.
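
For readers unfamiliar with GCNs, the sketch below shows one standard GCN propagation step, ReLU(D^-1/2 (A + I) D^-1/2 X W). Reading H=50% as "the hidden width is half the number of AP features" is an assumption made here for illustration, and the chain graph, sizes, and random weights are hypothetical.

```python
import numpy as np

def gcn_layer(adj, x, weight):
    """One GCN propagation step with symmetric degree normalization."""
    a_hat = adj + np.eye(adj.shape[0])               # add self-loops
    deg = a_hat.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ x @ weight, 0.0)      # ReLU

rng = np.random.default_rng(0)
num_nodes, num_aps = 6, 8
compression = 0.5                                    # H = 50% (assumed meaning)
hidden = int(num_aps * compression)                  # 8 features -> 4

# Hypothetical chain graph: consecutive RPs along a path are connected.
adj = np.zeros((num_nodes, num_nodes))
for i in range(num_nodes - 1):
    adj[i, i + 1] = adj[i + 1, i] = 1.0

x = rng.uniform(0.0, 1.0, size=(num_nodes, num_aps))       # normalized RSS
weight = rng.normal(scale=0.1, size=(num_aps, hidden))     # untrained weights

h = gcn_layer(adj, x, weight)
print(h.shape)
```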

4.2 Key Experimental Results

✅ 1. Overall Performance Comparison (Figure 12)

| Model | Average Error (m) | Worst Error (m) |
| --- | --- | --- |
| GATE-Full | 1.98 | 3.5 |
| GCLoc [31] | 3.19 | 6.3 |
| KNN [11] | 9.4 | 15.2 |

- GATE outperforms all baselines:

  - Average error reduced by 1.6×–4.72×

  - Worst error reduced by 1.85×–4.57×

✅ 2. Robustness under High AP Density (Figure 5.5)

- In Building 1 (339 APs), GATE maintains an error of <2 meters at NB=60%.

- Traditional GNNs experience performance collapse at NB>20%.

✅ 3. Device Heterogeneity Testing (Figure 9)

| Number of Training Samples per RP | Average Error (m) | Device-to-Device Error Difference (m) |
| --- | --- | --- |
| 1 | 3.84 | 1.49 |
| 5 | 1.98 | 0.25 |

- Increasing training samples significantly enhances cross-device robustness.

✅ 4. Component Ablation Experiment (Figure 10)

| Model | Average Error (m) | Worst Error (m) | Device Difference (m) |
| --- | --- | --- | --- |
| GATE-Full | 1.98 | 3.2 | 0.25 |
| GATE-No-AHV | 3.45 | 7.15 | 1.1 |
| GATE-No-MSG | 2.75 | 5.8 | 2.17 |
| GATE-No-MDHV | 4.75 | 8.3 | 2.25 |

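- Removing MDHV hurts the most (average error rises from 1.98 m to 4.75 m), and removing AHV or MSG also degrades accuracy, so no component can be omitted.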

✅ 5. Real-Time Deployment Performance (Table 1)

| Model | Latency (ms) | Model Size (KB) | Energy Consumption (J/s) |
| --- | --- | --- | --- |
| GATE-Full | 871 | 604 | 0.543 |
| GraphLoc | 1034 | 2234 | 0.75 |
| STELLAR | 1554 | 8024 | 1.20 |

- GATE has a significant advantage in mobile deployment: small model size, low latency, and low energy consumption.
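
To put the deployment numbers in relative terms, the short script below divides each baseline's figures by GATE-Full's. The values are copied from the deployment table in this section; the dictionary layout and the helper name `relative_to` are illustrative choices.

```python
# Deployment figures from the table: latency (ms), model size (KB), energy (J/s).
results = {
    "GATE-Full": {"latency_ms": 871, "size_kb": 604, "energy_j_per_s": 0.543},
    "GraphLoc": {"latency_ms": 1034, "size_kb": 2234, "energy_j_per_s": 0.75},
    "STELLAR": {"latency_ms": 1554, "size_kb": 8024, "energy_j_per_s": 1.20},
}

def relative_to(results, reference="GATE-Full"):
    """Return each baseline's metrics as multiples of the reference model's."""
    ref = results[reference]
    return {
        name: {metric: round(vals[metric] / ref[metric], 2) for metric in ref}
        for name, vals in results.items()
        if name != reference
    }

ratios = relative_to(results)
for name, vals in ratios.items():
    print(name, vals)
```

On these figures the baselines are roughly 1.2× to 1.8× slower, 3.7× to 13.3× larger, and draw about 1.4× to 2.2× more energy than GATE-Full.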

📌 5. Conclusion and Summary of Contributions

| Contribution | Description |
| --- | --- |
| 1. Non-Euclidean Modeling | Models the non-uniform noise structure of RSS through AHV, enhancing robustness |
| 2. Mitigation of GNN Blind Spots | Introduces MDHV and RTEC to address information transmission failure in high-dimensional graphs |
| 3. Real-Time Dynamic Graph Construction | RTEC supports dynamic edge construction in the online phase, adapting to environmental changes |
| 4. Strong Cross-Device Generalization Ability | Error difference of about 0.25 m across 7 types of phones, demonstrating strong adaptability |
| 5. Efficient Deployment | Model size of only 604 KB, latency <1 s, energy consumption <0.6 J/s, suitable for mobile devices |

✅ One-Sentence Summary

By integrating non-Euclidean structure modeling, dynamic graph construction, and feature-level attention, GATE is presented as the first framework to achieve robust, real-time, cross-device, high-precision Wi-Fi fingerprint positioning on mobile devices, significantly outperforming existing methods.

Paper

https://arxiv.org/pdf/2507.11053