Reported by Electronic Enthusiasts (Author: Li Wanwan)

Low power design for edge AI chips is crucial for their deployment in resource-constrained scenarios such as mobile devices and IoT terminals. In power-sensitive applications like IoT, wearables, and smart homes, low power design directly determines the device’s battery life, deployment costs, and user experience.

Why do edge AI chips require low power design?

From the perspective of application scenarios, the first constraint is power supply. Devices such as smartwatches, wireless sensors, and wearables rely on batteries, so chip power consumption directly determines battery life. In self-powered systems that harvest solar or RF energy, chip power consumption must stay below the energy harvesting rate; otherwise, the device cannot operate continuously.

Secondly, deployment environments can be harsh. In scenarios like industrial monitoring and agricultural IoT, devices may be placed in areas where battery replacement is difficult or wiring is impossible. For example, bridge structure monitoring sensors need to operate continuously for several years, requiring power consumption to be below 1 mW. Excessive power consumption also causes chip heating, which affects stability and lifespan. In automotive electronics, for instance, chips must meet the AEC-Q100 standard, and low power design reduces the risk of failure due to thermal stress.

From a technological development perspective, firstly, the energy efficiency ratio (TOPS/W) is a core metric: edge AI chips need to provide high computing power within a limited power budget. For example, Tesla’s FSD chip achieves 144 TOPS of computing power at 72 W, an energy efficiency ratio of 2 TOPS/W, meeting the real-time requirements of autonomous driving. Low power design also helps break through the “power wall” limitation. Traditional GPUs are difficult to use in mobile devices because of their high power consumption (>20 W), while dedicated edge AI chips can compress power consumption to hundreds of mW.

Secondly, heat dissipation and packaging costs are constraints. High-power chips require heat sinks or fans, which increase size and cost. For example, desktop GPUs can consume up to 300 W and require active cooling, whereas edge device chips need to keep power consumption below 5 W so that passive cooling is sufficient. Low power design can also simplify packaging requirements. For instance, edge AI chips using Chiplet technology can reduce interconnect power consumption through 2.5D packaging while decreasing the need for heat dissipation materials.
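The arithmetic behind these constraints can be sketched in a few lines. The example below is only an illustration: the function names are hypothetical, the 225 mAh / 3 V coin cell is an assumed battery rather than a figure from the article, and the runtime estimate ignores self-discharge and converter losses.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Energy efficiency ratio: tera-operations per second per watt."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return tops / watts


def battery_life_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Idealized runtime: stored energy (mWh) divided by average draw (mW)."""
    energy_mwh = capacity_mah * voltage_v
    return energy_mwh / avg_power_mw


def can_run_continuously(avg_power_mw: float, harvest_rate_mw: float) -> bool:
    """A self-powered node is sustainable only if it draws less than it harvests."""
    return avg_power_mw < harvest_rate_mw


# Figures quoted in the text: 144 TOPS at 72 W.
print(tops_per_watt(144, 72), "TOPS/W")

# Assumed (hypothetical) 225 mAh, 3 V coin cell feeding a 1 mW sensor node.
hours = battery_life_hours(225, 3.0, 1.0)
print(hours / 24, "days on the assumed coin cell")

# Assumed harvesting rate of 0.8 mW versus a 1 mW average draw.
print(can_run_continuously(1.0, 0.8))
```

Under these assumptions a 1 mW load drains a small coin cell in about four weeks, which suggests why multi-year sensors need average power well below that level, a larger battery, or energy harvesting.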

Low Power Design Methods for Edge AI Chips

From the perspective of hardware architecture optimization, dedicated accelerators like NPUs and DPUs use circuits specialized for AI computations (such as matrix multiplication) to improve energy efficiency. For example, Google’s TPU uses systolic arrays to avoid the redundant operations of general-purpose computing units. Heterogeneous computing architectures combine CPU (control), GPU (parallel computing), and NPU (AI inference) modules and dynamically allocate computing loads based on task type. Lightweight tasks are handled by the CPU, while complex models are assigned to the NPU to avoid wasting resources.

There are also innovative architectural design directions. Compute-in-memory reduces data movement by performing computations inside or near the storage units, lowering the power spent on memory access; technical paths include in-memory computing and near-memory computing. Additionally, event-driven architectures using Spiking Neural Networks (SNN) or event camera sensors trigger computations only when data changes, avoiding power spent on processing unchanged data.

From the perspective of algorithm and model optimization, model compression covers several techniques. Pruning removes redundant neurons or weights (sparsification) to reduce computational load; quantization converts 32-bit floating-point models to 8-bit integers, decreasing multiplier and memory access energy consumption; knowledge distillation uses a large model to train a lightweight student model, largely maintaining accuracy while lowering computational demands.

Lightweight network designs use structures like MobileNet (depthwise separable convolutions) and EfficientNet (compound scaling) to balance accuracy and computational load. Additionally, dynamic inference sets exit points during inference; if the prediction at an early layer is already sufficiently confident, computation can be terminated early.
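To make the quantization step mentioned above more concrete, here is a minimal sketch of symmetric per-tensor quantization from floating-point values to 8-bit integers. It is an illustration under simplifying assumptions, not the scheme of any particular chip or framework: the function names and the sample weights are hypothetical, and production toolchains typically add calibration, per-channel scales, and zero points.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to the int8 range [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One shared scale maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate floating-point values."""
    return [x * scale for x in q]


# Hypothetical weights, chosen only for illustration.
weights = [0.52, -1.27, 0.003, 0.9, -0.41]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 codes:", q)
print("scale:", scale)
print("worst-case rounding error:", max_error)
```

Each stored value shrinks from 32 bits to 8 bits, and the rounding error is bounded by half the scale, which is the accuracy cost traded for cheaper multipliers and fewer memory accesses.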
Approximate computing allows non-critical computation results to contain errors, which simplifies operations (e.g., low-precision floating-point, rounding strategies).

From the perspective of dynamic power management, DVFS (Dynamic Voltage and Frequency Scaling) adjusts voltage and frequency in real time based on load, and idle periods can be spent in low power modes (like the C6 sleep state). Multi-power domain partitioning divides the chip into multiple power domains that can be activated or deactivated on demand (e.g., the camera module is powered only when motion is detected). Adaptive power strategies add load prediction (like an LSTM predicting task cycles) to adjust power states dynamically.

There are also aspects of software and system collaboration. Compiler optimization reduces computation cycles and energy consumption through instruction-level parallelism (ILP) optimization and memory access merging. Operating system scheduling manages power at the task level, prioritizing low-power cores for simple tasks and waking high-performance cores during high loads. Application layer strategies, such as wake word detection (like Alexa’s Always-On mode), keep only a lightweight model running and wake the main model upon detecting a keyword.
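The DVFS idea described above can be sketched with the classic CMOS dynamic power estimate P = C · V² · f. Everything numeric here is an assumption made for illustration: the operating point table, the effective capacitance, and the function names are hypothetical and do not describe any real chip or operating system governor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    freq_mhz: float
    voltage_v: float


# Hypothetical voltage/frequency table, sorted from slowest to fastest.
OPP_TABLE = [
    OperatingPoint(100, 0.6),
    OperatingPoint(400, 0.8),
    OperatingPoint(800, 1.0),
]

C_EFF_NF = 1.0  # assumed effective switched capacitance, in nanofarads


def dynamic_power_mw(op: OperatingPoint, c_eff_nf: float = C_EFF_NF) -> float:
    """Dynamic power estimate P = C * V^2 * f (nF * V^2 * MHz yields mW)."""
    return c_eff_nf * op.voltage_v ** 2 * op.freq_mhz


def select_operating_point(required_mhz: float) -> OperatingPoint:
    """Pick the slowest operating point that still meets the demanded frequency."""
    for op in OPP_TABLE:
        if op.freq_mhz >= required_mhz:
            return op
    return OPP_TABLE[-1]  # demand exceeds the table: saturate at the fastest point


for demand_mhz in (50, 300, 750):
    op = select_operating_point(demand_mhz)
    print(demand_mhz, "MHz demand ->", op, dynamic_power_mw(op), "mW")
```

Because voltage enters the estimate squared, running a light load at the lowest point costs far less than proportionally: in this assumed table, the slowest point runs at one eighth of the top frequency but draws less than one twentieth of the power.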

Conclusion

Low power design for edge AI chips is a necessary condition for their deployment in real-world scenarios, directly determining the device’s usability, economy, and sustainability. Through multi-dimensional collaboration in hardware architecture, algorithm optimization, and process technology, edge AI chips can operate at milliwatt or even microwatt power levels, meeting core requirements for battery power, real-time response, and low-cost deployment.


More Hot Articles to Read

-

Lenovo’s self-developed 5nm chip?! Suspected to be equipped with its own tablet

-

Price wars, can gross margins still rise? These power semiconductor manufacturers made a fortune in the first quarter

-

HarmonyOS computer! Explosive debut!

-

Transformation! Domestic automotive chips are targeting the high-end market, with these products aiming for international standards

-

China’s AI market is expected to reach $50 billion! Jensen Huang: Missing the Chinese market will result in huge losses

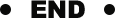

Click to Follow and Star Us

Set us as a star to not miss any updates!