Paper Title: Optimising TinyML with Quantization and Distillation of Transformer and Mamba Models for Indoor Localisation on Edge Devices

Publication Date: December 2024

Authors: Thanaphon Suwannaphong, Ferdian Jovan, Ian Craddock, Ryan McConville

Affiliations: University of Bristol, University of Aberdeen

Original Link:

https://arxiv.org/pdf/2412.09289

Open Source Code and Dataset Link:

https://github.com/AloeUoB/tinyML_indoor_localisation

Introduction

Typically, accurate indoor localization systems rely on large machine learning models deployed on a central remote server, which processes data collected from low-power, battery-operated edge devices such as wearables. This requires transmitting raw data off the device, leading to increased latency, potential privacy issues, and higher overall operational costs. Advances in TinyML provide a means to run advanced machine learning models directly on resource-constrained edge devices. Processing data on-device can improve battery life, enhance privacy, reduce latency, and lower operational costs, which is crucial for common applications such as health monitoring.

As the healthcare industry increasingly relies on digital data to optimize patient outcomes, the ability to accurately track and monitor individuals in indoor environments becomes essential. Accurate indoor localization can enhance patient monitoring, improve safety, and support medical decision-making and interventions by ensuring timely and accurate data.

By accurately determining the location of patients, healthcare providers can monitor the elderly or those with cognitive impairments to prevent them from getting lost and ensure timely assistance in emergencies. This technology also aids in efficient asset tracking, optimizing the use of medical equipment and reducing operational inefficiencies. Furthermore, indoor localization supports personalized medicine by customizing interventions and treatments through detailed movement pattern collection.

Background and Related Work

Conventional indoor localization systems run large machine learning models on a central remote server that processes data collected from low-power devices such as wearables. This design incurs data transmission latency, raises privacy concerns, and increases operational costs. TinyML addresses these issues by running machine learning models directly on resource-constrained edge devices, which improves battery life, enhances privacy, reduces latency, and lowers operational costs. Running complex models on such devices remains challenging, however: low-power microcontrollers (MCUs) have limited memory, processing power, and battery life, which restricts the size and complexity of deployable models.

This paper focuses on using model compression techniques such as quantization and knowledge distillation to significantly reduce model size while maintaining high predictive performance. Through these techniques, advanced machine learning models can be deployed on low-power MCUs for efficient indoor localization.
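To make the knowledge distillation idea concrete, here is a minimal sketch of a commonly used distillation loss: a small student model is trained to match the softened output distribution of a larger teacher while still fitting the true label. This summary does not state the exact loss used in the paper, so the formulation, the function names, and the `temperature` and `alpha` values below are assumptions chosen for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target KL term and a hard-label cross-entropy term.

    temperature and alpha are illustrative defaults, not values from the paper.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Standard cross-entropy against the ground-truth room label
    ce = -math.log(softmax(student_logits)[true_label])
    return alpha * (temperature ** 2) * kl + (1 - alpha) * ce

# Hypothetical logits over three rooms; the true room is index 0.
teacher = [4.0, 1.0, 0.0]
print(distillation_loss([3.5, 1.2, 0.1], teacher, true_label=0))
```

A student whose logits agree with the teacher incurs no KL penalty, so the loss reduces to the weighted cross-entropy term.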

Research Objectives

The main objective of this paper is to develop an efficient and compact indoor localization model that can operate within the memory constraints of low-power MCUs. Additionally, this paper aims to optimize model size through model compression techniques such as quantization and knowledge distillation and evaluate their performance. The ultimate goal is to deploy advanced indoor localization models on resource-constrained devices, thereby improving system robustness and energy efficiency in practical applications.

Core Design

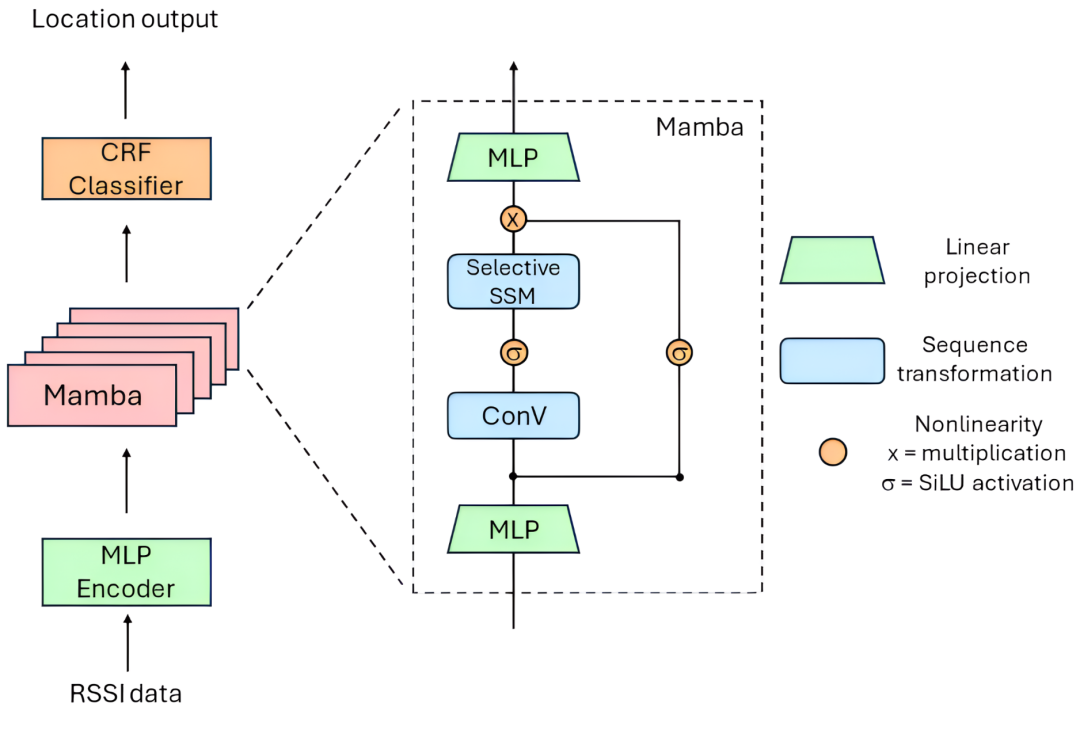

This paper proposes an indoor localization method based on Transformer and Mamba models, using model compression techniques to optimize their size and performance. The Transformer is widely used because of its strong performance in sequence modeling tasks, but its computational complexity makes it difficult to deploy directly on resource-constrained devices. To address this, the paper applies quantization, converting model parameters from 32-bit floating-point numbers to lower-precision integers, which significantly reduces model size and computational requirements. In addition to the Transformer model, the paper introduces a Mamba-based architecture as an alternative. Mamba is a structured state space model (SSM) that combines the advantages of RNNs and CNNs to handle sequence modeling tasks efficiently. Compared with the Transformer, the Mamba model is more compact and better suited to environments with tighter memory and computational resources. By combining and optimizing these two models, the paper demonstrates the feasibility of efficient indoor localization within 64KB and 32KB RAM constraints.
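The quantization step described above can be sketched as follows. This is a minimal example of symmetric per-tensor int8 quantization, which is only one of several possible schemes; the summary does not say which scheme, bit width, or calibration procedure the paper uses, so the function names and sample weights here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one float scale per tensor
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map stored integers back to approximate float values."""
    return [v * scale for v in q]

# Hypothetical weights, standing in for one layer's parameters.
weights = [0.82, -1.27, 0.003, 0.45, -0.6]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

# Storage drops from 4 bytes (float32) to 1 byte (int8) per weight.
float32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights)
print(q, scale, max_error, float32_bytes, int8_bytes)
```

The fourfold reduction applies to weight storage only; the total memory footprint of a deployed model also depends on activations and runtime buffers.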

Main Innovations

Innovation 1: This paper is the first to apply the Mamba architecture to indoor localization tasks, demonstrating its good performance under a 32KB memory constraint without model compression.

Innovation 2: This paper systematically evaluates the effects of quantization and knowledge distillation techniques on indoor localization model compression and provides hardware-constrained model selection benchmarks.

Innovation 3: Through experiments, this paper demonstrates the feasibility of deploying advanced indoor localization models on low-power MCUs and analyzes the performance of different models in practical applications.
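The Mamba architecture named in Innovation 1 builds on state space models, which the Core Design section describes as combining the advantages of RNNs and CNNs. The sketch below illustrates that claim for a plain linear, time-invariant SSM with a scalar state: the same output can be computed step by step like an RNN or as a convolution like a CNN. It is not Mamba itself, whose selective mechanism makes the parameters input-dependent; the parameters `a`, `b`, `c` and the input sequence are hypothetical.

```python
def ssm_recurrent(x, a, b, c):
    """RNN-style view: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t, with h starting at 0."""
    h = 0.0
    ys = []
    for x_t in x:
        h = a * h + b * x_t
        ys.append(c * h)
    return ys

def ssm_convolutional(x, a, b, c):
    """CNN-style view: y_t = sum_k K[k] * x[t-k], with kernel K[k] = c * a**k * b."""
    kernel = [c * (a ** k) * b for k in range(len(x))]
    return [sum(kernel[k] * x[t - k] for k in range(t + 1))
            for t in range(len(x))]

# Hypothetical signal-strength readings and SSM parameters.
signal = [1.0, 0.5, -0.25, 2.0, 0.0]
y_rec = ssm_recurrent(signal, a=0.9, b=0.5, c=2.0)
y_conv = ssm_convolutional(signal, a=0.9, b=0.5, c=2.0)
print(y_rec)
print(y_conv)
```

The recurrent form needs only the current state at inference time, which is one reason this model family is attractive when RAM is limited.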

Experimental Results

The experiments evaluate the models in home and large-building environments. On the home dataset, the quantized transformer-based MDCSA model performs best under the 64KB RAM constraint, while the smaller Mamba model is suitable for the stricter 32KB constraint. On the building dataset, the Mamba model excels under the 64KB RAM constraint, significantly outperforming the other models. The key experimental results are summarized below:

| Model Name | Number of Parameters | F1 Score (%) | Accuracy (%) | Model Size (KB) |
|---|---|---|---|---|
| MDCSA:H16L1 | 10588 | 84.20 | 97.73 | 64 |
| Mamba:H32L1 | 10392 | 82.71 | 98.01 | 47 |
| MDCSA:H8L1 | 2812 | 83.33 | 97.74 | 27 |
| Mamba:H8L1 | 1432 | 80.49 | 96.44 | 12 |

These results highlight the effectiveness of quantization techniques in reducing model size without significantly sacrificing performance. On the UJIIndoorLoc dataset, the Mamba model performs best under the 64KB RAM constraint, achieving an F1 score of 64% after knowledge distillation. However, when the target RAM constraint is tightened to 32KB, the model's performance declines significantly, indicating the need for further model compression or input size reduction techniques.
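The hardware-constrained model selection discussed in this section can be illustrated with a small filter over the reported figures. The sketch below hard-codes the four rows of the results table and lists the models whose reported size fits a given budget. Treating the "Model Size (KB)" column as directly comparable to the RAM budget, and using a less-than-or-equal test, are assumptions; the helper name `models_within_budget` is hypothetical.

```python
from typing import NamedTuple

class ModelResult(NamedTuple):
    name: str
    params: int
    f1: float
    accuracy: float
    size_kb: int

# Rows copied from the results table in this summary.
RESULTS = [
    ModelResult("MDCSA:H16L1", 10588, 84.20, 97.73, 64),
    ModelResult("Mamba:H32L1", 10392, 82.71, 98.01, 47),
    ModelResult("MDCSA:H8L1", 2812, 83.33, 97.74, 27),
    ModelResult("Mamba:H8L1", 1432, 80.49, 96.44, 12),
]

def models_within_budget(results, budget_kb):
    """Return the names of models whose reported size does not exceed the budget."""
    return [r.name for r in results if r.size_kb <= budget_kb]

for budget in (64, 32):
    print(budget, models_within_budget(RESULTS, budget))
```

In practice the choice among the models that fit would also weigh F1 score, accuracy, and whether a model needed compression to meet the budget.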

Conclusion and Future Outlook

This paper provides an in-depth analysis of the process of developing TinyML models for indoor localization on resource-constrained low-power MCUs. Through model compression techniques such as quantization and knowledge distillation, it demonstrates the potential to significantly reduce model size under strict memory constraints without significantly sacrificing performance. The findings indicate that the quantized transformer model performs strongly under the 64KB RAM constraint, while the more compact Mamba model excels under the 32KB RAM constraint.

This on-device processing approach enhances privacy, reduces latency, and lowers operational costs, making it a practical and effective solution for continuous patient monitoring and personalized care. Future work will include validating these models on actual edge devices, assessing their performance under real operational conditions, and optimizing deployment strategies to ensure model robustness and scalability.

Reviewer Comments

Academic Research Value: ★★★★☆

Stability: ★★★☆☆

Adaptability and Generalization Ability: ★★★☆☆

Hardware Requirements and Costs: ★★★★☆

Overall, this paper has high academic value, proposing an innovative method for indoor localization on resource-constrained low-power microcontrollers. The application of quantization and knowledge distillation techniques also has practical utility. However, the stability and generalization ability of the model still require further validation, especially in various real-world application environments. Future research can continue to explore more model compression techniques and optimization methods to further enhance model performance and efficiency.