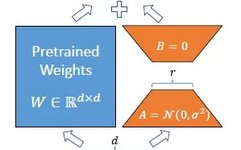

LoRA: Low-Rank Adaptation for Large Models

Source: DeepHub IMBA

This article is approximately 1,000 words, with an estimated reading time of 5 minutes.

Low-Rank Adaptation (LoRA) significantly reduces the number of trainable parameters for downstream tasks. For large models, fine-tuning every parameter becomes impractical. For example, GPT-3 has 175 billion parameters, which makes both full fine-tuning and deployment of the fine-tuned model prohibitively expensive. …
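To make the parameter reduction concrete, here is a rough sketch of the arithmetic for a single weight matrix. It assumes the standard LoRA formulation, in which a frozen weight matrix of shape d × k is adapted by training two small matrices of shapes d × r and r × k. The width 12288 matches GPT-3's hidden size; the rank r = 8 and the function names are illustrative assumptions, not values taken from this article.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters when only the low-rank factors are updated.

    B has shape (d, r) and A has shape (r, k); the d x k matrix stays frozen.
    """
    return d * r + r * k


# Illustrative sizes: one square projection matrix at GPT-3 width,
# with an assumed LoRA rank of 8.
d, k, r = 12288, 12288, 8

full = full_finetune_params(d, k)
lora = lora_params(d, k, r)
reduction = full / lora

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (r={r}):      {lora:,} trainable parameters")
print(f"reduction factor:  {reduction:.0f}x")
```

For this one matrix the trainable parameter count drops from about 151 million to about 197 thousand, a factor of 768. The overall saving for a full model depends on which matrices are adapted and on the chosen rank.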