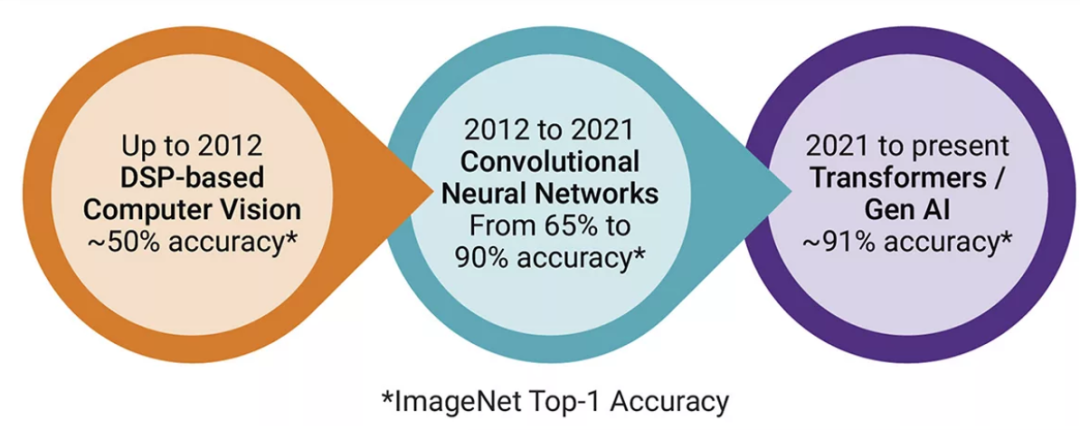

In the past decade, artificial intelligence (AI) and machine learning (ML) have undergone a significant transformation: convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are giving way to Transformers and generative artificial intelligence (GenAI). This transformation is driven by the industry’s urgent demand for models that are efficient, accurate, context-aware, and capable of handling complex tasks.

Initially, AI and ML models relied heavily on digital signal processors (DSPs) to perform tasks such as audio, text, speech, and visual processing. While these models were effective, they had limitations in accuracy and scalability. The introduction of neural networks, particularly CNNs, significantly improved model accuracy. For instance, the pioneering CNN, AlexNet, achieved 65% accuracy in image recognition, surpassing the 50% accuracy of DSP-based approaches.

The next industry milestone was the development of Transformers. In 2017, Google introduced the Transformer in its paper “Attention Is All You Need,” bringing a more efficient way to process sequential data and revolutionizing the development of AI models. Unlike CNNs, which process data locally, Transformers use attention mechanisms to weigh the importance of different parts of the input data. This allows Transformers to capture complex relationships and dependencies in the data, so they excel in tasks such as natural language processing (NLP) and image recognition.

Transformers have propelled the rise of GenAI. GenAI uses Transformer models to generate new data, such as text, images, and even music, based on learned patterns. The ability of Transformers to understand and generate complex data forms the foundation of popular AI applications like ChatGPT and DALL-E, which generate coherent text and create images from textual descriptions, showcasing the potential of GenAI.

Figure 1: Transformers are replacing machine vision and RNN/CNN, enabling GenAI to achieve higher accuracy.
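The attention mechanism described above can be illustrated with a minimal sketch of scaled dot-product attention in plain Python. The input vectors are made-up numbers, the function names are hypothetical, and the learned query, key, and value projections used in a real Transformer are omitted, so this shows only the weighting step and is not a full model.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights); weights[i][j] is how strongly
    position i attends to position j.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)
        # Each output is the attention-weighted average of the value vectors.
        out = [sum(wj * v[c] for wj, v in zip(w, values))
               for c in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

# Toy input: three positions with 2-dimensional embeddings (made-up values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)  # self-attention, no learned projections
for row in weights:
    print([round(w, 3) for w in row])
```

Every position is scored against every other position, so the weight matrix has one entry per pair of positions. That pairwise scoring is what lets the model relate distant parts of the input, and it is also why the cost grows quadratically with sequence length.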

Advantages of Deploying GenAI on Edge Devices

Deploying GenAI on edge devices offers numerous advantages, especially in applications where real-time processing, privacy, and security are critical. For example, edge devices such as smartphones, IoT devices, and autonomous vehicles can benefit from GenAI.

Deploying GenAI on edge devices meets the demand for low-latency processing. Applications like autonomous driving, real-time translation, and voice assistants require immediate responses, and the latency introduced by cloud processing can hinder this. By running GenAI models directly on edge devices, latency can be minimized, ensuring faster and more reliable performance.

Privacy and security are also important considerations. Sending sensitive data to the cloud for processing poses risks of data breaches and unauthorized access. By processing data locally on the device, edge GenAI can enhance privacy and reduce potential security vulnerabilities. This is particularly crucial in applications like healthcare, where patient data must be handled with extreme care.

Connectivity limitations are also driving the development of edge GenAI. In remote areas with unreliable internet access or no service, edge devices equipped with GenAI can operate independently without cloud connectivity, ensuring continuous functionality. This is vital for applications like disaster response, where communication infrastructure may be unavailable.

Challenges of Deploying GenAI on Edge Devices

While deploying GenAI on edge devices has many benefits, it also presents a series of challenges: computational complexity, data requirements, bandwidth limitations, power consumption, and hardware constraints. These must be addressed to ensure that the models can be implemented and operated effectively.

First, consider the computational complexity of GenAI models. Transformers, the foundation of GenAI models, are computationally intensive because of their attention mechanisms and extensive matrix multiplications. They demand substantial compute and memory, which can strain the limited resources of edge devices. In addition, edge devices often need to process data in real time, especially in applications like autonomous driving or real-time translation, and the high computational demands of GenAI models make it difficult to achieve the necessary speed and responsiveness.

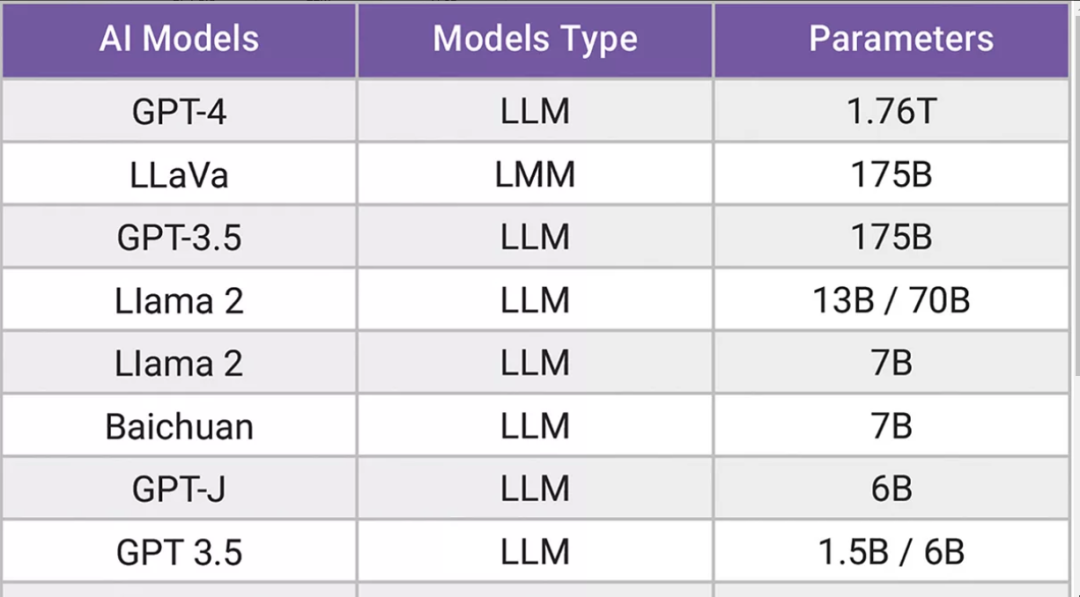

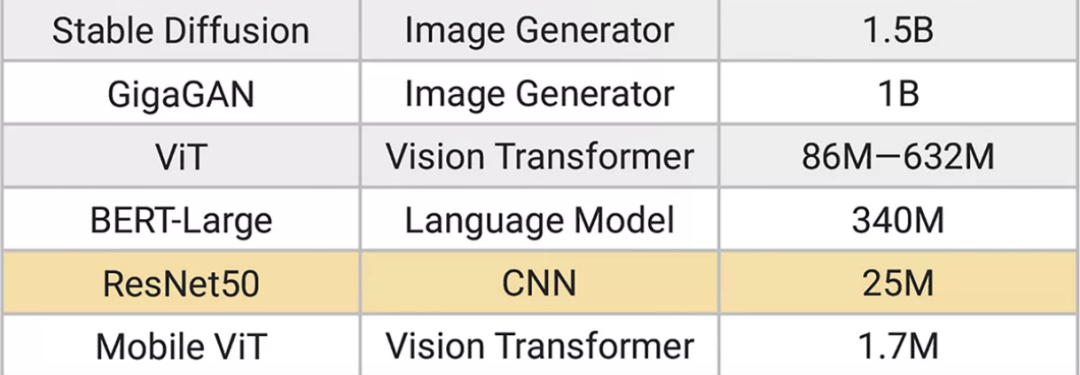

Table 1: Parameter counts of GenAI models, including large language models (LLMs) and image generators, are significantly larger than those of CNNs.
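To make the scale gap concrete, the following minimal sketch converts a parameter count into the memory needed for weights alone, and into a rough ceiling on token generation speed when memory bandwidth is the bottleneck. The parameter counts and bandwidth values are illustrative assumptions, not values from Table 1 or from any datasheet. The speed bound assumes every weight is read from memory once per generated token and ignores activations, caching, and compute time.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_footprint_gb(num_params, precision):
    """Memory needed to hold the weights alone, in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

def bandwidth_bound_tokens_per_s(num_params, precision, bandwidth_gb_s):
    """Rough ceiling on decode speed if all weights are read once per token."""
    return bandwidth_gb_s / weight_footprint_gb(num_params, precision)

# Illustrative inputs only (assumptions, not measured or published figures).
cnn_params = 25e6       # a CNN-scale model: tens of millions of parameters
llm_params = 7e9        # an LLM-scale model: billions of parameters
edge_bandwidth = 50.0   # GB/s, hypothetical LPDDR-class memory interface
dc_bandwidth = 1000.0   # GB/s, hypothetical HBM-class memory interface

for name, params in [("CNN-scale", cnn_params), ("LLM-scale", llm_params)]:
    for precision in ("fp16", "int4"):
        gb = weight_footprint_gb(params, precision)
        print(f"{name:10s} {precision}: {gb:8.3f} GB of weights")

for label, bw in [("edge", edge_bandwidth), ("data center", dc_bandwidth)]:
    rate = bandwidth_bound_tokens_per_s(llm_params, "fp16", bw)
    print(f"{label:12s} fp16 decode ceiling: {rate:6.1f} tokens/s")
```

Under these assumed inputs, the LLM-scale model needs 14 GB for 16-bit weights alone, while the CNN-scale model needs a small fraction of a gigabyte. The same byte count divided into the available bandwidth shows why a narrower memory interface directly lowers the achievable generation rate.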

Data requirements are also a significant challenge. Training GenAI models requires vast amounts of data; for example, the GPT-4 model was trained on several terabytes of data, which makes it impractical for edge devices with limited storage and memory capacity to process and store this data. Even during inference, GenAI models may require substantial data to generate accurate and relevant outputs. Managing and processing this data on edge devices is difficult because of storage limitations.

Bandwidth limitations further complicate the deployment of GenAI on edge devices. Edge devices typically use low-power memory interfaces, such as low-power double data rate (LPDDR), which provide lower bandwidth than the high-bandwidth memory (HBM) used in data centers. This can limit the data processing capabilities of edge devices and affect the performance of GenAI models. Efficiently transferring data between memory and processing units is crucial for maximizing the performance of GenAI models, and limited bandwidth can lead to slower processing speeds and reduced efficiency.

Power consumption is another critical issue. Because of their high computational demands, GenAI models can consume significant power, which is a key concern for edge devices, especially those that rely on battery power, such as smartphones, IoT devices, and autonomous vehicles. High power consumption also increases heat generation, which calls for effective thermal management. Dissipating heat in compact edge devices is difficult, and excess heat can affect their lifespan and performance.

Hardware limitations are also a challenge. Compared to data center servers, edge devices typically have limited processing capabilities. Choosing a processor that can meet GenAI demands while maintaining energy efficiency and performance is crucial. The limited memory and storage capacity of edge devices may restrict the size and complexity of the GenAI models that can be deployed. This necessitates the development of optimized models that can operate within these constraints without compromising performance.

Model optimization can help address these challenges. Techniques such as quantization (reducing the precision of model parameters) and pruning (removing redundant parameters) can reduce the computational and memory requirements of GenAI models. However, these techniques must be applied carefully to maintain the accuracy and functionality of the models. Developing models specifically for edge deployment, that is, lightweight GenAI models that run efficiently on edge devices without sacrificing performance, can also help.

Software and toolchain support is also critical. Effectively deploying GenAI on edge devices requires robust software tools and frameworks that support model optimization, deployment, and management, that are compatible with the edge hardware, and that provide an efficient development process. For real-time applications, optimizing the inference process to reduce latency and improve efficiency involves fine-tuning the models and leveraging hardware accelerators.

Security and privacy issues must also be addressed. Ensuring the security of data processed on edge devices is crucial, and strong encryption and secure data processing practices help protect sensitive information. Processing data locally on edge devices can minimize the transmission of sensitive data to the cloud, helping to address privacy concerns. However, it is also essential to ensure that the GenAI models themselves do not inadvertently leak sensitive information.

By carefully selecting hardware, optimizing models, and leveraging advanced software tools to tackle these challenges, deploying GenAI on edge devices becomes more feasible and effective, allowing a wide range of applications to benefit from the capabilities of GenAI while maintaining the advantages of edge computing.
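The quantization and pruning techniques mentioned in this section can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the weights are random made-up values, the scheme is symmetric per-tensor 8-bit quantization and simple magnitude pruning, and the function names are hypothetical. Production toolchains use more elaborate variants, such as per-channel scales, calibration data, and retraining after pruning.

```python
import random

def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    largest = max(abs(w) for w in weights)
    scale = largest / 127.0 if largest > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitudes."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

# Made-up weights standing in for one layer of a model.
random.seed(0)
weights = [random.gauss(0.0, 0.05) for _ in range(1000)]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
pruned = prune_by_magnitude(weights, sparsity=0.5)
zeros = sum(1 for w in pruned if w == 0.0)

print(f"scale={scale:.6f}  max abs error={max_err:.6f}")
print(f"zeros after pruning: {zeros} of {len(pruned)}")
```

Storing each weight as an 8-bit integer instead of a 32-bit float cuts weight storage by a factor of four, at the cost of a rounding error of at most half a quantization step per weight. Whether that error, or the loss of the pruned weights, is acceptable has to be checked against the accuracy of the actual model, which is why the techniques must be applied carefully.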

Processor Selection for Deploying GenAI on Edge Devices

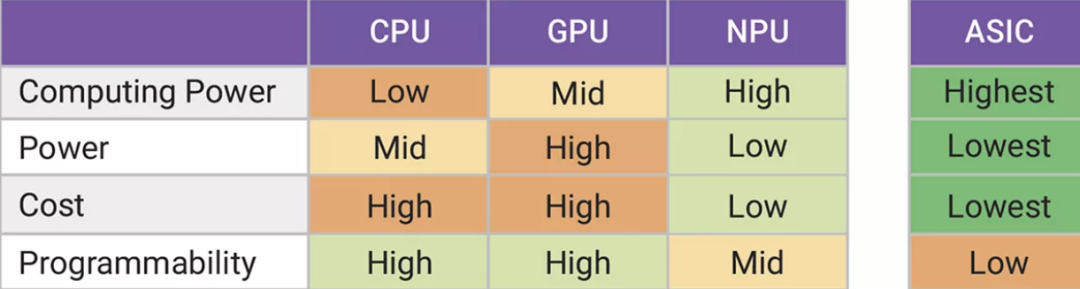

As mentioned above, selecting the right embedded processor helps overcome the challenges of deploying GenAI at the edge. The choice requires a balance between computational power, energy efficiency, and workload flexibility.

GPUs and CPUs offer flexibility and programmability, making them suitable for various AI applications. However, they may not be the most energy-efficient choice. GPUs, in particular, consume a lot of power, making them less suitable for battery-powered devices. ASICs provide optimized solutions for specific tasks, offering high efficiency and performance. However, their lack of flexibility makes it difficult to adapt them to evolving AI models and workloads.

Neural Processing Units (NPUs) strike a balance between flexibility and efficiency. NPUs, including Synopsys’s ARC NPX NPU IP, are designed specifically for AI workloads. They provide optimized performance for tasks such as matrix multiplication and tensor operations while remaining programmable and low-power, which makes them suitable for edge devices.

Figure 2: Comparison of CPU, GPU, NPU, and ASIC for edge AI/ML, with NPU providing the most efficient processing capability along with programmability and ease of use.

For example, running a GenAI model like Stable Diffusion on an NPU consumes only 2 watts, while running it on a GPU requires 200 watts, a significant reduction in power consumption. NPUs also support advanced features such as mixed-precision arithmetic and memory bandwidth optimization, which help them meet the computational demands of GenAI models.
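As a rough illustration of what the 2 W and 200 W figures above mean for a battery-powered device, the sketch below computes how long a battery could sustain each power draw. The battery capacity is a hypothetical value chosen only for illustration, and the calculation assumes a constant draw from the processor alone, ignoring the rest of the system and any conversion losses.

```python
def runtime_hours(battery_wh, power_w):
    """Hours a battery of the given capacity can sustain a constant power draw."""
    return battery_wh / power_w

NPU_POWER_W = 2.0    # figure quoted in the text above
GPU_POWER_W = 200.0  # figure quoted in the text above
BATTERY_WH = 15.0    # hypothetical battery capacity (assumption)

npu_hours = runtime_hours(BATTERY_WH, NPU_POWER_W)
gpu_hours = runtime_hours(BATTERY_WH, GPU_POWER_W)

print(f"NPU at {NPU_POWER_W:.0f} W: {npu_hours:.2f} hours")
print(f"GPU at {GPU_POWER_W:.0f} W: {gpu_hours * 60:.1f} minutes")
print(f"Power ratio: {GPU_POWER_W / NPU_POWER_W:.0f}x")
```

Under these assumptions, the same battery lasts 7.5 hours at 2 W and 4.5 minutes at 200 W. The hundredfold difference in power translates directly into a hundredfold difference in runtime, before thermal limits are even considered.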

Conclusion

The shift towards Transformers and GenAI represents a significant advancement in the fields of AI and ML. These models offer exceptional performance and versatility, supporting a wide range of applications from natural language processing to image generation. Deploying GenAI on edge devices can unlock new possibilities, providing low-latency, secure, and reliable AI capabilities.

However, to fully realize the potential of edge GenAI, challenges such as computational complexity, data requirements, bandwidth limitations, and power consumption must be addressed. Choosing the right processor (such as an NPU) can provide a balanced solution, delivering the necessary performance and efficiency for edge applications.

As AI technology continues to evolve, integrating GenAI into edge devices will play a key role in driving innovation and expanding the reach of intelligent technologies. By addressing these challenges and leveraging the advantages of advanced processors, we can seamlessly integrate AI into everyday life.

Authors: Fergus Casey, Executive Director of R&D at Synopsys, and Gordon Cooper, Product Management and Processor IP Staff Manager at Synopsys