Goodbye SSE, Embrace Streamable HTTP

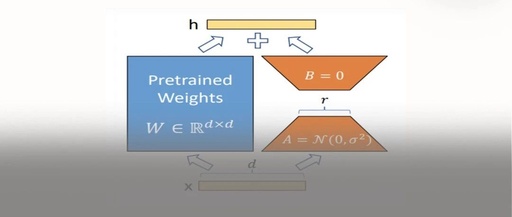

With the rapid development of artificial intelligence (AI), efficient communication between AI assistants and applications has become increasingly important. The Model Context Protocol (MCP) has emerged to provide a standardized interface through which large language models (LLMs) can interact with external data sources and tools. Among MCP's many features, the Streamable …