Selected from Sebastian Raschka’s blog

Translated by Machine Heart

Editor: Jiaqi

This is the experience derived from hundreds of experiments by the author Sebastian Raschka, worth reading.

Increasing the amount of data and the number of model parameters is a widely recognized, direct way to improve neural network performance. Mainstream large models now have hundreds of billions of parameters, and models keep getting larger.

This trend brings various computational challenges. Fine-tuning large language models with hundreds of billions of parameters not only takes a long training time but also requires a significant amount of high-performance memory resources.

To reduce the cost of fine-tuning large models, researchers at Microsoft developed Low-Rank Adaptation (LoRA) technology. The brilliance of LoRA lies in its ability to add a detachable plugin on top of the existing large model, keeping the model’s core unchanged. LoRA is lightweight and convenient.

LoRA is one of the most widely used and effective methods for efficiently fine-tuning a customized large language model.

If you are interested in open-source LLMs, LoRA is a fundamental technology worth learning and not to be missed.

Professor Sebastian Raschka from the University of Wisconsin-Madison has conducted a comprehensive exploration of LoRA. With many years of experience in the field of machine learning, he is passionate about breaking down complex technical concepts. After hundreds of experiments, Sebastian Raschka summarized his experiences in fine-tuning large models using LoRA and published them in Ahead of AI magazine.

Based on preserving the author’s original intent, Machine Heart has translated this article:

Last month, I shared an article about several LoRA experiments, based primarily on the open-source Lit-GPT library that my colleagues and I maintain at Lightning AI. This article discusses the key insights and lessons I derived from those experiments. Additionally, I will address some common questions related to LoRA. If you are interested in fine-tuning customized large language models, I hope these insights help you get started quickly.

In summary, the main points I discuss in this article include:

-

Although LLM training (or all models trained on GPU) has an inevitable randomness, the results of multi-run training remain very consistent.

-

If limited by GPU memory, QLoRA provides a cost-effective compromise. It saves 33% of memory at the cost of a 39% increase in runtime.

-

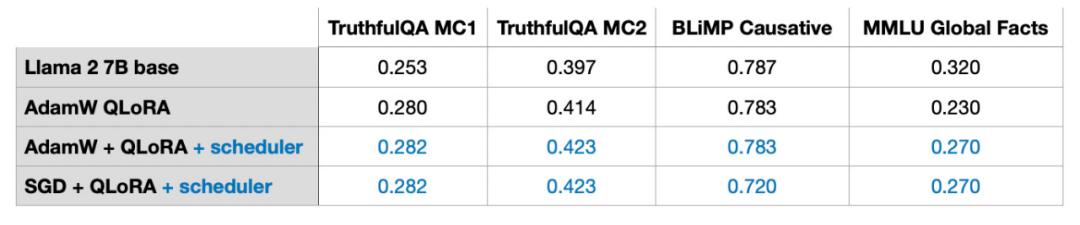

When fine-tuning LLMs, the choice of optimizer is not a major factor affecting the results. Whether it’s AdamW, SGD with a scheduler, or AdamW with a scheduler, the impact on the results is negligible.

-

Although Adam is often considered a memory-intensive optimizer because it introduces two new parameters for each model parameter, this does not significantly affect the peak memory requirements of LLMs. This is because most memory will be allocated for the multiplication of large matrices rather than for retaining additional parameters.

-

For static datasets, multiple iterations during multi-run training may not yield good results. This often leads to overfitting, worsening the training results.

-

When combining LoRA, ensure it is applied across all layers, not just in the Key and Value matrices, to maximize the model’s performance.

-

Tuning the LoRA rank and selecting the appropriate α value is crucial. A tip is to try setting the α value to twice the rank value.

-

A single GPU with 14GB RAM can efficiently fine-tune a model with 7 billion parameters in a few hours. For static datasets, making an LLM a “jack of all trades” that performs well across all baseline tasks is impossible. Solving this problem requires diverse data sources or techniques beyond LoRA.

Additionally, I will answer ten common questions related to LoRA.

If readers are interested, I will write another article providing a more comprehensive introduction to LoRA, including detailed code for implementing LoRA from scratch. Today’s article mainly shares key lessons from using LoRA. Before we begin, let’s cover some background.

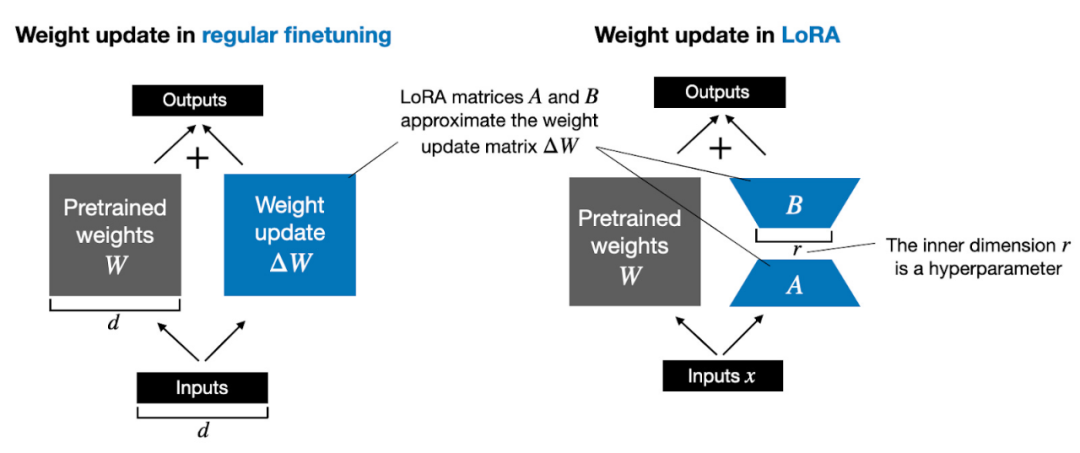

Due to the limitations of GPU memory, updating model weights during training is costly.

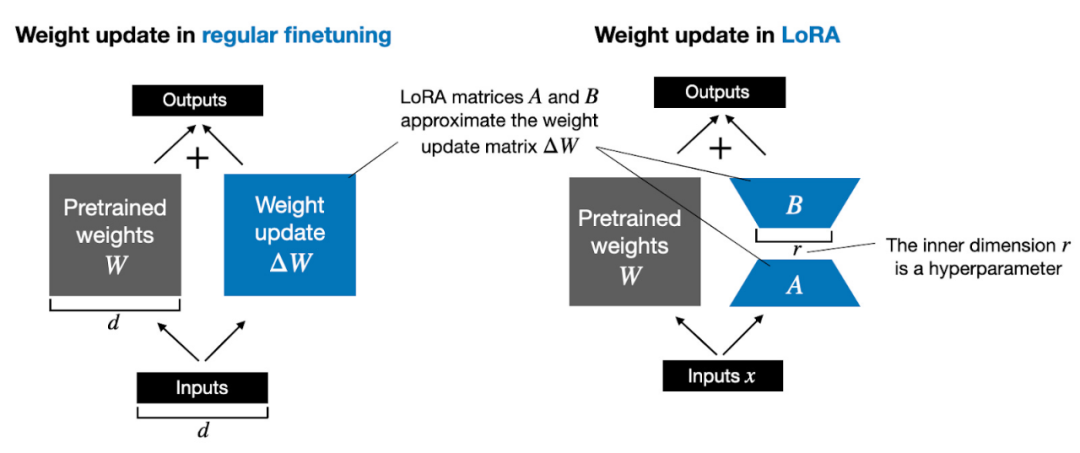

For example, suppose we have a language model with 7B parameters represented by a weight matrix W. During backpropagation, the model needs to learn a ΔW matrix aimed at updating the original weights to minimize the loss function value.

The weight update is as follows: W_updated = W + ΔW.

If the weight matrix W contains 7B parameters, the weight update matrix ΔW also contains 7B parameters, making the computation of ΔW very resource-intensive.

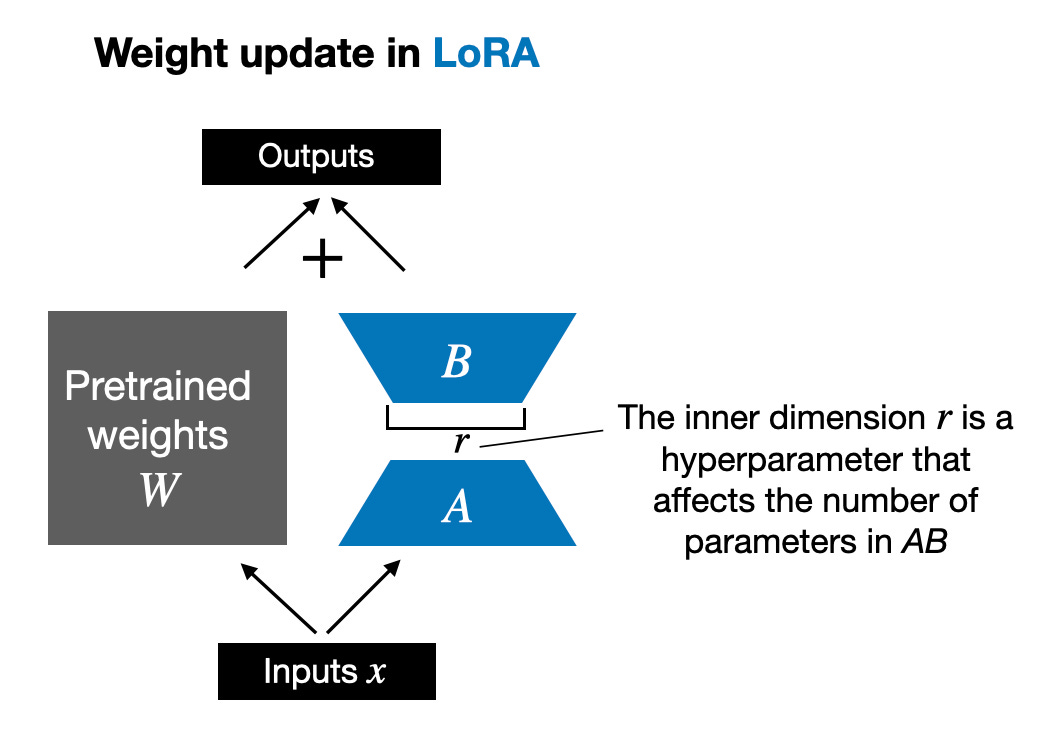

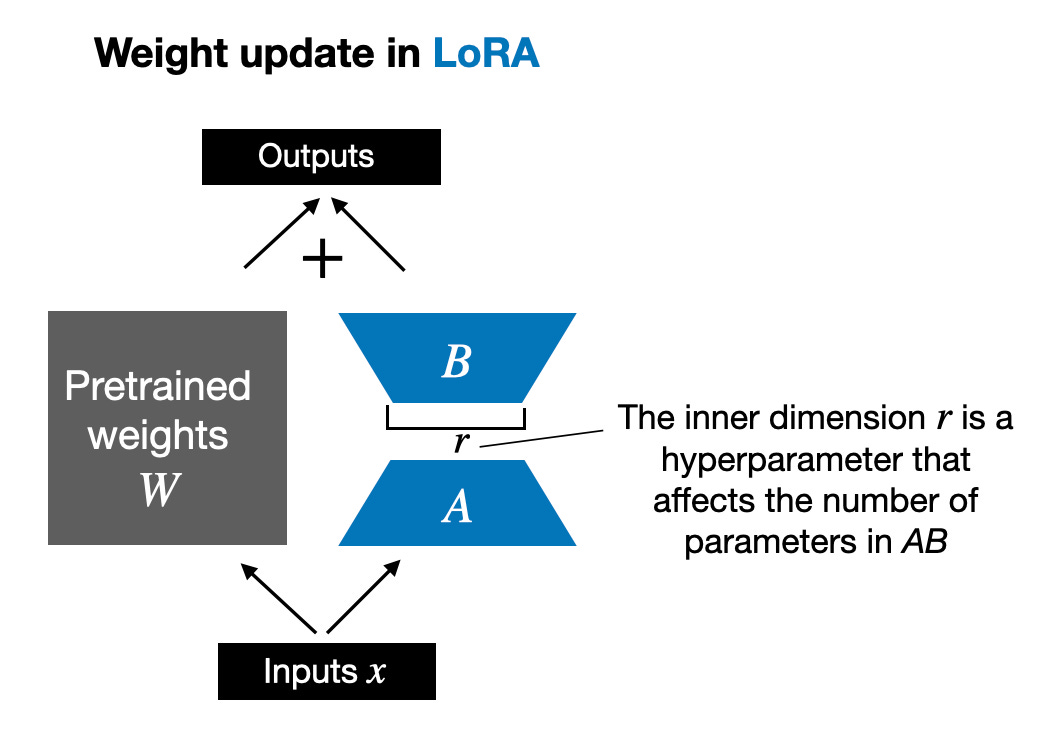

LoRA, proposed by Edward Hu and others, decomposes the part of the weight change ΔW into a low-rank representation. Specifically, it does not require explicitly computing ΔW. Instead, LoRA learns the decomposed representation of ΔW during training, as shown in the figure below, which is the secret of LoRA’s computational resource savings.

As shown above, the decomposition of ΔW means we need two smaller LoRA matrices A and B to represent the larger matrix ΔW. If A has the same number of rows as ΔW and B has the same number of columns as ΔW, we can denote the decomposition as ΔW = AB. (AB is the result of matrix multiplication between A and B.)

How much memory does this method save? It also depends on the rank r, which is a hyperparameter. For example, if ΔW has 10,000 rows and 20,000 columns, we need to store 200,000,000 parameters. If we choose A and B with r=8, then A has 10,000 rows and 8 columns, and B has 8 rows and 20,000 columns, resulting in 10,000×8 + 8×20,000 = 240,000 parameters, which is about 833 times less than 200,000,000 parameters.
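The arithmetic in this example can be checked with a few lines of Python. This is only a sketch of the counting argument; the function name `lora_param_counts` is made up for illustration and does not come from Lit-GPT.

```python
def lora_param_counts(rows: int, cols: int, rank: int) -> tuple[int, int]:
    """Return (full, low_rank) parameter counts for a rows x cols weight update."""
    full = rows * cols                    # entries in the full update matrix
    low_rank = rows * rank + rank * cols  # entries in A (rows x r) plus B (r x cols)
    return full, low_rank


# The numbers used in the text: a 10,000 x 20,000 update with rank r=8.
full, low_rank = lora_param_counts(rows=10_000, cols=20_000, rank=8)
print(f"full update: {full:,} parameters")
print(f"A and B:     {low_rank:,} parameters")
print(f"reduction:   about {full / low_rank:.0f}x")
```

Because the low-rank count grows linearly with r, doubling the rank doubles the number of trainable parameters.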

Of course, A and B cannot capture all the information that ΔW could, but this is by design. When using LoRA, we assume that the model’s weight matrix W is a large, full-rank matrix that holds all the knowledge from the pre-training dataset. When fine-tuning an LLM, we do not need to update all of these weights; a low-rank update through the matrices A and B, with far fewer parameters than ΔW, is enough to capture the core information.

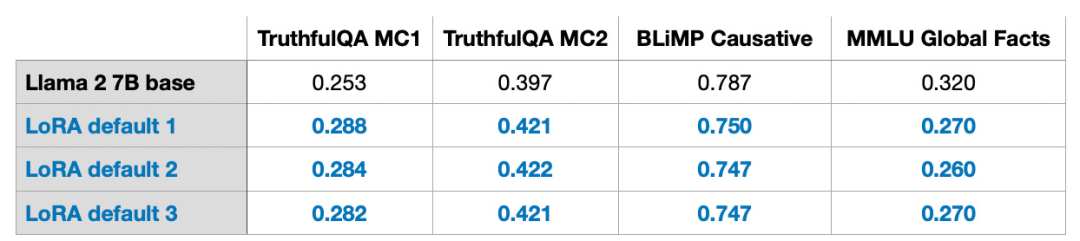

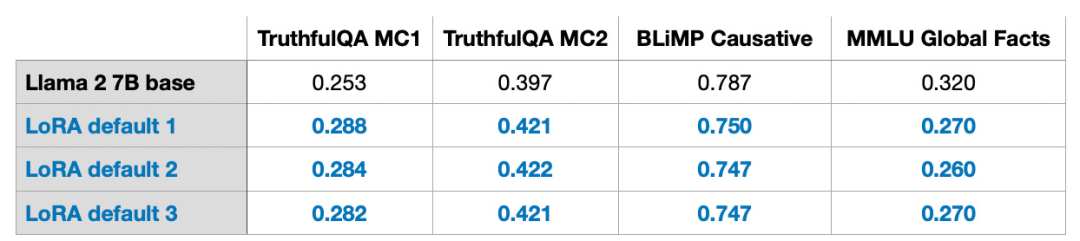

Although randomness is unavoidable when training LLMs (or any model on GPUs), repeated LoRA runs show that the final benchmark results are remarkably consistent across the different test sets. This provides a good foundation for other comparative studies.

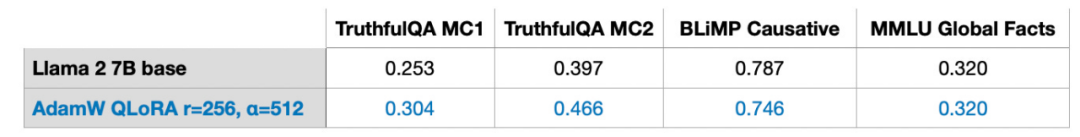

Note that these results were obtained under default settings using a smaller value of r=8. Experimental details can be found in my other article.

Article link: https://lightning.ai/pages/community/lora-insights/

QLoRA Computation – Memory Trade-Off

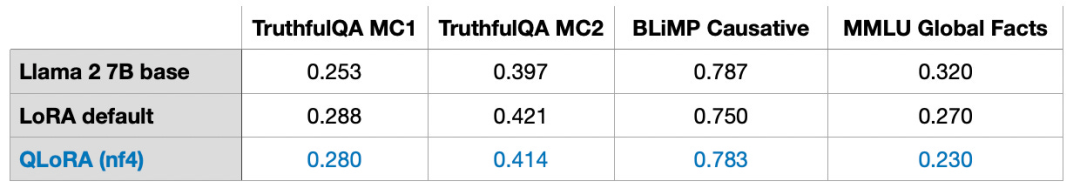

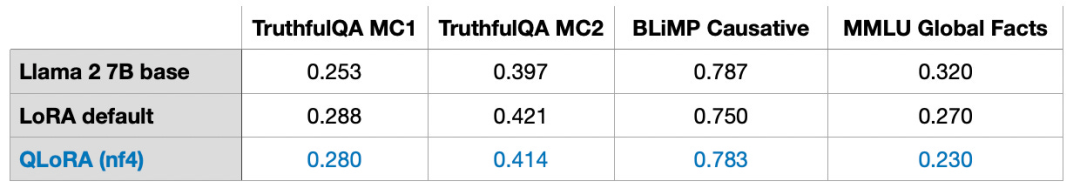

QLoRA, short for Quantized LoRA, was proposed by Tim Dettmers and others. It is a technique that further reduces memory usage during fine-tuning. During backpropagation, QLoRA quantizes the pre-trained model weights to 4 bits and uses paged optimizers to handle memory spikes.

I found that using QLoRA can save 33% of GPU memory. However, due to the additional quantization and de-quantization of the pre-trained model weights in QLoRA, the training time increased by 39%.

Default LoRA with 16-bit floating-point precision:

- Training duration: 1.85 hours

QLoRA with 4-bit normal floating-point:

- Training duration: 2.79 hours

Moreover, I found that the model’s performance is almost unaffected, indicating that QLoRA can serve as an alternative to LoRA training, further addressing common GPU memory bottleneck issues.

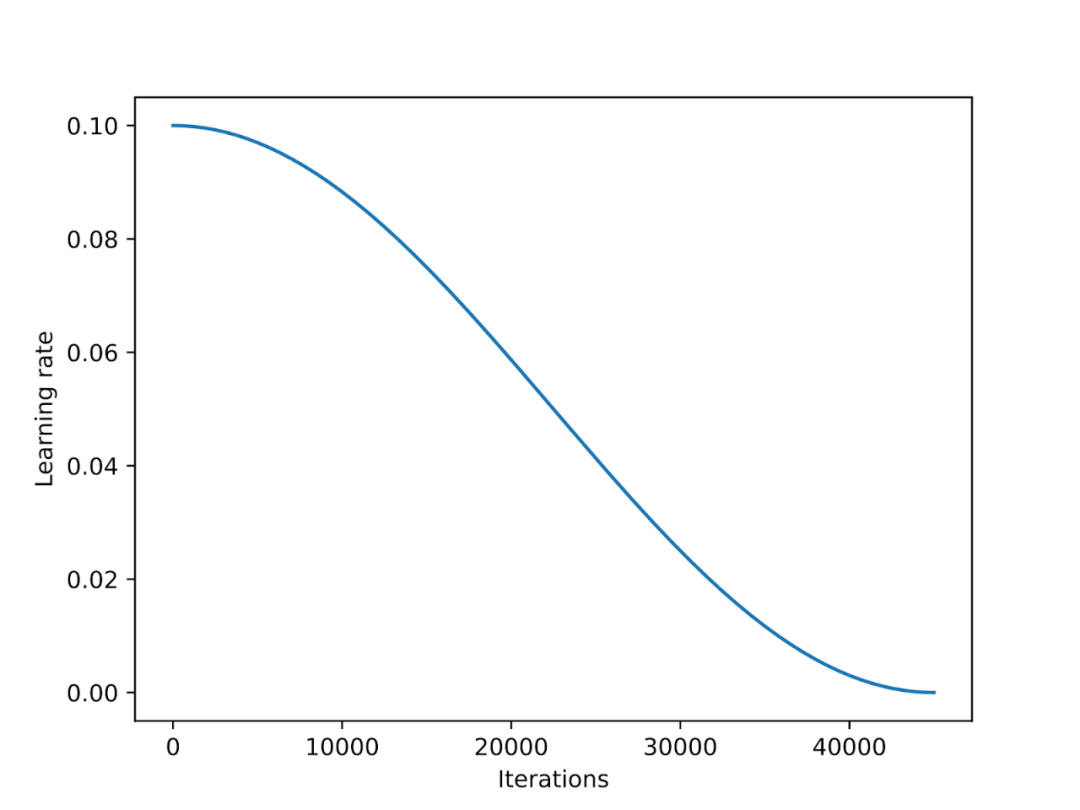

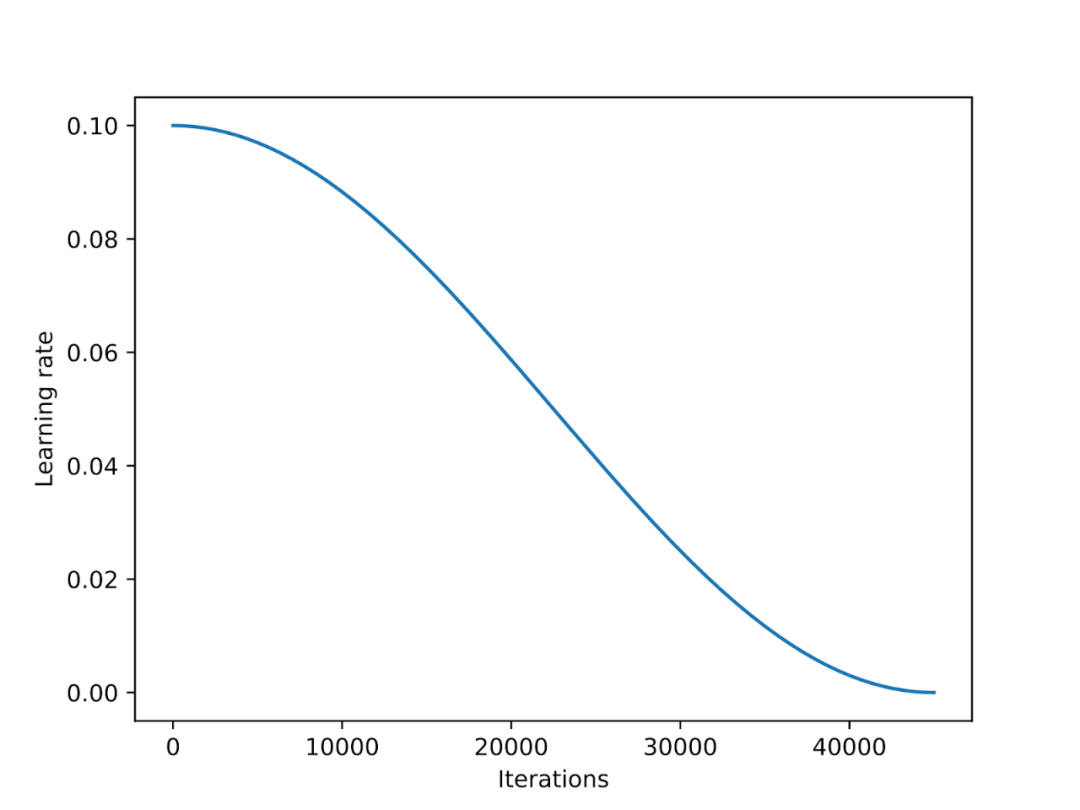

A learning rate scheduler lowers the learning rate over the course of training to improve convergence and avoid overshooting the loss minimum.

Cosine annealing is a scheduler that adjusts the learning rate following a cosine curve. It starts with a higher learning rate and then smoothly decreases, approaching 0 in a cosine-like pattern. A common variant of cosine annealing is the half-cycle variant, which completes only half a cosine cycle during training, as shown in the figure below.

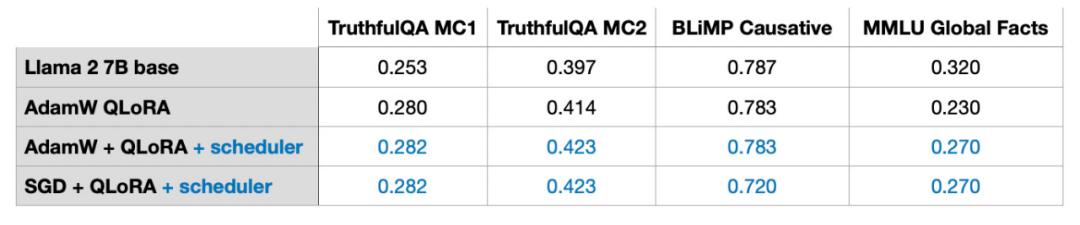
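The half-cycle schedule described above can be written down in a few lines. The following is a minimal sketch, not the code from the fine-tuning script; the function name, the 1,000-step run length, and the peak learning rate of 3e-4 are hypothetical values chosen for illustration.

```python
import math


def half_cycle_cosine_lr(step: int, total_steps: int,
                         max_lr: float, min_lr: float = 0.0) -> float:
    """Learning rate at `step`, following half a cosine cycle from max_lr to min_lr."""
    progress = step / total_steps  # 0.0 at the start of training, 1.0 at the end
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


total_steps = 1000  # hypothetical run length
max_lr = 3e-4       # hypothetical peak learning rate

# The rate starts at max_lr, passes max_lr / 2 at the midpoint, and ends near 0.
for step in (0, 250, 500, 750, 1000):
    print(step, f"{half_cycle_cosine_lr(step, total_steps, max_lr):.6f}")
```

In PyTorch, `torch.optim.lr_scheduler.CosineAnnealingLR` provides a schedule of this shape, so the formula rarely needs to be written by hand.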

In my experiments, I added a cosine annealing scheduler to the LoRA fine-tuning script, which significantly improved the performance of SGD. However, it had little gain for Adam and AdamW optimizers; there was almost no change after adding it.

The next section will discuss the potential advantages of SGD over Adam.

Adam and AdamW optimizers are very popular in deep learning. They maintain two moving averages for each model parameter, so if we are training a model with 7B parameters, Adam has to track an additional 14B values during training, with everything else unchanged.

SGD does not need to track any additional parameters during training, so what advantage does it have over Adam in terms of peak memory?

In my experiments, training a 7B parameter Llama 2 model using AdamW and LoRA (default settings r=8) required 14.18 GB of GPU memory. Training the same model with SGD required 14.15 GB of GPU memory. Compared to AdamW, SGD only saved 0.03 GB of memory, which is negligible.

Why is the memory savings so small? This is because using LoRA has already significantly reduced the model’s parameter count. For instance, if r=8, out of the 6,738,415,616 parameters in the 7B Llama 2 model, only 4,194,304 are trainable LoRA parameters.

Looking only at the numbers, 4,194,304 parameters may still seem like a lot, but the optimizer state for them occupies only 4,194,304 × 2 × 16 bits = 134.22 megabits = 16.78 megabytes. Here, 2 is the number of additional values Adam stores per parameter, and 16 bits is the default precision of the model weights. (We observed a difference of 0.03 GB = 30 MB; the extra is due to additional overhead when storing and copying optimizer states.)
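The two figures above can be reproduced with a short calculation. This sketch assumes the standard Llama 2 7B dimensions (32 transformer blocks, hidden size 4096) and that LoRA is attached to two 4096 × 4096 attention projections per block; these dimensions are assumptions added here, since the text does not state them.

```python
# Assumed Llama 2 7B dimensions (not stated in the article).
N_LAYERS = 32          # transformer blocks
HIDDEN = 4096          # input and output width of each attention projection
ADAPTED_PER_LAYER = 2  # attention projections per block that receive LoRA


def lora_params(d_in: int, d_out: int, r: int) -> int:
    """Parameters in one LoRA pair: A is (r x d_in), B is (d_out x r)."""
    return r * d_in + d_out * r


r = 8
trainable = N_LAYERS * ADAPTED_PER_LAYER * lora_params(HIDDEN, HIDDEN, r)

ADAM_STATES = 2  # Adam keeps two extra values per trainable parameter
BITS = 16        # precision assumed in the text
state_bits = trainable * ADAM_STATES * BITS
state_megabytes = state_bits / 8 / 1e6

print(f"trainable LoRA parameters: {trainable:,}")
print(f"Adam state: {state_bits / 1e6:.2f} megabits = {state_megabytes:.2f} megabytes")
```

Under these assumptions the script arrives at the same 4,194,304 trainable parameters and 16.78 megabytes of optimizer state quoted in the text.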

If we expand the LoRA matrix’s r from 8 to 256, then the advantages of SGD over AdamW will become apparent:

-

Using AdamW will occupy 17.86 GB of memory

-

Using SGD will occupy 14.46 GB of memory

Therefore, as the matrix size increases, the memory savings from SGD will become significant. Since SGD does not need to store additional optimizer parameters, it can save more memory compared to Adam and other optimizers when dealing with large models. This is a crucial advantage for memory-constrained training tasks.

In traditional deep learning, we often iterate over the training set multiple times, with each pass referred to as an epoch. For example, when training a convolutional neural network, it is common to run hundreds of epochs. So, does multi-epoch training also help with instruction fine-tuning?

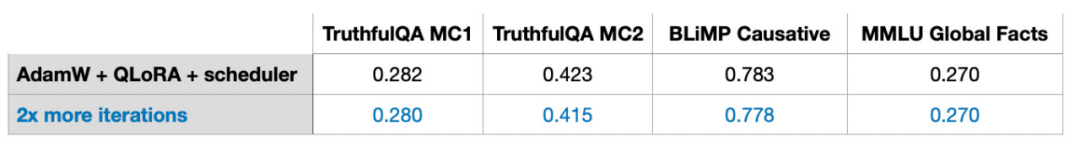

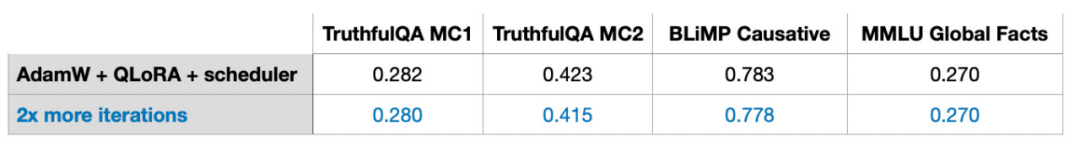

The answer is no; when I doubled the number of iterations over the 50k-example Alpaca instruction fine-tuning dataset, the model’s performance declined.

Therefore, I conclude that multi-epoch training may be detrimental to instruction fine-tuning. I observed the same on the 1k-example LIMA instruction fine-tuning dataset. The decline in model performance is likely due to overfitting, but the specific causes still need further exploration.

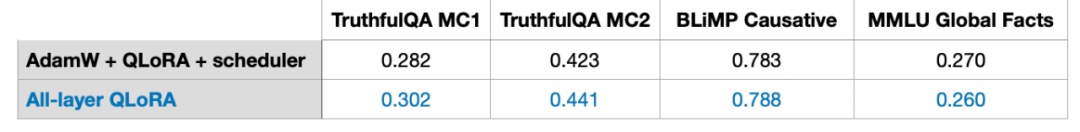

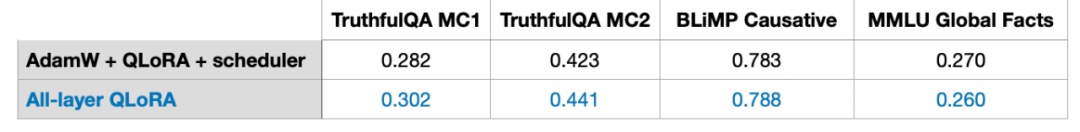

Using LoRA in More Layers

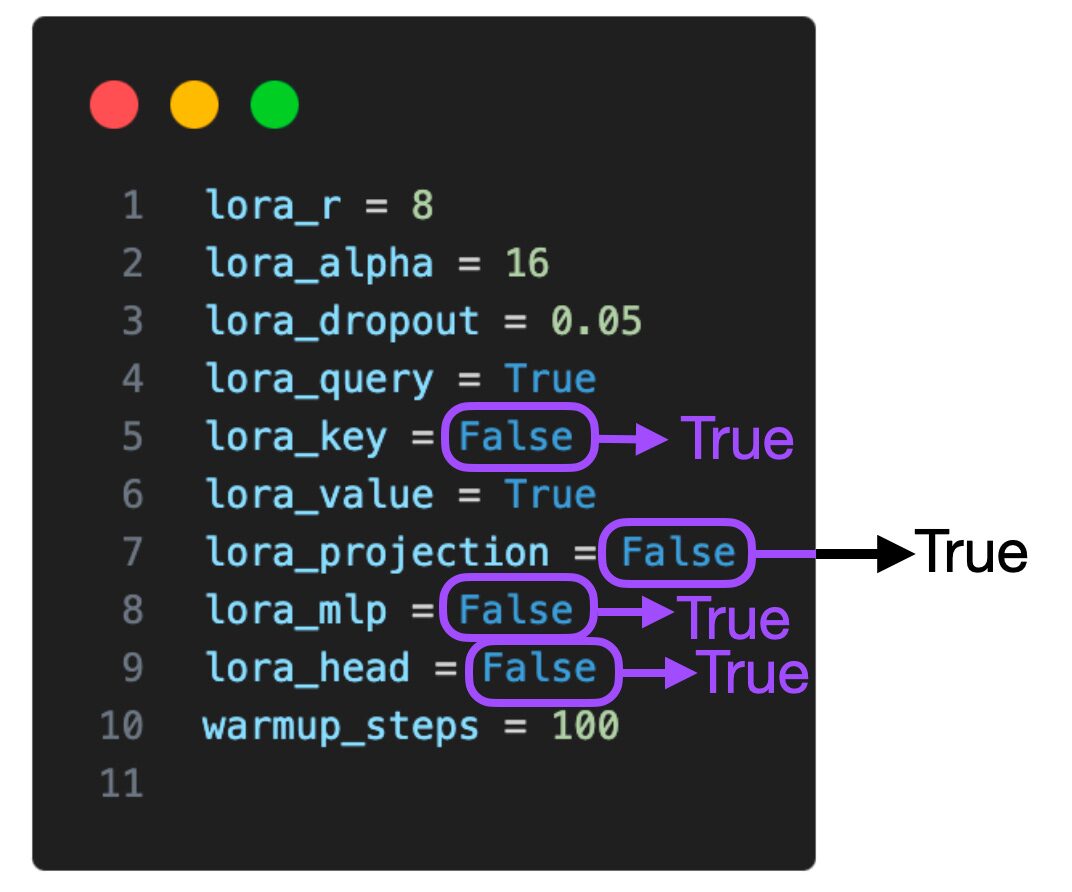

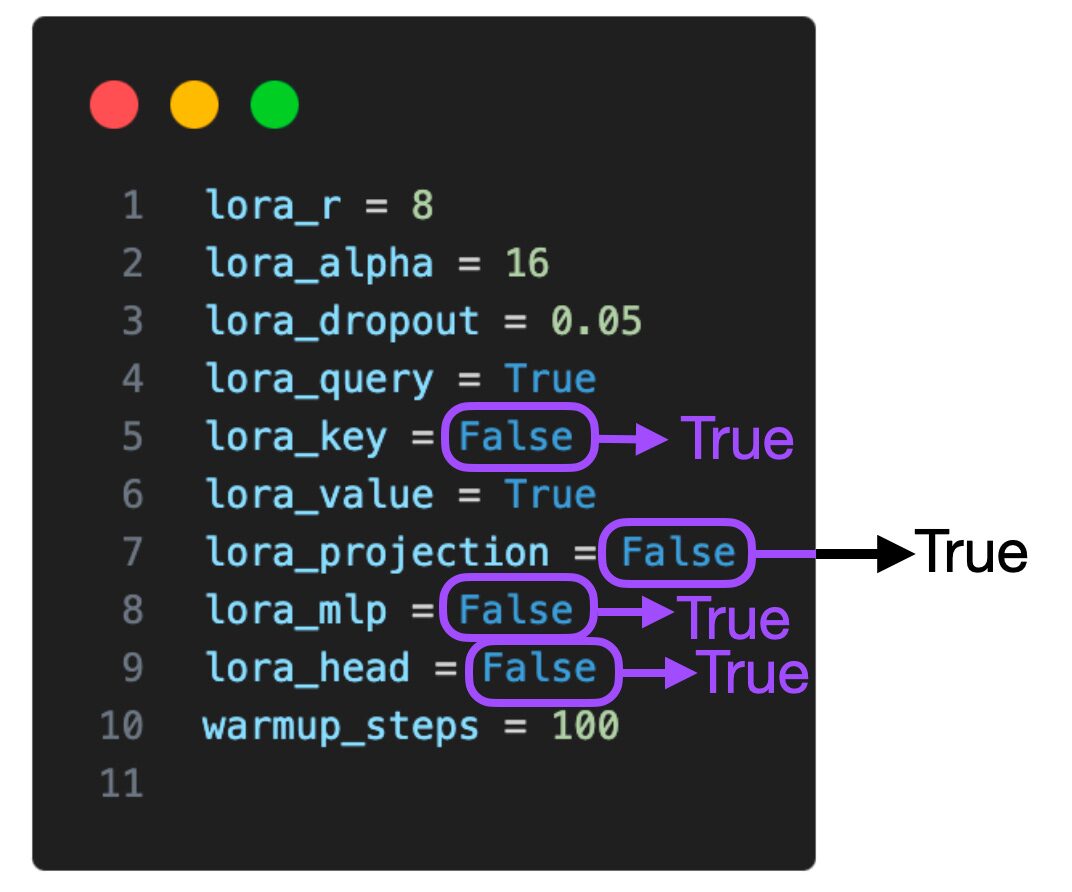

The experiments so far enabled LoRA only for selected weight matrices (i.e., the Key and Value matrices in each Transformer layer). We can also enable LoRA for other linear layers: the query weight matrices, the projection layers, the linear layers between the multi-head attention blocks, and the output layer.

If we add LoRA to these additional layers, the number of trainable parameters for the 7B Llama 2 model will increase from 4,194,304 to 20,277,248, a fivefold increase. Applying LoRA to more layers can significantly improve model performance, but it also requires more memory space.
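The jump in trainable parameters can be reproduced by counting LoRA pairs per layer. The sketch below assumes the standard Llama 2 7B dimensions (32 blocks, hidden size 4096, MLP width 11008, vocabulary size 32000) and that "all layers" means the four attention projections, the three MLP linear layers, and the output head. These dimensions and the layer breakdown are assumptions added for illustration.

```python
# Assumed Llama 2 7B dimensions (not stated in the article).
N_LAYERS, HIDDEN, MLP_HIDDEN, VOCAB = 32, 4096, 11008, 32000


def lora_params(d_in: int, d_out: int, r: int) -> int:
    """Parameters in one LoRA pair: A is (r x d_in), B is (d_out x r)."""
    return r * (d_in + d_out)


def trainable_lora_params(r: int, all_layers: bool) -> int:
    attn = lora_params(HIDDEN, HIDDEN, r)  # one square attention projection
    if not all_layers:
        return N_LAYERS * 2 * attn         # two attention projections per block
    per_block = 4 * attn                                 # query, key, value, projection
    per_block += 3 * lora_params(HIDDEN, MLP_HIDDEN, r)  # three MLP linear layers
    head = lora_params(HIDDEN, VOCAB, r)                 # output layer
    return N_LAYERS * per_block + head


few = trainable_lora_params(r=8, all_layers=False)
everything = trainable_lora_params(r=8, all_layers=True)
print(f"{few:,} -> {everything:,} ({everything / few:.2f}x)")
```

With these assumptions the count matches the 20,277,248 parameters quoted above, and the exact ratio is about 4.83, which the text rounds to fivefold.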

Moreover, I only explored two settings: (1) LoRA enabled only for the query and value weight matrices, and (2) LoRA enabled for all layers. The effects of using LoRA with other layer combinations are worth further investigation. For example, knowing whether enabling LoRA for the projection layer actually benefits training results would help us optimize the model and improve its performance.

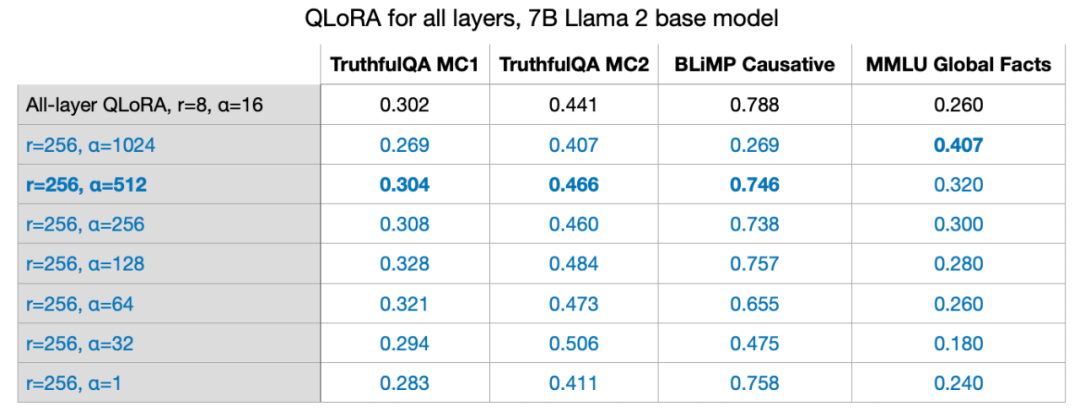

Balancing LoRA Hyperparameters: R and Alpha

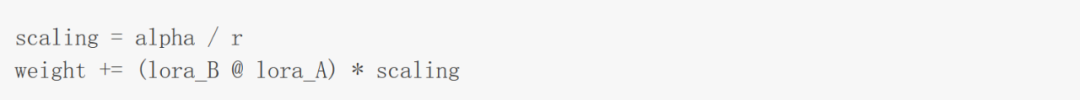

As described in the paper that proposed LoRA, the method introduces an additional scaling coefficient, which is applied to the LoRA weights during the forward pass. The scaling involves the previously discussed rank parameter r and another hyperparameter α (alpha): the low-rank update is multiplied by scaling = α / r before it is added to the pre-trained weights.

As this formula shows, the larger the scaling coefficient, the greater the influence of the LoRA weights.

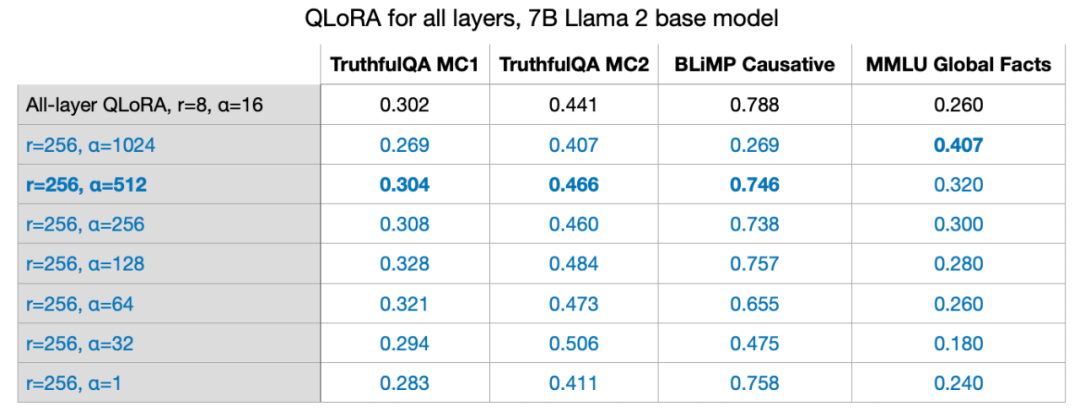

In previous experiments, I used r=8 and alpha=16, which results in a 2x scaling. When using LoRA for LLMs, setting alpha to twice the rank is a common rule of thumb. However, I was curious whether this rule also holds for larger r values.

I also tried r=32, r=64, r=128, and r=512, but omitted those results for clarity, since r=256 yielded the best performance. With r=256, choosing alpha=2r did indeed provide the best results.
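To see how the two hyperparameters interact, the scaling coefficient can be computed directly. This sketch assumes the definition scaling = alpha / r from the LoRA paper; the helper name `lora_scaling` and the third configuration (r=256 with alpha left at 16) are hypothetical and only illustrate what happens when alpha is not raised together with r.

```python
def lora_scaling(alpha: float, r: int) -> float:
    """Factor applied to the low-rank update before it is added to the weights."""
    return alpha / r


# (r, alpha) pairs: the two settings from the text plus one hypothetical setting.
for r, alpha in [(8, 16), (256, 512), (256, 16)]:
    print(f"r={r:>3}, alpha={alpha:>3} -> scaling={lora_scaling(alpha, r)}")
```

Keeping alpha at twice the rank holds the scaling at 2 regardless of r, whereas leaving alpha fixed while increasing r shrinks the contribution of the LoRA weights.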

Training a 7B Parameter Model on a Single GPU

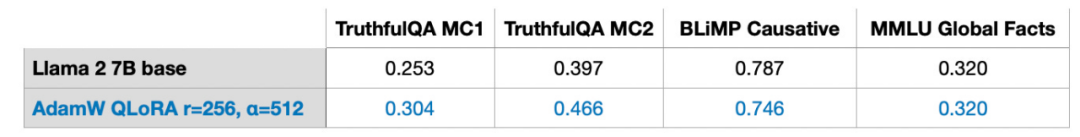

LoRA allows us to fine-tune large language models with 7B parameters on a single GPU. In this specific case, using QLoRA with the best setting (r=256, alpha=512) requires 17.86 GB of memory with AdamW and takes about 3 hours on an A100 for 50k training examples (here, the Alpaca dataset).

In the remainder of this article, I will answer other questions you may encounter.

Q1: How important is the dataset?

The dataset is crucial. I used the Alpaca dataset containing 50k training examples. I chose Alpaca because it is very popular. Since this article is already lengthy, I will not discuss the test results on more datasets here.

Alpaca is a synthetic dataset that may be somewhat outdated by today’s standards. The quality of the data is critical. For example, in June, I discussed the LIMA dataset in an article, which is a curated dataset consisting of only a thousand examples.

Article link: https://magazine.sebastianraschka.com/p/ahead-of-ai-9-llm-tuning-and-dataset

As the title of the LIMA paper puts it, less is more for alignment: although LIMA has far fewer examples than Alpaca, the 65B Llama model fine-tuned on LIMA outperformed the one fine-tuned on Alpaca. Using the same configuration (r=256, alpha=512), I achieved similar model performance on LIMA as on Alpaca, which is 50 times larger.

Q2: Is LoRA suitable for domain adaptation?

I currently do not have a clear answer to this question. As a rule of thumb, language models absorb knowledge from the pre-training dataset, while instruction fine-tuning primarily helps LLMs follow instructions better.

Since computational power is a key factor limiting the training of large language models, LoRA can also be used on specific domain-specific datasets to further pre-train existing pre-trained LLMs.

Additionally, it is worth noting that my experiments included two arithmetic benchmark tests. In both tests, the models fine-tuned with LoRA performed significantly worse than the pre-trained base models. I speculate this is due to the Alpaca dataset lacking corresponding arithmetic examples, leading the model to “forget” arithmetic knowledge. Further research is needed to determine whether the model “forgot” arithmetic knowledge or stopped responding to corresponding instructions. However, a conclusion can be drawn here: “When fine-tuning LLMs, it is a good idea to have examples of every task we care about in the dataset.”

Q3: How to determine the best r value?

I currently do not have a good solution for this question. Determining the best r value requires specific analysis based on each LLM and dataset. I suspect that a high r value may lead to overfitting, while a low r value may prevent the model from capturing the diverse tasks in the dataset. I suspect that the more diverse the task types in the dataset, the larger the required r value. For example, if I only need the model to perform basic two-digit arithmetic, a very small r value may suffice. However, this is merely my hypothesis and requires further research to validate.

Q4: Does LoRA need to be enabled for all layers?

I only explored two settings: (1) LoRA enabled only for the query and value weight matrices, and (2) LoRA enabled for all layers. The effects of using LoRA with other layer combinations are worth further investigation. For example, knowing whether enabling LoRA for the projection layer actually benefits training results would help us optimize the model and improve its performance.

If we consider various settings (lora_query, lora_key, lora_value, lora_projection, lora_mlp, lora_head), there are 64 combinations to explore.
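The count of 64 follows from six on/off switches (2^6). A short sketch that enumerates them is shown below; the setting names are taken from the text, while the dictionary layout is just one convenient representation and is not Lit-GPT’s configuration format.

```python
from itertools import product

SETTINGS = ("lora_query", "lora_key", "lora_value",
            "lora_projection", "lora_mlp", "lora_head")

# Every assignment of False/True to the six settings.
configs = [dict(zip(SETTINGS, flags))
           for flags in product((False, True), repeat=len(SETTINGS))]

print(len(configs))  # number of on/off combinations
print(configs[-1])   # the configuration with LoRA enabled everywhere
```

One of these combinations switches LoRA off everywhere, so 63 of them actually train any LoRA weights.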

Q5: How to avoid overfitting?

Generally speaking, a larger r is more likely to lead to overfitting, as r determines the number of trainable parameters. If the model has overfitting issues, the first consideration should be to reduce the r value or increase the dataset size. Additionally, one can try increasing the weight decay of the AdamW or SGD optimizers or increasing the dropout value of the LoRA layers.

I have not explored the dropout parameter of LoRA in my experiments (I used a fixed dropout rate of 0.05), and the dropout parameter of LoRA is also a research-worthy issue.

Q6: Are there other optimizers to consider?

Sophia, released in May this year, is worth trying. Sophia is a scalable stochastic second-order optimizer for language model pre-training. According to the paper “Sophia: A Scalable Stochastic Second-order Optimizer for Language Model Pre-training,” Sophia is twice as fast as Adam and achieves better performance. In short, Sophia normalizes gradients by gradient curvature, whereas Adam normalizes them by gradient variance.

Paper link: https://arxiv.org/abs/2305.14342

Q7: Are there other factors affecting memory usage?

In addition to precision and quantization settings, model size, batch size, and the number of trainable LoRA parameters, the dataset can also affect memory usage.

The block size of Llama 2 is 4096 tokens, which means Llama 2 can process sequences of up to 4096 tokens at once. Because future tokens are masked, shorter training sequences can save a significant amount of memory. For example, the sequences in the Alpaca dataset are relatively short, with the longest being 1304 tokens.

When I tried using other datasets with a maximum sequence length of 2048 tokens, memory usage skyrocketed from 17.86 GB to 26.96 GB.

Q8: What advantages does LoRA have compared to full fine-tuning and RLHF?

I did not conduct RLHF experiments, but I tried full fine-tuning. Full fine-tuning required at least 2 GPUs, each using 36.66 GB, and took 3.5 hours to complete. However, the benchmark results were poor, possibly due to overfitting or suboptimal hyperparameters.

Q9: Can LoRA weights be combined?

The answer is yes. During training, we keep the LoRA weights separate from the pre-trained weights and add them during each forward pass.

Suppose a real-world application has multiple sets of LoRA weights, one for each user of the application. In that case, it makes sense to store these weights separately to save disk space. Alternatively, after training, we can merge the pre-trained weights with the LoRA weights to create a single model. This way, we do not have to apply the LoRA weights during each forward pass.

weight += (lora_B @ lora_A) * scaling

We can update the weights using the method shown above and save the merged weights.

Similarly, we can continue to add many sets of LoRA weights:

weight += (lora_B_set1 @ lora_A_set1) * scaling_set1

weight += (lora_B_set2 @ lora_A_set2) * scaling_set2

weight += (lora_B_set3 @ lora_A_set3) * scaling_set3

...

I have not experimented to evaluate this method, but it can already be implemented using the scripts/merge_lora.py script provided in Lit-GPT.

Script link: https://github.com/Lightning-AI/lit-gpt/blob/main/scripts/merge_lora.py
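The claim that merged weights give the same output as applying LoRA separately can be checked on a toy example. This is a pure-Python sketch with tiny hand-picked matrices, not the Lit-GPT merge script; the helper names `matmul` and `add_scaled` and all numeric values are made up for illustration. It follows the shape convention of the formula above: `lora_A` is (r × in) and `lora_B` is (out × r).

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add_scaled(w, delta, scaling):
    """Return w + scaling * delta, element by element."""
    return [[wi + scaling * di for wi, di in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


# Toy sizes: 3 outputs, 2 inputs, rank 1 (all values are arbitrary).
weight = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # pre-trained weight, (out x in)
lora_A = [[1.0, -1.0]]                         # (r x in)
lora_B = [[2.0], [0.0], [1.0]]                 # (out x r)
scaling = 2.0
x = [[1.0], [2.0]]                             # one input as a column vector

# During training: base path and LoRA path are computed separately, then summed.
base_out = matmul(weight, x)
lora_out = matmul(lora_B, matmul(lora_A, x))
separate = [[b[0] + scaling * l[0]] for b, l in zip(base_out, lora_out)]

# After training: fold the LoRA update into the weight once.
merged_weight = add_scaled(weight, matmul(lora_B, lora_A), scaling)
merged = matmul(merged_weight, x)

print(separate)
print(merged)
```

Both paths produce the same output vector, which is why merging removes the extra LoRA computation from each forward pass without changing the model’s predictions.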

Q10: How does layer-wise optimal rank adaptation perform?

For simplicity, we usually set the same learning rate for each layer in deep neural networks. The learning rate is a hyperparameter we need to optimize, and further, we can choose different learning rates for each layer (this is not very complicated in PyTorch).

However, this is rarely done in practice, because it adds extra cost and there are already many other hyperparameters to tune in deep neural networks. Just as we can choose different learning rates for different layers, we can also choose different LoRA r values for different layers. I have not attempted this yet, but there is a detailed article introducing the method: “LLM Optimization: Layer-wise Optimal Rank Adaptation (LORA).” In theory, the method sounds promising and leaves ample room for hyperparameter optimization.

Article link: https://medium.com/@tom_21755/llm-optimization-layer-wise-optimal-rank-adaptation-lora-1444dfbc8e6a

Original link: https://magazine.sebastianraschka.com/p/practical-tips-for-finetuning-llms?continueFlag=0c2e38ff6893fba31f1492d815bf928b
