
[New Intelligence Guide] Enabling language models to think like humans.

When ChatGPT was first released, it shocked us with its performance in conversations, so much so that it created the illusion that language models possess “thinking abilities”.

However, as researchers delved deeper into language models, they gradually discovered that there is still a significant gap between the reproduction of high-probability language patterns and the expected “general artificial intelligence”.

In most current studies, large language models perform reasoning tasks mainly by generating chains of thought under specific prompts, without taking the human cognitive framework into account. As a result, their ability to solve complex reasoning problems still lags noticeably behind that of humans.

When faced with complex reasoning challenges, humans typically draw on several cognitive abilities and interact with tools, knowledge, and information from the external environment. Can language models simulate human thought processes to solve complex problems?

The answer is of course yes! OlaGPT, the first model to simulate the human cognitive processing framework, is here!

Paper link: https://arxiv.org/abs/2305.16334

Code link: https://github.com/oladata-team/OlaGPT

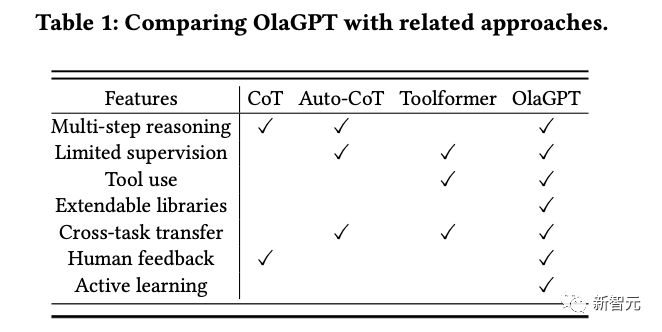

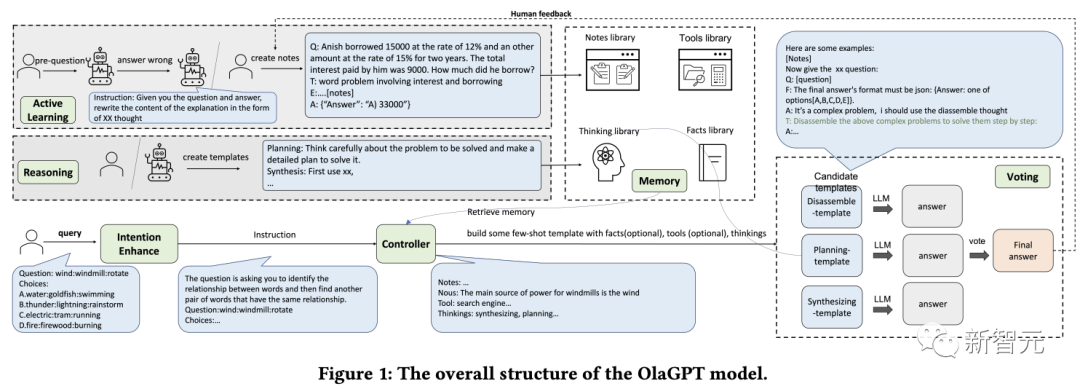

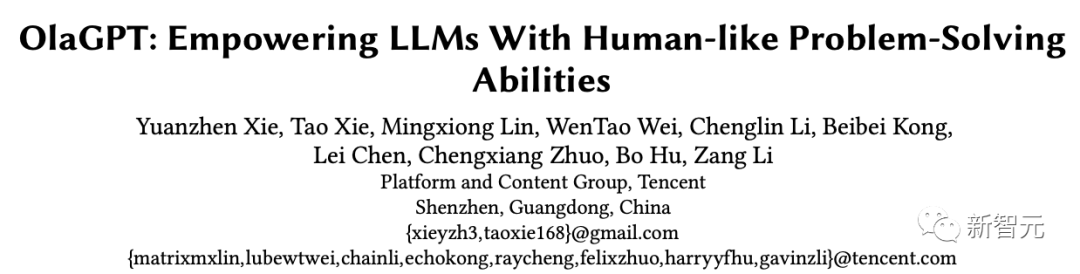

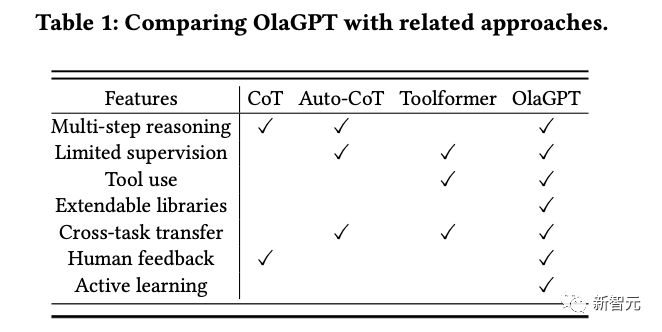

OlaGPT comprises multiple cognitive modules, including attention, memory, reasoning, and learning, along with the corresponding scheduling and decision-making mechanisms. Inspired by human active learning, the framework also includes a learning unit that records previous errors and expert opinions and references them dynamically to improve its ability to solve similar problems.

The paper also outlines common, effective reasoning frameworks that humans use to solve problems and designs chain-of-thought (CoT) templates accordingly; it also proposes a comprehensive decision-making mechanism to maximize the model’s accuracy.

In evaluations on multiple reasoning datasets, the experimental results show that OlaGPT outperforms previous state-of-the-art baselines, supporting its effectiveness.

Simulating Human Cognition

Current language models still have a significant gap from the expected general artificial intelligence, mainly manifested as:

1. In some cases, the generated content is meaningless or deviates from human value preferences, and may even include very dangerous suggestions. The current solution is to introduce reinforcement learning from human feedback (RLHF), which uses human rankings of model outputs.

2. The knowledge of language models is limited to the concepts and facts explicitly mentioned in the training data.

When faced with complex problems, language models cannot adapt to changing environments like humans, utilize existing knowledge or tools, reflect on historical lessons, break down problems, or use human thought patterns (such as analogy, inductive reasoning, and deductive reasoning) summarized through long-term evolution to solve problems.

However, simulating the human brain’s problem-solving process presents many systemic challenges:

1. How to systematically mimic and encode the main modules of the human cognitive framework, and schedule them in a feasible way according to general human reasoning patterns?

2. How to guide language models to engage in active learning like humans, that is, to learn and develop from historical errors or expert solutions to difficult problems?

Although retraining models to encode corrected answers may be feasible, it is clearly costly and inflexible.

3. How to enable language models to flexibly utilize various thinking patterns evolved by humans to improve their reasoning performance?

A single fixed, universal thinking pattern adapts poorly to different problems; humans, by contrast, flexibly choose different ways of thinking, such as analogical reasoning and deductive reasoning, depending on the type of problem.

OlaGPT is a problem-solving framework that simulates human thinking and can enhance the capabilities of large language models.

OlaGPT draws on cognitive architecture theory, modeling the core capabilities of the cognitive framework as attention, memory, learning, reasoning, and action selection.

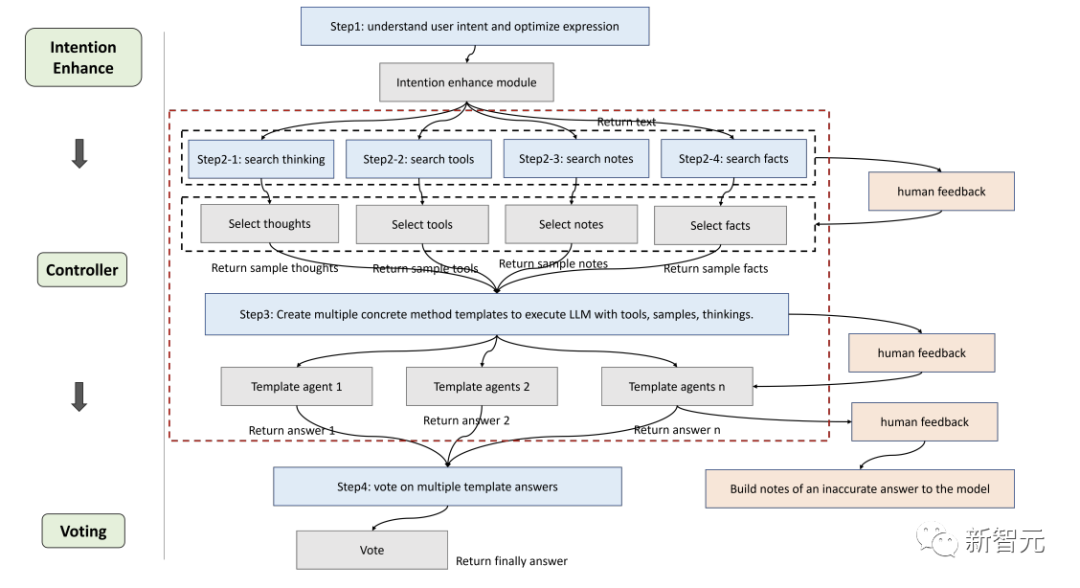

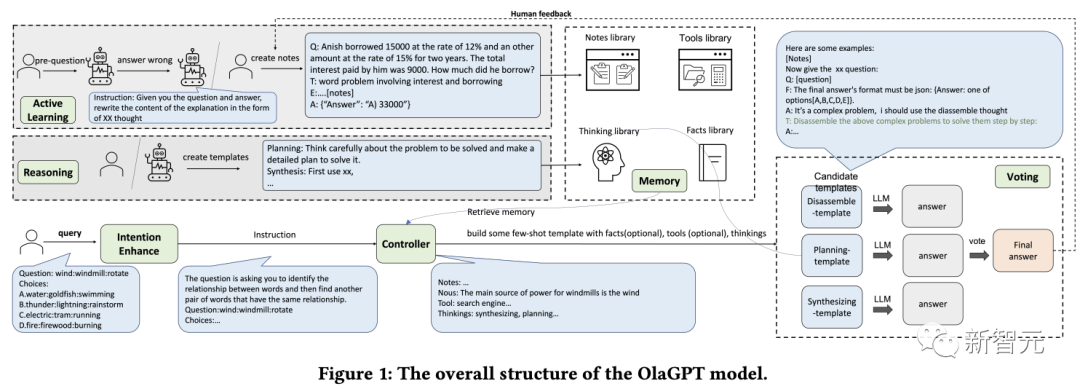

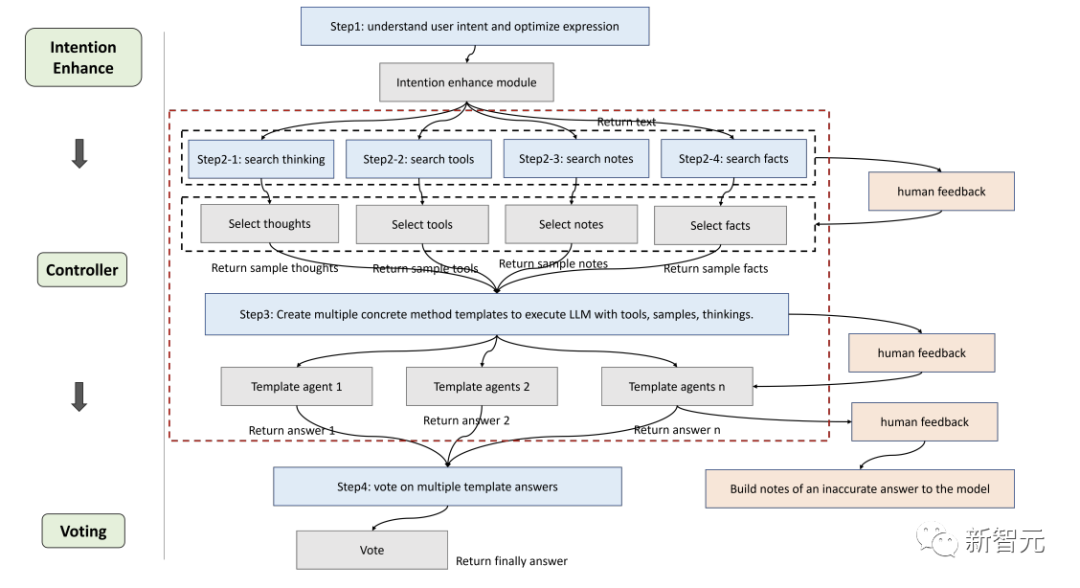

Researchers adapted the framework to specific implementation needs and proposed a process suited to solving complex problems with language models. It comprises six modules: the intention enhancement module (attention), memory module (memory), active learning module (learning), reasoning module (reasoning), controller module (action selection), and voting module.

Attention is an important component of human cognition, identifying relevant information and filtering out irrelevant data.

Similarly, researchers designed a corresponding attention module for language models, called intention enhancement. It aims to extract the most relevant information and establish a stronger connection between user input and the model’s language patterns, and can be seen as a converter that maps the user’s habits of expression onto the model’s.

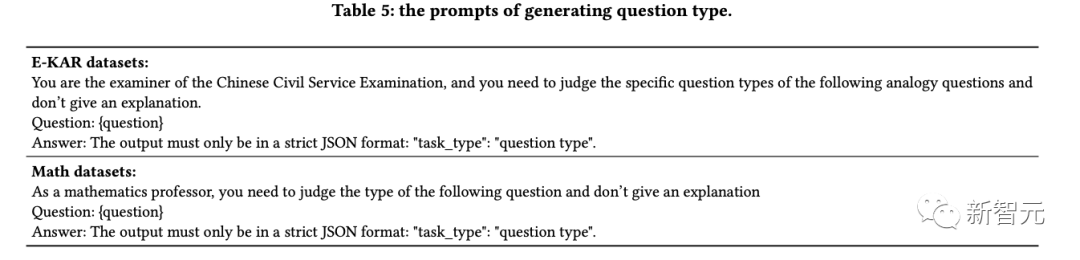

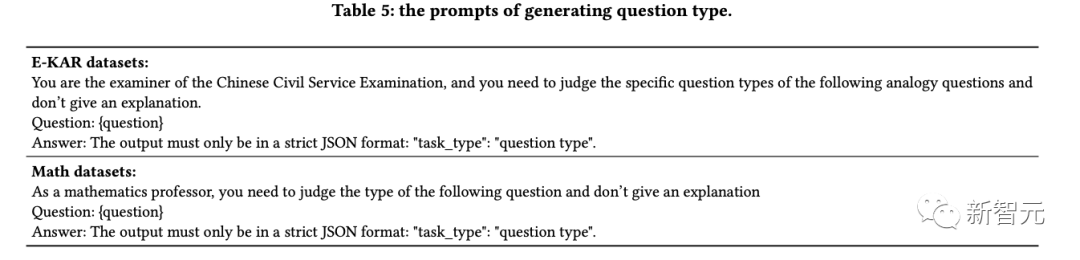

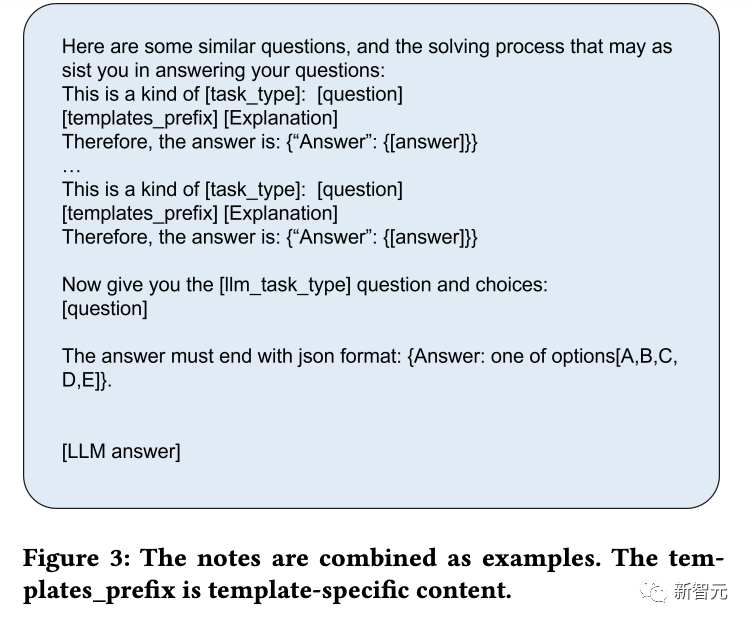

First, the question type is obtained from the LLM through a specific prompt, and the question is then rephrased accordingly.

For example, a sentence is added at the beginning of the question: “Now give you the XX (question type), question and choices:”. To make the output easier to parse, the prompt also needs to include “The answer must end with JSON format: Answer: one of options[A,B,C,D,E].”
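The rewriting step can be sketched in a few lines of Python. This is only an illustration of the two quoted prompt fragments: the function name `enhance_intent`, the way choices are laid out, and the assumption that the question type has already been obtained from the LLM are all hypothetical and not taken from the OlaGPT code.

```python
# Suffix quoted in the article; it asks the model for an easily parsed answer.
ANSWER_SUFFIX = (
    "The answer must end with JSON format: Answer: one of options[A,B,C,D,E]."
)


def enhance_intent(question_type: str, question: str, choices: dict) -> str:
    """Rebuild a multiple-choice question in the 'intention enhanced' form.

    question_type is assumed to come from an earlier LLM call (not shown).
    The layout of the choices is an assumption made for this sketch.
    """
    header = f"Now give you the {question_type}, question and choices:"
    choice_lines = [f"{label}. {text}" for label, text in choices.items()]
    return "\n".join([header, question, *choice_lines, ANSWER_SUFFIX])


prompt = enhance_intent(
    "analogy question",
    "Bird is to sky as fish is to what?",
    {"A": "water", "B": "tree", "C": "sand"},
)
print(prompt)
```

Keeping the answer-format instruction as the last line means a simple pattern match on the model's reply is enough to recover the chosen option.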

The memory module plays a crucial role in storing information from various knowledge bases. Studies have shown that language models are currently limited in their grasp of the latest factual data; the memory module therefore focuses on consolidating knowledge that the model has not yet internalized and storing it as long-term memory in an external library.

Researchers use the memory function provided by LangChain for short-term memory, while long-term memory is implemented with a Faiss-based vector database.

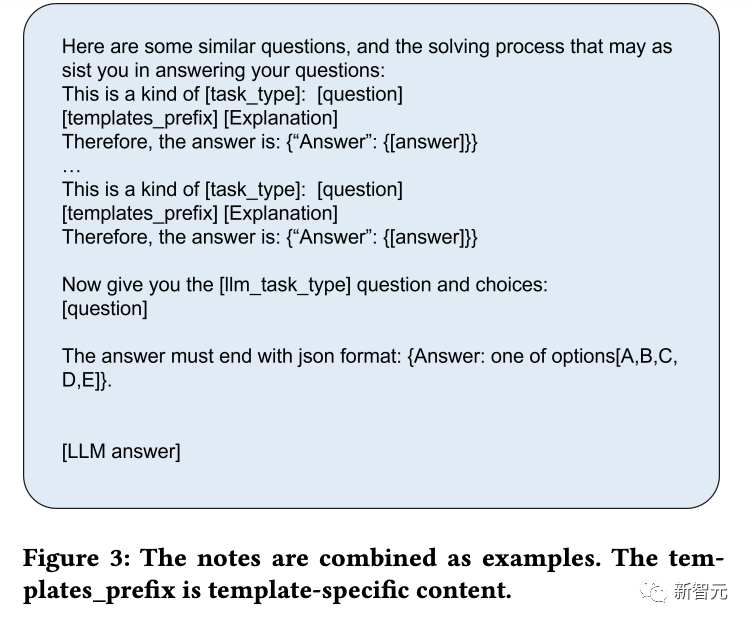

During the querying process, its retrieval function can extract relevant knowledge from the library, which covers four types of memory banks: facts, tools, notes, and thinking. Facts are information about the real world, such as common sense. Tools include search engines, calculators, and Wikipedia, which can assist language models in completing tasks without being explicitly stated. Notes mainly record difficult cases and the steps taken to solve them. The thinking bank mainly stores thinking templates for problem-solving written by experts, who can be either humans or models.
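To make the four-bank layout concrete, here is a minimal sketch of retrieval over separate facts, tools, notes, and thinking banks. It deliberately does not use Faiss: a toy bag-of-words embedding with cosine similarity stands in for the vector index so the example runs with the standard library alone. The class `MemoryBank`, the function `retrieve`, and the sample entries are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words 'embedding'; a real system would use a neural encoder."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class MemoryBank:
    """One long-term memory library (stand-in for a vector index)."""

    def __init__(self, name):
        self.name = name
        self.entries = []  # list of (text, embedding) pairs

    def add(self, text):
        self.entries.append((text, embed(text)))

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


# The four bank names come from the article; the contents are made up.
banks = {name: MemoryBank(name) for name in ("facts", "tools", "notes", "thinking")}
banks["facts"].add("Water boils at 100 degrees Celsius at sea level")
banks["facts"].add("A triangle has three sides")
banks["tools"].add("calculator: evaluates arithmetic expressions")
banks["thinking"].add("Break the problem into steps and solve each step in order")


def retrieve(query, k=1):
    """Return the top-k entries from every bank for the given query."""
    return {name: bank.search(query, k) for name, bank in banks.items()}


print(retrieve("at what temperature does water boil"))
```

Keeping one index per bank lets each library use its own retrieval settings, which matches the article's remark that strategies differ slightly between libraries.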

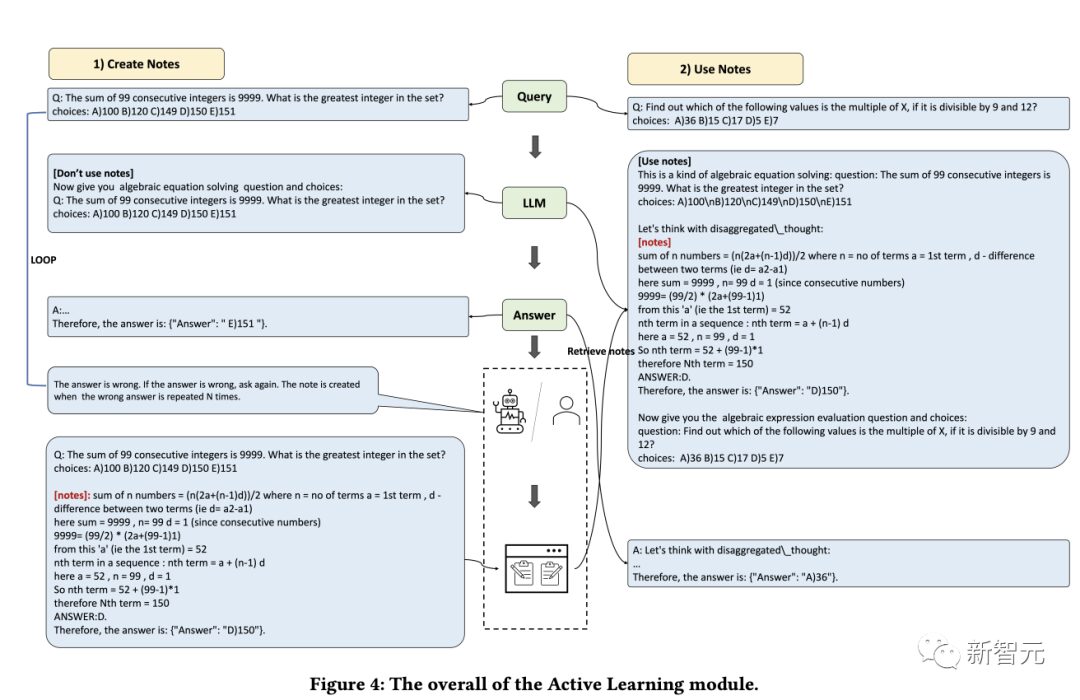

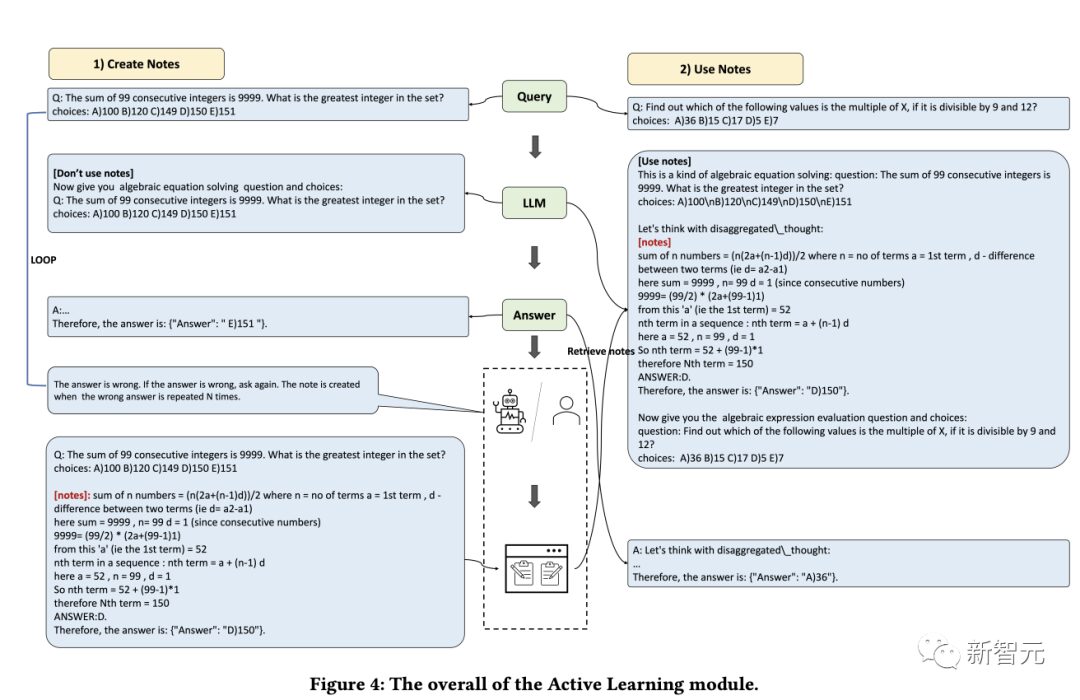

The ability to learn is crucial for humans to continuously improve their performance. Essentially, all forms of learning rely on experience, and language models can learn from previous mistakes, thereby rapidly enhancing their reasoning abilities.

First, researchers identify the problems that language models cannot solve; then record insights and explanations provided by experts in the notes bank; finally, select relevant notes to facilitate the learning of language models, allowing them to handle similar problems more effectively.
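The three steps above can be sketched as follows. This is a simplified illustration under stated assumptions: the `Note` fields, the function names, and the word-overlap ranking are hypothetical stand-ins, not the selection method used in OlaGPT.

```python
from dataclasses import dataclass


@dataclass
class Note:
    question: str
    wrong_answer: str
    expert_explanation: str


notes_bank = []  # hypothetical in-memory notes bank


def record_failure(question, model_answer, gold_answer, expert_explanation):
    """Steps 1 and 2: store a note only when the model answered incorrectly."""
    if model_answer == gold_answer:
        return False
    notes_bank.append(Note(question, model_answer, expert_explanation))
    return True


def select_notes(question, k=2):
    """Step 3: pick up to k notes whose questions share words with the new one.

    Word overlap is an assumption chosen to keep the sketch dependency-free.
    """
    words = set(question.lower().split())

    def overlap(note):
        return len(words & set(note.question.lower().split()))

    ranked = sorted(notes_bank, key=overlap, reverse=True)
    return [n for n in ranked[:k] if overlap(n) > 0]


def prompt_with_notes(question, k=2):
    """Prepend the selected expert explanations to the new question."""
    hints = [f"Note: {n.expert_explanation}" for n in select_notes(question, k)]
    return "\n".join(hints + [f"Question: {question}"])
```

For example, after `record_failure("What is 15% of 80?", "B", "A", "Convert the percentage to a decimal first")`, a later call to `prompt_with_notes("What is 20% of 50?")` places that explanation ahead of the new question, with no retraining involved.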

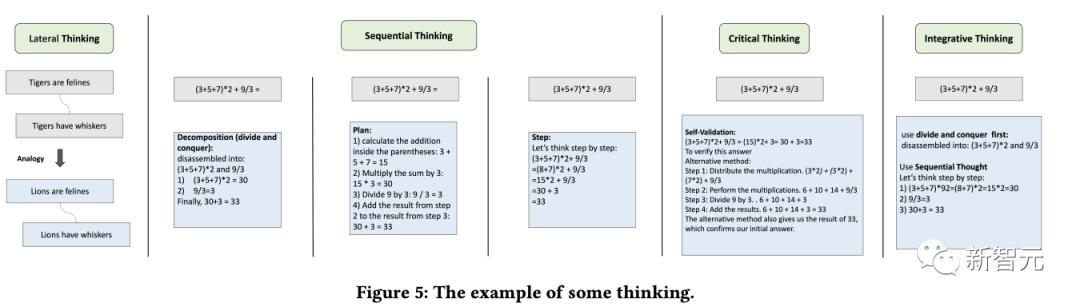

The purpose of the reasoning module is to create multiple intelligent agents based on human reasoning processes, thereby stimulating the potential thinking abilities of language models to solve reasoning problems.

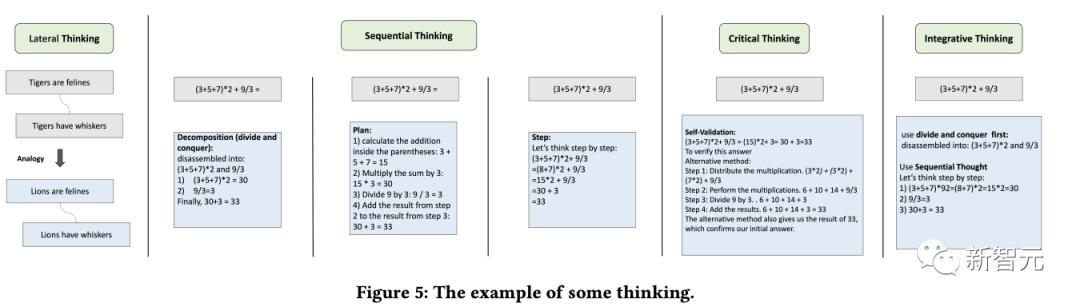

This module combines various thinking templates, referencing specific types of thinking, such as lateral thinking, sequential thinking, critical thinking, and integrative thinking, to facilitate reasoning tasks.

The controller module is mainly used to handle relevant action selections, specifically including the internal planning tasks of the model (such as selecting certain modules to execute) and choosing from the facts, tools, notes, and thinking banks.

First, the relevant libraries are retrieved and matched, and the retrieved content is integrated into a template agent, which requires the language model to respond asynchronously under a template. Just as humans may find it difficult to identify all relevant information at the beginning of reasoning, it is hard to expect language models to do so at the outset.
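As a rough sketch of what asynchronous template agents could look like, the snippet below fills two thinking templates with the same retrieved context and queries them concurrently with `asyncio`. Everything here is an assumption for illustration: the template wording, the `fake_llm` stub (which stands in for a real model call), and the function `run_agents`.

```python
import asyncio

# Template names echo thinking styles mentioned in the article; the wording
# of each template is invented for this sketch.
TEMPLATES = {
    "sequential": "Solve the problem step by step.\n{context}\nQuestion: {question}",
    "critical": "List possible mistakes, then answer.\n{context}\nQuestion: {question}",
}


async def fake_llm(prompt):
    """Stub standing in for a real asynchronous LLM call."""
    await asyncio.sleep(0)  # yield control, as a network call would
    return f"Answer: A (prompt had {len(prompt)} characters)"


async def run_agents(question, context, llm=fake_llm):
    """Fill every template and query the model for all of them concurrently."""
    prompts = {
        name: template.format(context=context, question=question)
        for name, template in TEMPLATES.items()
    }
    replies = await asyncio.gather(*(llm(p) for p in prompts.values()))
    return dict(zip(prompts, replies))


results = asyncio.run(run_agents("What is 15% of 80?", "Fact: 15% equals 0.15"))
for name, reply in results.items():
    print(name, "->", reply)
```

Running the agents concurrently keeps total latency close to that of the slowest single call, and the per-template replies can then be passed to a voting step.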

Therefore, retrieval is performed dynamically based on the user’s question and the intermediate reasoning progress. Faiss is used to build embedding indexes for the four libraries mentioned above, and the retrieval strategy varies slightly from library to library.

Since different thinking templates may suit different types of problems, researchers designed a voting module that integrates and calibrates the outputs of multiple thinking templates, using various voting strategies to select the best answer and improve performance.

Specific voting methods include:

1. Language model voting: guiding the language model to choose the most consistent answer among several given options and providing a reason.

2. Regex voting: precisely matching extracted answers using regular expressions to obtain voting results.
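Regex voting is simple enough to sketch directly. The pattern below assumes replies end with a line such as `Answer: B`, in line with the answer format requested in the prompt; the exact regular expression and the function names are assumptions, not taken from the OlaGPT code.

```python
import re
from collections import Counter

# Matches "Answer: B" or "Answer: (B)"; option letters A-E as in the prompt.
ANSWER_RE = re.compile(r"Answer:\s*\(?([A-E])\b")


def extract_answer(reply):
    """Return the last option letter found in a reply, or None if absent."""
    matches = ANSWER_RE.findall(reply)
    return matches[-1] if matches else None


def regex_vote(replies):
    """Majority vote over the extracted answers; unparseable replies are skipped."""
    votes = Counter(a for a in map(extract_answer, replies) if a is not None)
    if not votes:
        return None
    # On a tie, the answer that was seen first wins.
    return votes.most_common(1)[0][0]


replies = [
    "Step 1 ... Step 2 ... Answer: B",
    "By analogy with a similar case, Answer: (B)",
    "After checking each option, Answer: C",
    "I am not sure.",
]
print(regex_vote(replies))
```

Language model voting would replace `regex_vote` with another model call that receives the candidate answers and returns the most consistent one together with a reason.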

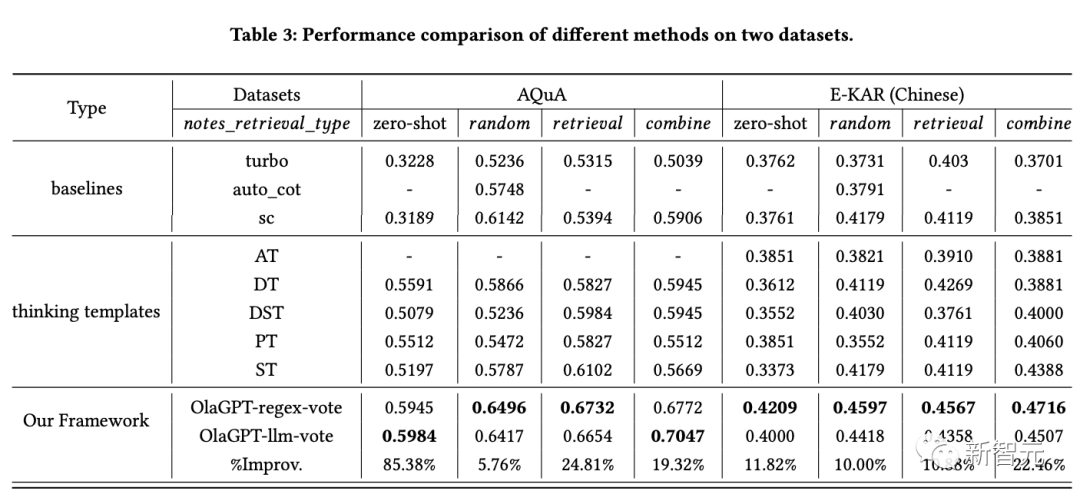

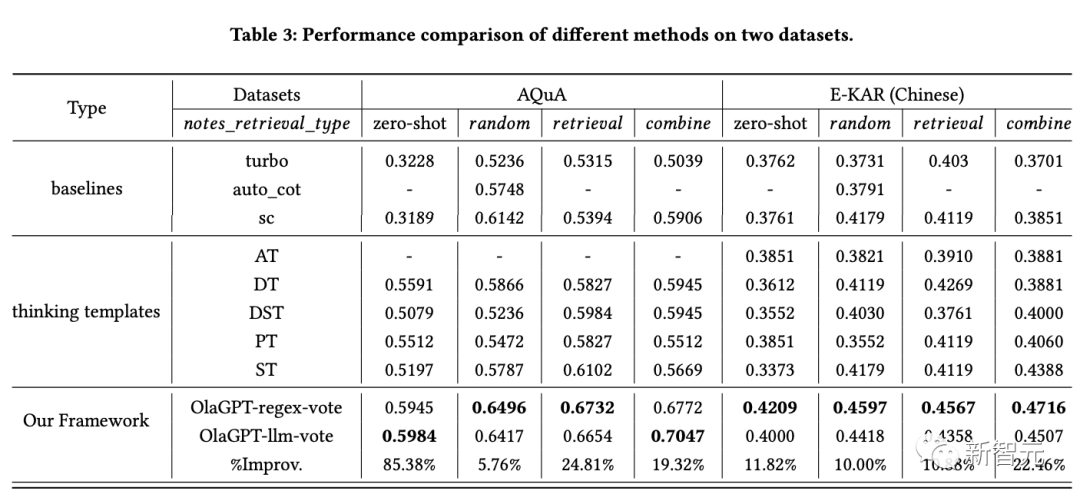

To evaluate the effectiveness of the enhanced language model framework in reasoning tasks, researchers conducted comprehensive experimental comparisons on two types of reasoning datasets.

From the results, it can be seen that:

1. SC (self-consistency) outperforms GPT-3.5-turbo, indicating that ensemble methods do help improve the effectiveness of large-scale models to some extent.

2. The method proposed in the paper outperforms SC, which supports the effectiveness of the thinking template strategy to some extent.

Answers from different thinking templates show considerable variance; voting under different thinking templates ultimately produces better results than simply conducting multiple rounds of voting.

3. The effects of different thinking templates vary; step-by-step solutions may be more suitable for reasoning-type problems.

4. The active learning module significantly outperforms zero-shot methods.

Specifically, random, retrieval, and combination show higher performance, indicating that incorporating challenging cases as part of the notes bank is a feasible strategy.

5. Different retrieval schemes perform differently across datasets; overall, the combine strategy performs better.

6. The methods proposed in the paper perform significantly better than other solutions, thanks to the sound design of the overall framework: the active learning module is effective; the thinking templates adapt to different models, with results varying across templates; the controller module exerts good control, selecting content that matches what is needed; and the voting module’s integration of different thinking templates is effective.
