2024 is widely seen as the first year of the AI PC. Qunzhi Consulting forecasts that global AI PC shipments will reach roughly 13 million units. Processors with integrated Neural Processing Units (NPUs), the computational core of AI PCs, will ship at scale in 2024. Merchant processor suppliers such as Intel and AMD, along with companies that design their own chips such as Apple, have all said they will release NPU-equipped computer processors in 2024. Meanwhile, how to get the most out of the NPU in a PC processor has become a hot topic in the industry.

Intel’s 14th-generation Core Ultra mobile processor with an integrated NPU (Image source: Intel)

NPUs Will Blossom in AI PCs

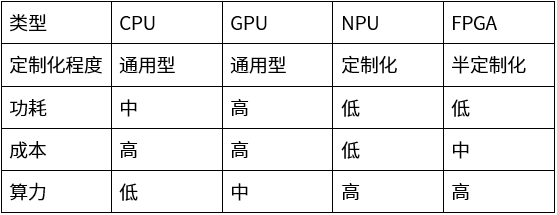

NPUs are designed around the computational patterns of neural networks: their dedicated hardware, together with the software that programs it, is tailored to accelerate specific neural network operations. As a result, NPUs can run neural network workloads more efficiently and with lower power consumption than general-purpose CPUs and GPUs.
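
To make “neural network tasks” concrete: much of the work in inference is multiply-accumulate (MAC) arithmetic over matrices, frequently in low-precision integers such as int8, which is the kind of arithmetic NPUs are commonly built to accelerate. The plain-Python sketch below illustrates symmetric int8 quantization followed by an integer matrix multiply. It runs entirely on the CPU, is not any vendor’s NPU API, and all function names are hypothetical.

```python
# Illustrative sketch only: an int8 matrix multiply of the multiply-accumulate
# kind that NPUs typically accelerate in hardware. Names are hypothetical.

def symmetric_scale(matrix):
    """Per-tensor scale that maps the largest |value| to 127 (symmetric int8)."""
    peak = max(abs(v) for row in matrix for v in row)
    return peak / 127 if peak else 1.0


def quantize(matrix, scale):
    """Round each float to the nearest int8 step and clamp to [-128, 127]."""
    return [[max(-128, min(127, round(v / scale))) for v in row] for row in matrix]


def int8_matmul(a, b):
    """Integer matmul; Python ints stand in for the wide (e.g. int32) accumulators."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    cols = len(b[0])
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]


def quantized_matmul(x, w):
    """Quantize both inputs, multiply in integers, then rescale back to floats."""
    sx, sw = symmetric_scale(x), symmetric_scale(w)
    acc = int8_matmul(quantize(x, sx), quantize(w, sw))
    return [[v * sx * sw for v in row] for row in acc]


if __name__ == "__main__":
    x = [[0.5, -1.0]]
    w = [[0.25], [0.75]]
    # The exact float result is 0.5*0.25 + (-1.0)*0.75 = -0.625;
    # the quantized result lands close to it.
    print(quantized_matmul(x, w))
```

The small rounding error is the price of doing the arithmetic in 8-bit integers, which need far less silicon area and energy per operation than floating point.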

NPUs also hold a cost advantage over FPGAs. Industry experts told China Electronics News that although FPGAs are likewise flexible and programmable, mainstream FPGA chips are usually built on process nodes between 14nm and 45nm, whereas NPUs are often manufactured at nodes below 10nm. Smaller nodes allow lower power draw and a smaller silicon footprint, which makes NPUs better suited to consumer devices such as compact, low-power PCs and smartphones.

Comparison of various types of AI chips (Data source: compiled from online information)

On the software side, NPUs offer strong compatibility with mainstream software. “NPUs can interact with AI frameworks through standardized interfaces such as OpenCL, CUDA, and OpenVX, which makes them easy to integrate into different software environments. As a result, NPUs usually have good compatibility with mainstream AI frameworks and software stacks, making it easier for developers to use these hardware accelerators,” industry experts stated.
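
The benefit of a standardized interface is that a framework can treat the NPU as one more backend behind a common contract, and fall back to another processor when the NPU does not support an operation. The sketch below illustrates that pattern. The `Backend` class, the `run_op` function, and the op names are hypothetical and do not correspond to OpenCL, CUDA, OpenVX, or any vendor runtime; both “backends” simply run Python code on the CPU.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Sequence, Tuple

# A kernel takes two input vectors and returns a scalar (hypothetical contract).
Kernel = Callable[[Sequence[float], Sequence[float]], float]


@dataclass
class Backend:
    """One hardware target exposed to the framework through a shared interface."""
    name: str
    kernels: Dict[str, Kernel] = field(default_factory=dict)

    def supports(self, op: str) -> bool:
        return op in self.kernels


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def max_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    return max(abs(x - y) for x, y in zip(a, b))


def run_op(op: str, a, b, backends: List[Backend]) -> Tuple[str, float]:
    """Run `op` on the first backend, in priority order, that implements it."""
    for backend in backends:
        if backend.supports(op):
            return backend.name, backend.kernels[op](a, b)
    raise NotImplementedError(f"no backend implements {op!r}")


# Hypothetical setup: the "npu" backend covers only dot products, while the
# "cpu" backend covers every op and acts as the fallback. A real NPU backend
# would hand the work to a vendor driver instead of running Python.
npu = Backend("npu", {"dot": dot})
cpu = Backend("cpu", {"dot": dot, "max_abs_diff": max_abs_diff})

print(run_op("dot", [1, 2], [3, 4], [npu, cpu]))           # ('npu', 11)
print(run_op("max_abs_diff", [1, 5], [3, 4], [npu, cpu]))  # ('cpu', 2)
```

Because application code only calls `run_op`, adding or removing an accelerator changes the backend list, not the application.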

In 2017, Huawei became the first company to integrate an NPU into a mobile processor (the Kirin 970), significantly increasing both the amount of data processed per unit time and the AI computing power delivered per unit of power consumption, and demonstrating the potential of NPUs in end-user devices. As AI applications spread, PC users increasingly want AI capabilities as well, prompting the industry to integrate NPUs into PCs. This lets users run AI inference, and even training, locally without relying on cloud computing resources, and lets them train and fine-tune models to suit their own needs, in line with the current trend toward personalized, customized software and services.
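
The two gains mentioned above correspond to two standard metrics: throughput (data processed per unit time) and energy efficiency (compute delivered per unit of power, often quoted in TOPS/W). A minimal sketch of both, using a single hypothetical measurement rather than data for any real chip:

```python
def throughput(items: int, seconds: float) -> float:
    """Data processed per unit time, e.g. images classified per second."""
    return items / seconds


def ops_per_watt(total_ops: float, seconds: float, avg_power_w: float) -> float:
    """Compute delivered per unit of power: (ops/s) / W, i.e. ops per joule."""
    return total_ops / seconds / avg_power_w


# Hypothetical measurement: 300 images (1e12 operations in total)
# processed in 1.5 s at an average draw of 2 W.
print(throughput(300, 1.5), "images/s")
print(ops_per_watt(1e12, 1.5, 2.0) / 1e12, "TOPS/W")
```

Comparing processors on the second metric, not just the first, is what matters for battery-powered devices.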

Processor suppliers are showing strong interest in NPU-equipped computer processors and are launching products one after another. Intel recently released its 14th-generation Core Ultra mobile processor with a built-in NPU and says that more than 230 models will use Core Ultra in 2024. Apple will release MacBooks with M3 processors in 2024 and says the NPU in the M3 (which Apple calls the Neural Engine) is 60% faster than the one in the M1. AMD will officially launch the Ryzen 8040 series with a dedicated NPU in early 2024, claiming a 40% improvement in large language model performance with the NPU.

AMD Ryzen 8040 processor with built-in NPU (Image source: AMD)

The industry widely expects the “CPU+NPU+GPU” combination to become the computational foundation of AI PCs. The CPU mainly controls and coordinates the other processors, the GPU handles large-scale parallel computation, and the NPU focuses on deep learning and neural network workloads. Working together, the three can each play to their strengths, improving both the performance and the energy efficiency of AI computing.
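
One way to picture this division of labor is as a routing decision for each task. The heuristic below is a hypothetical sketch of that idea, not how any operating system or vendor scheduler actually works; the `pick_unit` name, the task categories, and the 10,000-item threshold are all assumptions chosen for illustration.

```python
from enum import Enum


class Unit(Enum):
    CPU = "cpu"
    GPU = "gpu"
    NPU = "npu"


def pick_unit(kind: str, parallel_items: int, sustained: bool) -> Unit:
    """Hypothetical routing heuristic mirroring the CPU/GPU/NPU split.

    kind           -- task category, e.g. "neural_net", "render", "io"
    parallel_items -- how many independent data elements the task exposes
    sustained      -- whether the task runs continuously (e.g. background effects)
    """
    if kind == "neural_net" and sustained:
        return Unit.NPU  # long-running inference where low power matters most
    if parallel_items >= 10_000:  # assumed threshold for "large-scale parallel"
        return Unit.GPU  # big, bursty data-parallel work, neural or graphics
    return Unit.CPU      # control flow, small jobs, and coordination


print(pick_unit("neural_net", 1, True))        # Unit.NPU
print(pick_unit("render", 1_000_000, False))   # Unit.GPU
print(pick_unit("io", 1, False))               # Unit.CPU
```

Real systems weigh many more factors (current load, battery state, model support on each unit), but the split follows the same reasoning.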

High-End Consumer PCs Will Lead Adoption

As the core component for AI computing, NPUs are expected to be widely adopted in PCs, with users of high-end product lines the first to get them.

Industry experts believe that integrating NPUs into PC processors requires substantial technical and resource investment, which raises costs. NPUs will therefore appear first in the high-end lines of consumer PCs.

“Currently, processors equipped with NPUs are mainly concentrated in consumer-grade PCs. This is because consumer-grade PC users have relatively flexible needs and usage scenarios, placing more emphasis on product performance, functionality, and user experience than on reliability and lifespan. Therefore, consumer-grade PC manufacturers are generally more willing to experiment with new technologies. In contrast, enterprise PCs have stricter requirements for reliability and lifespan, with users more inclined to adopt proven technologies to ensure system stability. As a result, the application of NPUs in enterprise PCs will be delayed,” an industry expert told China Electronics News.

Lenovo Xiaoxin Pro 16 AI Super Notebook with built-in NPU

Take the two processor families Intel recently launched as an example. Intel’s fifth-generation Xeon Scalable processors, aimed at enterprise and data-center customers, neither adopt the newest process technology nor integrate an NPU; they remain on the mature Intel 7 process. By contrast, the consumer Core Ultra processors not only use the newer Intel 4 process but also integrate an NPU for the first time.

Using NPUs Effectively Takes More Than Piling Up Hardware

The real challenge with NPUs is turning their rated computing power into performance that applications can actually use.

Gao Yu, General Manager of Intel’s Technical Department in China, said that the full performance of an NPU cannot be unlocked simply by piling up hardware; developers need to understand both its theoretical (peak) computing power and the computing power it achieves in practice. On that basis, existing programming models must be optimized to fit the NPU’s parallel processing architecture, which requires developers and engineers to understand in depth how NPUs work and what their characteristics are. Beyond programming models, the hardware itself also needs optimization, for example in heat dissipation and power management, because these factors affect the NPU’s real-world performance and computing power.
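
One standard way to reason about the gap between theoretical and practical computing power is the roofline model (a general performance-analysis technique, not something the article attributes to Intel): attainable throughput is the lower of the compute ceiling and memory bandwidth multiplied by the workload’s arithmetic intensity (operations per byte moved). The sketch below implements that bound. The `sustained_fraction` parameter is a hypothetical derating for thermal or power limits, and all numbers in the usage lines are illustrative, not specifications of any real NPU.

```python
def attainable_tops(peak_tops: float, bandwidth_gb_s: float, ops_per_byte: float,
                    sustained_fraction: float = 1.0) -> float:
    """Roofline estimate of attainable throughput in TOPS.

    peak_tops          -- theoretical compute ceiling from the spec sheet
    bandwidth_gb_s     -- memory bandwidth feeding the accelerator, in GB/s
    ops_per_byte       -- arithmetic intensity of the workload
    sustained_fraction -- hypothetical derating for thermal/power limits (1.0 = none)
    """
    compute_ceiling = peak_tops * sustained_fraction
    memory_ceiling = bandwidth_gb_s * ops_per_byte / 1000.0  # G ops/s -> T ops/s
    return min(compute_ceiling, memory_ceiling)


def utilization(measured_tops: float, peak_tops: float) -> float:
    """Share of the theoretical peak that a workload actually achieves."""
    return measured_tops / peak_tops


# Hypothetical accelerator: 10 TOPS peak, 100 GB/s memory bandwidth.
print(attainable_tops(10, 100, 20))        # memory-bound workload
print(attainable_tops(10, 100, 200))       # compute-bound workload
print(attainable_tops(10, 100, 200, 0.5))  # compute-bound, but thermally limited
```

In the first case no amount of extra compute helps; raising arithmetic intensity (for example through operator fusion or data reuse in the programming model) is what moves the workload toward the peak.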

Making NPUs mainstream in AI PCs and unlocking their effective computing power will require building an NPU ecosystem. “To advance NPUs in the PC field, a complete ecosystem must be established, including hardware suppliers, software developers, and PC application vendors. Once this ecosystem is healthy and vibrant, NPU technology can develop further,” industry experts said.

Supervisor: Lian Xiaodong