The Objective of This Article

This article walks through the MobileNet image classification example in the RKNN Docker environment and deploys it to an RK3588 development board using adb. For an introduction to MobileNet itself, see the previous article.

Development Environment Description

- Host System: Windows 11

- Target Device: Android development board equipped with RK3588 chip

- Core Tools: a Docker image containing rknn-toolkit2, rknn_model_zoo, and related tools; the ADB debugging tool

Starting the RKNN Docker Environment

# Use the docker run command to create and run the RKNN Toolkit2 container

# and map local folders into the container with the -v <host folder>:<container folder> parameter

docker run -t -i --privileged -v /dev/bus/usb:/dev/bus/usb -v D:\rknn\rknn_model_zoo-v2.3.0:/rknn_model_zoo rknn-toolkit2:2.3.0-cp38 /bin/bash

Introduction to the MobileNet Directory Structure

After the container starts, navigate to the MobileNet example directory in rknn_model_zoo:

cd /rknn_model_zoo/examples/mobilenet/

The directory structure is as follows:

rknn_model_zoo/examples/mobilenet

├── cpp # C/C++ project example, used for on-board deployment

├── model # Model directory

│ ├── bell.jpg # Test image classified by the example

│ ├── download_model.sh # Script to download the model

│ └── synset.txt # ImageNet class label file

└── python # Python toolchain

  └── mobilenet.py # Core tool script

MobileNet Tool Script Description

mobilenet.py is a tool script for deploying the MobileNetV2 model on the RKNN (Rockchip Neural Network) platform, supporting model conversion, performance evaluation, and inference testing.

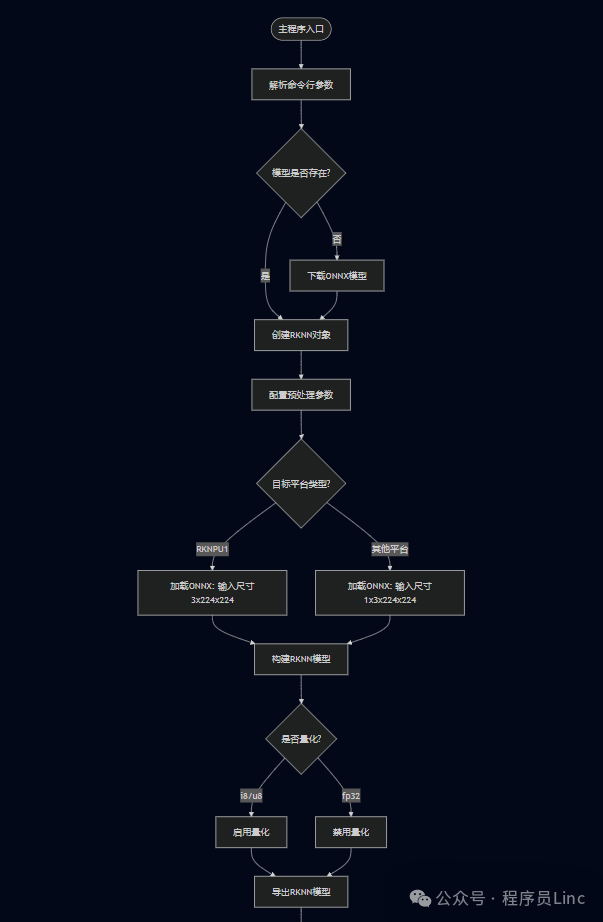

Overall Process

Core Functions

1. Model Download and Preparation

The script downloads the ONNX model automatically: if the model file (mobilenetv2-12.onnx) is not found locally, it is fetched from the cloud while a real-time progress bar and download speed are displayed.

def check_and_download_origin_model(): ...  # body omitted here; see mobilenet.py
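The check-then-download step can be sketched with the Python standard library alone. This is not the code in mobilenet.py: the function names `format_progress` and `download_if_missing` are hypothetical, the download URL is a placeholder you must supply, and the speed readout is left out.

```python
import os
import sys
import urllib.request


def format_progress(downloaded: int, total: int, width: int = 50) -> str:
    """Render a text progress bar such as '50.00% [#####-----]'."""
    ratio = downloaded / total if total > 0 else 0.0
    filled = min(width, int(ratio * width))  # clamp: the last block can overshoot
    return f"{ratio * 100:.2f}% [{'#' * filled}{'-' * (width - filled)}]"


def download_if_missing(path: str, url: str) -> bool:
    """Download url to path unless path already exists.

    Returns True if a download happened, False if the local file was reused.
    """
    if os.path.exists(path):
        return False

    def report(block_num: int, block_size: int, total_size: int) -> None:
        # urlretrieve calls this hook after each block; redraw the bar in place.
        sys.stdout.write("\r" + format_progress(block_num * block_size, total_size))
        sys.stdout.flush()

    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    urllib.request.urlretrieve(url, path, reporthook=report)
    print("\ndone")
    return True


# Hypothetical usage; replace the placeholder URL with the real model location.
# download_if_missing("../model/mobilenetv2-12.onnx", "https://<model-host>/mobilenetv2-12.onnx")
```

Because the byte count is computed as blocks times block size, the percentage can end slightly above 100%, which is one plausible reason for a reading such as 100.02%.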

2. RKNN Model Conversion

Configure preprocessing parameters: normalization parameters (mean/standard deviation) matching the ImageNet dataset.

rknn.config(mean_values=[[...]], std_values=[[...]], target_platform=args.target)

Model loading and quantization: the ONNX model is loaded and built with INT8 or UINT8 quantization, or kept in FP32 (no quantization).

rknn.load_onnx(model=args.model, ...)

rknn.build(do_quantization=do_quant, ...)

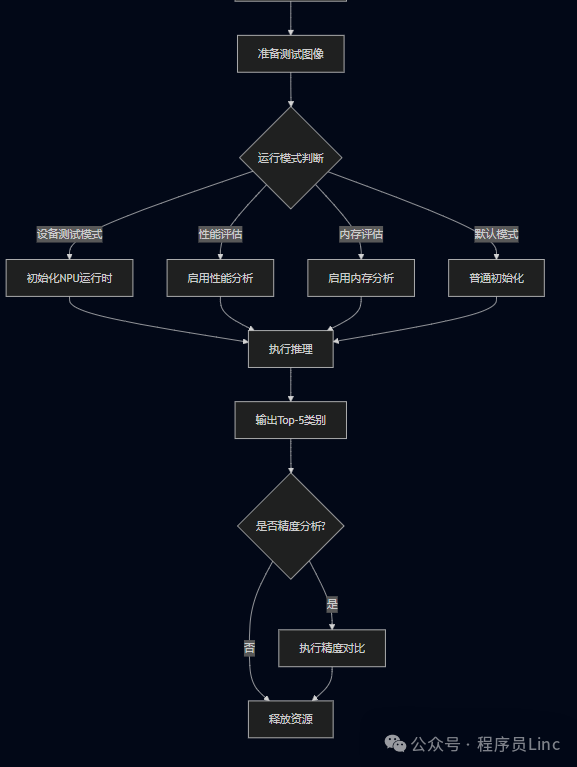
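The config → load → build → export flow can be sketched with rknn-toolkit2 as below. Several details are assumptions rather than values from mobilenet.py: the standard ImageNet mean/std (scaled to 0-255), the mapping from `--dtype` to `do_quantization`, the requirement to pass a calibration list when quantizing, and the helper names `wants_quantization` and `convert_onnx_to_rknn`.

```python
from typing import Optional

# Assumed ImageNet normalization, expressed on the 0-255 pixel scale.
IMAGENET_MEAN = [0.485 * 255, 0.456 * 255, 0.406 * 255]
IMAGENET_STD = [0.229 * 255, 0.224 * 255, 0.225 * 255]


def wants_quantization(dtype: str) -> bool:
    """Assumed mapping: 'i8' and 'u8' quantize, 'fp32' keeps floating point."""
    return dtype in ("i8", "u8")


def convert_onnx_to_rknn(onnx_path: str, rknn_path: str, target: str = "rk3588",
                         dtype: str = "i8", dataset: Optional[str] = None) -> None:
    quantize = wants_quantization(dtype)
    if quantize and dataset is None:
        # Quantization needs a calibration list: a text file with one image path per line.
        raise ValueError("a quantized build requires a calibration dataset file")

    from rknn.api import RKNN  # lazy import: only needed for the actual conversion

    rknn = RKNN(verbose=False)
    try:
        rknn.config(mean_values=[IMAGENET_MEAN], std_values=[IMAGENET_STD],
                    target_platform=target)
        if rknn.load_onnx(model=onnx_path) != 0:
            raise RuntimeError("load_onnx failed")
        if rknn.build(do_quantization=quantize, dataset=dataset) != 0:
            raise RuntimeError("build failed")
        if rknn.export_rknn(rknn_path) != 0:
            raise RuntimeError("export_rknn failed")
    finally:
        rknn.release()  # free toolkit resources even if a step fails
```

The `Optional[str]` annotation keeps the sketch compatible with the Python 3.8 (cp38) image used here.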

3. Inference and Evaluation

Performance testing: supports latency evaluation (--eval_perf) and memory usage analysis (--eval_memory).

rknn.eval_perf()

rknn.eval_memory()

Classification inference: input image preprocessing → inference → Top-5 classification results.

outputs = rknn.inference(inputs=[img])

scores = softmax(outputs[0])
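The post-processing step turns the raw logits into probabilities and picks the five best classes. The NumPy sketch below is an illustrative stand-in, not the `softmax` defined in mobilenet.py; `top_k` is a hypothetical helper, and reading synset.txt as one label per line is an assumption.

```python
import numpy as np


def softmax(x) -> np.ndarray:
    """Numerically stable softmax over a flat vector of logits."""
    x = np.asarray(x, dtype=np.float64).reshape(-1)
    e = np.exp(x - x.max())  # subtracting the max avoids overflow in exp
    return e / e.sum()


def top_k(scores, labels, k=5):
    """Return (index, score, label) for the k highest scores, best first."""
    scores = np.asarray(scores).reshape(-1)
    order = np.argsort(scores)[::-1][:k]
    return [(int(i), float(scores[i]), labels[i]) for i in order]


# Hypothetical usage with the toolkit output (shape (1, 1000) is flattened above):
# labels = [line.strip() for line in open("../model/synset.txt")]
# for idx, score, name in top_k(softmax(outputs[0]), labels):
#     print(f"[{idx}] score={score:.2f} class=\"{name}\"")
```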

4. Cross-Platform Support

Multiple devices are supported: the target RKNPU platform is selected with the --target parameter (e.g., rk3588, rk1808).

RKNPU1_TARGET = ['rk1808', 'rv1109', 'rv1126']
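Using the RKNPU1 list above and the dtype rule stated in the parameter table below (RKNPU1 requires `u8`, RKNPU2 recommends `i8`), a target-aware dtype check might look like this. The helpers `is_rknpu1`, `default_dtype`, and `validate_dtype` are hypothetical, and treating every other target as RKNPU2 is an assumption.

```python
# Copied from the RKNPU1 list above; any other target is treated as RKNPU2 here.
RKNPU1_TARGET = ['rk1808', 'rv1109', 'rv1126']


def is_rknpu1(target: str) -> bool:
    return target.lower() in RKNPU1_TARGET


def default_dtype(target: str) -> str:
    """RKNPU1 chips require 'u8'; 'i8' is the recommended choice on RKNPU2."""
    return 'u8' if is_rknpu1(target) else 'i8'


def validate_dtype(target: str, dtype: str) -> str:
    """Reject INT8 on RKNPU1 targets, which need UINT8 instead."""
    if is_rknpu1(target) and dtype == 'i8':
        raise ValueError(f"{target} is an RKNPU1 chip; use --dtype u8")
    return dtype
```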

Command Line Parameter Description

| Parameter | Default Value | Optional Values | Description |
|---|---|---|---|
| `--target` | `rk3566` | `rk3566`/`rk3588`/`rk1808`/`rv1109`/`rv1126` | Target NPU chip model; different platforms support different quantization types |
| `--dtype` | `i8` | `i8` (INT8)/`fp32` (float)/`u8` (UINT8) | `i8` is recommended for RKNPU2 platforms; RKNPU1 platforms require `u8` |
| `--eval_perf` | `False` | `True`/`False` | When enabled, outputs a per-layer latency report |
| `--accuracy_analysis` | `False` | `True`/`False` | Requires a connected physical device to run |
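The options discussed in this article map naturally onto `argparse`. The parser below is a hypothetical re-creation for illustration: flag names come from this article, defaults follow the table, and it is not copied from the mobilenet.py source.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical mirror of the documented options; boolean flags use store_true,
    # so passing e.g. --eval_perf switches it from False to True.
    p = argparse.ArgumentParser(description="MobileNet RKNN conversion/inference (sketch)")
    p.add_argument("--model", type=str, default="../model/mobilenetv2-12.onnx")
    p.add_argument("--target", type=str, default="rk3566")
    p.add_argument("--dtype", type=str, default="i8", choices=["i8", "u8", "fp32"])
    p.add_argument("--eval_perf", action="store_true")
    p.add_argument("--eval_memory", action="store_true")
    p.add_argument("--accuracy_analysis", action="store_true")
    p.add_argument("--npu_device_test", action="store_true")
    return p


args = build_parser().parse_args(["--target", "rk3588", "--eval_perf"])
print(args.target, args.dtype, args.eval_perf)
```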

Usage Example:

# Perform INT8 quantization performance testing on the RK3566 platform

python mobilenet.py --target rk3566 --dtype i8 --eval_perf

Using mobilenet.py to Convert the Model and Perform Inference with the Simulator

cd /rknn_model_zoo/examples/mobilenet/python

python mobilenet.py --model ../model/mobilenetv2-12.onnx --target rk3588

Execution flow: parameter parsing → model download → cross-platform build → multi-mode inference → result analysis. The output is as follows:

--> Download ../model/mobilenetv2-12.onnx

0.00% [--------------------------------------------------] Speed: 0.00 KB/s

100.02% [##################################################] Speed: 61.72 KB/s

done

I rknn-toolkit2 version: 2.3.0

--> Config model

done

--> Loading model

W load_onnx: If you don't need to crop the model, don't set 'inputs'/'input_size_list'/'outputs'!

I Loading : 100%|██████████████████████████████████████████████| 177/177 [00:00<00:00, 51774.31it/s]

done

--> Building model

I OpFusing 0: 100%|█████████████████████████████████████████████| 100/100 [00:00<00:00, 5654.91it/s]

I OpFusing 2 : 100%|█████████████████████████████████████████████| 100/100 [00:00<00:00, 405.05it/s]

W build: found outlier value, this may affect quantization accuracy

const name abs_mean abs_std outlier value

478 0.89 1.59 -15.073

550 0.61 0.68 11.299

577 0.64 0.65 -9.877

604 0.60 0.55 -9.970

I GraphPreparing : 100%|███████████████████████████████████████| 100/100 [00:00<00:00, 20185.30it/s]

I Quantizating : 100%|████████████████████████████████████████████| 100/100 [00:01<00:00, 90.17it/s]

W build: The default input dtype of 'input' is changed from 'float32' to 'int8' in rknn model for performance!

Please take care of this change when deploy rknn model with Runtime API!

W build: The default output dtype of 'output' is changed from 'float32' to 'int8' in rknn model for performance!

Please take care of this change when deploy rknn model with Runtime API!

I rknn building ...

I rknn building done.

done

--> Export rknn model

done

--> Init runtime environment

I Target is None, use simulator!

done

--> Running model

W inference: The 'data_format' is not set, and its default value is 'nhwc'!

I GraphPreparing : 100%|███████████████████████████████████████| 102/102 [00:00<00:00, 20605.87it/s]

I SessionPreparing : 100%|██████████████████████████████████████| 102/102 [00:00<00:00, 2492.67it/s]

--> PostProcess

-----TOP 5-----

[494] score=0.98 class="n03017168 chime, bell, gong"

[653] score=0.01 class="n03764736 milk can"

[469] score=0.00 class="n02939185 caldron, cauldron"

[505] score=0.00 class="n03063689 coffeepot"

[463] score=0.00 class="n02909870 bucket, pail"

done

Using mobilenet.py for On-Board Execution

python mobilenet.py --target rk3588 --npu_device_test
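The runtime side can be sketched with rknn-toolkit2's `init_runtime`, `eval_perf`, `eval_memory`, and `inference` calls, assuming an RKNN object that was already configured and built in the same session. The wrapper name `run_classification` is hypothetical. `target=None` selects the simulator (matching "Target is None, use simulator!" in the log), while a chip name such as "rk3588" targets a board connected over adb; per the RKNN Toolkit2 documentation, connected-board runs also need the rknn_server service on the board, which is worth verifying for your firmware.

```python
def run_classification(rknn, img, target=None, eval_perf=False, eval_memory=False):
    """Run one preprocessed NHWC image through an already-built RKNN object.

    target=None uses the simulator; a chip name such as 'rk3588' uses a
    connected board. Performance/memory evaluation is assumed to be
    meaningful only on a real board.
    """
    ret = rknn.init_runtime(target=target, perf_debug=eval_perf, eval_mem=eval_memory)
    if ret != 0:
        raise RuntimeError("init_runtime failed")
    if eval_perf:
        rknn.eval_perf()
    if eval_memory:
        rknn.eval_memory()
    # Setting data_format explicitly avoids the "'data_format' is not set" warning.
    return rknn.inference(inputs=[img], data_format="nhwc")
```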

C/C++ Project Deployment on the Board

Compilation

./build-android.sh -t rk3588 -a arm64-v8a -d mobilenet

Execution results:

./build-android.sh -t rk3588 -a arm64-v8a -d mobilenet

===================================

BUILD_DEMO_NAME=mobilenet

BUILD_DEMO_PATH=examples/mobilenet/cpp

TARGET_SOC=rk3588

TARGET_ARCH=arm64-v8a

BUILD_TYPE=Release

ENABLE_ASAN=OFF

DISABLE_RGA=

INSTALL_DIR=/rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_mobilenet_demo

BUILD_DIR=/rknn_model_zoo/build/build_rknn_mobilenet_demo_rk3588_android_arm64-v8a_Release

ANDROID_NDK_PATH=/rknn_model_zoo/android-ndk-r19c

===================================

-- Android: Targeting API '23' with architecture 'arm64', ABI 'arm64-v8a', and processor 'aarch64'

[100%] Built target imagedrawing

Install the project...

-- Install configuration: "Release"

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_mobilenet_demo/./rknn_mobilenet_demo

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_mobilenet_demo/model/bell.jpg

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_mobilenet_demo/model/synset.txt

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_mobilenet_demo/model/mobilenet_v2.rknn

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_mobilenet_demo/lib/librknnrt.so

-- Installing: /rknn_model_zoo/install/rk3588_android_arm64-v8a/rknn_mobilenet_demo/lib/librga.so

Pushing to the Development Board

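# Restart adbd with root privileges

adb root

# Create the target folder first so the demo lands in /data/rknn-test/rknn_mobilenet_demo

adb shell mkdir -p /data/rknn-test

adb push install/rk3588_android_arm64-v8a/rknn_mobilenet_demo /data/rknn-test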
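To repeat the push-and-run cycle from the host, the adb steps can be collected into a small Python helper. It assumes adb is on PATH and reuses the paths from this article; `adb_commands` and `run_all` are hypothetical names. It adds `adb wait-for-device` after `adb root`, because adbd restarts when switching to root, and runs the on-board commands from the next step as a single `adb shell` string.

```python
import shlex
import subprocess

LOCAL_DIR = "install/rk3588_android_arm64-v8a/rknn_mobilenet_demo"
REMOTE_ROOT = "/data/rknn-test"
DEMO = "rknn_mobilenet_demo"


def adb_commands(local_dir=LOCAL_DIR, remote_root=REMOTE_ROOT, demo=DEMO):
    """Build the adb command lines for pushing and running the demo, in order."""
    remote_dir = f"{remote_root}/{demo}"
    on_device = (f"cd {remote_dir} && export LD_LIBRARY_PATH=./lib && "
                 f"./{demo} model/mobilenet_v2.rknn model/bell.jpg")
    return [
        ["adb", "root"],
        ["adb", "wait-for-device"],  # adbd restarts after 'adb root'
        ["adb", "shell", "mkdir", "-p", remote_root],
        ["adb", "push", local_dir, remote_root],
        ["adb", "shell", on_device],
    ]


def run_all(dry_run=True):
    """Print each command; execute it too when dry_run is False."""
    for cmd in adb_commands():
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```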

Running the Demo on the Development Board

adb shell

# Enter the rknn_mobilenet_demo directory on the development board

cd /data/rknn-test/rknn_mobilenet_demo/

# Let the dynamic linker find the bundled librknnrt.so and librga.so

export LD_LIBRARY_PATH=./lib

# Run the executable

./rknn_mobilenet_demo model/mobilenet_v2.rknn model/bell.jpg

The execution results are as follows:

./rknn_mobilenet_demo model/mobilenet_v2.rknn model/bell.jpg

num_lines=1001

model input num: 1, output num: 1

input tensors:

index=0, name=input, n_dims=4, dims=[1, 224, 224, 3], n_elems=150528, size=150528, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=-14, scale=0.018658

output tensors:

index=0, name=output, n_dims=2, dims=[1, 1000, 0, 0], n_elems=1000, size=1000, fmt=UNDEFINED, type=INT8, qnt_type=AFFINE, zp=-55, scale=0.141923

model is NHWC input fmt

model input height=224, width=224, channel=3

origin size=500x333 crop size=496x320

input image: 500 x 333, subsampling: 4:4:4, colorspace: YCbCr, orientation: 1

src width is not 4/16-aligned, convert image use cpu

finish

rknn_run

[494] score=0.990035 class=n03017168 chime, bell, gong

[469] score=0.003907 class=n02939185 caldron, cauldron

[653] score=0.001089 class=n03764736 milk can
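The log reports INT8 input and output tensors with `qnt_type=AFFINE` plus a zero point and scale, which is the dtype change the build warnings mentioned. Assuming the standard affine scheme real = (q - zp) × scale, the sketch below converts between the two; `align_down` is a hypothetical helper showing that the reported crop size 496x320 is consistent with rounding 500x333 down to multiples of 16.

```python
def dequantize(q: int, zp: int, scale: float) -> float:
    """Affine dequantization: real = (q - zero_point) * scale."""
    return (q - zp) * scale


def quantize(x: float, zp: int, scale: float, qmin: int = -128, qmax: int = 127) -> int:
    """Inverse mapping for INT8 tensors, using Python's round-half-to-even and saturation."""
    return max(qmin, min(qmax, round(x / scale) + zp))


def align_down(value: int, alignment: int = 16) -> int:
    """Round a dimension down to a multiple of `alignment`."""
    return value // alignment * alignment


# Values copied from the on-board log above.
OUT_ZP, OUT_SCALE = -55, 0.141923
print(dequantize(-55, OUT_ZP, OUT_SCALE))  # 0.0
print(align_down(500), align_down(333))    # 496 320
```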

Conclusion

This article demonstrated converting the MobileNet example model and deploying it to an RK3588 development board running Android. We are now one step away from end-to-end deployment; stay tuned.