Author: Andrew Su Yong

As an AI technology expert at a globally renowned semiconductor company, I often receive client inquiries about machine learning algorithms, such as convolutional neural networks or K-means, when introducing Renesas’s AI hardware and AI tools. In reality, however, the algorithm is necessary but not the most critical factor in building an AI solution, especially for edge applications. Data is key, and once you have data, what truly matters is the “features” hidden within it.

In machine learning terminology, features are specific variables that serve as inputs to the algorithm. Features can be raw values directly from the input data or values derived from processing (preprocessing) that data. With the right features, almost any machine learning algorithm can find what you are looking for. Without good features, no algorithm will be effective. This is especially true for real-world problems, as data in the real world often contains a lot of inherent noise and variability that can obscure the truth.

My colleague Jeff (Technical Director of Renesas AI COE and co-founder of the former Reality AI independent company) likes to explain the concept of features in machine learning to colleagues with the following example:

“Suppose I am trying to use an AI model to detect when my wife comes home. I would set up a set of sensors aimed at the door to collect data. To apply this data to machine learning, I need to determine a set of features that can help the model distinguish my wife from anything else the sensors might detect. So, what would be the best feature? It would be the feature that indicates ‘she is there!’ That would be perfect—a feature with complete predictive power. This would make the task of machine learning trivial.”

Indeed, if there were an algorithm that could predict the perfect features directly from the raw data, that would be ideal. In practice, deep learning has implemented this idea through multi-layer convolutional neural networks, but this comes with a significant computational overhead and requires a large number of data samples to support it, as discussed in the previous article “The Dilemma of Deep Learning.” However, there are other methods, such as using the toolset provided by Reality AI.

Renesas’s Reality AI tools create classifiers and detectors based on continuously sampled signal inputs (such as acceleration, vibration, sound, and electrical signals), which often contain a lot of noise and inherent signal fluctuations. Our value lies in our ability to explore features that achieve the strongest inference capability (highest correlation) with the least computational overhead. The Reality AI tools follow a mathematical process that first discovers optimized features from the data before considering the details of the algorithms (SVM or NN) that will use these features to make decisions. The closer the features discovered by Reality AI are to perfection, the better the inference results: less data is needed for training and inference, training times are shorter, results are more accurate, and less processing power is required. This has been validated and proven effective.

Here is an example illustrating the significant impact of effective features on subsequent decisions. When analyzing objectively sampled signal data from the real world at a relatively high sampling rate (50 Hz and above), patterns or regularities can be found in data sampled from vibrations or sounds converted into electrical signals. In the field of signal processing, engineers typically use frequency analysis methods to extract signal features. For this type of data, a common machine learning approach is to perform a fast Fourier transform (FFT) on the input data stream and then use the peaks of these frequency coefficients as inputs to a neural network or some other algorithm.
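The FFT-peak approach described above can be sketched roughly as follows. This is a minimal illustration using NumPy, not the Reality AI method; the function name `fft_peak_features`, the Hann taper, and the two-tone test signal are all assumptions made for the example.

```python
import numpy as np

def fft_peak_features(window, sample_rate, n_peaks=3):
    """Return the n_peaks strongest spectral peaks as (frequency, magnitude) rows."""
    # Remove the DC offset and taper the window to reduce spectral leakage.
    tapered = (window - np.mean(window)) * np.hanning(len(window))
    magnitudes = np.abs(np.fft.rfft(tapered))
    freqs = np.fft.rfftfreq(len(window), d=1.0 / sample_rate)
    # A bin counts as a peak if it is larger than both of its neighbours.
    inner = magnitudes[1:-1]
    is_peak = (inner > magnitudes[:-2]) & (inner > magnitudes[2:])
    peak_bins = np.flatnonzero(is_peak) + 1
    # Keep the strongest peaks, ordered from strongest to weakest.
    strongest = peak_bins[np.argsort(magnitudes[peak_bins])[::-1][:n_peaks]]
    return np.column_stack((freqs[strongest], magnitudes[strongest]))

# Hypothetical test signal: 50 Hz and 120 Hz tones sampled at 1 kHz.
sample_rate = 1000
t = np.arange(1000) / sample_rate
signal = np.sin(2 * np.pi * 50 * t) + 0.5 * np.sin(2 * np.pi * 120 * t)
features = fft_peak_features(signal, sample_rate, n_peaks=2)
```

Each row of `features` holds one peak frequency and its magnitude, and the flattened array could then be passed to a classifier as its input vector.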

Why use this method?

- On one hand, it is convenient, as all data processing tools used by data engineers support FFT, such as MATLAB, NumPy, and SciPy.
- On the other hand, it is well understood, as everyone learns FFT in school.
- Additionally, it is easy to explain, as its results can be easily related to underlying physical principles.

However, FFT does not always provide optimal features; it often blurs important temporal information, which can be extremely useful for classifying or detecting underlying temporal signals.
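One way to see this loss of temporal information is to compare a signal with its time-reversed copy. The sketch below uses NumPy and a hypothetical chirp signal. It assumes that only the magnitude of a single FFT over the whole window is used as the feature, which is the common practice described above.

```python
import numpy as np

sample_rate = 1000
t = np.arange(1000) / sample_rate

# Hypothetical chirp whose frequency rises from 50 Hz to 250 Hz in one second.
rising = np.sin(2 * np.pi * (50 * t + 100 * t**2))
# Playing it backwards gives a chirp that falls in frequency instead.
falling = rising[::-1]

rising_spectrum = np.abs(np.fft.rfft(rising))
falling_spectrum = np.abs(np.fft.rfft(falling))

# The two magnitude spectra match even though the signals differ in time.
spectra_match = np.allclose(rising_spectrum, falling_spectrum)
signals_match = np.allclose(rising, falling)
```

Here `spectra_match` is true while `signals_match` is false, so a classifier fed only these magnitudes could not tell a rising chirp from a falling one.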

For example

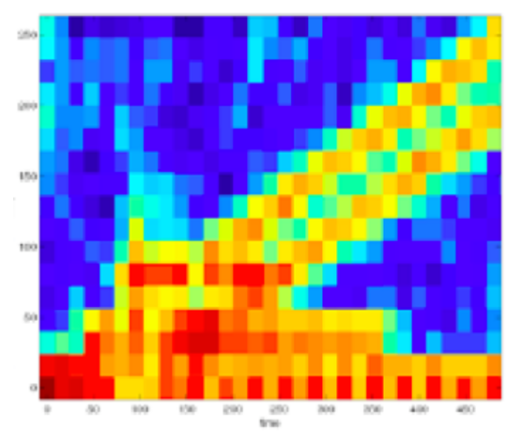

In a test, the features optimized by Reality AI were compared with FFT on a set of moderately complex and noisy signals. In Figure 1, the time-frequency representation (or spectrogram) of the signal input processed by FFT is shown. The vertical axis represents frequency, and the horizontal axis represents time, with FFT calculated in a sliding window over the streaming signal. The colors in the figure represent a heat map, with warmer colors indicating higher energy in that specific frequency range.

Figure 1 Spectrogram of the test signal
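A spectrogram of this kind can be approximated by computing an FFT in a sliding window, as in the rough NumPy sketch below. The window size, hop length, and two-tone input are illustrative assumptions and are not the settings used to produce Figure 1.

```python
import numpy as np

def sliding_fft(signal, window_size=128, hop=32):
    """Return FFT magnitudes with shape (n_frames, window_size // 2 + 1)."""
    taper = np.hanning(window_size)
    starts = range(0, len(signal) - window_size + 1, hop)
    # Cut the signal into overlapping, tapered frames (one row per frame).
    frames = np.stack([signal[s:s + window_size] * taper for s in starts])
    return np.abs(np.fft.rfft(frames, axis=1))

sample_rate = 1000
t = np.arange(2000) / sample_rate
# Hypothetical input: a 100 Hz tone for the first second, then a 300 Hz tone.
signal = np.where(t < 1.0,
                  np.sin(2 * np.pi * 100 * t),
                  np.sin(2 * np.pi * 300 * t))

spectrogram = sliding_fft(signal)
freqs = np.fft.rfftfreq(128, d=1.0 / sample_rate)
first_frame_peak = freqs[np.argmax(spectrogram[0])]
last_frame_peak = freqs[np.argmax(spectrogram[-1])]
```

Rows of `spectrogram` correspond to time and columns to frequency, so the array would be transposed before being drawn as a heat map with frequency on the vertical axis. A shorter window gives finer time resolution but coarser frequency resolution, which is one source of the blurring discussed here.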

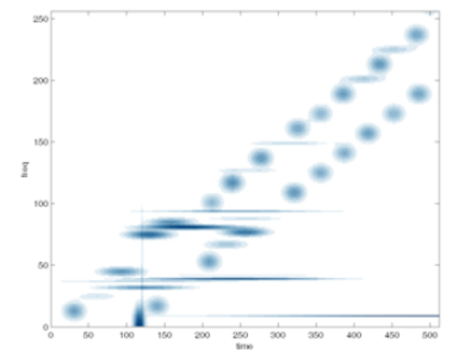

Now compare it with Figure 2, which shows the optimized features that Reality AI discovered for this classification problem. In Figure 2, the details are richer, previously blurred areas are now distinguishable, and the representation is simpler (there is no need to use color to express the distribution of energy in a third dimension). As a result, the content of the figure is much easier to understand.

Figure 2 Distribution of the test signal in the feature space discovered by Reality AI

Looking closely at this figure, we can attempt to explain the pattern of this segment of the signal:

- The most basic signal is actually a low-pitched buzzing sound (initial frequency distribution in the lower bands) with several tones (energy distributed across multiple frequency points).
- It is also accompanied by a chirping sound that gradually rises in pitch (the effective frequency distribution range gradually rises over time).
- Additionally, there are other signals that suddenly appear and disappear (energy appears and then disappears at certain frequency points over time).

Haha, you might guess that this is a unique bird communicating some information.

Thanks to the method discovered by Reality AI, the information in the figure is no longer vague, and the interfering noise has been removed. Therefore, even those who are not professionals in signal processing can easily understand it. Now, regardless of the classification method used, detecting these signals is much simpler than before.

Optimizing features from the outset also has another key benefit: it enhances the computational efficiency of the final classifier. For embedded solutions, memory space and computational load are the most valuable resources. If the right features are not selected, it may lead to a dramatic increase in the size and computational complexity of the final usable model, resulting in higher chip costs.
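As a rough, hypothetical illustration of how the number of input features drives model size, the sketch below counts the parameters of a small fully connected classifier. The layer sizes are invented for the example and do not describe any actual Reality AI model.

```python
def dense_parameter_count(layer_sizes):
    """Count the weights and biases of a fully connected network."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical classifiers: one hidden layer of 32 units and 4 output classes.
raw_spectrum_model = dense_parameter_count([512, 32, 4])   # 512 FFT bins as inputs
compact_feature_model = dense_parameter_count([8, 32, 4])  # 8 selected features
```

Under these assumptions the model fed with 512 FFT bins needs 16,548 parameters, while the one fed with 8 features needs 420, before any difference in the hidden layer size required is taken into account.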

As mentioned in the previous article “The Dilemma of Deep Learning,” deep learning can also discover features, but it is not efficient. Nevertheless, deep learning methods have been very successful in addressing certain problems using signal data, including object recognition in images and speech recognition in sounds. It is a viable method for a wide range of problems, but deep learning requires a large amount of training data, is not very computationally efficient, and may be difficult for non-professionals to use. The accuracy of classifiers often depends sensitively on a large number of configuration parameters, which leads many developers using deep learning to spend a lot of effort tuning pre-trained models instead of focusing on finding the best features for each new problem.

My colleague Jeff (who is also a mathematician) explains that deep learning is essentially “a generalized nonlinear function mapping—its mathematical principles are exquisite, but its convergence speed is ridiculously slow compared to almost any other method.” In contrast, Reality AI’s approach optimizes for signals, allowing for faster convergence with less data by exploring and extracting effective features. For resource-constrained edge AI applications, this method is several orders of magnitude more efficient than deep learning.

Deep learning has achieved significant success in current mainstream AI products, which has led people in related fields to focus more on algorithms, and there have been many exciting innovations in machine learning algorithms in deep learning and its surrounding fields. However, do not forget some fundamental principles:

Ultimately, everything comes from data and is related to features.


